Testing effectively

Recently, there was a heated debate about TDD, started by DHH when he claimed that TDD is dead.

This ongoing debate has captured the attention of the developer world, including us.

Some mini debates have happened in our office about the right way to do testing.

In this article, I will present my own view.

How many kinds of tests have you seen?

Since I joined the industry, I have worked on the following kinds of tests:

- Unit Test

- System/Integration/Functional Test

- Regression Test

- Test Harness/Load Test

- Smoke Test/Spider Test

The above test categories are not necessarily mutually exclusive. For example, you can create a set of automated functional tests or smoke tests to be used as regression tests. For the benefit of newcomers, let’s do a quick review of these old concepts.

Unit Test

Unit Test aims to test the functionality of a unit of code or component. In the Java world, the unit of code is the class, and each Java class is supposed to have a unit test. The philosophy of Unit Test is simple: when all the components work, the system as a whole should work.

A component rarely works alone; it normally interacts with other components. Therefore, to write a Unit Test, developers need to mock the other components. This is what DHH and James O. Coplien criticize Unit Test for: a huge effort that gains little benefit.
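
To make that effort concrete, here is a minimal sketch in plain Java. `DiscountService`, `CustomerRepository` and the stub are hypothetical names, and the test is a plain static method rather than a JUnit test or a Mockito mock, so the example has no dependencies. Even for a single business rule, the test first has to build a stand-in for the collaborator.

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical collaborator; in production it would query a database.
interface CustomerRepository {
    int yearsAsCustomer(String customerId);
}

// Hypothetical unit under test: one class, one business rule.
class DiscountService {
    private final CustomerRepository customers;

    DiscountService(CustomerRepository customers) {
        this.customers = customers;
    }

    /** Customers of 5+ years get 10% off; everyone else pays the base price. */
    long priceInCents(String customerId, long basePriceInCents) {
        int years = customers.yearsAsCustomer(customerId);
        return years >= 5 ? basePriceInCents * 90 / 100 : basePriceInCents;
    }
}

// Hand-written stub: extra code the unit test needs just to isolate DiscountService.
class StubCustomerRepository implements CustomerRepository {
    private final Map<String, Integer> years = new HashMap<>();

    StubCustomerRepository with(String customerId, int yearsAsCustomer) {
        years.put(customerId, yearsAsCustomer);
        return this;
    }

    @Override
    public int yearsAsCustomer(String customerId) {
        return years.getOrDefault(customerId, 0); // unknown customers count as new
    }
}

class DiscountServiceUnitTest {
    // Plain-Java stand-in for a JUnit test method.
    static void loyalCustomerGetsTenPercentOff() {
        DiscountService service =
                new DiscountService(new StubCustomerRepository().with("alice", 6).with("bob", 1));
        if (service.priceInCents("alice", 10_000) != 9_000) throw new AssertionError("alice");
        if (service.priceInCents("bob", 10_000) != 10_000) throw new AssertionError("bob");
    }

    public static void main(String[] args) {
        loyalCustomerGetsTenPercentOff();
        System.out.println("DiscountServiceUnitTest passed");
    }
}
```

In a real project the stub would usually come from a mocking library; writing it by hand simply makes the set-up cost visible.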

System/Integration/Functional Test

There is no standard name, as people often use different terms to describe similar things. In contrast to Unit Test, a functional test aims to test a system function as a whole, which may involve multiple components.

Normally, in a functional test, data is read from and written to a test database. Of course, there should be a pre-step to set up test data before running. DHH likes this kind of test. It helps developers test all the functions of the system without the huge effort of setting up mock objects.
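
A functional test typically follows that shape: seed known data, call the system function through its entry point, and assert on what was stored. The sketch below shows the shape in plain Java under loud assumptions: `TestDatabase` is an in-memory stand-in for a real test database (a real functional test would seed a dedicated schema), and `OrderFunction` is a hypothetical system function. Nothing is mocked; the test checks stored state rather than interactions.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Stand-in for the test database (assumption: a real test would use a seeded test schema).
class TestDatabase {
    final Map<String, Integer> stock = new HashMap<>();
    final List<String> orders = new ArrayList<>();

    void reset() {
        stock.clear();
        orders.clear();
    }
}

// Hypothetical system function: checks stock, updates it and records the order.
class OrderFunction {
    private final TestDatabase db;

    OrderFunction(TestDatabase db) {
        this.db = db;
    }

    boolean placeOrder(String sku, int quantity) {
        int available = db.stock.getOrDefault(sku, 0);
        if (available < quantity) {
            return false;
        }
        db.stock.put(sku, available - quantity);
        db.orders.add(sku + "x" + quantity);
        return true;
    }
}

class PlaceOrderFunctionalTest {
    static final TestDatabase db = new TestDatabase();

    // Pre-step: put the database into a known state before the test runs.
    static void setUp() {
        db.reset();
        db.stock.put("SIM-CARD", 3);
    }

    static void ordersWithinStockAreStored() {
        setUp();
        OrderFunction function = new OrderFunction(db);
        if (!function.placeOrder("SIM-CARD", 2)) throw new AssertionError("order rejected");
        if (function.placeOrder("SIM-CARD", 2)) throw new AssertionError("overselling allowed");
        // Assert on what ended up in the "database", not on internal calls.
        if (db.stock.get("SIM-CARD") != 1) throw new AssertionError("stock not updated");
        if (!db.orders.equals(List.of("SIM-CARDx2"))) throw new AssertionError("orders: " + db.orders);
    }
}
```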

Functional tests may involve asserting on web output. In the past, this was mostly done with HtmlUnit, but with the recent improvements in Selenium Grid, Selenium has become the preferred choice.

Regression Test

In this industry, you may end up spending more time maintaining systems than developing new ones. Software changes all the time, and it is hard to avoid risk whenever you make changes. Regression Test is supposed to catch any defect caused by those changes.

In the past, software houses had an army of testers, but the current trend is automated testing. This means developers deliver software with a full set of tests that is supposed to fail whenever a function breaks.

Whenever a bug is detected, a new test case should be added to cover it. Developers create the test, watch it fail, and then fix the bug to make it pass. This test-first practice is at the heart of Test Driven Development.
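
A minimal sketch of such a regression test in plain Java, without JUnit. `NameParser` and its bug are hypothetical: the buggy version split on a single space, so leading spaces produced an empty first name. The test is named after the bug, fails against the old code, and passes with the fix, so the bug cannot quietly return.

```java
// Hypothetical utility. The buggy version used fullName.split(" "), which turns
// "  Tony   Nguyen " into ["", "", "Tony", "", "", "Nguyen"], i.e. an empty first name.
class NameParser {
    static String[] splitFullName(String fullName) {
        // Fix: trim, then split on runs of whitespace, keeping everything after the first name.
        String[] parts = fullName.trim().split("\\s+", 2);
        String first = parts[0];
        String last = parts.length > 1 ? parts[1] : "";
        return new String[] {first, last};
    }
}

class NameParserRegressionTest {
    // Written before the fix and seen failing; kept afterwards as a regression guard.
    static void bugLeadingAndRepeatedSpaces() {
        String[] parts = NameParser.splitFullName("  Tony   Nguyen ");
        if (!parts[0].equals("Tony")) throw new AssertionError("first name: '" + parts[0] + "'");
        if (!parts[1].equals("Nguyen")) throw new AssertionError("last name: '" + parts[1] + "'");
    }
}
```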

Test Harness/Load Test

Normal test cases do not capture system performance, so we need to develop another set of tests for this purpose. In the simplest form, we can set a timeout on the functional tests that run on the continuous integration server. The tricky part of this kind of test is that it depends heavily on the machine it runs on and may fail if that machine is overloaded.
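
The simplest form, a time budget on a test, can be sketched with `java.util.concurrent`: run the test body on another thread and fail if `Future.get` times out. `TimeBudget.within` is a hypothetical helper; JUnit 4’s `@Test(timeout = ...)` offers the same idea built in. Such a budget is only as reliable as the machine it runs on.

```java
import java.util.concurrent.Callable;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.TimeoutException;

final class TimeBudget {
    private TimeBudget() {}

    /**
     * Runs a test body and fails with an AssertionError if it does not finish within
     * the budget. Returns the body's result so the caller can still assert on it.
     */
    static <T> T within(long millis, Callable<T> body) throws Exception {
        ExecutorService executor = Executors.newSingleThreadExecutor();
        try {
            Future<T> future = executor.submit(body);
            try {
                return future.get(millis, TimeUnit.MILLISECONDS);
            } catch (TimeoutException e) {
                future.cancel(true); // interrupt the slow body
                throw new AssertionError("exceeded time budget of " + millis + " ms");
            }
        } finally {
            executor.shutdownNow();
        }
    }
}
```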

The more popular solution is to run load tests manually using a load testing tool like JMeter, or to create our own load test app.
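
For the “create our own load test app” option, here is a minimal sketch using only the JDK: a fixed thread pool fires requests at a hypothetical `action` and reports latency percentiles. It makes simplifying assumptions (no warm-up, no ramp-up, millisecond resolution) and is a starting point, not a substitute for JMeter.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;
import java.util.concurrent.Callable;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;

final class MiniLoadTest {
    private MiniLoadTest() {}

    /** Fires `requests` calls at `action` from `threads` workers; returns sorted latencies in ms. */
    static List<Long> run(int threads, int requests, Runnable action) throws Exception {
        ExecutorService pool = Executors.newFixedThreadPool(threads);
        try {
            List<Callable<Long>> calls = new ArrayList<>();
            for (int i = 0; i < requests; i++) {
                calls.add(() -> {
                    long start = System.nanoTime();
                    action.run();
                    return (System.nanoTime() - start) / 1_000_000;
                });
            }
            List<Long> latencies = new ArrayList<>();
            for (Future<Long> f : pool.invokeAll(calls)) { // invokeAll waits for all calls
                latencies.add(f.get());
            }
            Collections.sort(latencies);
            return latencies;
        } finally {
            pool.shutdown();
        }
    }

    /** Nearest-rank percentile of an already sorted list, e.g. p = 95. */
    static long percentile(List<Long> sorted, int p) {
        int rank = (int) Math.ceil(p / 100.0 * sorted.size());
        return sorted.get(Math.max(rank, 1) - 1);
    }
}
```

For example, `MiniLoadTest.run(20, 1_000, () -> callSearchPage())` followed by `percentile(latencies, 95)`, where `callSearchPage` is a hypothetical HTTP call against the system under test.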

Smoke Test/Spider Test

Smoke Test and Spider Test are two special kinds of tests that are especially relevant to us. WDS provides KAAS (Knowledge as a Service) for the wireless industry, so our applications are refreshed every day with data changes rather than business logic changes. As a result, in our case system failures are more likely to come from data changes than from business logic.

A Smoke Test is a set of predefined test cases run on the integration server with production data. It helps us find potential issues before the daily LIVE deployment.
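
One way to organise such predefined cases is a small runner that executes every named check, treats a crash as a failure, and reports all failures at once instead of stopping at the first. `SmokeSuite` is hypothetical; in practice each check would query the integration server loaded with production data.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.function.BooleanSupplier;

class SmokeSuite {
    // LinkedHashMap keeps the checks in the order they were registered.
    private final Map<String, BooleanSupplier> checks = new LinkedHashMap<>();

    SmokeSuite check(String name, BooleanSupplier check) {
        checks.put(name, check);
        return this;
    }

    /** Runs every check (no early exit) and returns the names of the ones that failed. */
    List<String> run() {
        List<String> failures = new ArrayList<>();
        for (Map.Entry<String, BooleanSupplier> entry : checks.entrySet()) {
            boolean ok;
            try {
                ok = entry.getValue().getAsBoolean();
            } catch (RuntimeException e) {
                ok = false; // a crash counts as a failure, not as an aborted run
            }
            if (!ok) {
                failures.add(entry.getKey());
            }
        }
        return failures;
    }
}
```

A deployment step could then refuse to go LIVE whenever `run()` returns a non-empty list.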

Similar to Smoke Test, Spider Test runs with real data, but it works like a crawler that randomly clicks on any available link or button. One of our systems accepts so many combinations of inputs (close to 100,000) that it is impossible to test them all by hand.

Our Spider Test randomly chooses combinations of data to test. If it runs for a few hours without any defect, we proceed with our daily/weekly deployment.
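
The random sampling of input combinations can be sketched as follows. Each input dimension (hypothetical examples: handset model, country) is a list of values; the runner picks one value per dimension at random and asks a check whether the system handles that combination. The fixed seed is an assumption of this sketch so that a failing run can be replayed exactly; since the runs described above last a few hours, a deadline would replace the fixed `samples` count in practice.

```java
import java.util.List;
import java.util.Random;
import java.util.function.Predicate;

final class RandomCombinationRunner {
    private RandomCombinationRunner() {}

    /**
     * Samples `samples` random combinations (one value per dimension) and returns the first
     * one the system check rejects, or null if every sampled combination passed.
     */
    static List<String> firstFailure(List<List<String>> dimensions, int samples, long seed,
                                     Predicate<List<String>> systemAccepts) {
        Random random = new Random(seed); // fixed seed: a failing run can be replayed
        for (int i = 0; i < samples; i++) {
            String[] combination = new String[dimensions.size()];
            for (int d = 0; d < dimensions.size(); d++) {
                List<String> values = dimensions.get(d);
                combination[d] = values.get(random.nextInt(values.size()));
            }
            List<String> candidate = List.of(combination);
            if (!systemAccepts.test(candidate)) {
                return candidate;
            }
        }
        return null;
    }
}
```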

The Test Culture in our environment

In short, WDS is a TDD temple. If you write the implementation before the test cases, you had better keep quiet about it. In WDS’s self-introduction, TDD is mentioned right after Agile and XP: “We are:- agile & XP, TDD & pairing, Java & JavaScript, git & continuous deployment, Linux & AWS, Jeans & T-shirts, Tea & cake”

Many high-level executives at WDS started their careers as developers, which helps foster our culture as an engineering-oriented company. Requesting resources to improve test coverage or infrastructure is common here.

We do not have QA. In the worst case, the Product Owner or customers detect bugs. In the best case, our test cases catch them, or teammates spot them during peer review.

As for our Singapore office, most of our team members grew up absorbing Kent Beck’s and Martin Fowler’s books and philosophy. That’s why most of them are hardcore TDD worshippers. One member of our team is even Martin Fowler’s neighbour.

Our focus on testing has borne fruit: WDS’s production defect rate is relatively low.

My own experience and personal view with testing

That is enough self-appraisal. Now, let me share my experience with testing.

Generally, Automated Testing works better than QA

Comparing the output of a traditional software house packed with an army of QA testers with that of a modern Agile team that delivers products with full test coverage, the latter normally wins on quality and may even be more cost-effective. Will QA jobs soon be extinct?

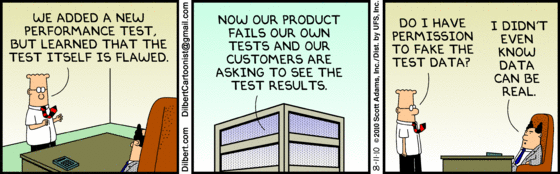

Over-monitoring may hint at a lack of quality

It sounds strange, but over the years I have developed an uneasy feeling whenever I see a project with too many layers of monitoring. Over-monitoring may hint at a lack of confidence, and indeed, these systems crash very often for unknown reasons.

Writing test cases takes more time than developing features

DHH is definitely right on this. Writing test cases means that you need to mock inputs and assert lots of things. Unless you keep writing spaghetti code, developing features takes much less time than writing tests.

UI Testing with JavaScript is painful

You know it if you have done it. Life would be much better if you only needed to test RESTful APIs or static HTML pages. Unfortunately, modern web application development involves lots of JavaScript on the client side, and for UI testing, asynchrony is evil.

Whether you go with a full-control testing framework like HtmlUnit or a more practical, generic one like Selenium, I would be very surprised if you never encountered random failures.

I guess every developer knows the feeling of failing to get the build to pass at the end of the week because of randomly failing test cases.
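
One common mitigation for asynchronous UIs is to poll for the expected state with a timeout instead of sleeping for a fixed time. The helper below is a hypothetical, framework-free sketch of that idea; Selenium’s `WebDriverWait` applies the same approach to browser conditions. It reduces random failures caused by timing but cannot remove them entirely.

```java
import java.time.Duration;
import java.util.function.BooleanSupplier;

final class Eventually {
    private Eventually() {}

    /**
     * Polls the condition until it holds or the timeout expires, instead of sleeping
     * for a fixed time and hoping the asynchronous update has already finished.
     */
    static void waitUntil(BooleanSupplier condition, Duration timeout, Duration pollInterval)
            throws InterruptedException {
        long deadline = System.nanoTime() + timeout.toNanos();
        while (!condition.getAsBoolean()) {
            // Overflow-safe comparison of nanoTime values: true once now >= deadline.
            if (System.nanoTime() - deadline >= 0) {
                throw new AssertionError("condition not met within " + timeout);
            }
            Thread.sleep(pollInterval.toMillis());
        }
    }
}
```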

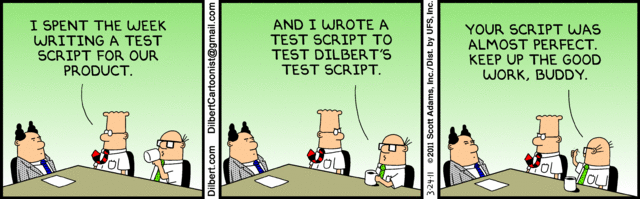

Developers always over-estimate their software quality

This applies to me as well, because I am an optimistic person. We tend to think that our implementation is perfect until the tests fail or someone points out a bug.

Sometimes, we change our code to make writing test cases easier

Like it or not, we must agree with DHH on this point. In the Java world, I have seen people expose internal variables and create dummy wrappers for framework objects (like HttpSession, HttpRequest,…) just to make Unit Tests easier to write. DHH finds this so uncomfortable that he chose to walk away from Unit Test.

On this point, I half agree and half disagree with him. In my view, altering the design or implementation for the sake of testing is undesirable. It is better if developers can write code without worrying about mocking inputs.

However, abandoning Unit Testing for the sake of a simple and convenient life is too extreme. The right solution is to design the system so that business logic is not tightly coupled to the framework or infrastructure.
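
A minimal sketch of that separation, with hypothetical names: the business rule (`QuoteCalculator`) takes a plain `QuoteRequest` object, and only a thin adapter at the edge deals with framework-shaped input. The adapter assumes it receives the `Map<String, String[]>` returned by `ServletRequest.getParameterMap()`, which keeps the sketch free of any servlet dependency. The pricing rule itself is invented for illustration.

```java
import java.util.Map;

// Domain input as a plain object: no HttpServletRequest or HttpSession involved.
final class QuoteRequest {
    final String planCode;
    final int months;

    QuoteRequest(String planCode, int months) {
        this.planCode = planCode;
        this.months = months;
    }
}

// Business rule, unit-testable with plain objects (hypothetical rule: 24+ months get one month free).
class QuoteCalculator {
    long totalInCents(QuoteRequest request, long monthlyPriceInCents) {
        long total = monthlyPriceInCents * request.months;
        return request.months >= 24 ? total - monthlyPriceInCents : total;
    }
}

// Thin adapter at the framework edge. Assumption: it is fed the Map<String, String[]>
// that ServletRequest.getParameterMap() returns; it contains no business logic.
final class QuoteRequestAdapter {
    private QuoteRequestAdapter() {}

    static QuoteRequest fromParameters(Map<String, String[]> params) {
        String plan = params.get("plan")[0];
        int months = Integer.parseInt(params.get("months")[0]);
        return new QuoteRequest(plan, months);
    }
}
```

Only the adapter would ever need a framework object in its test; `QuoteCalculator` can be tested with `new QuoteRequest("GOLD", 24)`.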

This is where Domain Driven Design comes in.

Domain Driven Design

For newcomers, Domain Driven Design gives us a system with the following layers: Application/User Interface, Domain and Infrastructure.

If you notice, the above diagram has more abstraction layers than Rails or Play, the Rails-inspired Java framework. I understand that adding abstraction layers can bloat a system, but for DDD it is a reasonable compromise.

Let’s elaborate further on the content of each layer:

Infrastructure

This layer is where you store your repository implementations and any other environment-specific concerns. Keep the infrastructure API as simple and dumb as possible, and avoid implementing any business logic here.

For this layer, Unit Test is a joke. If there is anything to write, it should be an integration test that works with a real database.

Domain

The Domain layer is the most important layer. It contains all of the system’s business logic, free of any framework, infrastructure or environment concerns. Your implementation should read like a direct translation of user requirements. All inputs, outputs and parameters are POJOs only.

The Domain layer should be the first layer to be implemented. To fully complete the logic, you may need the interface/API of the Infrastructure layer. It is best practice to keep the API in the Domain layer and the concrete implementation in the Infrastructure layer.
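
As a sketch of that split, with hypothetical wireless-industry names: the Domain layer owns the `Handset` POJO, the `HandsetRepository` interface and the business rule, while the Infrastructure layer provides an implementation with no business logic. The in-memory map is an assumption standing in for the database a real repository would query.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.Optional;

// ---- Domain layer: POJOs, the repository API, and the business rule ----
final class Handset {
    final String model;
    final boolean supports5g;

    Handset(String model, boolean supports5g) {
        this.model = model;
        this.supports5g = supports5g;
    }
}

interface HandsetRepository {
    Optional<Handset> findByModel(String model);
}

class PlanEligibility {
    private final HandsetRepository handsets;

    PlanEligibility(HandsetRepository handsets) {
        this.handsets = handsets;
    }

    /** Hypothetical rule: a 5G plan may only be sold for a known handset that supports 5G. */
    boolean canSell5gPlan(String model) {
        return handsets.findByModel(model).map(h -> h.supports5g).orElse(false);
    }
}

// ---- Infrastructure layer: concrete implementation, no business logic ----
// (Assumption: a real implementation would query a database; a map keeps this self-contained.)
class InMemoryHandsetRepository implements HandsetRepository {
    private final Map<String, Handset> byModel = new HashMap<>();

    void save(Handset handset) {
        byModel.put(handset.model, handset);
    }

    @Override
    public Optional<Handset> findByModel(String model) {
        return Optional.ofNullable(byModel.get(model));
    }
}
```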

Unit Test is the best kind of test case for the Domain layer, because your concern there is neither the system UI nor the environment. It also spares developers the dirty work of mocking framework objects.
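
Because domain inputs and outputs are POJOs, a unit test for a domain rule needs no framework objects and often no mocks at all. The roaming rule, the `SG` home country and the price per megabyte below are invented for illustration.

```java
import java.util.List;

// Hypothetical domain POJO: no framework annotations, no persistence concerns.
final class UsageRecord {
    final String country;
    final int megabytes;

    UsageRecord(String country, int megabytes) {
        this.country = country;
        this.megabytes = megabytes;
    }
}

// Hypothetical domain rule: data used outside the home country is charged per megabyte.
final class RoamingBill {
    static final String HOME_COUNTRY = "SG";
    static final long CENTS_PER_ROAMING_MB = 50;

    static long chargeInCents(List<UsageRecord> usage) {
        long total = 0;
        for (UsageRecord entry : usage) {
            if (!entry.country.equals(HOME_COUNTRY)) {
                total += entry.megabytes * CENTS_PER_ROAMING_MB;
            }
        }
        return total;
    }
}

class RoamingBillUnitTest {
    // POJOs in, a number out: nothing to mock.
    static void onlyUsageAbroadIsCharged() {
        List<UsageRecord> usage = List.of(
                new UsageRecord("SG", 500), new UsageRecord("MY", 10), new UsageRecord("TH", 2));
        long charge = RoamingBill.chargeInCents(usage);
        if (charge != 600) throw new AssertionError("charge was " + charge);
    }
}
```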

To set up the internal state of an object in a test, my preferred choice is a reflection utility rather than exposing internal variables through setters.
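
A minimal sketch of such a reflection utility using `java.lang.reflect.Field`. `TestReflection.setField` and `Subscription` are hypothetical; Spring users may already have `ReflectionTestUtils.setField` for the same purpose. On Java 9+, `setAccessible` can be refused for classes in modules that are not opened to the test code, so this works most smoothly for your own classes.

```java
import java.lang.reflect.Field;

final class TestReflection {
    private TestReflection() {}

    /** Sets a private field on the target (searching superclasses), so production code needs no setter. */
    static void setField(Object target, String fieldName, Object value) {
        Class<?> type = target.getClass();
        while (type != null) {
            try {
                Field field = type.getDeclaredField(fieldName);
                field.setAccessible(true);
                field.set(target, value); // unwraps e.g. Integer for an int field
                return;
            } catch (NoSuchFieldException e) {
                type = type.getSuperclass();
            } catch (IllegalAccessException e) {
                throw new IllegalStateException(e);
            }
        }
        throw new IllegalArgumentException("No field '" + fieldName + "' on " + target.getClass());
    }
}

// Hypothetical domain object whose state has no public setter.
class Subscription {
    private int failedPayments;

    boolean isSuspended() {
        return failedPayments >= 3;
    }
}
```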

Application Layer/User Interface

The Application Layer is where you start thinking about how to present your business logic to the customer. If the logic is complex or involves many consecutive requests, you can create Facades.
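
A minimal sketch of an application-layer Facade, with hypothetical names: one coarse-grained `checkout` call sequences two domain services so the client makes a single request. The flat price is a placeholder; the point is that the Facade only orchestrates, while the rules stay in the domain services.

```java
import java.util.Set;

// Hypothetical domain services, reduced to the minimum for the sketch.
class StockService {
    private final Set<String> inStock;

    StockService(Set<String> inStock) {
        this.inStock = inStock;
    }

    boolean isAvailable(String sku) {
        return inStock.contains(sku);
    }
}

class PricingService {
    long priceInCents(String sku, int quantity) {
        return 1_000L * quantity; // flat placeholder price, illustrative only
    }
}

// Plain result object handed back to the UI.
final class CheckoutSummary {
    final boolean accepted;
    final long totalInCents;

    CheckoutSummary(boolean accepted, long totalInCents) {
        this.accepted = accepted;
        this.totalInCents = totalInCents;
    }
}

// Application-layer Facade: sequences domain calls, holds no business rules itself.
class CheckoutFacade {
    private final StockService stock;
    private final PricingService pricing;

    CheckoutFacade(StockService stock, PricingService pricing) {
        this.stock = stock;
        this.pricing = pricing;
    }

    CheckoutSummary checkout(String sku, int quantity) {
        if (!stock.isAvailable(sku)) {
            return new CheckoutSummary(false, 0);
        }
        return new CheckoutSummary(true, pricing.priceInCents(sku, quantity));
    }
}
```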

At this point, developers should think more about clients than about the system. The major concerns are customers’ devices, UI responsiveness, load balancing, stateless or stateful sessions, and RESTful APIs. This is the place for developers to showcase their framework talent and knowledge.

For this layer, the better kind of test case is the functional/integration test.

As above, try your best to avoid putting any business logic in the Application Layer.

Why is it hard to write Unit Tests in Rails?

Now, if you look back at Rails or Play framework, there is no clear separation of layers like the above. Controllers handle inputs and outputs and may contain business logic as well. The same applies if you use the Servlet API without adding any additional layer.

Domain objects in Rails are Active Record models and are tightly coupled to the database schema.

Hence, whatever unit of code developers want to test, its inputs and outputs are not POJOs. This makes writing Unit Tests tough.

We should not blame DHH for this design, as he follows another philosophy of software development with many benefits, like simple design, low development effort and quick feedback. However, I do not adopt all of his ideas when developing enterprise applications.

Some of his ideas, like convention over configuration, are great and did cause a major mindset change in the developer world, but others end up as trade-offs. Being able to bring up a website quickly may later turn into trouble when you need features that Rails/Play do not support.

Conclusion

- Unit Test is hard to write if your business logic is tightly coupled to the framework.

- Focusing and developing business logic first may help you create better design.

- Each kind of component suits a different kind of test.

This is my own view of testing. If you have other opinions, please leave a comment.

| Reference: | Testing effectively from our JCG partner Tony Nguyen at the Developers Corner blog. |