Easy Blue-Green Deployments on Amazon EC2

Amazon EC2 Container Service (ECS) is Amazon’s solution for running and orchestrating Docker containers. It provides an interface for defining and deploying Docker containers to run on clusters of EC2 instances.

The initial setup and configuration of an ECS cluster is not exactly trivial, but once configured it works well and makes running and scaling container-based applications relatively easy. ECS also has support for blue-green deployments built in, but first we’ll cover some basics about getting set up with ECS.

Within ECS, you create task definitions, which are very similar to a docker-compose.yml file. A task definition is a collection of container definitions, each of which has a name, the Docker image to run, and options to override the image’s entrypoint and command. The container definition is also where you define environment variables, port mappings, volumes to mount, memory and CPU allocation, and whether the container should be considered essential. If an essential container stops, ECS stops the whole task, which is how it knows the task is unhealthy and, when the task belongs to a service, needs to be replaced.
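As a rough illustration, the following shell functions register a single-container task definition with the AWS CLI. They are a minimal sketch, not our production configuration: the container name web, the memory and CPU values, port 443, and the APP_ENV variable are hypothetical placeholders.

```shell
# Print the container definitions JSON for a hypothetical single-container task.
# The name "web", memory/CPU values, port 443, and APP_ENV are placeholders.
container_definitions() {
  local image="$1"
  cat <<EOF
[
  {
    "name": "web",
    "image": "${image}",
    "memory": 256,
    "cpu": 256,
    "essential": true,
    "portMappings": [{ "containerPort": 443, "hostPort": 443 }],
    "environment": [{ "name": "APP_ENV", "value": "production" }]
  }
]
EOF
}

# Register a new revision of the given task definition family and print its ARN.
register_task_definition() {
  local family="$1" image="$2"
  aws ecs register-task-definition \
    --family "$family" \
    --container-definitions "$(container_definitions "$image")" \
    --query 'taskDefinition.taskDefinitionArn' \
    --output text
}
```

Calling register_task_definition web-app repo/name:tag (web-app being a hypothetical family name) registers a new revision of that family and prints its ARN; each registration under the same family increments the revision number.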

You can set up multiple container definitions within a task definition for multi-container applications. ECS pulls from the official Docker Hub registry by default and can be configured to pull from private registries as well. Private registries, however, require additional configuration for the Docker client installed on the EC2 host instances.
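One way to supply private-registry credentials, assuming the hosts run the Amazon ECS container agent, is to add them to the agent's configuration file, /etc/ecs/ecs.config, before the agent starts (for example from the instance's user data). The function below is a hypothetical sketch of that approach, and the registry URL and credentials in the usage line are placeholders.

```shell
# Append private-registry credentials to the ECS container agent's config file.
# Assumes the Amazon ECS container agent, which reads ECS_ENGINE_AUTH_TYPE and
# ECS_ENGINE_AUTH_DATA from this file when it starts.
# Assumes the values contain no double quotes or backslashes (no JSON escaping).
write_registry_auth() {
  local config_file="$1" registry="$2" username="$3" password="$4" email="$5"
  {
    echo "ECS_ENGINE_AUTH_TYPE=docker"
    printf 'ECS_ENGINE_AUTH_DATA={"%s":{"username":"%s","password":"%s","email":"%s"}}\n' \
      "$registry" "$username" "$password" "$email"
  } >> "$config_file"
}
```

For example: write_registry_auth /etc/ecs/ecs.config https://registry.example.com deployer 's3cret' ops@example.com. If the agent is already running, it has to be restarted to pick up the change.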

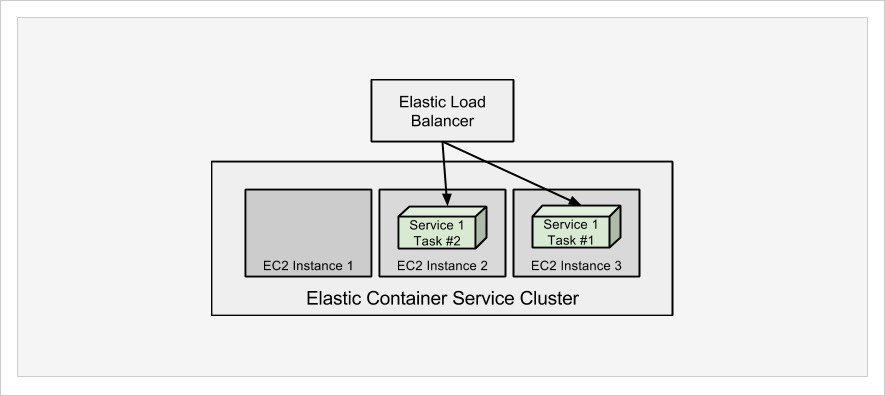

Once you have a task definition, you can create a service from it. A service lets you define how many copies of the task you want running and associate them with an Elastic Load Balancer (ELB). When a task maps a container to a fixed host port, like 443, only one copy of that task can run on each EC2 instance in the ECS cluster, because two copies cannot bind the same host port. Therefore, you cannot run more copies of the task than you have EC2 instances. In fact, you’ll want to run at least one fewer task than the number of EC2 instances in order to take advantage of blue-green deployments. Task definitions are versioned, and services are configured to use a specific version (revision) of a task definition.
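A service along those lines could be created with the AWS CLI roughly as follows. This is a sketch that assumes a Classic Load Balancer named web-elb, a container named web listening on port 443, and an IAM role named ecsServiceRole that lets ECS register tasks with the load balancer; all of these names are hypothetical.

```shell
# Create a service that keeps desired_count copies of a specific task definition
# revision running behind a Classic Load Balancer.
# The ELB name, container name, port, and IAM role are hypothetical placeholders.
create_web_service() {
  local cluster="$1" service="$2" task_definition="$3" desired_count="$4"
  aws ecs create-service \
    --cluster "$cluster" \
    --service-name "$service" \
    --task-definition "$task_definition" \
    --desired-count "$desired_count" \
    --load-balancers "loadBalancerName=web-elb,containerName=web,containerPort=443" \
    --role ecsServiceRole \
    --query 'service.serviceArn' \
    --output text
}
```

For example, create_web_service demo web web-app:3 1 would pin the service to revision 3 of a hypothetical web-app family and run one task, leaving a second instance free for deployments.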

What Are Blue-Green Deployments?

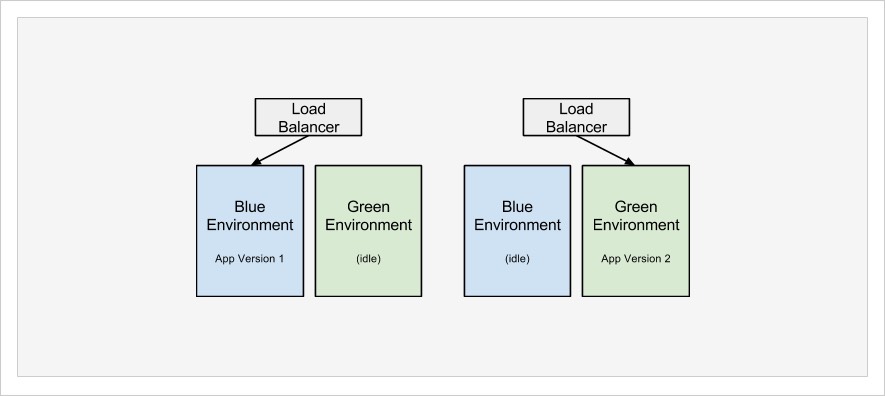

Blue-green, black-red, fuchsia-periwinkle: it really doesn’t matter which colors are used. The point is that there are two separate but equal environments.

At any given moment, your application is running on one of the environments while the other environment is either destroyed to conserve resources or sits idle waiting for the next update. This second environment allows you to deploy updates without interrupting the currently live environment. After the deployment is ready, the load balancer/router can be updated to route traffic to the new environment.

This concept is not new, but it has not been widely adopted due to the requirement of a second environment. Depending on the size and complexity of your application architecture, a second environment can be quite costly and difficult to manage. Utilizing Docker containers and a microservices architecture can help alleviate this challenge a bit. Using ECS for managing containers on EC2 can further ease this burden.

How Amazon ECS Handles Blue-Green Deployments

ECS facilitates blue-green deployments when a service is updated to use a newer version of a task definition. When you define a service and set the number of tasks that should be running, ECS will ensure that many are running, assuming you have enough capacity for them. When a service is updated to use a new version of a task definition, it will start new tasks from the new definition, as long as there is spare capacity in the cluster. As new tasks are started, it will drain connections from the old tasks and kill them off.
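You can watch this happen from the command line. The sketch below, with hypothetical function names, lists a service's deployments using describe-services: during an update, the deployment using the new task definition is reported as PRIMARY and the one still running the old tasks as ACTIVE, until ECS has stopped the old tasks.

```shell
# Print one line per deployment of a service: status, task definition ARN,
# running count, and desired count (tab-separated text output).
show_deployments() {
  local cluster="$1" service="$2"
  aws ecs describe-services \
    --cluster "$cluster" \
    --services "$service" \
    --query 'services[0].deployments[].[status,taskDefinition,runningCount,desiredCount]' \
    --output text
}

# Succeed while more than one deployment exists, i.e. old tasks are still around.
rollout_in_progress() {
  [ "$(show_deployments "$1" "$2" | grep -c .)" -gt 1 ]
}
```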

Consider the most basic example: an ECS cluster with two EC2 instances and a service defined to run a single task. That task runs on just one of the EC2 instances. When the task definition is updated and the service is updated to use the new revision, ECS starts a new task on the second EC2 instance, registers it with the ELB, drains connections from the old task, and then kills it.

As I mentioned earlier, you’ll want to make sure the cluster has at least one more EC2 instance than the number of tasks set in the service. If this basic example had two tasks running, there would be one on each EC2 instance, leaving no spare capacity for ECS to start a new one, so a blue-green deployment could not happen. You would have to manually kill at least one of the tasks to start the process.
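The arithmetic behind that rule is simple: with one copy of the task per instance, the desired count must be strictly less than the number of container instances. The function below is a hypothetical sketch that checks this with the AWS CLI; it assumes every container instance can host exactly one copy of the task, which is the fixed-host-port situation described earlier.

```shell
# Succeed if the cluster has at least one container instance more than the
# service's desired count, so ECS has room to start a new task during an update.
# Assumes one copy of the task per instance (fixed host port).
has_spare_capacity() {
  local cluster="$1" desired_count="$2" instances
  instances="$(aws ecs list-container-instances \
    --cluster "$cluster" \
    --query 'length(containerInstanceArns)' \
    --output text)" || return 1
  [ "$desired_count" -lt "$instances" ]
}
```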

It is also worth noting that every time ECS starts a task, it pulls the Docker image specified in the task definition. So when you build a new version of your image and push it to a registry, the next task that ECS starts will pull that version. From a continuous integration and delivery standpoint, then, you just need to build your image, push it to the registry, and trigger a blue-green deployment on ECS for your updated application to go live.
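A CI job's final step could therefore be as small as the function below. It is a sketch, not our actual pipeline: the function name and its arguments are hypothetical, and it assumes the Docker daemon is already logged in to your registry and that ecs-deploy is on the PATH, invoked with the same -c, -n, and -i options shown in the usage examples of this article.

```shell
# Build the image, push it, and trigger the blue-green deployment.
# Each step runs only if the previous one succeeded; the function returns the
# exit status of the first failing step (or of ecs-deploy).
build_push_deploy() {
  local image="$1" tag="$2" cluster="$3" service="$4"
  docker build -t "${image}:${tag}" . &&
    docker push "${image}:${tag}" &&
    ecs-deploy -c "$cluster" -n "$service" -i "${image}:${tag}"
}
```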

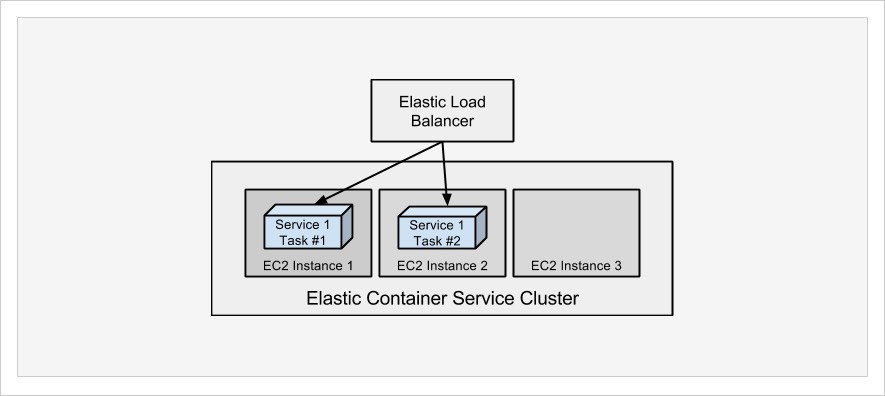

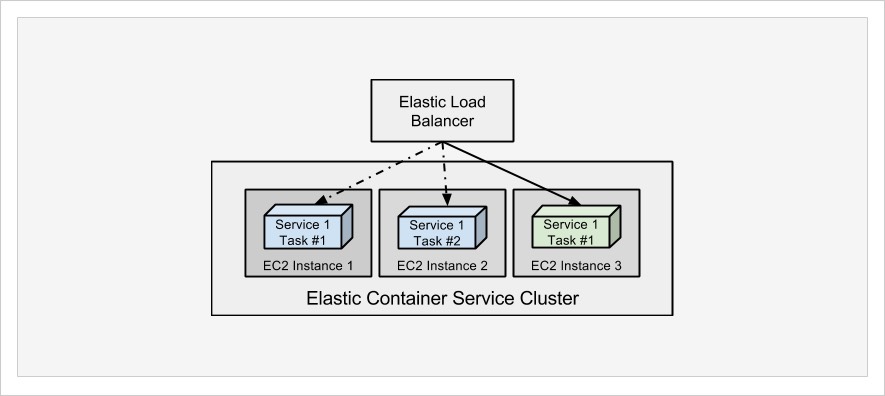

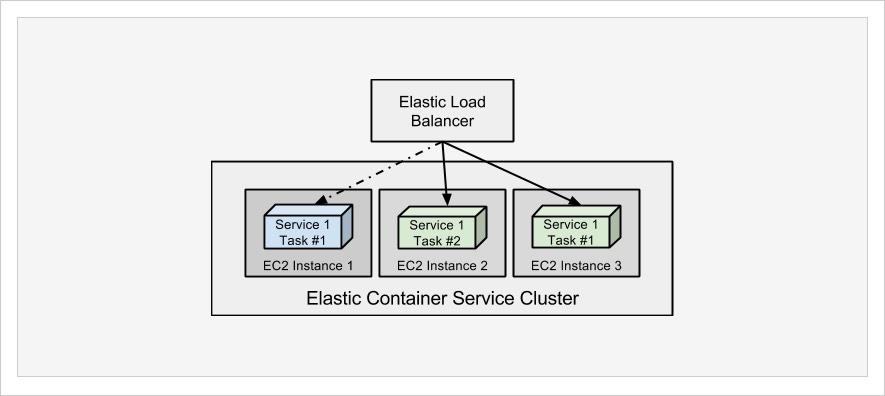

Below is a series of diagrams that illustrate a simplified blue-green deployment process on ECS.

- To begin, we have a single service running two tasks. The two tasks are split between EC2 Instance 1 and EC2 Instance 2.

- An updated task definition has been created, and the service has been updated to use it. ECS launches a new task on EC2 Instance 3 and begins draining connections from the previous tasks.

- As connections are drained from existing tasks, ECS will kill one at a time and launch additional tasks until the desired count is met.

- When ECS has met the desired count of tasks, it kills any remaining tasks still running the previous version of the task definition.

And that’s it. The updated version of the application is running in a new “green” environment. With ECS, the concept of separate blue and green environments is a bit virtual and fluid, but since containers are isolated, it really doesn’t matter.
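If you drive an update yourself, the AWS CLI includes a waiter that blocks until that end state is reached. The wrapper below is a hypothetical convenience function around aws ecs wait services-stable, which polls describe-services until the service has a single deployment whose running count matches its desired count, and exits non-zero if that does not happen within its polling limit.

```shell
# Block until the service is stable (one deployment, running == desired).
# Returns the waiter's exit status, so a non-zero result means the rollout
# did not settle in time or the service is missing/inactive.
wait_for_rollout() {
  local cluster="$1" service="$2"
  aws ecs wait services-stable --cluster "$cluster" --services "$service"
}
```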

ECS Deploy: A Simple and Elegant Way to Trigger Blue-Green Deployments

Triggering blue-green deployments on ECS is quite simple: create a new revision of the task definition (no changes required) and update the service to use the new revision. Doing this manually every time you want to deploy is a bit of a nuisance, though, especially if nothing about the task definition needs to change.
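Done by hand with the AWS CLI, those two steps might look like the sketch below. It is not the ecs-deploy script itself: the function name is hypothetical, it copies only the containerDefinitions and volumes fields into the new revision, and it relies on JSON output so the copied fields can be passed straight back to register-task-definition.

```shell
# Copy a service's current task definition into a new, unchanged revision
# and point the service at it. Prints the new task definition ARN.
# Only containerDefinitions and volumes are copied; any other task definition
# fields you use would need to be passed along as well.
redeploy_service() {
  local cluster="$1" service="$2" current family containers volumes new_arn
  current="$(aws ecs describe-services --cluster "$cluster" --services "$service" \
    --query 'services[0].taskDefinition' --output text)" || return 1
  # Three separate describe calls keep the sketch free of a JSON parser.
  family="$(aws ecs describe-task-definition --task-definition "$current" \
    --query 'taskDefinition.family' --output text)" || return 1
  containers="$(aws ecs describe-task-definition --task-definition "$current" \
    --query 'taskDefinition.containerDefinitions' --output json)" || return 1
  volumes="$(aws ecs describe-task-definition --task-definition "$current" \
    --query 'taskDefinition.volumes' --output json)" || return 1
  new_arn="$(aws ecs register-task-definition --family "$family" \
    --container-definitions "$containers" --volumes "$volumes" \
    --query 'taskDefinition.taskDefinitionArn' --output text)" || return 1
  aws ecs update-service --cluster "$cluster" --service "$service" \
    --task-definition "$new_arn" >/dev/null || return 1
  echo "$new_arn"
}
```

Running redeploy_service demo web (hypothetical cluster and service names) prints the ARN of the revision it deployed.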

As a development team, we like to operate a continuous integration and delivery process that allows us to easily trigger deployments by merging code into the appropriate branches of a git repo. A merge into develop means the code should be deployed to our staging environment, and a merge into master means it should be deployed to production. We don’t want any manual steps beyond the merge and push to git.
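That branch convention can be expressed as a small lookup that a CI job runs before deploying. This is a hypothetical helper, not part of ecs-deploy; how each environment name maps onto an ECS cluster and service is left to you.

```shell
# Map a merged branch to the environment it deploys to:
# develop -> staging, master -> production.
# Any other branch prints nothing and returns non-zero, so CI can skip deploying.
environment_for_branch() {
  case "$1" in
    develop) echo staging ;;
    master) echo production ;;
    *) return 1 ;;
  esac
}
```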

Our continuous integration process clones our repo, builds our Docker images, executes unit tests against the image, pushes the image to our private registry (which runs on ECS), and finally triggers a blue-green deployment on ECS. When we looked for ways to trigger the update/deployment on ECS, the options were complicated. We could use Amazon’s CodeDeploy or Elastic Beanstalk, but those required a different build process from the one we were running in CI.

Since all that is required to trigger a blue-green deployment is an update to the task definition and service, we wrote a shell script that takes a few parameters and then works with the AWS command line tools to fetch the current task definition, create a new version from it, and update the service to use it. It works quite well and is very simple. After triggering the update, it monitors the service to be sure it is running the updated version before exiting. If it sees the new version running, it will exit with a normal zero status code; otherwise it exits with an error. This way, our CI/CD process knows whether or not deployment was successful, and we can be notified of failed deployments.
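The monitoring step can be sketched as a polling loop. This illustrates the idea rather than reproducing the code in ecs-deploy: it lists the service's running tasks, checks whether any of them uses the new task definition ARN, and returns non-zero if that does not happen before a timeout. The function name and the default timeout and interval are hypothetical, and it assumes the service has at most 100 tasks, the per-call limit of describe-tasks.

```shell
# Poll until a running task of the service uses new_arn.
# Returns 0 on success and 1 on timeout, so a CI job can fail the build.
# timeout and interval are in seconds (hypothetical defaults: 300 and 10).
wait_for_task_definition() {
  local cluster="$1" service="$2" new_arn="$3" timeout="${4:-300}" interval="${5:-10}"
  local elapsed=0 tasks running
  while [ "$elapsed" -lt "$timeout" ]; do
    tasks="$(aws ecs list-tasks --cluster "$cluster" --service-name "$service" \
      --desired-status RUNNING --query 'taskArns' --output text)"
    if [ -n "$tasks" ] && [ "$tasks" != "None" ]; then
      # $tasks is intentionally unquoted so each task ARN becomes its own argument.
      running="$(aws ecs describe-tasks --cluster "$cluster" --tasks $tasks \
        --query "tasks[?lastStatus=='RUNNING'].taskDefinitionArn" --output text)"
      # Unquoted echo collapses tabs/newlines so the ARN can be matched as a word.
      case " $(echo $running) " in
        *" $new_arn "*) return 0 ;;
      esac
    fi
    sleep "$interval"
    elapsed=$((elapsed + interval))
  done
  return 1
}
```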

By the way, our script is open source under the MIT license and available at https://github.com/silinternational/ecs-deploy.

ecs-deploy is available both as a shell script and as a Docker image. The script uses command-line utilities like sed, whose behavior differs between the Linux (GNU) and Mac (BSD) versions, not to mention Windows. Using the Docker image may give you more consistency.

Requirements for ecs-deploy

ecs-deploy relies on other software to perform its work. Notably, it uses the AWS CLI, commonly installed via pip by running pip install awscli. It also uses jq, a command-line JSON processor.
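Before running the script, a quick check like the following can confirm both tools are installed. It is a hypothetical helper, not part of ecs-deploy.

```shell
# Verify that required tools are on the PATH. With no arguments it checks for
# the AWS CLI (aws) and jq; pass other command names to check those instead.
# Reports every missing tool on stderr and returns 1 if any is missing.
check_requirements() {
  local missing=0 tool
  [ "$#" -gt 0 ] || set -- aws jq
  for tool in "$@"; do
    if ! command -v "$tool" >/dev/null 2>&1; then
      echo "missing required tool: $tool" >&2
      missing=1
    fi
  done
  return "$missing"
}
```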

While the script does not require you to set any environment variables, we highly recommend setting the AWS API credentials that way to keep them out of your shell history and process list. The AWS region can also be set via an environment variable to keep command-line options to a minimum.
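One way to follow that recommendation is to keep the values in a file and export them into the environment just before running ecs-deploy. The sketch below assumes a file of plain KEY=VALUE lines (such as the local.env file used with the Docker image) with no spaces or shell metacharacters in the values; the function name is hypothetical.

```shell
# Export every KEY=VALUE assignment in a file, then confirm the variables the
# AWS CLI reads are present. Credentials never appear on the command line.
load_aws_env() {
  local var
  [ -r "$1" ] || { echo "cannot read $1" >&2; return 1; }
  set -a        # auto-export every variable assigned while sourcing
  . "$1"
  set +a
  for var in AWS_ACCESS_KEY_ID AWS_SECRET_ACCESS_KEY AWS_DEFAULT_REGION; do
    if [ -z "$(printenv "$var")" ]; then
      echo "$var is not set" >&2
      return 1
    fi
  done
}
```

For example: load_aws_env local.env && ecs-deploy -c clusterName -n serviceName -i repo/name:tag.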

Using the shell script

If you’ve cloned the repo or downloaded the ecs-deploy script into your path, you can just run it to get full usage options. Here’s an example:

$ ecs-deploy -c clusterName -n serviceName -i repo/name:tag

That example assumes you’ve configured environment variables for AWS_ACCESS_KEY_ID, AWS_SECRET_ACCESS_KEY, and AWS_DEFAULT_REGION.

Using the Docker image

If you don’t want to install jq and the AWS CLI (or the Python tools the AWS CLI depends on, like easy_install), you can just run the Docker image.

The best way to use the Docker image is to clone the ecs-deploy project repository and use the docker-compose.yml configuration provided. By using Docker Compose to run the image, you can provide the AWS-related environment variables via a file to keep them out of the command line arguments. When you clone the repository, copy the local.env.dist file to local.env and add your credentials into the file. Then you can use docker-compose run ecsdeploy to run the image.

The Docker image uses the entrypoint of the ecs-deploy script, so you just need to provide the arguments in the same way as you would for the shell script. Here is an example:

$ git clone https://github.com/silinternational/ecs-deploy.git
$ cd ecs-deploy/
$ cp local.env.dist local.env
(edit local.env to add your credentials and default region)
$ docker pull silintl/ecs-deploy:latest
$ docker-compose run --rm ecsdeploy \
    -c clusterName -n serviceName -i repo/name:tag
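If you prefer not to use Docker Compose at all, plain docker run can read the same environment file through its --env-file option. The wrapper below is a hypothetical sketch; it relies on the image's entrypoint being the ecs-deploy script, so its arguments are passed straight through.

```shell
# Run the ecs-deploy image directly. AWS variables come from local.env via
# --env-file, and all function arguments are forwarded to ecs-deploy.
ecs_deploy_in_docker() {
  docker run --rm --env-file local.env silintl/ecs-deploy:latest "$@"
}
```

For example: ecs_deploy_in_docker -c clusterName -n serviceName -i repo/name:tag.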

If you want to incorporate ecs-deploy into a docker-compose project of your own, you can just add another service with this:

ecsdeploy:
  image: silintl/ecs-deploy:latest
  env_file:
    - local.env

Be sure to have an env_file configured with your AWS credentials for safe operation.

In Conclusion

Blue-green deployments provide a great way to minimize production impact during a release, and Amazon’s EC2 Container Service simplifies many of the complexities involved. I recognize that our use case is relatively simple and that larger, more complex applications may not be as easy to deploy this way, but it is absolutely worth investigating. The comfort we have in automating deployments triggered by code changes has really changed our behaviors and development processes for the better. It makes us much more agile, and our developers are happier not having extensive build and release procedures.

We have found our ecs-deploy script to be very helpful, easy to use, and reliable for deployments, and I hope you can benefit from it too. We’d appreciate your input on improving it and welcome pull requests for new features. Post your comments and questions below to keep the conversation going.

Resources

Reference: Easy Blue-Green Deployments on Amazon EC2 from our JCG partner Phillip Shipley at the Codeship Blog.