Lock-Less Java Object Pool

It has been a while since I wrote anything; I have been busy with my new job, which involves some interesting performance-tuning work. One of the challenges is reducing object creation in the critical path of the application.

Garbage collection hiccups have been a major pain point in Java for some time, although Java's GC algorithms have improved over the years. Azul is a market leader in pauseless GC, but the Azul JVM is not free as in speech!

Creating too many temporary (garbage) objects does not work well: it creates work for the GC, which hurts latency. Too much garbage also does not play well with multi-core systems because it causes cache pollution.

So how should we fix this?

Garbage-free coding

This is only possible if you know upfront how many objects you need and pre-allocate them, but in practice that number is very difficult to determine. Even if you manage to do that, you still have other issues to worry about:

- You might not have enough memory to hold all the objects you need

- You also have to handle concurrency
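A minimal sketch of the pre-allocation idea, assuming a hypothetical mutable `Quote` message type (both class names are illustrative, not from any library). All objects are created in the constructor and then handed out round-robin with no further allocation. It is deliberately single-threaded, which makes both of the problems listed above visible.

```java
/**
 * Garbage-free sketch: allocate a fixed number of mutable objects once, then reuse them.
 * Quote is a hypothetical message type; this class is deliberately NOT thread-safe.
 */
final class PreallocatedQuotes {
    static final class Quote {
        long instrumentId;
        double price;

        void reset() {
            instrumentId = 0L;
            price = 0.0;
        }
    }

    private final Quote[] quotes;
    private int next; // index of the next object to hand out

    PreallocatedQuotes(int count) {
        quotes = new Quote[count];
        for (int i = 0; i < count; i++) {
            quotes[i] = new Quote(); // all allocation happens here, up front
        }
    }

    /** Hands out objects round-robin with no allocation; the caller must be done with a Quote before it comes round again. */
    Quote next() {
        Quote q = quotes[next];
        next = (next + 1) % quotes.length;
        q.reset();
        return q;
    }
}
```

If the count is too small, `next()` hands out a `Quote` that is still in use, and nothing stops two threads from calling it at the same time.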

So what is the solution to the above problems?

There is the Object Pool design pattern, which can address both of these issues. It lets you specify the number of objects the pool holds, and it handles concurrent requests for those objects.
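A minimal sketch of the pattern, assuming a fixed size and a `Supplier` factory. Objects are created up front, and concurrent requests are handled with `synchronized` plus `wait`/`notify`. `SimplePool` is a hypothetical name. It is not Apache's API or any other library's.

```java
import java.util.ArrayDeque;
import java.util.function.Supplier;

/** Hypothetical minimal object pool: a fixed set of pre-created objects guarded by synchronized. */
final class SimplePool<T> {
    private final ArrayDeque<T> free = new ArrayDeque<>();

    SimplePool(int size, Supplier<T> factory) {
        for (int i = 0; i < size; i++) {
            free.push(factory.get()); // every object is created when the pool is built
        }
    }

    /** Blocks until an object is available, then removes it from the pool. */
    synchronized T take() throws InterruptedException {
        while (free.isEmpty()) {
            wait(); // releases the monitor until release() calls notify()
        }
        return free.pop();
    }

    /** Returns an object to the pool and wakes one waiting thread (only takers ever wait). */
    synchronized void release(T obj) {
        free.push(obj);
        notify();
    }
}
```

Every `take()` and `release()` competes for the same monitor, which is why this style degrades under contention.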

The Object Pool has been the foundation of many applications with low-latency requirements. A flavor of the object pool is the Flyweight design pattern.

Both patterns help us avoid object creation. That is great: GC work is reduced, and in theory application performance should improve. In practice it does not work out that way, because an Object Pool/Flyweight has to handle concurrency, and whatever you gain by avoiding object creation is lost to the cost of handling concurrency.

What is the most common way to handle concurrency?

An object pool is a typical producer/consumer problem, and it can be solved using the following techniques:

Synchronized: This was the only built-in way to handle concurrency before JDK 1.5. Apache has written a wonderful object pool API based on synchronized.

Locks: Java added excellent support for concurrent programming in JDK 1.5 (java.util.concurrent). There has been some work on using locks to build object pools, e.g. furious-objectpool.

Lock-free: I could not find any implementation built entirely with lock-free techniques, but furious-objectpool uses a mix of ArrayBlockingQueue and ConcurrentLinkedQueue.
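For the lock-based technique, the same kind of pool can be sketched with a `ReentrantLock` and a `Condition` over a circular buffer. `LockPool` is a hypothetical name and is not furious-objectpool's code. Producers (`release`) and consumers (`take`) share one lock here, which is also how `ArrayBlockingQueue` works.

```java
import java.util.concurrent.locks.Condition;
import java.util.concurrent.locks.ReentrantLock;
import java.util.function.Supplier;

/** Hypothetical lock-based pool: a circular buffer of free objects guarded by one ReentrantLock. */
final class LockPool<T> {
    private final Object[] items; // circular buffer holding the free objects
    private int head;             // index of the next object to take
    private int count;            // number of free objects currently in the buffer
    private final ReentrantLock lock = new ReentrantLock();
    private final Condition notEmpty = lock.newCondition();

    LockPool(int size, Supplier<T> factory) {
        items = new Object[size];
        for (int i = 0; i < size; i++) items[i] = factory.get();
        count = size;
    }

    /** Blocks while the pool is empty. */
    @SuppressWarnings("unchecked")
    T take() throws InterruptedException {
        lock.lockInterruptibly();
        try {
            while (count == 0) notEmpty.await();
            T obj = (T) items[head];
            items[head] = null;
            head = (head + 1) % items.length;
            count--;
            return obj;
        } finally {
            lock.unlock();
        }
    }

    /** Appends the object at the tail and signals one waiting taker. */
    void release(T obj) {
        lock.lock();
        try {
            if (count == items.length) throw new IllegalStateException("pool is full");
            items[(head + count) % items.length] = obj;
            count++;
            notEmpty.signal();
        } finally {
            lock.unlock();
        }
    }
}
```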

Let's measure performance

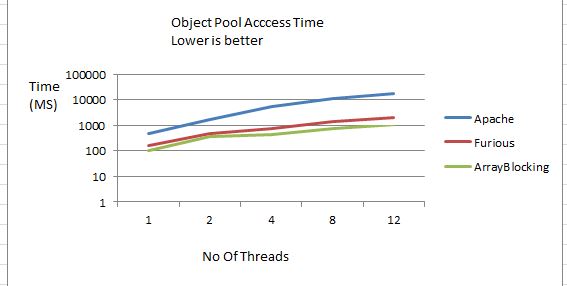

In this test I created a pool of 1 million objects and accessed it through different pool implementations: objects are taken from the pool and then returned to it.

The test starts with 1 thread, and the number of threads is then increased to measure how each pool implementation performs under contention.

- X axis – number of threads

- Y axis – time in ms (lower is better)

This test includes the Apache pool, the Furious pool and an ArrayBlockingQueue-based pool.
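Here is a sketch of an ArrayBlockingQueue-backed pool together with a simple contention harness along the lines of the test described above. `BlockingQueuePool` and `benchmark` are hypothetical names. This is not the code behind the chart. It has no warm-up, so a tool such as JMH gives more trustworthy numbers, and it is not expected to reproduce the figures quoted in this post.

```java
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.CountDownLatch;
import java.util.function.Supplier;

/** Hypothetical ArrayBlockingQueue-backed pool plus a rough contention benchmark. */
final class BlockingQueuePool<T> {
    private final ArrayBlockingQueue<T> free;

    BlockingQueuePool(int size, Supplier<T> factory) {
        free = new ArrayBlockingQueue<>(size);
        for (int i = 0; i < size; i++) free.add(factory.get());
    }

    T take() throws InterruptedException {
        return free.take(); // blocks while the pool is empty
    }

    void release(T obj) {
        if (!free.offer(obj)) throw new IllegalStateException("pool is full");
    }

    /** Runs `threads` workers doing about `totalOps` take/release pairs in total; returns elapsed ms. */
    static <T> long benchmark(BlockingQueuePool<T> pool, int threads, int totalOps) throws InterruptedException {
        int perThread = totalOps / threads;
        CountDownLatch start = new CountDownLatch(1);
        CountDownLatch done = new CountDownLatch(threads);
        for (int i = 0; i < threads; i++) {
            new Thread(() -> {
                try {
                    start.await(); // all workers begin together
                    for (int k = 0; k < perThread; k++) {
                        T obj = pool.take();
                        pool.release(obj);
                    }
                } catch (InterruptedException e) {
                    Thread.currentThread().interrupt();
                } finally {
                    done.countDown();
                }
            }).start();
        }
        long t0 = System.nanoTime();
        start.countDown();
        done.await();
        return (System.nanoTime() - t0) / 1_000_000;
    }

    public static void main(String[] args) throws InterruptedException {
        BlockingQueuePool<Object> pool = new BlockingQueuePool<>(1_000_000, Object::new);
        for (int threads = 1; threads <= 12; threads++) {
            System.out.println(threads + " threads: " + benchmark(pool, threads, 1_000_000) + " ms");
        }
    }
}
```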

The Apache pool performed the worst, and its performance degrades further as the number of threads increases. The reason is that the Apache pool relies heavily on “synchronized”.

The other two, the Furious and ArrayBlockingQueue-based pools, perform better, but both of them also slow down as contention increases.

The ArrayBlockingQueue-based pool takes around 1000 ms for 1 million items when 12 threads access the pool. The Furious pool, which internally uses ArrayBlockingQueue, takes around 1975 ms for the same workload.

I need to investigate in more detail why Furious takes twice as long, since it is also based on ArrayBlockingQueue.

The performance of ArrayBlockingQueue is decent, but it is a lock-based approach. What kind of performance do we get if we implement a lock-free pool?

Lock-free pool

Implementing a lock-free pool is not impossible, but it is a bit difficult because you have to handle multiple producers and consumers.
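To see what handling multiple producers and consumers without locks looks like, here is a fully lock-free pool sketched on a Treiber stack, a classic lock-free stack in which every thread CASes a single head pointer. `TreiberPool` is a hypothetical name. It works, and in Java the garbage collector protects it from the ABA problem because a node is never reused while another thread still holds a reference to it. However, every release allocates a new `Node`, and that is exactly the kind of garbage a pool is supposed to avoid.

```java
import java.util.concurrent.atomic.AtomicReference;
import java.util.function.Supplier;

/** Hypothetical lock-free pool built on a Treiber stack. */
final class TreiberPool<T> {
    private static final class Node<T> {
        final T item;
        Node<T> next;

        Node(T item) { this.item = item; }
    }

    private final AtomicReference<Node<T>> head = new AtomicReference<>();

    TreiberPool(int size, Supplier<T> factory) {
        for (int i = 0; i < size; i++) release(factory.get());
    }

    /** Lock-free take: returns null when the pool is empty instead of blocking. */
    T take() {
        while (true) {
            Node<T> h = head.get();
            if (h == null) return null;
            if (head.compareAndSet(h, h.next)) return h.item;
            // another thread changed head in the meantime; retry
        }
    }

    /** Lock-free release: retries the CAS until the new node becomes the head. */
    void release(T obj) {
        Node<T> n = new Node<>(obj); // one allocation per release: this is garbage
        do {
            n.next = head.get();
        } while (!head.compareAndSet(n.next, n));
    }
}
```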

I will implement a hybrid pool that uses a lock on the producer side and a non-blocking technique on the consumer side.

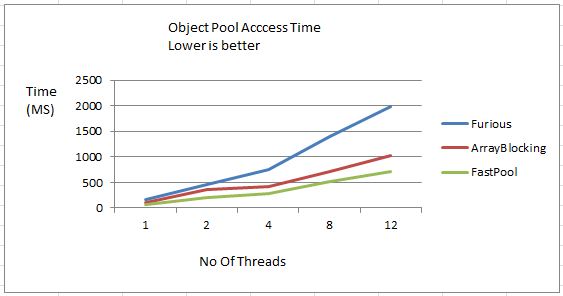

Let's have a look at some numbers.

I ran the same test with the new implementation (FastPool), and it is almost 30% faster than the ArrayBlockingQueue-based pool.

A 30% improvement is not bad; it can definitely help us meet our latency goals.

What makes FastPool fast!

I used a few techniques to make it fast:

- Producers are lock-based – multiple producers are coordinated with a lock, the same as in ArrayBlockingQueue, so nothing special here.

- Immediate publication of released items – a released element is published before the lock is released, using a cheap memory barrier. This gives some gain.

- Consumers are non-blocking – CAS is used to achieve this, so consumers are never blocked by producers. ArrayBlockingQueue blocks consumers because it uses the same lock for producers and consumers.

- ThreadLocal to maintain value locality – a ThreadLocal holds the last value each thread used, and the thread tries to reacquire that value first; this reduces contention to a great extent.
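The four techniques above can be combined into a compact sketch. It is not the author's FastObjectPool, whose source is linked in this post. `HybridPool`, `Holder`, `inRing` and `lastUsed` are hypothetical names, and `AtomicReferenceArray.lazySet` stands in for the cheap memory barrier. The `inRing` flag is an assumption added here: it ensures a holder never has more than one pending slot in the ring, which keeps the fixed-size ring from overflowing when the ThreadLocal fast path bypasses it.

```java
import java.util.concurrent.atomic.AtomicBoolean;
import java.util.concurrent.atomic.AtomicInteger;
import java.util.concurrent.atomic.AtomicLong;
import java.util.concurrent.atomic.AtomicReferenceArray;
import java.util.concurrent.locks.ReentrantLock;
import java.util.function.Supplier;

/** Hypothetical hybrid pool: lock-based producers, CAS-based consumers, ThreadLocal fast path. */
final class HybridPool<T> {
    static final class Holder<E> {
        static final int FREE = 0, USED = 1;
        private final E value;
        final AtomicInteger state = new AtomicInteger(FREE);
        // true while this holder has an unconsumed entry in the ring (keeps the ring bounded)
        final AtomicBoolean inRing = new AtomicBoolean(true);

        Holder(E value) { this.value = value; }

        E get() { return value; }
    }

    private final int capacity;
    private final AtomicReferenceArray<Holder<T>> ring;
    private final AtomicLong takeIndex = new AtomicLong(); // advanced by consumers with CAS
    private volatile long releaseIndex;                   // advanced by producers under the lock
    private final ReentrantLock releaseLock = new ReentrantLock();
    private final ThreadLocal<Holder<T>> lastUsed = new ThreadLocal<>();

    HybridPool(int capacity, Supplier<T> factory) {
        this.capacity = capacity;
        this.ring = new AtomicReferenceArray<>(capacity);
        for (int i = 0; i < capacity; i++) ring.set(i, new Holder<>(factory.get()));
        this.releaseIndex = capacity; // every holder starts out published and free
    }

    /** Never blocks; returns null if no holder is available at the moment. */
    Holder<T> take() {
        Holder<T> cached = lastUsed.get();
        if (cached != null && cached.state.compareAndSet(Holder.FREE, Holder.USED)) {
            return cached; // value locality: this thread gets back the object it used last
        }
        while (true) {
            long t = takeIndex.get();
            if (t >= releaseIndex) return null; // nothing published beyond the take index
            Holder<T> h = ring.get((int) (t % capacity));
            if (!takeIndex.compareAndSet(t, t + 1)) continue; // lost the race to another consumer
            h.inRing.set(false);
            if (h.state.compareAndSet(Holder.FREE, Holder.USED)) {
                lastUsed.set(h);
                return h;
            }
            // h was claimed through another thread's fast path; its next release republishes it
        }
    }

    void release(Holder<T> h) {
        if (!h.state.compareAndSet(Holder.USED, Holder.FREE)) {
            throw new IllegalStateException("holder was not taken");
        }
        if (!h.inRing.compareAndSet(false, true)) return; // an entry is still pending, nothing to publish
        releaseLock.lock(); // producers are serialized by a lock, as in ArrayBlockingQueue
        try {
            long r = releaseIndex;
            ring.lazySet((int) (r % capacity), h); // cheap ordered store of the slot
            releaseIndex = r + 1;                  // volatile write publishes the slot before unlock
        } finally {
            releaseLock.unlock();
        }
    }
}
```

`take()` returns null instead of waiting when the pool is exhausted. A caller could spin, back off, or fall back to allocating.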

If you are interested in having a look at the code, it is available at FastObjectPool.java.

Hi Ashkrit – the link to the code does not work?

I have put the code on GitHub; try the link below:

https://github.com/ashkrit/blog/blob/master/FastObjectPool/FastObjectPool.java

Is there any chance of modifying your FastObjectPool so that it behaves more like a ConcurrentLinkedQueue, with take and release returning and consuming type T instead of holder objects? Then users of the library would not have to manage holder objects everywhere. It would also be great if you didn't have to set the size at creation, so that the pool grows on demand as objects are released to it. The problem with ConcurrentLinkedQueue is that its offer method creates garbage at line 327: final Node newNode = new Node(e); your FastObjectPool does not seem to…

Your idea would make it much cleaner, but the reason I did it that way was to maintain state on the object, i.e. whether it is used or free. If I start returning T objects, then I also have to find a better way of doing the ThreadLocal optimization I did to reduce contention. Another option that came to mind during implementation was a dynamic proxy, but that would be over-engineering for a simple problem, so I chose this trade-off. Regarding flexible size: most of the object pools I have seen/used are bounded by size, it is good to…

1. Performance: Object pooling provides better application performance because objects are not created when the client actually needs to perform an operation on them. Instead, objects are already created in the pool and readily available at any time. Object creation is done well in advance, so it helps achieve better run-time performance.
2. Object sharing: Object pooling encourages sharing. Objects available in the pool can be shared among multiple worker threads: one thread uses an object and, once done, returns it to its pool, and then it can be…