Reactive Queue with Akka Reactive Streams

Reactive streams is a recently announced initiative to create a standard for asynchronous stream processing with built-in back-pressure, on the JVM. The working group is formed by companies such as Typesafe, Red Hat, Oracle, Netflix, and others.

One of the early, experimental implementations is based on Akka. Preview version 0.3 includes actor producers & consumers, which opens up some new integration possibilities.

To test the new technology, I implemented a very simple Reactive Message Queue. The code is at a PoC stage, lacks error handling and such, but if used properly – works!

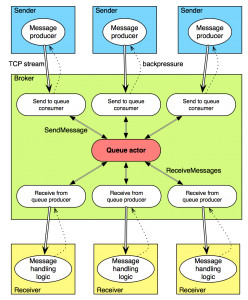

The queue is reactive, meaning that messages will be delivered to interested parties whenever there’s demand, without polling. Back-pressure is applied both when sending messages (so that senders do not overwhelm the broker), and when receiving messages (so that the broker sends only as many messages as the receivers can consume).

Let’s see how it works!

The queue

First, the queue itself is an actor, and doesn’t know anything about (reactive) streams. The code is in the com.reactmq.queue package. The actor accepts the following actor-messages (the term “message” is overloaded here, so I’ll use plain “message” to mean the messages we send to and receive from the queue, and “actor-messages” to be the Scala class instances sent to actors):

- SendMessage(content) – sends a message with the specified String content. A reply (SentMessage(id)) is sent back to the sender with the id of the message
- ReceiveMessages(count) – signals that the sender (actor) would like to receive up to count messages. The count is cumulated with previously signalled demand.
- DeleteMessage(id) – unsurprisingly, deletes a message
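As a rough sketch, the actor-messages listed above could be modelled as plain case classes. The field types (a String id, an Int count) and the enclosing QueueProtocolSketch object are my assumptions for illustration, not necessarily what the project declares:

```scala
// Sketch of the queue actor's protocol. Field types are assumptions.
object QueueProtocolSketch {
  // Sent to the queue to enqueue a new message.
  final case class SendMessage(content: String)
  // Reply to SendMessage, carrying the id assigned to the message.
  final case class SentMessage(id: String)
  // Signals demand for up to `count` more messages.
  final case class ReceiveMessages(count: Int)
  // Acknowledges (deletes) a previously received message.
  final case class DeleteMessage(id: String)
}
```

Keeping the protocol as immutable case classes means the actor's receive block can pattern-match on them directly.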

The queue implementation is a simplified version of what’s in ElasticMQ. After a message is received, if it is not deleted (acknowledged) within 10 seconds, it becomes available for receiving again.

When an actor signals demand for messages (by sending ReceiveMessages to the queue actor), it should expect any number of ReceivedMessages(msgs) actor-message replies, containing the received data.
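To make the redelivery rule and the cumulative demand more concrete, here is a minimal, actor-free model of the queue storage. It is only a sketch: the QueueModel class, its method names, the single-receiver simplification and the explicit `now` timestamps (in milliseconds) are all assumptions made for illustration, not the actual code.

```scala
import scala.collection.mutable

// Simplified, single-receiver model of the queue storage (hypothetical names).
final class QueueModel(visibilityTimeoutMs: Long = 10000L) {
  private final class Stored(val id: String, val content: String, var visibleAt: Long)

  // Insertion-ordered, so older messages are delivered first.
  private val messages = mutable.LinkedHashMap.empty[String, Stored]
  private var nextId = 0L
  private var demand = 0 // demand signalled so far and not yet satisfied

  /** Stores a message and returns its id. */
  def send(content: String, now: Long): String = {
    nextId += 1
    val id = s"m$nextId"
    messages(id) = new Stored(id, content, now)
    id
  }

  /** Adds `count` to the outstanding demand and delivers what is available. */
  def receive(count: Int, now: Long): List[(String, String)] = {
    demand += count
    deliver(now)
  }

  /** Delivers visible messages, never more than the outstanding demand. */
  def deliver(now: Long): List[(String, String)] = {
    val ready = messages.values.filter(_.visibleAt <= now).take(demand).toList
    // A delivered message is hidden until the timeout passes or it is deleted.
    ready.foreach { m => m.visibleAt = now + visibilityTimeoutMs }
    demand -= ready.size
    ready.map(m => m.id -> m.content)
  }

  /** Acknowledges a message so that it is never delivered again. */
  def delete(id: String): Unit = { messages.remove(id); () }
}
```

A message that was received but not deleted becomes deliverable again once `now` reaches its `visibleAt` time, which mirrors the 10-second rule described above.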

Going reactive

To create and test our reactive queue, we need three applications: a Sender, a Receiver and a Broker.

We can run any number of Senders and Receivers, but of course we should run only one Broker.

The first thing that we need to do is to connect the Sender with the Broker, and the Receiver with the Broker over a network. We can do that with the Akka IO extension and the reactive TCP extension. Using a connect & bind pair, we get a stream of connections on the binding side:

    // sender:
    val connectFuture = IO(StreamTcp) ? StreamTcp.Connect(settings, sendServerAddress)
    connectFuture.onSuccess {
      case binding: StreamTcp.OutgoingTcpConnection =>
        logger.info("Sender: connected to broker")
        // per-connection logic
    }

    // broker:
    val bindSendFuture = IO(StreamTcp) ? StreamTcp.Bind(settings, sendServerAddress)
    bindSendFuture.onSuccess {
      case serverBinding: StreamTcp.TcpServerBinding =>
        logger.info("Broker: send bound")
        Flow(serverBinding.connectionStream).foreach { conn =>
          // per-connection logic
        }.consume(materializer)
    }

There’s a different address for sending and receiving messages.

The sender

Let’s look at the per-connection logic of the Sender first.

    Flow(1.second, () => { idx += 1; s"Message $idx from $senderName" })
      .map { msg =>
        logger.debug(s"Sender: sending $msg")
        createFrame(msg)
      }
      .toProducer(materializer)
      .produceTo(binding.outputStream)

We are creating a tick-flow which produces a new message every second (very convenient for testing). Using the map stream transformer, we are creating a byte-frame with the message (more on that later). But that’s only a description of what our (very simple) stream should look like; it needs to be materialized using the toProducer method, which will provide concrete implementations of the stream transformation nodes. Currently there’s only one FlowMaterializer, which – again unsurprisingly – uses Akka actors under the hood, to actually create the stream and the flow.

Finally, we connect the producer we have just created to the TCP binding’s outputStream, which happens to be a consumer. And we now have a reactive over-the-network stream of messages, meaning that messages will be sent only when the Broker can accept them. Otherwise back-pressure will be applied all the way up to the tick producer.

The broker: sending messages

On the other side of the network sits the Broker. Let’s see what happens when a message arrives.

    Flow(serverBinding.connectionStream).foreach { conn =>
      logger.info(s"Broker: send client connected (${conn.remoteAddress})")

      val sendToQueueConsumer = ActorConsumer[String](
        system.actorOf(Props(new SendToQueueConsumer(queueActor))))

      // sending messages to the queue, receiving from the client
      val reconcileFrames = new ReconcileFrames()

      Flow(conn.inputStream)
        .mapConcat(reconcileFrames.apply)
        .produceTo(materializer, sendToQueueConsumer)
    }.consume(materializer)

First, we create a Flow from the connection’s input stream – that’s going to be the incoming stream of bytes. Next, we re-construct the String instances that were sent using our framing, and finally we direct that stream to a send-to-queue consumer.

The SendToQueueConsumer is a per-connection bridge to the main queue actor. It uses the ActorConsumer trait from Akka’s Reactive Streams implementation, to automatically manage the demand that should be signalled upstream. Using that trait we can create a reactive-stream-Consumer[_], backed by an actor – so a fully customisable sink.

    class SendToQueueConsumer(queueActor: ActorRef) extends ActorConsumer {

      // messages sent to the queue for which no id has come back yet
      private var inFlight = 0

      override protected def requestStrategy = new MaxInFlightRequestStrategy(10) {
        override def inFlightInternally = inFlight
      }

      override def receive = {
        case OnNext(msg: String) =>
          queueActor ! SendMessage(msg)
          inFlight += 1

        case SentMessage(_) => inFlight -= 1
      }
    }

What needs to be provided to an ActorConsumer is a way of measuring how many stream items are currently being processed. Here, we are counting the number of messages that have been sent to the queue, but for which we have not yet received an id (so they are being processed by the queue).

The consumer receives new messages wrapped in the OnNext actor-message; so OnNext is sent to the actor by the stream, and SentMessage is sent in reply to a SendMessage by the queue actor.
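To illustrate why the in-flight counter is enough to drive back-pressure, here is a simplified, stand-alone model of a "max in flight" demand policy. This is not Akka's MaxInFlightRequestStrategy code; the InFlightDemand class and its method names are hypothetical, and the real strategy may batch requests differently.

```scala
// Simplified model of a "max in flight" demand policy (not Akka's code).
final class InFlightDemand(max: Int) {
  private var inFlight = 0  // handed to the queue, not yet confirmed
  private var requested = 0 // demanded from upstream, not yet delivered

  /** Returns how many more elements may be requested from upstream now. */
  def toRequest(): Int = {
    val n = math.max(0, max - inFlight - requested)
    requested += n
    n
  }

  /** An element arrived from upstream and was passed on to the queue. */
  def onNext(): Unit = { requested -= 1; inFlight += 1 }

  /** The queue confirmed one element (the SentMessage reply). */
  def onConfirmed(): Unit = inFlight -= 1
}
```

With a limit of 10, no further demand is signalled while 10 messages are waiting for confirmation; every confirmation frees one slot, which is what propagates the queue's pace back to the sender.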

Receiving

The receiving part is done in a similar way, though it requires some extra steps. First, if you take a look at the Receiver, you’ll see that we are reading bytes from the input stream, re-constructing messages from frames, and sending back the ids, hence acknowledging the message. In reality, we would run some message-processing-logic between receiving a message and sending back the id.

On the Broker side, we create two streams for each connection.

One is a stream of messages sent to receivers, the other is a stream of acknowledged message ids from the receivers, which are simply transformed to sending DeleteMessage actor-messages to the queue actor.

Similarly to the consumer, we need a per-connection receiving bridge from the queue actor, to the stream. That’s implemented in ReceiveFromQueueProducer. Here we are extending the ActorProducer trait, which lets you fully control the process of actually creating the messages which go into the stream.

In this actor, the Request actor-message is being sent by the stream, to signal demand. When there’s demand, we request messages from the queue. The queue will eventually respond with one or more ReceivedMessages actor-messages (when there are any messages in the queue); as the number of messages will never exceed the signalled demand, we can safely call the ActorProducer.onNext method, which sends the given items downstream.
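The demand bookkeeping described above can be sketched without any actors. The ReceiveBridge class below is a hypothetical model: `askQueue` stands in for sending ReceiveMessages to the queue actor, and `onNext` stands in for ActorProducer.onNext. The `require` check spells out the invariant that the queue never delivers more than was demanded.

```scala
// Actor-free model of the receiving bridge (hypothetical names).
final class ReceiveBridge(askQueue: Int => Unit, onNext: String => Unit) {
  private var outstanding = 0 // demanded by the stream, not yet delivered

  /** The stream signalled demand: forward it to the queue. */
  def request(elements: Int): Unit = {
    outstanding += elements
    askQueue(elements)
  }

  /** The queue replied with some messages: push them downstream. */
  def receivedMessages(msgs: List[String]): Unit = {
    require(msgs.size <= outstanding, "queue delivered more than was demanded")
    outstanding -= msgs.size
    msgs.foreach(onNext)
  }
}
```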

Framing

One small detail is that we need a custom framing protocol (thanks to Roland Kuhn for the clarification), as the TCP stream is just a stream of bytes, so we can get arbitrary fragments of the data, which need to be recombined later. Luckily implementing such a framing is quite simple – see the Framing class. Each frame consists of the size of the message, and the message itself.
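A size-prefixed framing along these lines can be sketched as follows. The names createFrame and ReconcileFrames come from the snippets above, but the details here are my assumptions for a self-contained example: a 4-byte big-endian length prefix, UTF-8 content, and plain Array[Byte] instead of whatever byte container the real Framing class uses.

```scala
import java.nio.ByteBuffer
import java.nio.charset.StandardCharsets.UTF_8

object FramingSketch {
  /** Encodes a message as a 4-byte length followed by its UTF-8 bytes. */
  def createFrame(msg: String): Array[Byte] = {
    val body = msg.getBytes(UTF_8)
    ByteBuffer.allocate(4 + body.length).putInt(body.length).put(body).array()
  }

  /** Stateful decoder: feed it arbitrary chunks, get back complete messages. */
  final class ReconcileFrames {
    private var buffer = Array.emptyByteArray // bytes of incomplete frames

    def apply(chunk: Array[Byte]): List[String] = {
      buffer = buffer ++ chunk
      val out = List.newBuilder[String]
      var more = true
      while (more) {
        if (buffer.length >= 4) {
          val size = ByteBuffer.wrap(buffer, 0, 4).getInt()
          if (buffer.length >= 4 + size) {
            // A whole frame is available: decode it and drop it from the buffer.
            out += new String(buffer, 4, size, UTF_8)
            buffer = buffer.drop(4 + size)
          } else more = false // body not complete yet
        } else more = false   // length prefix not complete yet
      }
      out.result()
    }
  }
}
```

Because apply returns a (possibly empty) list of messages per incoming chunk, it fits naturally into a mapConcat step: a chunk may complete zero, one or several frames.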

Summing up

Using Reactive Streams and the Akka implementation, it is very easy to create reactive applications with end-to-end back-pressure. The queue above, while missing a lot of features and proofing, won’t allow the Broker to be overloaded by the Senders, or the Receivers to be overloaded by the Broker. And all that, without the need to actually write any of the backpressure-handling code!

Reference: Reactive Queue with Akka Reactive Streams from our JCG partner Adam Warski at the Blog of Adam Warski.