Bugs and cracks

Compile-time and run-time villains.

Consider the software system on which you currently work. Would you release that system with 31,197 bugs? Not potential bugs, mind; actual identified bugs.

If you answer yes to this then you can stop reading now. This post is not for you.

Taking a ridiculously simplistic view of software, we can split software development into three large boxes, two of which are user-experience realization and structure.

User-experience realization is the process of making software do what the user wants. This concerns the software’s run-time behavior.

Structure, on the other hand, encompasses the science of composing the system from components (methods, classes, packages, bundles, etc.) and establishing relations between them. This box concerns itself entirely with compile-time: it focuses on source code, on text, on textual relations.

Programmers care about both run-time behavior and structure.

Users care only about run-time behavior. They do not care about structure. Number of software systems in the world available in two versions of identical behavior – one poorly-structured, the other well-structured – thereby offering the user choice based on structure alone: 0.

This disparity of interest has produced the modern field of software development in which programmers have a universal term for a run-time fault, “Bug,” because users care about such things. No such term exists, however, for a structural fault. This omission has wasted – and continues to waste, every year – untold billions of dollars and (very told) miserable programmer-hours.

Code smells.

“Bug” is perhaps not a great word for a behavior fault, but the word is old and has acquired gravitas. A common misconception holds that the great Grace Hopper first used the word, “Bug,” to describe an insect found skittering through the snapping relays of primordial computer hardware back in the 1940s. The word, however, had already enjoyed currency among engineers who used it, long before computers, to denote various engineering problems. Edison, of all people, was using it in this sense in the 1870s.

Hopper did, nonetheless, popularize the term among software engineers, and whatever its provenance, every programmer in the world today knows that sickly fear when an email arrives with the subject, “I found a bug in your program. Again! FFS!”

Of course, programmers discuss structural faults also (usually in the programs of others), but couch such discussions in vagueness: “This code looks messy,” “That code has too many dependencies,” “This other code is difficult to change.”

Worse still, many programmers associate Fowler’s phrase “Code smell” with a structural fault. This is regrettable, not because the code smell concept lacks merit but because a code smell is not the same as a structural fault. Though both beasts exist at compile-time, equating the two is wrong.

Fowler offers the definition: “A code smell is a surface indication that usually corresponds to a deeper problem in the system.”

A structural fault, however, is not a surface indication of a deeper problem: a structural fault is the deeper problem.

Programmers often decide to live with code smells because, by this definition, code smells do not always represent problems to be fixed: they only “Correspond” to problems, and then only “Usually.” A programmer receiving an email with the subject, “I found a code smell in your program,” would probably just tool up for a good argument.

Structural faults automatically scream for correction just as much as bugs automatically scream for correction.

Further highlighting the discrepancy between the compile-time and run-time arenas, note that in the run-time arena there are bugs but no “Run-time smells.” That is, there are no run-time faults that programmers decide to live with. (True, management might force them to live with a fault by refusing to finance a correction, but the programmer will not make that decision herself in the way that she might for a compile-time code smell.)

Crack.

So we lack a word to describe a structural fault that should prevent a software release just as a bug would prevent that release. Fortunately, the field of real-world masonry-and-steel structural engineering already has such a word: “Crack.”

You do not buy a pot with a cracked lid. You do not buy a phone with a cracked screen. You do not buy a car with a cracked axle.

In all these cases, you could still use the item – you could use that phone and drive that car – but the crack tells you immediately that, structurally, something is wrong.

Of course, even in the real world, cracks exist at different scales. An electron microscope will reveal even the smoothest surface to be scored with micro-cracks, leaving the notion of whether a surface is cracked somewhat statistical but at least objectively so. And so it is in software.

The recent review of Apache’s Lucene characterized its structure using four types of crack.

Firstly, Java systems avail of both packages and interfaces, so most programmers employ the facade pattern to ensure that packages of cohesive functionality remain separated from one another’s implementations by exporting interfaces through which clients use their services. This allows packages to be tested in isolation and updated with minimal system impact. Therefore any method dependency chain stretched over more than two packages must access an implementation directly, rather than via a facade. This is a crack.
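As a rough illustration of how this first rule might be checked, the sketch below flags any dependency chain whose methods sit in more than two distinct packages. The class name `FacadeCrack`, the package names in the usage line, and the idea of representing a chain as the list of packages its methods belong to are all assumptions made for this example; none of them comes from the Lucene review.

```java
import java.util.List;

// Hypothetical checker for the first type of crack described above.
final class FacadeCrack {

    // Number of distinct packages touched by one method dependency chain.
    // Each list element is the package of one method in the chain, in call order.
    static int packagesSpanned(List<String> packagesAlongChain) {
        return (int) packagesAlongChain.stream().distinct().count();
    }

    // A chain is treated as a crack when it spans more than two packages.
    static boolean isCrack(List<String> packagesAlongChain) {
        return packagesSpanned(packagesAlongChain) > 2;
    }
}
```

Calling `FacadeCrack.isCrack(List.of("app.ui", "app.search", "app.search.impl"))` reports a crack, whereas a chain that stays within `app.ui` and `app.search` does not.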

Secondly, Java systems avail of access modifiers, so any element declared at a scope at which it is unused is a crack. A public class accessed only within a package is a crack. A public method accessed only within a class is a crack.
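This second rule reduces to a comparison: is the declared visibility wider than the widest scope from which the element is actually used? A minimal sketch follows, assuming a hypothetical `Visibility` enum that deliberately ignores `protected` to keep the ordering simple, and assuming some analyzer has already worked out the widest actual use.

```java
// Hypothetical model of Java visibility, ordered from narrowest to widest.
// `protected` is left out on purpose to keep this sketch simple.
enum Visibility { PRIVATE, PACKAGE, PUBLIC }

final class ScopeCrack {

    // An element is a crack when it is declared wider than its widest actual use.
    static boolean isCrack(Visibility declared, Visibility widestActualUse) {
        return declared.compareTo(widestActualUse) > 0;
    }
}
```

So `ScopeCrack.isCrack(Visibility.PUBLIC, Visibility.PACKAGE)` is true (a public class used only within its package), while `ScopeCrack.isCrack(Visibility.PUBLIC, Visibility.PUBLIC)` is false.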

Thirdly, and statistically, is dependency length. The longer the dependencies that run through a system, the more costly that system will be to maintain. Thus, long dependencies are cracks.
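To make “dependency length” concrete, here is one way it might be measured: take the call graph as a map from each method to the methods it calls, and find the longest chain, counted in methods. The class name `DependencyLength` and the map representation are assumptions for this sketch, and it further assumes the graph has no cycles (no recursion); a real analyzer would need to handle those.

```java
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Hypothetical measurement of the longest method dependency chain.
final class DependencyLength {

    // Length, in methods, of the longest chain starting at `method`.
    // Assumes an acyclic call graph; a cycle would recurse without end.
    static int longestFrom(String method,
                           Map<String, List<String>> calls,
                           Map<String, Integer> memo) {
        Integer cached = memo.get(method);
        if (cached != null) {
            return cached;
        }
        int longestCallee = 0;
        for (String callee : calls.getOrDefault(method, List.of())) {
            longestCallee = Math.max(longestCallee, longestFrom(callee, calls, memo));
        }
        int length = longestCallee + 1; // count this method itself
        memo.put(method, length);
        return length;
    }

    // Longest chain anywhere in the graph.
    static int longest(Map<String, List<String>> calls) {
        Map<String, Integer> memo = new HashMap<>();
        int longest = 0;
        for (String method : calls.keySet()) {
            longest = Math.max(longest, longestFrom(method, calls, memo));
        }
        return longest;
    }
}
```

A project would then pick its own threshold and treat every chain beyond it as a crack.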

Finally, and again statistically, the more dependencies per method that run through a system, the more costly that system will be to maintain. Thus, high dependency-to-method densities are cracks.
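The density in question is just a ratio: total dependencies divided by total methods. A small sketch, again assuming a hypothetical call-graph map from each method to the methods it calls, with `DependencyDensity` as an invented name:

```java
import java.util.HashSet;
import java.util.List;
import java.util.Map;
import java.util.Set;

// Hypothetical measurement of dependencies per method.
final class DependencyDensity {

    static double density(Map<String, List<String>> calls) {
        // Every caller and every callee counts as a method.
        Set<String> methods = new HashSet<>(calls.keySet());
        int dependencies = 0;
        for (List<String> callees : calls.values()) {
            methods.addAll(callees);
            dependencies += callees.size();
        }
        return methods.isEmpty() ? 0.0 : (double) dependencies / methods.size();
    }
}
```

Three methods with three dependencies between them give a density of 1.0; where the crack threshold lies is for each project to decide.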

Once a software development project has defined the character and threshold of these cracks, further negotiation can be brutally suppressed, just as it is in the case of bugs. Rarely can a programmer argue the case for introducing a bug into the product; so rarely should a programmer be able to argue the case for introducing a crack into the product (as they currently can for code smells).

More important than these specific four types of crack, however, is the practice itself. A software development project that rigorously defines any cracks, sets up a source code analyzer to measure them continuously, and fails a release when too many have built up will gain a tremendous advantage over its competitors in reduced development lead-time. It will do so because today’s level of software structural quality is, compared to other engineering fields, shamefully poor.
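The release gate itself can be very small. The sketch below compares measured crack counts against per-type thresholds and refuses the release if any threshold is exceeded. The class name `CrackGate`, the crack-type label `facade-bypass`, and the threshold of zero are all hypothetical; a real project would feed in counts from whatever analyzer it uses.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

// Hypothetical release gate: fail the build when cracks exceed agreed thresholds.
final class CrackGate {

    // One message per crack type whose measured count exceeds its threshold,
    // in alphabetical order of crack type.
    static List<String> violations(Map<String, Integer> measured,
                                   Map<String, Integer> thresholds) {
        List<String> result = new ArrayList<>();
        for (Map.Entry<String, Integer> entry : new TreeMap<>(thresholds).entrySet()) {
            int count = measured.getOrDefault(entry.getKey(), 0);
            if (count > entry.getValue()) {
                result.add(entry.getKey() + ": " + count + " > " + entry.getValue());
            }
        }
        return result;
    }

    static boolean releaseAllowed(Map<String, Integer> measured,
                                  Map<String, Integer> thresholds) {
        return violations(measured, thresholds).isEmpty();
    }
}
```

With a measured count of 31,197 for `facade-bypass` and a threshold of zero, `releaseAllowed` returns false and the build stops, exactly as it would for a failing test.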

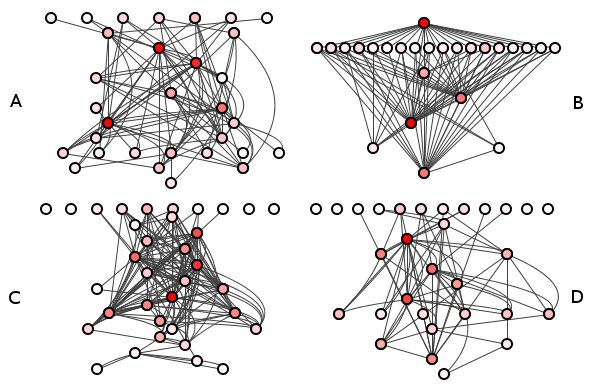

Figure 1 shows the package structures of four different systems. All these systems were designed using modern development practices and all are well-tested. Only one, however, used automated crack-detection during its construction. Which do you think it was? And which system would you rather maintain?

Lucene does not, apparently, perform such automated detection: it has 31,197 cracks of the first type alone. Would Lucene have considered releasing its product with 31,197 bugs?

Summary

This post calls not for the identification of new structural faults that may be considered unacceptable, but for a re-appraisal of all structural faults as unacceptable.

It simply defies belief that the field of software engineering chooses to treat structural faults as less important than run-time faults.

Those software companies that realize this will reap rich reward.

| Reference: | Bugs and cracks from our JCG partner Edmund Kirwan at the A blog about software. blog. |