For Java Developers – Akka HTTP In The Cloud With Maven and Docker

From time to time it is worth making the small effort to think outside the box. This is a good habit for every developer, and even if you spend just 10% of your time on new and noteworthy technology, you will gain experience and broaden your knowledge. I have wanted to look into Scala and Akka for a while. Both are well-known old acquaintances on many conference agendas. But honestly, I never felt the need to take a second look. This changed quite a bit when I started to look deeper into microservices and the concepts around them. Let’s get started and see what’s in there.

What Is Akka? And why Scala?

But first, a few sentences about what Akka is. The name AKKA is a palindrome of the letters A and K, as in Actor Kernel.

“Akka is a toolkit and runtime for building highly concurrent, distributed, and resilient message-driven applications on the JVM.”

It was built to make writing concurrent, fault-tolerant and scalable applications easier. With the help of the so-called “Actor” model, the abstraction level for those applications has been redefined, and by adopting the “let it crash” concept you can build applications that self-heal and systems that withstand very high workloads. Akka is Open Source, available under the Apache 2 License, and can be downloaded from http://akka.io/downloads. Learn more about it in the official Akka documentation. Akka comes in two flavors: with a Java and a Scala API. So, you’re basically free to choose which version you want to use in your projects. I went for Scala in this blog post because I couldn’t find enough Akka Java examples out there.
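To make the “let it crash” idea a little more concrete, here is a minimal sketch in plain Scala. It deliberately does not use the Akka API: `Counter`, `run` and the message format are made-up names for illustration, and a real actor system adds asynchronous mailboxes and configurable supervision on top. The sketch only shows the core idea of letting a worker fail and replacing it with a fresh instance instead of coding defensively.

```scala
import scala.util.{Failure, Success, Try}

object LetItCrashSketch {
  // A worker with private state. It does no input validation and simply
  // throws (NumberFormatException) when a message is not a number.
  final class Counter {
    private var total = 0
    def receive(msg: String): Int = { total += msg.toInt; total }
  }

  // A very small "supervisor": it hands messages to the worker one by one
  // and replaces the worker with a fresh instance whenever it crashes.
  // Returns (last known total, number of restarts).
  def run(mailbox: Seq[String]): (Int, Int) = {
    var worker = new Counter
    var restarts = 0
    var last = 0
    for (msg <- mailbox) {
      Try(worker.receive(msg)) match {
        case Success(total) => last = total
        case Failure(_) =>
          worker = new Counter // state of the crashed worker is discarded
          restarts += 1
          last = 0
      }
    }
    (last, restarts)
  }

  def main(args: Array[String]): Unit =
    println(run(Seq("1", "2", "oops", "5"))) // prints (5,1)
}
```

The message "oops" crashes the worker once, so the result shows one restart and a total of 5, because the state accumulated before the crash is thrown away together with the failed worker.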

Why Should A Java Developer Care?

I don’t know a lot about you, but I was just curious about it and started to browse the documentation a bit. The “Obligatory Hello World” didn’t lead me anywhere. Maybe because I was (am) still thinking too much in Maven and Java, but we’re here to open our minds a bit, so it was about time to change that. Resilient and message-driven systems seem to be the most promising way of designing microservices-based applications. And even if there are things like Vert.x which are a lot more accessible for Java developers, it is never bad to look into something new. Because I didn’t get anywhere close to where I wanted to be with the documentation, I gave Konrad `@ktosopl` Malawski a ping and asked for help. He came up with a nice little Akka-HTTP example for me to take apart and learn. Thanks for your help!

Akka, Scala and now Akka-HTTP?

Another new name. The Akka HTTP modules implement a full server- and client-side HTTP stack on top of akka-actor and akka-stream. It’s not a web-framework but rather a more general toolkit for providing and consuming HTTP-based services. And this is what I wanted to take a look at. Sick of reading? Let’s get started:

Clone And Compile – A Smoke-Test

Git clone Konrad’s example to a folder of choice and start to compile and run it:

    git clone https://github.com/ktoso/example-akka-http.git
    cd example-akka-http
    mvn exec:java

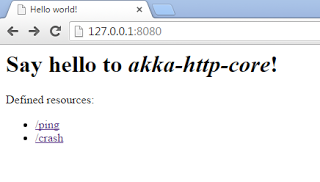

After downloading the internet, point your browser to http://127.0.0.1:8080/ and try the “ping” link. You get a nice “PONG!” answer.

Congratulations. Now let’s look at the code.

The Example Code

Looking at the pom.xml and the exec-maven-plugin configuration points us to the com.example.ExampleServer class in ExampleServer.scala. It extends ExampleRoutes (ExampleRoutes.scala) and obviously has some routes defined. Not surprisingly, those map to the links you can use from the index page. It kind of makes sense, even if you don’t understand Scala. For the Java developers among us, Konrad was kind enough to add a Java Akka example (JavaExampleServer.java). If I compare both of them, I still like the Java example a lot better, but it is probably also a little longer. Just choose what you like best.

There’s one very cool thing in the example that you might want to check out. The line is emitting a Reactive Streams source of data which is pushed exactly as fast as the client can consume it, and it is only generated “on demand”. See http://www.reactive-streams.org/ for more details.
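What “only generated on demand” means can be sketched without any Akka dependency. The following is not the code from Konrad’s example and not a complete, spec-compliant implementation (no validation of the requested amount, no thread safety). It uses the `java.util.concurrent.Flow` interfaces that ship with JDK 9 and later and mirror the Reactive Streams interfaces; `CountingPublisher`, `SlowClient` and `ready` are hypothetical names chosen for this illustration.

```scala
import java.util.concurrent.Flow.{Publisher, Subscriber, Subscription}
import scala.collection.mutable.ListBuffer

object OnDemandSketch {
  // Hypothetical publisher: produces 1, 2, 3, ... but only when asked.
  final class CountingPublisher extends Publisher[Int] {
    var generated = 0 // number of elements actually produced so far

    override def subscribe(subscriber: Subscriber[_ >: Int]): Unit =
      subscriber.onSubscribe(new Subscription {
        private var cancelled = false

        // Demand signal: emit at most n further elements.
        override def request(n: Long): Unit = {
          var sent = 0L
          while (sent < n && !cancelled) {
            generated += 1
            subscriber.onNext(generated)
            sent += 1
          }
        }

        override def cancel(): Unit = cancelled = true
      })
  }

  // Hypothetical client that decides itself how much it wants to receive.
  final class SlowClient extends Subscriber[Int] {
    val received = ListBuffer.empty[Int]
    private var subscription: Option[Subscription] = None

    override def onSubscribe(s: Subscription): Unit = subscription = Some(s)
    override def onNext(item: Int): Unit = received += item
    override def onError(t: Throwable): Unit = ()
    override def onComplete(): Unit = ()

    // Tell the publisher that n more elements can be handled.
    def ready(n: Long): Unit = subscription.foreach(_.request(n))
  }

  def main(args: Array[String]): Unit = {
    val publisher = new CountingPublisher
    val client = new SlowClient
    publisher.subscribe(client)
    println(publisher.generated) // prints 0: nothing is produced without demand
    client.ready(2)
    client.ready(1)
    println(client.received.toList) // prints List(1, 2, 3)
  }
}
```

The publisher never runs ahead of the client: the number of generated elements always equals the number the client has asked for so far.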

The main advantage of the example is obviously that it provides a complete Maven based build for both languages and can be easily used to learn a lot more about Akka. A good jumping off point. And because there is not a lot more in this example from a feature perspective let’s see if we can get this to run in the cloud.

Deploying Akka – In A Container

According to the documentation there are three different ways of deploying Akka applications:

- As a library: used as a regular JAR on the classpath and/or in a web app, to be put into WEB-INF/lib

- Package with sbt-native-packager which is able to build *.deb, *.rpm or docker images which are prepared to run your app.

- Package and deploy using Typesafe ConductR.

I don’t know anything about sbt and ConductR, so I thought I would just go with what I have been playing around with lately anyway: a container. If it runs from a Maven build, I can easily package it as an image. Let’s go. The first step is to add the Maven Shade Plugin to the pom.xml:

    <plugin>
      <groupId>org.apache.maven.plugins</groupId>
      <artifactId>maven-shade-plugin</artifactId>
      <version>2.4.1</version>
      <executions>
        <execution>
          <phase>package</phase>
          <goals>
            <goal>shade</goal>
          </goals>
          <configuration>
            <shadedArtifactAttached>true</shadedArtifactAttached>
            <shadedClassifierName>allinone</shadedClassifierName>
            <artifactSet>
              <includes>
                <include>*:*</include>
              </includes>
            </artifactSet>
            <transformers>
              <transformer implementation="org.apache.maven.plugins.shade.resource.AppendingTransformer">
                <resource>reference.conf</resource>
              </transformer>
              <transformer implementation="org.apache.maven.plugins.shade.resource.ManifestResourceTransformer">
                <mainClass>com.example.ExampleServer</mainClass>
              </transformer>
            </transformers>
          </configuration>
        </execution>
      </executions>
    </plugin>

The shade plugin creates an uber jar, which is exactly what I want in this case. There are three special cases in the configuration. We need to:

- attach the shaded artifact to access it from the Docker Maven Plugin

- use the AppendingTransformer, because the reference.conf files from the individual jars need to be concatenated during the build rather than having just one of them picked.

- define the main class that we want to run.
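Why appending matters for reference.conf can be illustrated with a tiny sketch. It is plain Scala and does not use the Typesafe Config library or the shade plugin itself; the two configuration strings are made-up excerpts standing in for the reference.conf files of two different jars, and `pickOne`, `append` and `keys` are hypothetical helpers.

```scala
object ReferenceConfSketch {
  // Made-up excerpts standing in for the reference.conf of two different jars.
  val fromActorJar = "akka.actor.provider = local"
  val fromHttpJar  = "akka.http.server.idle-timeout = 60 s"

  // "Pick one": only a single file with that name survives in the uber jar.
  def pickOne(files: Seq[String]): String = files.head

  // Appending merge: the content of every file ends up in the result.
  def append(files: Seq[String]): String = files.mkString("\n")

  // Tiny "key = value" reader, just enough for this illustration
  // (this is not real HOCON parsing).
  def keys(conf: String): Set[String] =
    conf.split("\n")
      .map(_.trim)
      .filter(_.contains("="))
      .map(_.takeWhile(_ != '=').trim)
      .toSet

  def main(args: Array[String]): Unit = {
    val files = Seq(fromActorJar, fromHttpJar)
    val httpKey = "akka.http.server.idle-timeout"
    println(keys(pickOne(files)).contains(httpKey)) // prints false
    println(keys(append(files)).contains(httpKey))  // prints true
  }
}
```

With “pick one”, the defaults of every module except one are missing from the uber jar. With appending, all of them are still there when the application starts.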

When this is done, it is about time to configure our Docker Maven Plugin accordingly:

    <plugin>
      <groupId>org.jolokia</groupId>
      <artifactId>docker-maven-plugin</artifactId>
      <version>0.13.5</version>
      <configuration>
        <images>
          <image>
            <name>myfear/akka-sample:latest</name>
            <build>
              <from>jboss/base-jdk:8</from>
              <maintainer>markus@jboss.org</maintainer>
              <ports>
                <port>8080</port>
              </ports>
              <entryPoint>
                <exec>
                  <arg>java</arg>
                  <arg>-jar</arg>
                  <arg>/opt/akka-http/akka-http-service.jar</arg>
                </exec>
              </entryPoint>
              <assembly>
                <inline>
                  <dependencySets>
                    <dependencySet>
                      <useProjectAttachments>true</useProjectAttachments>
                      <includes>
                        <include>com.example:akka-http-example:jar:allinone</include>
                      </includes>
                      <outputFileNameMapping>akka-http-service.jar</outputFileNameMapping>
                    </dependencySet>
                  </dependencySets>
                </inline>
                <user>jboss:jboss:jboss</user>
                <basedir>/opt/akka-http</basedir>
              </assembly>
            </build>
            <run>
              <ports>
                <port>${swarm.port}:8080</port>
              </ports>
              <wait>
                <http>
                  <url>http://${docker.host.address}:${swarm.port}</url>
                  <status>200</status>
                </http>
                <time>30000</time>
              </wait>
              <log>
                <color>yellow</color>
                <prefix>AKKA</prefix>
              </log>
            </run>
          </image>
        </images>
      </configuration>
    </plugin>

A couple of things to notice:

- the output file mapping, which needs to be the same in the entrypoint argument.

- the project attachment include “allinone” which we defined in the Maven shade plugin.

- the user in the image assembly, which needs to be one that is defined in the base image. In this case that is jboss/base-jdk, which only knows the user jboss.

And while we’re at it, we need to tweak the example a bit. Binding the Akka HTTP server to localhost is not really helpful in a containerized environment, because the server would then only be reachable from inside the container. So, we use the java.net library to find out the actual IP of the container. One more change: comment out the post-startup procedure. The new ExampleServer looks like this:

    package com.example

    import akka.actor.ActorSystem
    import akka.http.scaladsl.Http
    import akka.stream.ActorMaterializer
    import java.net._

    object ExampleServer extends ExampleRoutes {

      implicit val system = ActorSystem("ExampleServer")
      import system.dispatcher
      implicit val materializer = ActorMaterializer()

      // settings about bind host/port
      // could be read from application.conf (via system.settings):
      val localhost = InetAddress.getLocalHost
      val interface = localhost.getHostAddress
      val port = 8080

      def main(args: Array[String]): Unit = {
        // Start the Akka HTTP server!
        // Using the mixed-in testRoutes (we could mix in more routes here)
        val bindingFuture = Http().bindAndHandle(testRoutes, interface, port)
        println(s"Server online at http://$interface:$port/\nON Docker...")
      }
    }

Let’s build the Akka application and the Docker image by executing:

    mvn package docker:build

and give it a test-run:

    docker run -P myfear/akka-sample

Pointing your browser to http://192.168.99.100:32773/ will show you the working example again. (Note: The IP and port will be different in your environment. List the container port mapping with docker ps and get the IP of your boot2docker or docker-machine instance.)
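A note on the bind address: looking up the container IP with `InetAddress.getLocalHost` relies on the container hostname resolving to the right address. A common alternative is to bind to 0.0.0.0, which listens on all interfaces, and to make host and port overridable from the outside. The following is only a sketch under assumptions: the environment variable names `BIND_HOST` and `BIND_PORT` and the `resolve` helper are hypothetical and not part of the example project.

```scala
import scala.util.Try

object BindSettings {
  // Hypothetical variable names; the example project does not define them.
  val HostVar = "BIND_HOST"
  val PortVar = "BIND_PORT"

  // Resolve host and port from an environment-like map,
  // falling back to container-friendly defaults.
  def resolve(env: Map[String, String]): (String, Int) = {
    // 0.0.0.0 means "listen on all network interfaces"
    val host = env.getOrElse(HostVar, "0.0.0.0")
    // ignore values that are not valid integers and use 8080 instead
    val port = env.get(PortVar).flatMap(p => Try(p.toInt).toOption).getOrElse(8080)
    (host, port)
  }

  def main(args: Array[String]): Unit = {
    val (interface, port) = resolve(sys.env)
    println(s"Would bind to $interface:$port")
  }
}
```

The resulting `interface` and `port` values could then be passed to `Http().bindAndHandle(testRoutes, interface, port)` in place of the `InetAddress` lookup.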

Some Final Thoughts

The next step in taking this little Akka example to the cloud would be to deploy it on a PaaS. Take OpenShift for example (see this post on how to do it). As a fat-jar application, it can be easily packaged into an immutable container. I’m not going to compare Akka with anything else in the Java space, but I wanted to give you a starting point for your own first steps and encourage you to always stay curious and educate yourself about the technologies out there.

Further Readings and Information:

- Akka Website

- @AkkaTeam on Twitter

- Source Code on GitHub

- User Mailinglist

Reference: For Java Developers – Akka HTTP In The Cloud With Maven and Docker from our JCG partner Markus Eisele at the Enterprise Software Development with Java blog.