Integrating Syslog w/ Kinesis : Anticipating use of the Firehose

On the heals of the Kinesis Firehose announcement, more people are going to be looking to integrate Kinesis with logging systems. (to expedite/simplify the ingestion of logs into S3 and Redshift) Here is one take on solving that problem that integrates syslog-ng with Kinesis.

First, let’s have a look at the syslog-ng configuration. In the syslog-ng configuration, you wire sources to destinations:

source s_sys {

udp(ip(127.0.0.1) port(514));

};

destination d_test { file("/var/log/monetate/test.log"

perm(0644)

template("$MSGONLY\n")

template_escape(no)

); };

destination d_fact_kinesis { tcp("localhost"

port(4242)

template("$MSGONLY\n")

template_escape(no)

); };

log { source(s_sys); destination(d_test); destination(d_kinesis); };In this example, we have one source of events: UDP, and we have two destinations: one file and one TCP. We wire them together in the last log statement. With this configuration, any packets syslog-ng receives via UDP, are written to the TCP port and to file. File is our backup strategy, just in case something happens to Kinesis, we will also persist the events locally. TCP is our path to Kinesis.

On the TCP side of things, we can easily implement a simple TCP server in Java that receives the messages from syslog-ng and sends them out via KPL. At Monetate, we’ve done exactly that. (and I’ll look to see if we can open source the solution). However, it is really important to understand the syslog-ng flow control, and more specifically how we will handle the back pressure if/when Kinesis starts to back up.

Let’s consider how we back that thang’ up.

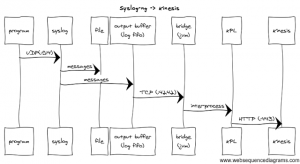

Here is a visual that shows the whole flow:

At each point in the sequence, we need to make sure that we are prepared to handle back-pressure. Specifically, we need to validate the behavior of each component when the next downstream component refuses to accept additional data.

For example, if our little TCP server (“bridge”) blindly accepted packets from the TCP stream, we would quickly OoM the JVM if/when Kinesis slowed down. Thus, in the “bridge” we need to apply back-pressure upstream, to prevent that from happening. More specifically, we need to stop consuming off of the TCP stream, and hope that syslog-ng reacts appropriately.

Fortunately, syslog-ng has accounted for this case with their “output buffer”, shown above. The output buffer is used to store messages for delivery to destinations. If the max-size is reached on that buffer, than the destination is “disconnected” as not to crash syslog. You control the max-size of the buffer through the log_fifo_size setting in syslog-ng.

Different destionation types have different behaviors under this scenario, and we tested out a few of them. First we considered a straight-up pipe between syslog and our bridge process using the “program” destination within syslog-ng. That worked well, but in the event of a backed-up queue, syslog-ng kills the program and respawns a new process. This was less than ideal. We also considered using UDP. That worked as well, and in effect eliminated the back pressure scenario because UDP is “fire and forget”. Under heavy load however, we noticed packet drops, which meant we were losing events.

In the end, we decided to go with TCP. With TCP we won’t silently lose messages, and syslog-ng won’t continually restart our process. With that decision, we needed to confirm the behavior of syslog-ng. And for that, we needed to monitor syslog-ng. This handy dandy command-line does exactly that:

syslog-ng -Fevd >& syslog.log

While monitoring syslog, we tested two failure scenarios. The first tested a dead bridge (no TCP listener). In this situation, syslog-ng won’t be able to connect. We needed to make sure syslog-ng behaves well under that case. To test this, we ran 100K messages through the system over 40 seconds (>2K/sec). When monitoring syslog-ng, we saw it trying to reconnect:

$grep 4242 syslog.log | grep failed Connection failed; server='AF_INET(127.0.0.1:4242)', error='Connection refused (111)', time_reopen='10' ... Connection failed; server='AF_INET(127.0.0.1:4242)', error='Connection refused (111)', time_reopen='10'

All the messages made it through to our log file (hallelujah!), which means the downed TCP connection had no affect on the log file destination. And furthermore, syslog-ng continually retried to establish the TCP connection, so when the process did come back up, it reconnected! nice! One very important thing to note from the above output is the “time_reopen”. It turns out that this is a global configuration option, which tells syslog-ng how long to wait until re-attempting to establish the connection. So, the behavior was solid – and we can actually configure how noisy things get if/when we lose the bridge process.

For the final test, we needed to see what happens when we apply back-pressure from the java process itself. In this case, syslog-ng can connect, but the java process refuses to read off the stream/socket. For this, we ran the same 100K test, but paused consuming in the java process to simulate a Kinesis slow-down. And again, we saw good things… All the messages made it through to the log file, and this time we saw messages from syslog-ng indicating that the ouput buffer (fifo queue) was full:

syslog.log:Destination queue full, dropping message; queue_len='1000', mem_fifo_size='1000' ... syslog.log:Destination queue full, dropping message; queue_len='1000', mem_fifo_size='1000'

And the log message tells you everything, after the queue fills up, it drops messages on the floor until it can re-establish the socket.

So, there you have it… to connect syslog to Kinesis, I’d recommend using a TCP output destination with some glue code between that and the Kinesis Producer Library (KPL). (and again, I’ll see if we can open source some of that) Just be careful, and apply the back pressure to syslog-ng.

Per our experiments, syslog-ng can back that thang up!

| Reference: | Integrating Syslog w/ Kinesis : Anticipating use of the Firehose from our JCG partner Brian ONeill at the Brian ONeill’s Blog blog. |