Building Apache Zeppelin for MapR using Spark under YARN

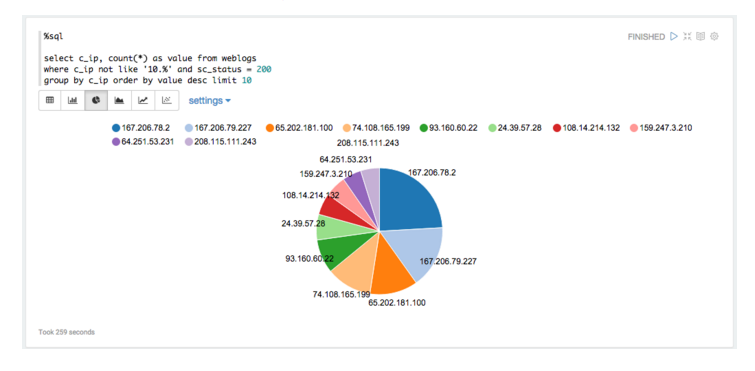

Apache Zeppelin is a web-based notebook that enables interactive data analytics. You can make beautiful data-driven, interactive and collaborative documents with Spark SQL, Scala, Hive, Flink, Kylin and more. Zeppelin enables rapid development of Spark and Hadoop workflows with simple, easy visualizations. The code from Zeppelin can be used in the Zeppelin notebooks or compiled and packaged into complete applications.

As of the current master branch (and release candidate), all the MapR build profiles are included in the Apache Zeppelin repository. Four profiles (mapr3, mapr40, mapr41, and mapr50) build Zeppelin with the appropriate MapR dependencies.

This blog provides instructions for building with the MapR profiles. Building the Hive interpreter for MapR is included, but the dependencies are commented out in the Hive pom.xml file.
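To make the profile choice concrete, here is a minimal sketch that maps a MapR version to the Maven flags used in the build commands later in this post. The function name `zeppelin_build_flags` is hypothetical, and mapr3 is left out because the post lists no build command for it.

```shell
#!/bin/sh
# Hypothetical helper: print the Maven flags for a MapR version,
# following the three build commands given in this post.
zeppelin_build_flags() {
  case "$1" in
    4.0*) echo "-Pbuild-distr -Pmapr40 -Pyarn -Pspark-1.2 -DskipTests" ;;
    4.1*) echo "-Pbuild-distr -Pmapr41 -Pyarn -Pspark-1.3 -DskipTests" ;;
    5.*)  echo "-Pbuild-distr -Pmapr50 -Pyarn -Pspark-1.3 -DskipTests" ;;
    *)    echo "no build command listed for MapR version '$1'" >&2
          return 1 ;;
  esac
}

# Print the full command instead of running it, so it can be reviewed first.
echo "mvn clean package $(zeppelin_build_flags 4.1.0)"
```

The sketch only prints the command; run the printed line from the cloned zeppelin directory once it looks right for your cluster.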

Some assumptions

- A cluster with MapR 4.0.x/5.x and Apache Spark (1.2.x, 1.3.x or 1.4.x) running under YARN

- The ability to edit a couple of text files

- A decent browser

- A machine (node or edge) to run the Zeppelin server on. This requires mapr-spark and at least the MapR client installed

- Git client, npm and Maven 3.x
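A quick way to check the last assumption is to ask the shell whether the build tools are on the PATH. This is only a sketch: `missing_tools` is a hypothetical helper, and it does not check versions (for example that Maven is 3.x).

```shell
#!/bin/sh
# Hypothetical helper: print each named tool that cannot be found on PATH,
# one per line. Prints nothing when every tool is available.
missing_tools() {
  for tool in "$@"; do
    command -v "$tool" >/dev/null 2>&1 || printf '%s\n' "$tool"
  done
}

# Tools named in the assumptions list above.
missing=$(missing_tools git npm mvn)
if [ -n "$missing" ]; then
  echo "Missing build tools:" $missing
else
  echo "git, npm and mvn are all on PATH"
fi
```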

What do you need to do?

- Make sure you have at least the MapR client and Spark installed on your machine. Test this by listing the cluster file system

hadoop fs -ls /

and starting the Spark shell (for example, version 1.2.1):

/opt/mapr/spark/spark-1.2.1/bin/spark-shell

- Find a nice directory and run

git clone https://github.com/apache/incubator-zeppelin zeppelin

cd zeppelin

- Build it (version MapR 4.0.x):

mvn clean package -Pbuild-distr -Pmapr40 -Pyarn -Pspark-1.2 -DskipTests

(for version MapR 4.1):

mvn clean package -Pbuild-distr -Pmapr41 -Pyarn -Pspark-1.3 -DskipTests

(for version MapR 5.x):

mvn clean package -Pbuild-distr -Pmapr50 -Pyarn -Pspark-1.3 -DskipTests

- This will create a directory called zeppelin-distribution. In this directory will be a runnable version of Zeppelin and a tar file. The tar file is a complete Zeppelin installation. Use it.

- Untar zeppelin-x.x.x-incubating-SNAPSHOT.tar.gz where you want to execute the Zeppelin server. Everything is local to that machine, so it is not necessary to have the Zeppelin server on a MapR cluster node.

- Configuration: assuming you have a working MapR client and Spark installation, there is little to configure. In the zeppelin-x.x.x-incubating-SNAPSHOT/conf directory, you will need to copy zeppelin-env.sh.template to zeppelin-env.sh.

- Edit zeppelin-env.sh. You need to export two items:

a. export HADOOP_CONF_DIR="/opt/mapr/hadoop/hadoop-x.x.x/etc/hadoop"

(insert the correct Hadoop version and path)

b. export ZEPPELIN_JAVA_OPTS="-Dspark.executor.instances=4 -Dspark.executor.memory=2g"

The Hadoop conf directory is where yarn-site.xml lives. The Zeppelin Java options pass settings for your Spark deployment (here, the number of executors and the memory for each). These options are explained in the Spark configuration documentation.
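The copy-and-edit steps above can be scripted if you set up Zeppelin more than once. The following is a sketch under assumptions: `write_zeppelin_env` is a hypothetical helper, both paths are passed in by you, and the executor settings are simply the example values from this post.

```shell
#!/bin/sh
# Hypothetical helper: create zeppelin-env.sh from the template (when the
# template exists and zeppelin-env.sh does not) and append the two exports.
#   $1 = Zeppelin conf directory
#   $2 = Hadoop conf directory (the one that holds yarn-site.xml)
write_zeppelin_env() {
  conf_dir=$1
  hadoop_conf=$2
  env_file="$conf_dir/zeppelin-env.sh"
  if [ ! -f "$env_file" ] && [ -f "$env_file.template" ]; then
    cp "$env_file.template" "$env_file"
  fi
  {
    printf 'export HADOOP_CONF_DIR="%s"\n' "$hadoop_conf"
    printf 'export ZEPPELIN_JAVA_OPTS="%s"\n' \
      "-Dspark.executor.instances=4 -Dspark.executor.memory=2g"
  } >> "$env_file"
}

# Example call, with the version placeholders left for you to fill in:
# write_zeppelin_env zeppelin-x.x.x-incubating-SNAPSHOT/conf \
#   /opt/mapr/hadoop/hadoop-x.x.x/etc/hadoop
```

The helper appends on every call, so running it twice leaves duplicate export lines; that is harmless but worth cleaning up by hand.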

This should be all you need to do at the command line. To start the Zeppelin server, execute

bin/zeppelin-daemon.sh start

Now you need to configure Zeppelin to use your Spark cluster. Point your browser to port 8080 on the machine running the Zeppelin server:

http://:8080

Click on Interpreter (top of the page), and edit the Spark section:

- master == yarn-client

- Save

You can configure your HiveServer2 on this page as well, if you are using one. Now click on Notebook (top of the page) and select the tutorial.

NOTES

Be aware of the port number Zeppelin runs on.

- If you are on a node of a cluster, port 8080 will probably conflict with any number of Hadoop services.

- In the conf directory (the same one that holds zeppelin-env.sh) there is also a zeppelin-site.xml template. Copy this and edit it; the port number is at the top.
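If you would rather script the port change, here is a rough sketch. It assumes the port property in zeppelin-site.xml is named zeppelin.server.port and that its value sits on the line directly after the name, which you should confirm against your own copy of the template. `set_zeppelin_port` is a hypothetical helper.

```shell
#!/bin/sh
# Hypothetical helper: rewrite the <value> on the line that follows
# <name>zeppelin.server.port</name> (assumed property name and layout).
#   $1 = path to your copy of zeppelin-site.xml
#   $2 = new port number
set_zeppelin_port() {
  site_file=$1
  port=$2
  # Reject empty or non-numeric ports before touching the file.
  case "$port" in
    ''|*[!0-9]*) echo "port must be a number" >&2; return 1 ;;
  esac
  # Write to a temporary file first, then replace the original.
  sed -e '/<name>zeppelin\.server\.port<\/name>/{' \
      -e 'n' \
      -e "s#<value>[^<]*</value>#<value>${port}</value>#" \
      -e '}' "$site_file" > "$site_file.tmp" &&
    mv "$site_file.tmp" "$site_file"
}

# Example call (8090 is an arbitrary free port chosen for illustration):
# set_zeppelin_port conf/zeppelin-site.xml 8090
```

Restart the Zeppelin server after changing the port so the new value takes effect.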

Reference: Building Apache Zeppelin for MapR using Spark under YARN from our JCG partner Paul Curtis at the MapR blog.