Deployment Automation Of Docker Java Application On VSphere And Photon

Background

Java developers and DevOps professionals have long struggled to automate the deployment of enterprise Java applications. The complex nature of these applications usually meant that application dependencies and external integrations had to be re-configured each time an application was deployed in DEV/TEST environments.

Many solutions advertised the “model once, deploy anywhere” message for application deployments. In reality, however, there were always intricacies that made it very difficult to re-use an application template across both an on-premise vSphere virtual environment and an AWS environment, for example.

More recently, however, Docker containers popularized the idea of packaging application components into Linux Containers that can be deployed exactly the same on any Linux host as long as Docker Engine is installed.

Unfortunately, containerizing enterprise Java applications is still a challenge, mostly because existing application composition frameworks do not address complex dependencies, external integrations or auto-scaling workflows post-provision. Moreover, the ephemeral design of containers means that developers have to spin up new containers and re-create the complex dependencies & external integrations with every version update.

DCHQ, available in hosted and on-premise versions, addresses all of these challenges and simplifies the containerization of enterprise Java applications through an advanced application composition framework that extends Docker Compose with cross-image environment variable bindings, extensible BASH script plug-ins that can be invoked at request time or post-provision, and application clustering for high availability across multiple hosts or regions with support for auto scaling.

Once an application is provisioned, a user can monitor the CPU, Memory, & I/O of the running containers, get notifications & alerts, and perform day-2 operations like Scheduled Backups, Container Updates using BASH script plug-ins, and Scale In/Out. Moreover, out-of-box workflows that facilitate Continuous Delivery with Jenkins allow developers to refresh the Java WAR file of a running application without disrupting the existing dependencies & integrations.

In this blog, we will go over the end-to-end deployment automation of a Docker-based 3-tier Java application on Photon running on vSphere. DCHQ not only automates the deployment of Docker-based apps, but it also automates the provisioning of virtual machines (including Photon) on vSphere. We will cover:

- Registering the Cloud Provider for vSphere

- Creating a Cluster for vSphere

- Building the Machine Compose Template for Photon

- Deploying the 3-tier Java application on Photon Running on vSphere

- Accessing the In-Browser Terminal for Running Containers

- Monitoring the CPU, Memory & I/O of the Running Containers

- Scaling Out the Tomcat Application Server Cluster

- Enabling the Continuous Delivery Workflow with Jenkins to update the WAR file of the running applications when a build is triggered

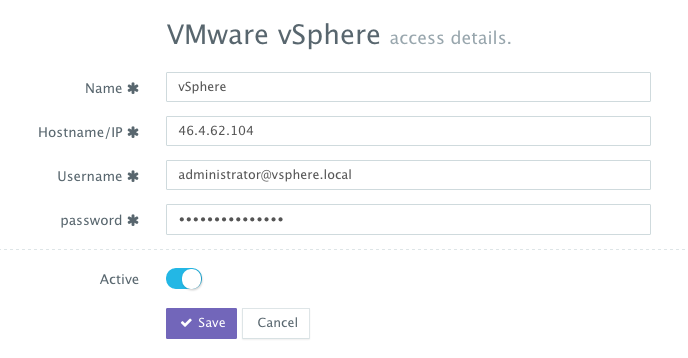

Registering a Cloud Provider for vSphere

A user can register a Cloud Provider to automate the provisioning and auto-scaling of clusters on 13 different cloud end-points including vSphere, OpenStack, CloudStack, Amazon Web Services, Rackspace, Microsoft Azure, DigitalOcean, HP Public Cloud, IBM SoftLayer, Google Compute Engine, and many others.

First, a user can register a Cloud Provider for vSphere by navigating to Manage > Repo & Cloud Provider and then clicking on the + button to select vSphere. The IP of vCenter along with the username and password need to be provided.

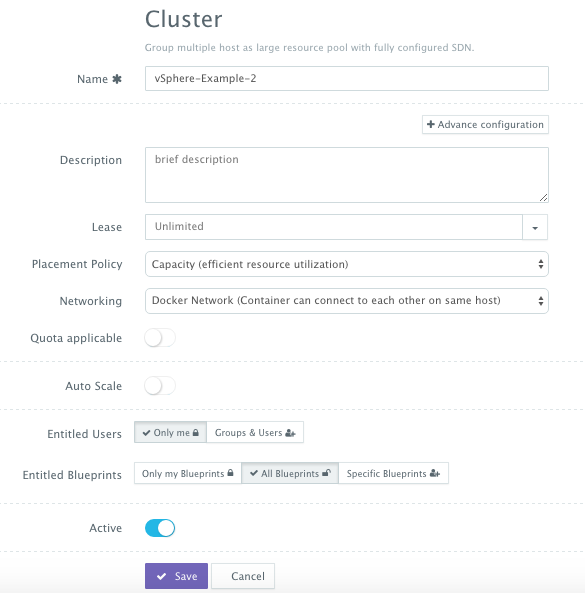

Creating a Cluster for vSphere

A user can then create a cluster of Linux hosts that could span hybrid clouds. This can be done by navigating to the Manage > Clusters page and then clicking on the + button. You can select a capacity-based placement policy, the required lease for this cluster, and the networking layer needed.

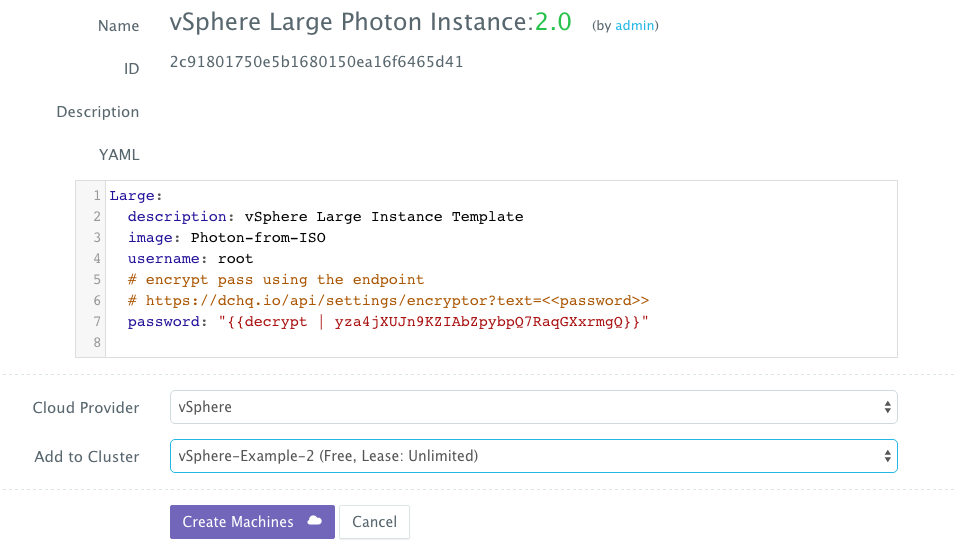

Building the Machine Compose Template for Photon

A user can now provision a number of Cloud Servers on the newly created cluster. This can be done in two ways. A user can follow the UI-based workflows by navigating to Manage > Hosts and then clicking on the + button to select vSphere.

The other option is to create a Machine Compose Template – using a simple YAML-based syntax to standardize deployments on any cloud. A user can navigate to Manage > Templates and then select Machine Compose. The template created for Photon includes the following parameters:

- image – the name of the actual VM template to use

- description – a generic description of the template

- username – the username configured for this VM

- password – the password configured for this VM. “{{decrypt | <encrypted_password>}}” can be used to keep the password secure.
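As a rough illustration of the four parameters above, the sketch below models a template as a Python dictionary and checks it before it would be submitted. The `validate_template` helper, the sample values, and the rule that a password should be wrapped in `{{decrypt | ...}}` are assumptions made for this example; they are not DCHQ's own validation logic or template syntax.

```python
import re

REQUIRED_KEYS = ("image", "description", "username", "password")
# Matches the {{decrypt | <encrypted_password>}} form described above.
DECRYPT_PATTERN = re.compile(r"^\{\{\s*decrypt\s*\|\s*\S+\s*\}\}$")


def validate_template(template: dict) -> list:
    """Return a list of human-readable problems (empty if none are found)."""
    problems = [f"missing parameter: {key}"
                for key in REQUIRED_KEYS if not template.get(key)]
    password = template.get("password", "")
    if password and not DECRYPT_PATTERN.match(password):
        problems.append("password is stored in plain text")
    return problems


# Hypothetical template values, for illustration only.
photon_template = {
    "image": "photon-template",
    "description": "Photon VM on vSphere",
    "username": "root",
    "password": "{{decrypt | 3nCrYpT3d}}",
}
print(validate_template(photon_template))  # prints []
```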

A user can then request this template from the self-service Library.

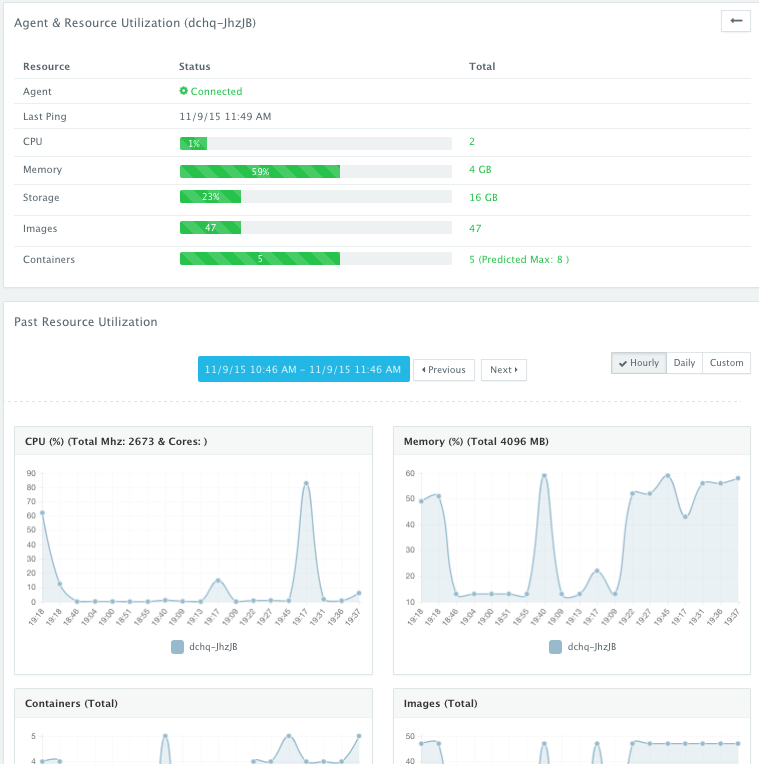

Once the virtual machine is provisioned, a user can navigate to Manage > Hosts and then click on the Monitoring icon next to the provisioned VM to track the historical performance of the VM. Collected metrics include CPU, Memory, and Disk Utilization, along with images pulled and containers running.

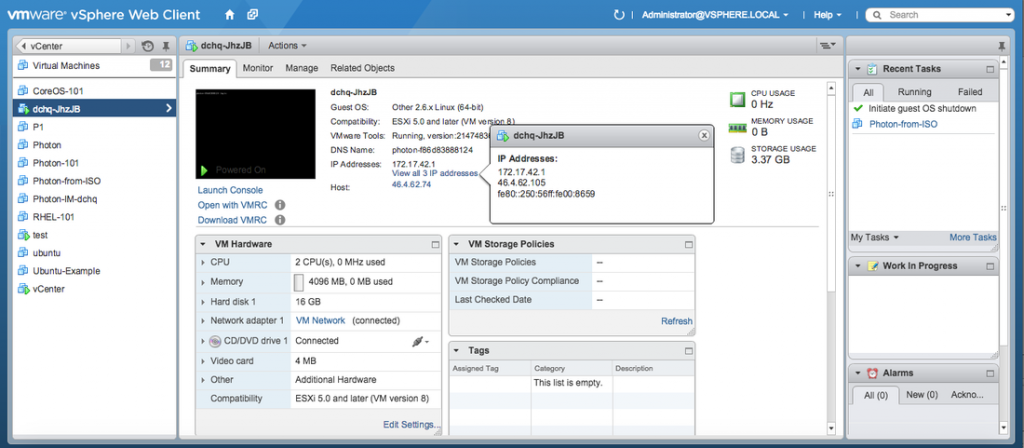

Once logged into the vSphere Web Client, a user can see the provisioned Photon VM in vCenter.

Deploying the 3-tier Java application on Photon Running on vSphere

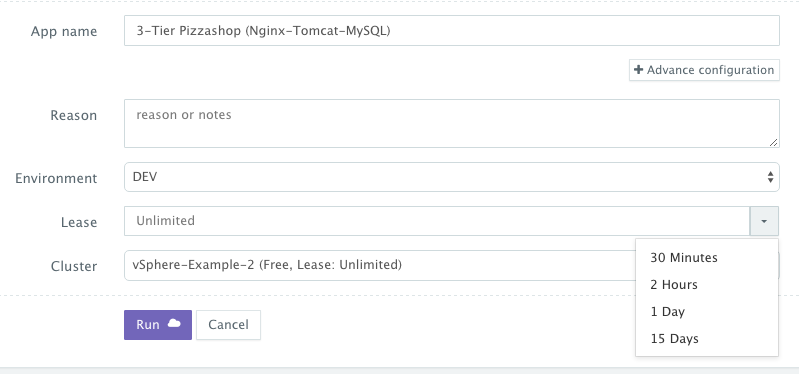

Once logged in to DCHQ (either the hosted DCHQ.io or on-premise version), a user can navigate to Library and then click on the Customize button to request the “3-Tier Pizzashop (Nginx-Tomcat-MySQL)” application.

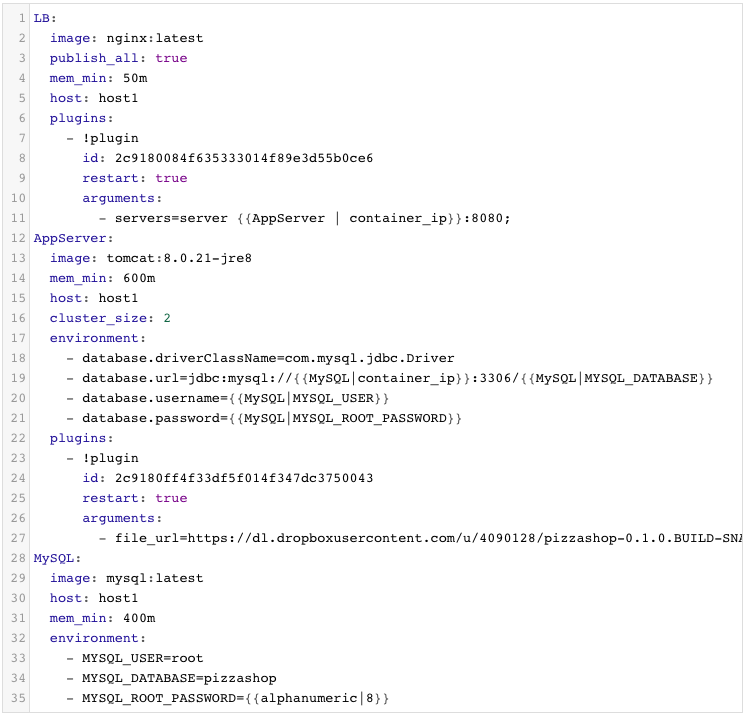

In this example, we have a multi-tier application consisting of Nginx (for load balancing), Tomcat (the clustered application server) and MySQL (as the database).

You will notice that Nginx is invoking a BASH script plug-in to add the container IPs of the application servers to the default.conf file dynamically (that is, at request time). Tomcat is also invoking a BASH script plug-in to deploy a Java WAR file from a specified URL.
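To make the load balancer step more concrete, here is a minimal sketch of the kind of text such a plug-in would need to generate. It is written in Python rather than BASH and is not the plug-in shipped with DCHQ; the `render_upstream` function, the upstream name, the sample IPs, and the 8080 port are all assumptions for illustration.

```python
def render_upstream(name: str, server_ips: list, port: int = 8080) -> str:
    """Build an Nginx `upstream` block listing one server per container IP."""
    lines = [f"upstream {name} {{"]
    lines += [f"    server {ip}:{port};" for ip in server_ips]
    lines.append("}")
    return "\n".join(lines)


# Hypothetical container IPs for a three-node Tomcat cluster.
tomcat_ips = ["10.0.0.11", "10.0.0.12", "10.0.0.13"]
print(render_upstream("tomcat_cluster", tomcat_ips))
```

Regenerating the whole block from the current list of IPs, rather than appending to the file, keeps the result the same no matter how many times the step is run.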

You will notice that the cluster_size parameter allows you to specify the number of containers to launch (with the same application dependencies).

The host parameter allows you to specify the host you would like to use for container deployments. Here are the values supported for the host parameter:

- host1, host2, host3, etc. – selects a host randomly within a data-center (or cluster) for container deployments

- <IP Address 1, IP Address 2, etc.> – allows a user to specify the actual IP addresses to use for container deployments

- <Hostname 1, Hostname 2, etc.> – allows a user to specify the actual hostnames to use for container deployments

- Wildcards (e.g. “db-*”, or “app-srv-*”) – to specify the wildcards to use within a hostname
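The host values listed above can be pictured as a filter over the hosts in a cluster. The following sketch is only an approximation of that idea: the `select_hosts` and `pick_random_host` helpers and the sample inventory are hypothetical, and shell-style wildcard matching via Python's `fnmatch` is an assumption about how patterns such as “app-srv-*” behave.

```python
import fnmatch
import random


def select_hosts(spec: str, inventory: dict) -> list:
    """Return the hostnames in `inventory` (hostname -> IP) that match `spec`.

    `spec` may be a wildcard pattern, an exact hostname, or an IP address.
    """
    return sorted(
        host for host, ip in inventory.items()
        if fnmatch.fnmatch(host, spec) or ip == spec
    )


def pick_random_host(inventory: dict, rng=random) -> str:
    """Mimic the host1/host2 style value: any host in the cluster will do."""
    return rng.choice(sorted(inventory))


# Hypothetical cluster inventory.
cluster = {
    "db-01": "10.0.1.5",
    "app-srv-01": "10.0.2.10",
    "app-srv-02": "10.0.2.11",
}
print(select_hosts("app-srv-*", cluster))  # prints ['app-srv-01', 'app-srv-02']
print(select_hosts("10.0.1.5", cluster))   # prints ['db-01']
```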

Additionally, a user can create cross-image environment variable bindings by making a reference to another image’s environment variable. In this case, we have made several bindings – including database.url=jdbc:mysql://{{MySQL|container_ip}}:3306/{{MySQL|MYSQL_DATABASE}} – in which the database container IP and name are resolved dynamically at request time and are used to configure the database URL in the application servers.
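The binding in this example can be thought of as a placeholder substitution performed once the referenced container is known. The sketch below shows one way that substitution might work; the `resolve_bindings` function, the regular expression, and the sample IP and database name are assumptions for illustration and do not reflect DCHQ's internal implementation.

```python
import re

# Matches {{Image|key}} with optional spaces around the pipe.
BINDING = re.compile(r"\{\{\s*([^|{}]+?)\s*\|\s*([^|{}]+?)\s*\}\}")


def resolve_bindings(value: str, images: dict) -> str:
    """Replace each {{Image|key}} with images[Image][key]."""
    def lookup(match):
        image, key = match.group(1), match.group(2)
        return str(images[image][key])
    return BINDING.sub(lookup, value)


# Hypothetical values known once the MySQL container has started.
images = {"MySQL": {"container_ip": "172.17.0.2", "MYSQL_DATABASE": "pizzashop"}}
template = "jdbc:mysql://{{MySQL|container_ip}}:3306/{{MySQL|MYSQL_DATABASE}}"
print(resolve_bindings(template, images))
# prints jdbc:mysql://172.17.0.2:3306/pizzashop
```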

Here is a list of supported environment variables:

- {{alphanumeric | 8}} – creates a random 8-character alphanumeric string. This is most useful for creating random passwords.

- {{<Image Name> | ip}} – allows you to enter the host IP address of a container as a value for an environment variable. This is most useful for allowing the middleware tier to establish a connection with the database.

- {{<Image Name> | container_ip}} – allows you to enter the name of a container as a value for an environment variable. This is most useful for allowing the middleware tier to establish a secure connection with the database (without exposing the database port).

- {{<Image Name> | container_private_ip}} – allows you to enter the internal IP of a container as a value for an environment variable. This is most useful for allowing the middleware tier to establish a secure connection with the database (without exposing the database port).

- {{<Image Name> | port_<Port Number>}} – allows you to enter the port number of a container as a value for an environment variable. This is most useful for allowing the middleware tier to establish a connection with the database. In this case, the port number specified needs to be the internal port number – i.e. not the external port that is allocated to the container. For example, {{PostgreSQL | port_5432}} will be translated to the actual external port that will allow the middleware tier to establish a connection with the database.

- {{<Image Name> | <Environment Variable Name>}} – allows you to enter the value of an image’s environment variable into another image’s environment variable. The use cases here are endless – as most multi-tier applications will have cross-image dependencies.
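Two of the variables in the list above, the random string and the port lookup, are easy to picture in code. This is a sketch under stated assumptions: `random_alphanumeric`, `external_port`, and the sample port mapping are hypothetical names and values, and the use of Python's `secrets` module is a choice made here, not a description of how DCHQ generates values.

```python
import secrets
import string

ALPHABET = string.ascii_letters + string.digits


def random_alphanumeric(length: int) -> str:
    """Return a random alphanumeric string, e.g. for {{alphanumeric | 8}}."""
    return "".join(secrets.choice(ALPHABET) for _ in range(length))


def external_port(port_map: dict, internal_port: int) -> int:
    """Translate a container's internal port to the external port allocated on the host."""
    return port_map[internal_port]


# Hypothetical mapping: internal port 5432 is published on host port 32768.
postgres_ports = {5432: 32768}
password = random_alphanumeric(8)
print(len(password), password.isalnum())    # prints 8 True
print(external_port(postgres_ports, 5432))  # prints 32768
```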

A user can select the Cluster created for vSphere before clicking on Run.

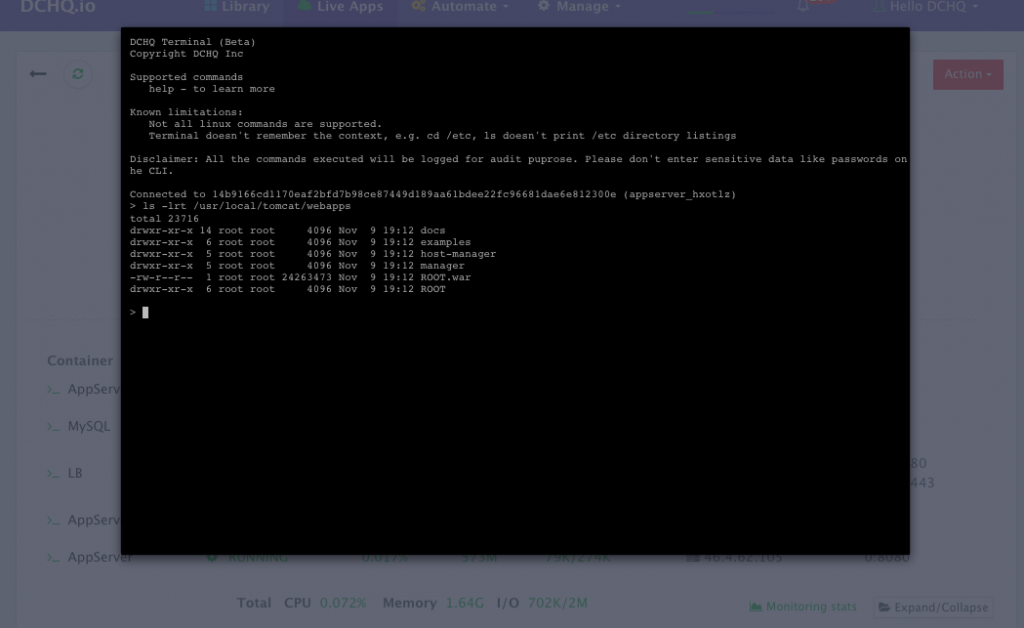

Accessing the In-Browser Terminal for the Running Containers

A command prompt icon should be available next to the containers’ names on the Live Apps page. This allows users to enter the container using a secure communication protocol through the agent message queue. A white list of commands can be defined by the Tenant Admin to ensure that users do not make any harmful changes on the running containers.

In this case, we used the command prompt to make sure that the Java WAR file was deployed under the /usr/local/tomcat/webapps/ directory.
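The white list mentioned above can be pictured as a check that runs before a command reaches the container. The sketch below is one possible shape for such a check; the `is_allowed` function, the rejected shell operators, and the sample list of commands are assumptions for illustration, not DCHQ's actual filtering rules.

```python
import shlex


def is_allowed(command_line: str, whitelist: set) -> bool:
    """Allow a command only if its executable is on the whitelist.

    Shell operators are rejected so that a permitted command cannot be
    chained into one that is not permitted.
    """
    if any(token in command_line for token in (";", "|", "&", "`", "$(", ">", "<")):
        return False
    try:
        parts = shlex.split(command_line)
    except ValueError:  # e.g. unbalanced quotes
        return False
    return bool(parts) and parts[0] in whitelist


# Hypothetical whitelist a Tenant Admin might define.
allowed = {"ls", "cat", "ps", "tail"}
print(is_allowed("ls /usr/local/tomcat/webapps/", allowed))  # prints True
print(is_allowed("rm -rf /usr/local/tomcat", allowed))       # prints False
print(is_allowed("ls; rm -rf /", allowed))                   # prints False
```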

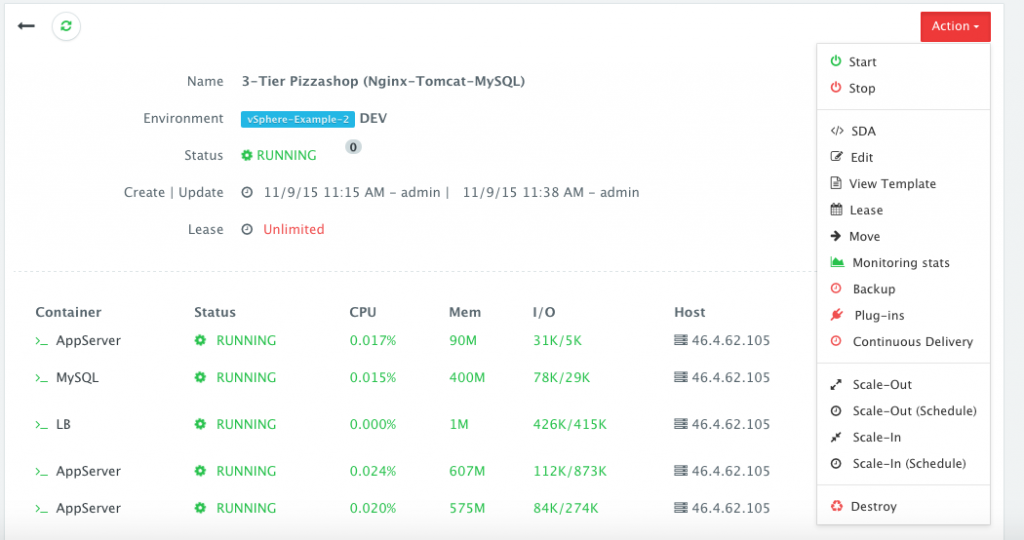

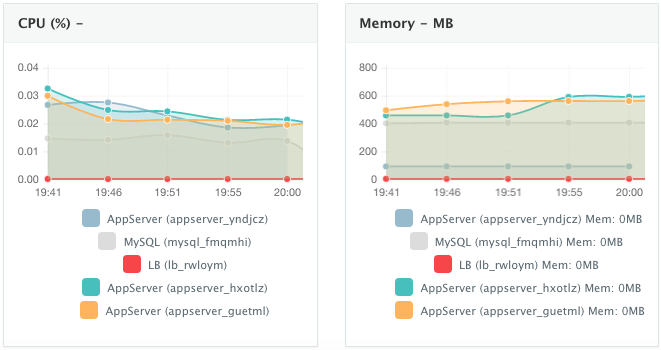

Monitoring the CPU, Memory & I/O Utilization of the Running Containers

Once the application is up and running, our developers monitor the CPU, Memory, & I/O of the running containers to get alerts when these metrics exceed a pre-defined threshold. This is especially useful when our developers are performing functional & load testing.
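The threshold-based alerting described above boils down to comparing each collected metric with a limit. A minimal sketch of that comparison follows; the `breached_metrics` function, the metric names, and the percentage values are hypothetical and chosen only to illustrate the idea.

```python
def breached_metrics(sample: dict, thresholds: dict) -> list:
    """Return the names of metrics whose value exceeds its threshold."""
    return sorted(
        name for name, limit in thresholds.items()
        if sample.get(name, 0) > limit
    )


# Hypothetical thresholds and one utilization sample, in percent.
thresholds = {"cpu": 80, "memory": 75, "io": 90}
sample = {"cpu": 91.5, "memory": 62.0, "io": 40.3}
print(breached_metrics(sample, thresholds))  # prints ['cpu']
```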

A user can perform historical monitoring analysis and correlate issues to container updates or build deployments. This can be done by clicking on the Actions menu of the running application and then on Monitoring. A custom date range can be selected to view CPU, Memory and I/O historically.

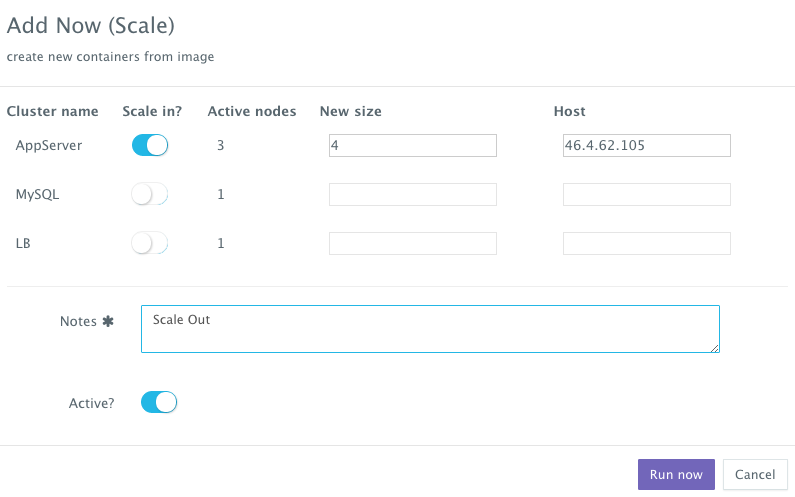

Scaling Out the Tomcat Application Server Cluster

If the running application becomes resource constrained, a user can scale out the application to meet the increasing load. Moreover, a user can schedule the scale out during business hours and the scale in during weekends, for example.
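A scale schedule of the kind just described amounts to a rule that maps a point in time to a cluster size. The sketch below assumes business hours of Monday to Friday, 09:00 to 17:59, and sizes of 4 and 3; those values and the `desired_cluster_size` function are illustrative assumptions, not DCHQ settings.

```python
from datetime import datetime


def desired_cluster_size(now: datetime, peak: int = 4, off_peak: int = 3) -> int:
    """Return the peak size during business hours, otherwise the off-peak size.

    Business hours are assumed to be Monday to Friday, 09:00 to 17:59.
    """
    is_weekday = now.weekday() < 5  # Monday is 0, Sunday is 6
    in_hours = 9 <= now.hour < 18
    return peak if is_weekday and in_hours else off_peak


print(desired_cluster_size(datetime(2024, 1, 3, 10, 0)))  # Wednesday morning, prints 4
print(desired_cluster_size(datetime(2024, 1, 6, 10, 0)))  # Saturday morning, prints 3
```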

To scale out the cluster of Tomcat servers from 3 to 4, a user can click on the Actions menu of the running application and then select Scale Out. A user can then specify the new size for the cluster and then click on Run Now.

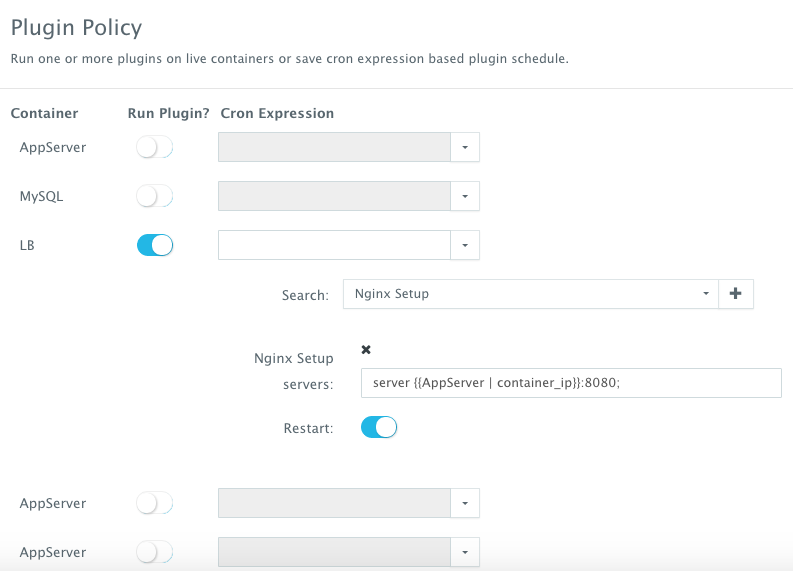

Once the scale out is complete, a user can execute a BASH plug-in to update Nginx’s default.conf file so that it’s aware of the new application server added. The BASH script plug-ins can also be scheduled to accommodate use cases like cleaning up logs or updating configurations at defined frequencies.

To execute a plug-in on a running container, a user can click on the Actions menu of the running application and then select Plug-ins. A user can then select the load balancer (Nginx) container, search for the plug-in that needs to be executed, and enable container restart using the toggle button. The default argument for this plug-in will dynamically resolve all the container IPs of the running Tomcat servers and add them to the default.conf file.

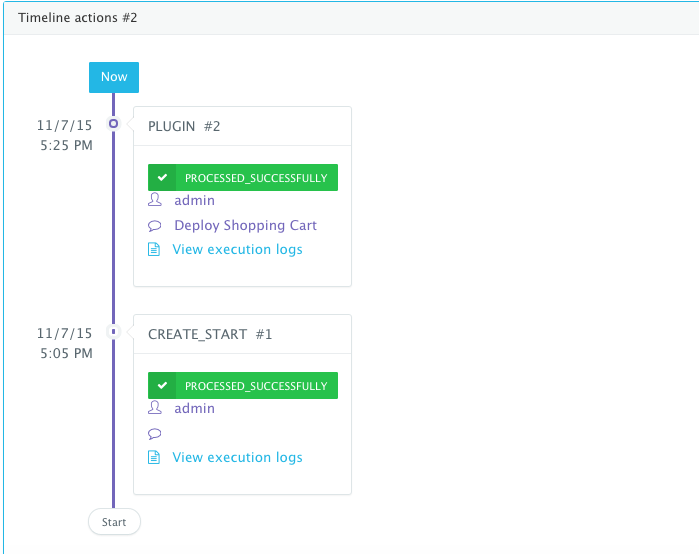

An application time-line tracks all the changes made to the application.

Enabling the Continuous Delivery Workflow with Jenkins to Update the WAR File of the Running Application when a Build is Triggered

For developers wishing to follow the “immutable” containers model by rebuilding Docker images containing the application code and spinning up new containers with every application update, DCHQ provides an automated build feature that allows developers to automatically create Docker images from Dockerfiles or private GitHub projects containing Dockerfiles.

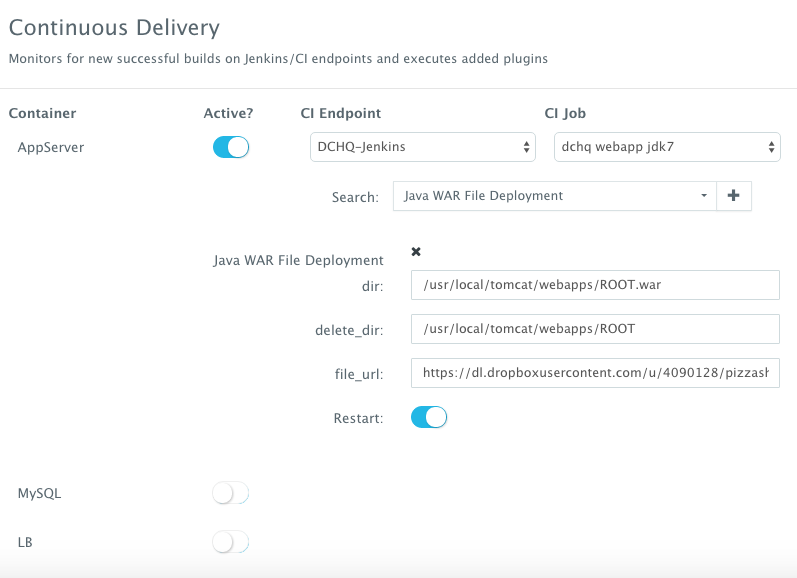

However, many developers may wish to update the running application server containers with the latest Java WAR file instead. For that, DCHQ allows developers to enable a continuous delivery workflow with Jenkins. This can be done by clicking on the Actions menu of the running application and then selecting Continuous Delivery. A user can select a Jenkins instance that has already been registered with DCHQ, the actual Job on Jenkins that will produce the latest WAR file, and then a BASH script plug-in to grab this build and deploy it on a running application server. Once this policy is saved, DCHQ will grab the latest WAR file from Jenkins any time a build is triggered and deploy it on the running application server.

As a result, developers will always have the latest Java WAR file deployed on their running containers in DEV/TEST environments.
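The two steps in this workflow, locating the latest build and placing it on the application server, can be sketched as follows. This is Python rather than a BASH plug-in and is not DCHQ's implementation: the function names, the Jenkins host, the job name, and the artifact path are hypothetical, and the URL layout assumes Jenkins' standard `lastSuccessfulBuild/artifact` path. The demonstration copies a local file instead of downloading from a real Jenkins server.

```python
import shutil
import tempfile
from pathlib import Path
from urllib.parse import quote


def war_artifact_url(jenkins_url: str, job: str, artifact_path: str) -> str:
    """Build the URL of an artifact from a job's last successful build."""
    return (f"{jenkins_url.rstrip('/')}/job/{quote(job)}"
            f"/lastSuccessfulBuild/artifact/{quote(artifact_path)}")


def deploy_war(war_file: Path, webapps_dir: Path) -> Path:
    """Copy the WAR next to its target, then rename it into place.

    Renaming within one directory avoids exposing a half-written WAR file.
    """
    target = webapps_dir / war_file.name
    staging = webapps_dir / (war_file.name + ".tmp")
    shutil.copyfile(war_file, staging)
    staging.replace(target)
    return target


# Hypothetical Jenkins instance, job, and artifact path.
print(war_artifact_url("http://jenkins.example.com:8080/", "pizzashop", "target/ROOT.war"))
# prints http://jenkins.example.com:8080/job/pizzashop/lastSuccessfulBuild/artifact/target/ROOT.war

# Local demonstration using temporary directories instead of a real container.
with tempfile.TemporaryDirectory() as tmp:
    build = Path(tmp) / "ROOT.war"
    build.write_bytes(b"new build")
    webapps = Path(tmp) / "webapps"
    webapps.mkdir()
    print(deploy_war(build, webapps).name)  # prints ROOT.war
```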

Conclusion

Containerizing enterprise Java applications is still a challenge, mostly because existing application composition frameworks do not address complex dependencies, external integrations or auto-scaling workflows post-provision. Moreover, the ephemeral design of containers means that developers have to spin up new containers and re-create the complex dependencies & external integrations with every version update.

DCHQ, available in hosted and on-premise versions, addresses all of these challenges and simplifies the containerization of enterprise Java applications through an advanced application composition framework that facilitates cross-image environment variable bindings, extensible BASH script plug-ins that can be invoked at request time or post-provision, and application clustering for high availability across multiple hosts or regions with support for auto scaling.

Sign Up for FREE on http://DCHQ.io or download DCHQ On-Premise to get access to out-of-box multi-tier Java application templates along with application lifecycle management functionality like monitoring, container updates, scale in/out and continuous delivery.

Reference: Deployment Automation Of Docker Java Application On VSphere And Photon from our JCG partner Amjad Afanah at the DCHQ.io blog.