Apache Spark: A Quick Start With Python

Spark Overview

As per the official website, “Apache Spark is a fast and general engine for large scale data processing”

It is best used with clustered environment where the data processing task or job is split to run on multiple computers or nodes quickly and efficiently. It claims to run program 100 times faster than Hadoop platform.

Spark uses something called as RDD (Resilient Distributed Dataset) object to process and filter out the data. RDD object provide various useful functions to process the data in distributed fashion. The beauty of Spark is you need not understand how it distributes or splits the data across nodes in a cluster. As a developer, you only focus on writing a RDD function to process and transform the data. Spark is natively built using Scala language. But you can use either Java, Python or Scala to write your Spark program.

Spark is composed of different modules or components one can use for their data processing needs. The component model of Spark is as follows:

The primary component is the Spark Core that uses RDD to process (map and reduce) the data in a distributed environment. The other components built on Spark Core are:

- Spark Stream: Used to analyse real time data streams

- Spark SQL: One can write SQL based queries using Hive context to process data

- MLLib: Support Machine Learning algorithms and tools to train your data

- GraphX: Create data graphs and perform operations like transform and join

Setting Up Environment

First install JDK 8 and set the JAVA_HOME environment variable pointing to JDK home. For Python, you could install popular IDEs like Enthought Canopy or Anaconda or you can install basic Python from the python.org website. For Spark, download and install the latest version from the official Apache Spark website.

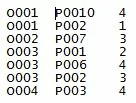

Once installed, set the SPARK_HOME environment variable to point to Spark home folder. Also make sure to set the PATH variable to point to respective bin folders: JAVA_HOME\bin and SPARK_HOME\bin. Next, create a sample dataset that we will use to process using Spark. The dataset will signify the collection of product ratings. The format of the data is as follows:

First column represents Order ID, the second column is Product ID and the third column depicts Ratings. It tells you product ratings as part of every order. We will process the data to find out total products under each rating. The above is just a small subset of data to make you understand how easy it is to process with Spark. You may want to download or generate a real life data to implement similar use case. Store this data file in a folder (say, /spark/data). It can be folder of your choice.

A Look At The Code

01 02 03 04 05 06 07 08 09 10 11 12 | from pyspark import SparkConf, SparkContextimport collectionsconf = SparkConf().setMaster("local").setAppName("Product Ratings")sc = SparkContext(conf = conf)rows = sc.textFile("file:///spark/data/product-ratings-data.py")ratings = rows.map(lambda x: x.split()[2])output = ratings.countByValue()sortedOutput = collections.OrderedDict(sorted(output.items()))for key, value in sortedOutput.items():print("%s %i" % (key, value)) |

Python integrates with Spark through the use of pyspark module. You will need two core classes from this module: SparkConf and SparkContext. SparkContext is used to create RDD object. SparkConf is used to configure or set application properties. The fist propety states whether our Spark job will use cluster (distributed) environment or on a single machine. We can set it to local for now as this is the basic program the we will run to get an overview of Spark and therefore we don’t need distributed environment. We also set the name of the application and we will call it ‘Basic Spark App’. If you are using Web UI to manage your Spark jobs, this name is displayed for your job. You then obtain the SparkContext (sc) object from the configured SparkConf object.

Load the data

1 | rows = sc.textFile("file:///spark/data/product-ratings-data") |

The next code snippet loads the data from the local file system. We already have a file stored in the spark/data folder. The file contains the product rating information that we will load to find the total number of product under a specific rating. The textFile() function is used to load initial data from the specified file path and it returns a Spark RDD object – rows. This is the core object we will use to further process and transform our data. This RDD object will contain data in the form of line record. Every line in the file will be a record or value in the RDD object.

Transform the data

1 2 | ratings = rows.map(lambda x: x.split()[2])output = ratings.countByValue() |

The map() function uses lambda feature (inner function defined as lambda) to split the line of data by space and get the third column (index starts from 0 and therefore 2 becomes third column). The third column so extracted is the ratings column. The split will be executed on each line and the resulting output will be stored in a new RDD object, we call it as rating.

Our new ratings RDD will now hold the ratings as shown below:

Now to find the total count of products for each rating, we will use countByValue() function. It will count each repeated ratings and place the count value along side each rating. The final output will look like the following.

You can run you python program using spark-submit application as show below:

spark-submit product-ratings-data.py

There are total three products with rating 4, two products with rating 3 and one product with rating 1 and 2 respectively. The countByValue() function will return a normal Python collection object which can be eventually iterated to display the results.

| Reference: | Apache Spark: A Quick Start With Python from our JCG partner Rajeev Hathi at the TECH ORGAN blog. |