Reduce Production Bugs with Continuous Integration

Continuous integration is so often preached from the pulpit of careful software craftsmanship that you might think it’s nothing more than Kool-Aid™. However, continuous integration is neither transitory nor hollow; it’s a valuable and scientifically verifiable means of reducing production bugs.

Today, let’s step through how CI does that. But before we do, consider this quote from Kent Beck, taken from Extreme Programming Explained:

DCI (Defect Cost Increase) is one of the few empirically verifiable truths about software development: The sooner you find a defect, the cheaper it is to fix…However, most defects end up costing more than it would have cost to prevent them. Defects are expensive when they occur, both the direct costs of fixing the defects and the indirect costs because of damaged relationships, lost business, and lost development time.

Consider the core assertion in that quote: the sooner a bug is identified, the cheaper it is to fix. Why is that? Because the more recently a bug was created, the more available the knowledge about why it was introduced and by whom.

Let’s consider a hypothetical example, one any software developer can relate to. What’s easier to debug, a defect created this morning or one created three months ago? Or even one month ago? If we’re all honest with each other, I’d be willing to put money on the fact that the bug from this morning would be everyone’s answer, primarily for two reasons.

First, the bug was created this morning. Regardless of whether it was one of us who created it or someone else on our team, it would still be relatively fresh in someone’s mind. As a result, we’d be able to look at the code and recall what we were trying to achieve with the code in question. It’s easier to remember what brought us there and perhaps what we should have done.

Second, it’s less likely that any other code depends on our bug. Given that recency and the lack of subsequent code, it’s possible that we could correct it quite quickly. So it’s safe to believe that Kent Beck’s assertion is correct. That’s why I’m a firm believer that continuous integration, regardless of the project or organization, reduces production bugs. I’ve seen it in my work and in the work of many colleagues.

Let’s step through several of the routine practices of CI, and you tell me if you disagree that these techniques would significantly reduce production bugs.

Maintain a Single-Source Repository

A single-source repository improves collaboration. Why? Because more than one developer can work on a given piece of code, whether that’s implementing a new feature or correcting a bug, at the same time. What’s more, they can do so with greater confidence.

Consider this: I’m working on a piece of code that calculates multivariate sales tax for an ecommerce shopping basket. As a result of the code being stored under version control, I can make my changes in a feature branch entirely independent of any other developer in the team. Any number of other developers can do the same, working on, or around, that same segment of code. In this scenario, we can all merge our changes with near-complete confidence.

This isn’t to say that code merges will be smooth. We should all know that this is an unrealistic expectation. But we have a system in place that facilitates merging, one that allows us to handle merge conflicts and back out if necessary.

Automate The Build

Now that we’re all working from the same source and all the changes are centralized, we can create an automated build process around it. This needn’t be complex.

For example, it could be as simple as syncing a copy of the changed source files from the source repository to the remote server — let’s say there’s only one — and clearing out cache directories. Or it could involve the deployment of the latest release to several servers, running a database migration, priming cache servers, along with a lot more.

Regardless of specifics, the build process should be automated because it removes the human factor from the equation. Let’s check our egos at the door and admit that this is a prime source of mistakes. What’s more, this is precisely why we have computers: for repetitive and automated processes. They excel at running the same, often tedious, process repeatedly at all times of the day, at all times of the year.

More specifically, an automated build process removes the possibility of random issues cropping up intermittently, the kinds that are hard to track down. If something does go wrong, it’s easier to identify and correct.
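As a rough sketch of the simple case described above, the Python script below runs a fixed list of build steps in order and stops at the first failure. The function `run_build` and the placeholder steps `sync_sources` and `clear_cache` are hypothetical names invented for this illustration; a real build would call your own tooling instead.

```python
from typing import Callable, List, Tuple

# A step is a name plus a function that returns True on success.
Step = Tuple[str, Callable[[], bool]]


def run_build(steps: List[Step]) -> Tuple[bool, List[str]]:
    """Run each step in order and stop at the first one that fails.

    Returns (succeeded, log), where log records the outcome of every
    step that was actually run.
    """
    log: List[str] = []
    for name, action in steps:
        try:
            ok = action()
        except Exception as exc:  # a crashing step counts as a failure
            log.append(f"{name}: error ({exc})")
            return False, log
        if not ok:
            log.append(f"{name}: failed")
            return False, log
        log.append(f"{name}: ok")
    return True, log


# Hypothetical placeholder steps, for illustration only.
def sync_sources() -> bool:
    return True


def clear_cache() -> bool:
    return True


succeeded, log = run_build([
    ("sync sources", sync_sources),
    ("clear cache", clear_cache),
])
print("\n".join(log))
print("build succeeded" if succeeded else "build failed")
```

Because the steps always run in the same order and the log names the step that failed, a broken build points directly at where to look.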

Make Your Build Self-Testing

When a build is self-testing, we’re able to get near-instant feedback when an introduced change causes defects. It immediately flags that something has gone wrong.

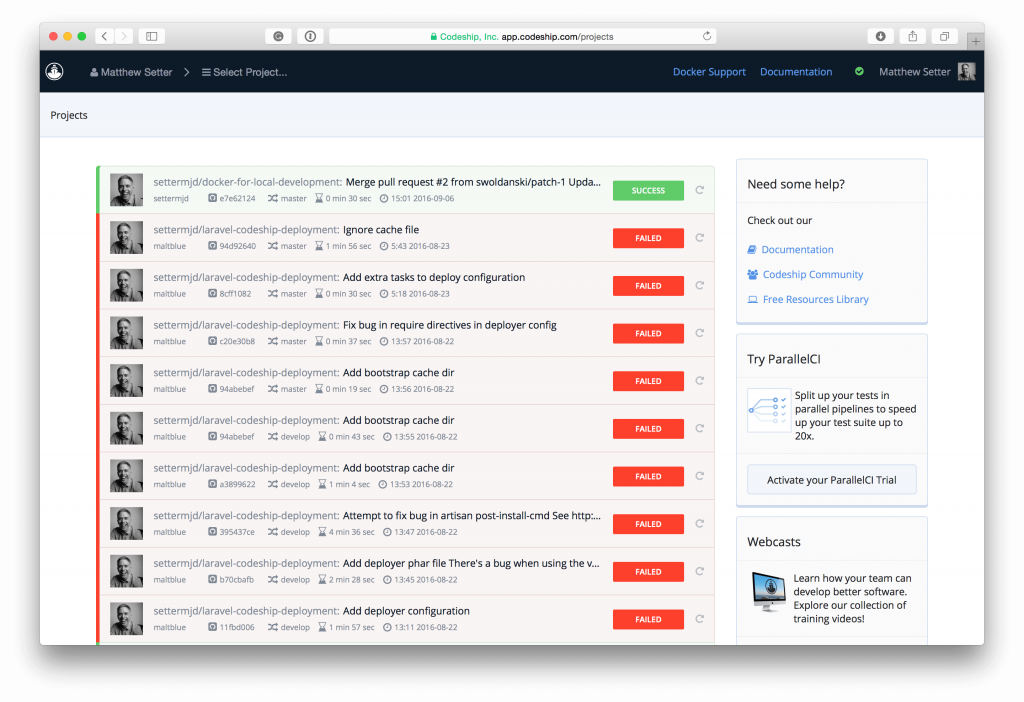

Take a simplistic example, which you can see above. If everything’s green, then we know we can continue with a deployment or with building the next feature. If the build’s red, we know we need to back out the recent change. There’s no need to guess, to infer, to go on gut feelings. We know if a change worked or not.

Given that a self-testing build has these advantages, it’s essential to have as high a level of code coverage as possible. If you only have a small degree of coverage, not every code path is tested. That means it’s not always clear if a change has caused a defect in a seemingly unrelated part of the application.

However, even with a low degree of coverage, code changes can be verified. What’s more, with a self-testing build, we find out if something broke before a client or customer does. For a solid reputation, this is invaluable.
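To make the idea concrete, here is a minimal sketch of a self-testing build in Python, using the standard `unittest` module. The `sales_tax` function is a deliberately simplified, hypothetical stand-in for the shopping basket tax calculation mentioned earlier, and `build_is_green` is an invented helper name; neither is taken from a real project.

```python
import unittest
from decimal import Decimal, ROUND_HALF_UP
from typing import List


def sales_tax(subtotal: Decimal, rates: List[Decimal]) -> Decimal:
    """Sum the tax owed across several rates, rounded to cents."""
    if subtotal < 0:
        raise ValueError("subtotal must not be negative")
    total = sum((subtotal * rate for rate in rates), Decimal("0"))
    return total.quantize(Decimal("0.01"), rounding=ROUND_HALF_UP)


class SalesTaxTest(unittest.TestCase):
    def test_combines_rates(self):
        # 100.00 taxed at 5% and at 2.5% should come to 7.50 in total.
        self.assertEqual(
            sales_tax(Decimal("100.00"), [Decimal("0.05"), Decimal("0.025")]),
            Decimal("7.50"),
        )

    def test_rejects_negative_subtotal(self):
        with self.assertRaises(ValueError):
            sales_tax(Decimal("-1"), [Decimal("0.05")])


def build_is_green() -> bool:
    """Run the suite and report whether every test passed."""
    suite = unittest.defaultTestLoader.loadTestsFromTestCase(SalesTaxTest)
    result = unittest.TextTestRunner(verbosity=0).run(suite)
    return result.wasSuccessful()


print("green" if build_is_green() else "red")
```

If a later change breaks the rounding or drops the negative check, the build turns red on the very next run, well before a customer sees a wrong total.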

Keep The Build Fast

When the build is fast, we have a rapid feedback cycle. We don’t have to wait long to test our assumptions. This reduces technical risk, since we quickly know if a feature is going to work or if a fix actually corrected a defect. We don’t have to endure long integration cycles to know if the work was valuable.

If a build isn’t fast, however, we may invest weeks, months even, only to find out that the work was wasted. What’s more, it increases the likelihood that the CI process will be considered pointless or not worth the effort. Trust in CI can begin to break down, leading to the process no longer being used and eventually being discarded. When that happens, a team might go back to its old way of working, with no code coverage, poor visibility into a project’s progress, and bugs.

Test In A Clone Of The Production Environment

When variances exist between development and production environments, bugs can occur. These can arise because of different versions of third-party libraries, language extensions, operating systems, and services such as web, database, and caching servers.

Using PHP as an example, an application could be in development around one release of a caching library, yet use a different version in production. When the tests run, the build succeeds. However, users report bugs following a recent release. Given that, it’s essential that the environment in which the tests are run be as identical to the production environment as possible.

I say “as identical as possible” deliberately. Unless you’re testing on the production environment itself, it won’t be exactly identical. That’s to be expected. There will be differences in the physical hardware because the testing environment might be a virtual machine, whereas production might be a bare metal environment. However, the software must be the same. This isn’t hard to achieve in this day and age, with tools such as VirtualBox, Vagrant, Ansible, and Docker available for free and backed by copious documentation.

Let’s say that we’re deploying a web-based application to DigitalOcean or Heroku, backed by services on Amazon S3. But, in development, we’re using virtual machines backed by Vagrant and VirtualBox. Regardless of the environment, we could use Ansible as our provisioner and let it adapt to each environment accordingly.

The result is that it will provision the same setup, which includes the same operating system (and version), the same runtimes, the same extensions, the same third-party dependencies, at the same respective versions, configured in the same ways. Other than more memory, drive space, and CPU cores, there should be no discernible differences. If there’s a bug, it shouldn’t take deploying to production to find it.
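One way to check that claim rather than assume it is to compare a list of installed versions from each environment. The sketch below is only an illustration: `version_drift` is a hypothetical helper, and the component names and version numbers in the two manifests are invented. How you collect the real manifests depends on your stack.

```python
from typing import Dict, Tuple


def version_drift(
    testing: Dict[str, str], production: Dict[str, str]
) -> Dict[str, Tuple[str, str]]:
    """Return every component whose version differs between environments.

    A component that is absent from one side is reported as "(missing)".
    """
    drift: Dict[str, Tuple[str, str]] = {}
    for name in sorted(set(testing) | set(production)):
        test_version = testing.get(name, "(missing)")
        prod_version = production.get(name, "(missing)")
        if test_version != prod_version:
            drift[name] = (test_version, prod_version)
    return drift


# Hypothetical manifests, for illustration only.
testing_env = {"runtime": "7.4", "caching-library": "2.1", "os": "20.04"}
production_env = {"runtime": "7.4", "caching-library": "2.0", "os": "20.04"}

for name, (test_version, prod_version) in version_drift(
    testing_env, production_env
).items():
    print(f"{name}: testing has {test_version}, production has {prod_version}")
```

Running a check like this as part of the build, and failing the build when the result is not empty, would surface the mismatched caching library from the earlier example before users do.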

Make It Easy For Anyone To Get The Latest Executable

Of course, I don’t actually work from the perspective of an executable, as the software I develop is web-based. If you’re in the same position, we could reword this section as: Make It Easy For Anyone To Get A Running Instance Of The Application. Regardless, the same principle applies.

When it’s easy to either get the latest executable or set up a running copy of the latest development release, it’s abundantly clear just how much functionality has been completed and how much is left to go. Anyone within the organization can know whether a feature is truly complete and can be signed off, or whether it contains a defect that needs correction. This, in turn, reduces organizational risk and increases confidence in the quality of the software being shipped to the client or user.

Progress is easy to measure. It’s also easier to identify where problems arise.

Everyone Can See What’s Happening

Perhaps more important than individual software defects is transparency. Being able to see what’s going on at any given point in time, whether tests are failing or there was a merge conflict, helps the team identify problems and track progress.

I can’t speak for your organization, but I’m a believer in teams where everyone’s involved, from the project manager who may have no software development experience to the junior developer who’s just been onboarded. Everyone is a stakeholder, not just developers. Domain knowledge (such as how a claims process works in an insurance firm, how SEPA works in a bank, the history of the organization, or mandatory legal constraints) is as valuable as coding skills.

Without this knowledge, code can only be built on flawed assumptions. Developers usually aren’t domain experts, so they need that input to avoid issues that can lead to legal trouble or a poor user experience. It’s all too common, unfortunately, for assumptions to be made as to why a product is falling behind deadlines, for blame to be placed, and for finger pointing to begin. Transparency helps reduce that. By knowing what’s happening, changes can be made accordingly and based on informed understanding, not feelings and egos.

Automate Deployment

Automated deployments go largely hand-in-hand with automated builds. When people are removed from the equation, when a process is no longer manual, then bugs caused by people are removed. What’s more, it brings predictability and greater reliability into the equation as well. Once the deployment process has been mapped out and a suitable deployment tool has been chosen, then it can almost become a set-and-forget scenario.

This can happen regardless of the level of sophistication involved in the deployment process. If you use a deployment tool such as Codeship along with provisioning tools like Ansible, then it doesn’t matter where the application’s deployed or what the production architecture is. The application can be deployed the same way, every time, and it can be performed by anyone, whether that’s the new junior developer, a project manager, or a personal assistant.

The mechanics of the process needn’t be known. When an automated build passes and is allowed to be pushed out to production, the process can be initiated by anyone. And the things that commonly cause well-tested applications to fail on deploy are taken care of: database migrations aren’t forgotten; cache warm-ups aren’t overlooked; credentials aren’t messed up or accidentally placed somewhere where anyone can find them; library upgrades aren’t overlooked; operating system patches aren’t skipped. The list could go on.

In Conclusion

Continuous integration isn’t a panacea. It won’t magically make your software defect free. That’s a utopian ideal that will likely never be attained. However, by diligently implementing CI, your organization can quickly and accurately identify and correct defects long before a client or user could ever find out about them.

It’s not a quick win. It’s a journey of growth. But it’s one which is worth the investment and dedication.

Reference: Reduce Production Bugs with Continuous Integration, from our JCG partner Matthew Setter at the Codeship Blog.