“Knative Serving” for Spring Boot Applications

I got a chance to try Knative’s Serving feature to deploy a Spring Boot application, and this post documents the sample and the approach I took.

I don’t understand the internals of Knative enough yet to have an opinion on whether this approach is better than the deployment + services + ingress based approach.

One feature that is awesome is autoscaling: based on the load, Knative Serving increases or decreases the number of pods in the “Deployment” handling the requests.
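To make the scaling idea concrete, here is a minimal sketch of concurrency-based scaling arithmetic. It assumes the autoscaler aims for a fixed number of in-flight requests per pod. The function name `desired_pods` and the target of 10 are illustrative assumptions, not Knative's actual implementation or defaults.

```shell
#!/usr/bin/env bash

# desired_pods CONCURRENT TARGET
# Hypothetical helper: number of pods needed so that each pod handles
# at most TARGET in-flight requests (ceiling division).
desired_pods() {
  local concurrent=$1 target=$2
  if (( concurrent <= 0 )); then
    # No traffic: nothing needs to run, so the count drops to zero.
    echo 0
    return
  fi
  echo $(( (concurrent + target - 1) / target ))
}

desired_pods 50 10   # prints 5
desired_pods 0 10    # prints 0
```

Under these assumptions, zero traffic maps to zero pods, which is consistent with no pods being visible before the first request arrives.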

Details of the Sample

My entire sample is available here and is largely based on the Java sample available with the Knative Serving documentation. I used Knative with a minikube environment to try the sample.

Deploying to Kubernetes/Knative

Assuming that a Kubernetes environment with Istio and Knative has been set up, the way to run the application is to deploy a Kubernetes manifest this way:

    apiVersion: serving.knative.dev/v1alpha1
    kind: Service
    metadata:
      name: sample-boot-knative-service
      namespace: default
    spec:
      runLatest:
        configuration:
          revisionTemplate:
            spec:
              container:
                image: bijukunjummen/sample-boot-knative-app:0.0.1-SNAPSHOT

The image “bijukunjummen/sample-boot-knative-app:0.0.1-SNAPSHOT” is publicly available via Docker Hub, so this sample should work out of the box.

Applying this manifest:

    kubectl apply -f service.yml

should register a Knative Serving Service resource with Kubernetes. This Service resource manages the lifecycle of the other Knative resources (configuration, revision, route). Its details can be viewed using the following command, and if anything goes wrong, the problem should show up in the output:

    kubectl get services.serving.knative.dev sample-boot-knative-service -o yaml
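The configuration, revision and route resources managed by the Service can be listed in the same way. The following is a minimal sketch: the helper name `list_knative_children` and the `KUBECTL` override variable are assumptions introduced for illustration, while the resource names follow the same `*.serving.knative.dev` pattern as the command above.

```shell
#!/usr/bin/env bash

# Allow the kubectl binary to be overridden (hypothetical convenience,
# for example to point at a wrapper script).
KUBECTL="${KUBECTL:-kubectl}"

# list_knative_children [NAMESPACE]
# Lists the Knative Serving resources that a Service manages.
list_knative_children() {
  local namespace="${1:-default}"
  local kind
  for kind in configurations revisions routes; do
    "$KUBECTL" get "${kind}.serving.knative.dev" -n "$namespace"
  done
}
```

Running `list_knative_children default` after applying the manifest should show one entry of each kind for this sample, assuming the deployment went through cleanly.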

Testing

Assuming that the Knative serving service is deployed cleanly, the first oddity to see is that no pods show up for the application!

Requests to the app go through a routing layer managed by Knative. For a minikube environment, the gateway address and the app's domain can be retrieved using the following bash script:

    export GATEWAY_URL=$(echo $(minikube ip):$(kubectl get svc knative-ingressgateway -n istio-system -o 'jsonpath={.spec.ports[?(@.port==80)].nodePort}'))
    export APP_DOMAIN=$(kubectl get services.serving.knative.dev sample-boot-knative-service -o="jsonpath={.status.domain}")

Making a call to an endpoint of the app using curl:

    curl -X POST "http://${GATEWAY_URL}/messages" \
         -H "Accept: application/json" \
         -H "Content-Type: application/json" \
         -H "Host: ${APP_DOMAIN}" \
         -d $'{
      "id": "1",
      "payload": "one",
      "delay": "300"
    }'

or HTTPie:

    http http://${GATEWAY_URL}/messages Host:"${APP_DOMAIN}" id=1 payload=test delay=100

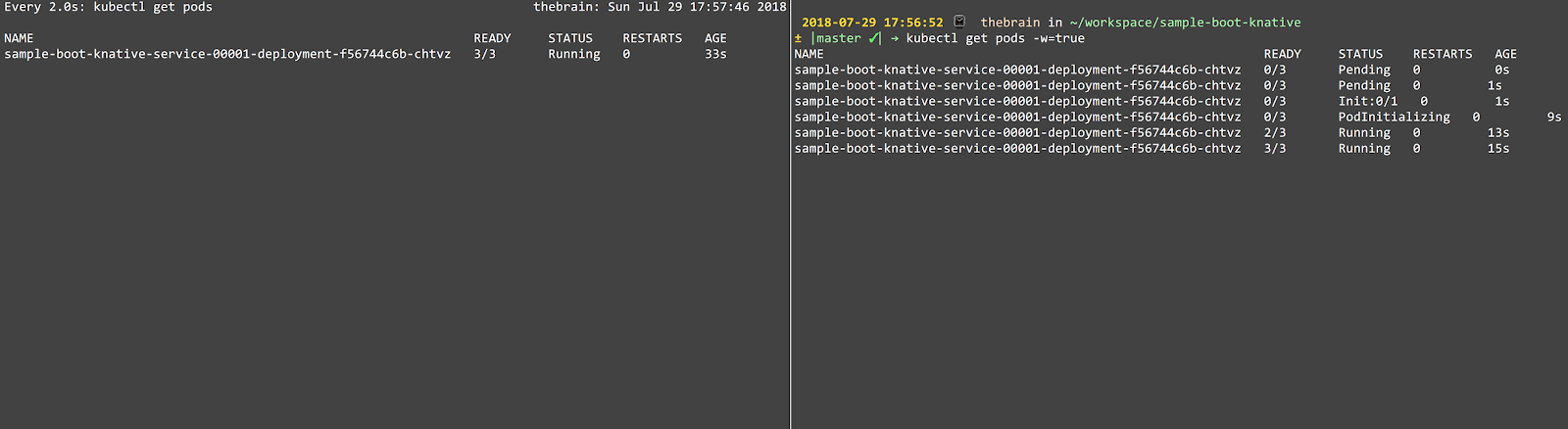

should magically, through the autoscaler component, start spinning up pods to handle the request.

The first request took almost 17 seconds to complete, which is the time it takes to spin up a pod, but subsequent requests are quick.
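The difference between the first and later requests can be measured with curl's `-w '%{time_total}'` write-out option. This is a minimal sketch that reuses `GATEWAY_URL` and `APP_DOMAIN` from the script above; the function names `time_request` and `compare_first_and_second` are assumptions for illustration, and the first call is only a cold start if no pod is running when it is made.

```shell
#!/usr/bin/env bash

# time_request
# Sends one POST to /messages and prints only the total time in seconds.
time_request() {
  curl -s -o /dev/null -w '%{time_total}\n' \
    -X POST "http://${GATEWAY_URL}/messages" \
    -H "Accept: application/json" \
    -H "Content-Type: application/json" \
    -H "Host: ${APP_DOMAIN}" \
    -d '{"id": "1", "payload": "one", "delay": "300"}'
}

# compare_first_and_second
# Times two back-to-back requests so the pod start-up cost stands out.
compare_first_and_second() {
  echo "first request:  $(time_request)s"
  echo "second request: $(time_request)s"
}
```

Calling `compare_first_and_second` right after deploying, before any pod exists, should show a much larger number on the first line than on the second.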

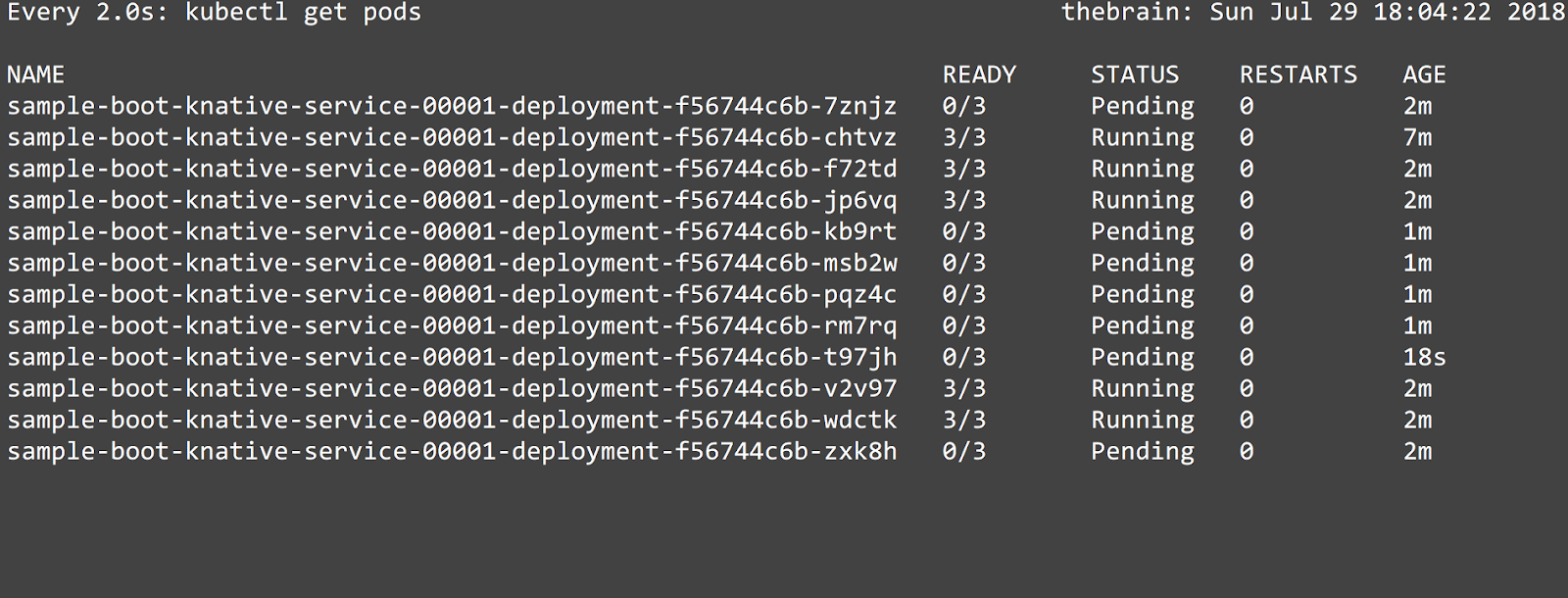

Now, to show the real power of the autoscaler, I ran a small load test with a load of 50 users, and pods were scaled up and down as required.
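A load of this kind can be approximated with plain bash and curl. The sketch below is not the tool used for the test above, only one possible way to generate similar traffic: each simulated user is a background job sending a fixed number of requests. The function name `run_load`, the request count per user and the payload values are assumptions.

```shell
#!/usr/bin/env bash

# run_load [USERS] [REQUESTS_PER_USER]
# Starts USERS background jobs, each sending REQUESTS_PER_USER POSTs
# to /messages, and prints the HTTP status code of every response.
run_load() {
  local users="${1:-50}" requests_per_user="${2:-10}"
  local u r
  for (( u = 1; u <= users; u++ )); do
    (
      for (( r = 1; r <= requests_per_user; r++ )); do
        curl -s -o /dev/null -w '%{http_code}\n' \
          -X POST "http://${GATEWAY_URL}/messages" \
          -H "Accept: application/json" \
          -H "Content-Type: application/json" \
          -H "Host: ${APP_DOMAIN}" \
          -d "{\"id\": \"${u}-${r}\", \"payload\": \"load\", \"delay\": \"100\"}"
      done
    ) &
  done
  # Block until every simulated user has finished.
  wait
}
```

While `run_load 50 10` is running, `kubectl get pods -w` in another terminal shows pods being added, and later removed once the traffic stops.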

Conclusion

I can see the promise of Knative in automatically managing the resources, once defined using a fairly simple manifest, in a Kubernetes environment and letting a developer focus on the code and logic.

Published on Java Code Geeks with permission by Biju Kunjummen, partner at our JCG program. See the original article here: “Knative Serving” for Spring Boot Applications. Opinions expressed by Java Code Geeks contributors are their own.