Processing SQS Messages using Spring Boot and Project Reactor

I recently worked on a project where I had to efficiently process a large number of messages streaming in through an AWS SQS Queue. In this post (and potentially one more), I will go over the approach that I took to process the messages using the excellent Project Reactor

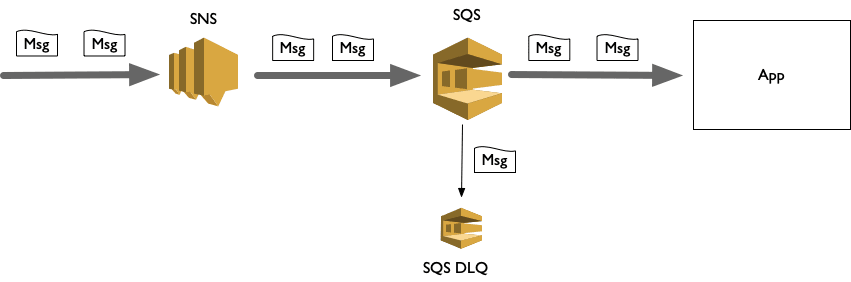

The following is the kind of set-up that I am aiming for:

Setting up a local AWS Environment

Before I jump into the code, let me get some preliminaries out of the way. First, how do you get a local version of SNS and SQS. One of the easiest ways is to use localstack. I use a docker-compose version of it described here

The second utility that I will be using is the AWS CLI. This website has details on how to install it locally.

Once both of these utilities are in place, a quick test should validate the setup:

1 2 3 4 5 6 7 8 | # Create a queueaws --endpoint http://localhost:4576 sqs create-queue --queue-name test-queue# Send a sample messageaws --endpoint http://localhost:4576 sqs send-message --queue-url http://localhost:4576/queue/test-queue --message-body "Hello world"# Receive the messageaws --endpoint http://localhost:4576 sqs receive-message --queue-url http://localhost:4576/queue/test-queue |

Basics of Project Reactor

Project Reactor implements the Reactive Streams specification and provides a way of handling streams of data across asynchronous boundaries that respects backpressure. A lot of words here but in essence think of it this way:

1. SQS Produces data

2. The application is going to consume and process it as a stream of data

3. The application should consume data at a pace that is sustainable – too much data should not be pumped in. This is formally referred to as

“Backpressure”

AWS SDK 2

The library that I will be using to consume AWS SQS data is the

AWS SDK 2. The library uses non-blocking IO under the covers.

The library offers both a sync version of making calls as well as an async version. Consider the synchronous way to fetch records from an SQS queue:

01 02 03 04 05 06 07 08 09 10 | import software.amazon.awssdk.services.sqs.model.ReceiveMessageRequestimport software.amazon.awssdk.services.sqs.SqsClientval receiveMessageRequest: ReceiveMessageRequest = ReceiveMessageRequest.builder() .queueUrl(queueUrl) .maxNumberOfMessages(5) .waitTimeSeconds(10) .build()val messages: List<Message> = sqsClient.receiveMessage(receiveMessageRequest).messages() |

Here “software.amazon.awssdk.services.sqs.SqsClient” is being used for querying sqs and retrieving a batch of results synchronously. An async result, on the other hand, looks like this:

1 2 3 4 5 6 7 8 9 | val receiveMessageRequest: ReceiveMessageRequest = ReceiveMessageRequest.builder() .queueUrl(queueUrl) .maxNumberOfMessages(5) .waitTimeSeconds(10) .build()val messages: CompletableFuture<List<Message>> = sqsAsyncClient .receiveMessage(receiveMessageRequest) .thenApply { result -> result.messages() } |

The output now is now a “CompletableFuture”

Infinite loop and no backpressure

My first attempt at creating a stream(Flux) of message is fairly simple – an infinite loop that polls AWS sqs and creates a Flux from it using the “Flux.create” operator, this way:

01 02 03 04 05 06 07 08 09 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 | fun listen(): Flux<Pair<String, () -> Unit>> { return Flux.create { sink: FluxSink<List<Message>> -> while (running) { try { val receiveMessageRequest: ReceiveMessageRequest = ReceiveMessageRequest.builder() .queueUrl(queueUrl) .maxNumberOfMessages(5) .waitTimeSeconds(10) .build() val messages: List<Message> = sqsClient.receiveMessage(receiveMessageRequest).messages() LOGGER.info("Received: $messages") sink.next(messages) } catch (e: InterruptedException) { LOGGER.error(e.message, e) } catch (e: Exception) { LOGGER.error(e.message, e) } } } .flatMapIterable(Function.identity()) .doOnError { t: Throwable -> LOGGER.error(t.message, t) } .retry() .map { snsMessage: Message -> val snsMessageBody: String = snsMessage.body() val snsNotification: SnsNotification = readSnsNotification(snsMessageBody) snsNotification.message to { deleteQueueMessage(snsMessage.receiptHandle(), queueUrl) } }} |

The way this works is that there is an infinite loop that checks for new messages using long-polling. Messages may not be available at every poll, in which case an empty list is added to the stream.

This list of atmost 5 messages is then mapped to a stream of individual messages using the “flatMapIterable” operator, which is further mapped by extracting the message from the SNS wrapper (as message gets forwarded from SNS to SQS, SNS adds a wrapper to the message) and a way to delete the message(deleteHandle) once the message is successfully processed is returned as Pair.

This approach works perfectly fine… but imagine a case where a huge number of messages have come in, since the loop is not really aware of the throughput downstream it will keep pumping data to the stream. The default behavior is for the intermediate operators to buffer this data flowing in based on how the final consumer is consuming the data. Since this buffer is unbounded it is possible that the system may reach an unsustainable state.

Backpressure aware stream

The fix is to use a different operator to generate the stream of data –

Flux.generate.

Using this operator the code looks like this:

01 02 03 04 05 06 07 08 09 10 11 12 13 14 15 16 17 18 19 20 21 | fun listen(): Flux<Pair<String, () -> Unit>> { return Flux.generate { sink: SynchronousSink<List<Message>> -> val receiveMessageRequest: ReceiveMessageRequest = ReceiveMessageRequest.builder() .queueUrl(queueUrl) .maxNumberOfMessages(5) .waitTimeSeconds(10) .build() val messages: List<Message> = sqsClient.receiveMessage(receiveMessageRequest).messages() LOGGER.info("Received: $messages") sink.next(messages) } .flatMapIterable(Function.identity()) .doOnError { t: Throwable -> LOGGER.error(t.message, t) } .retry() .map { snsMessage: Message -> val snsMessageBody: String = snsMessage.body() val snsNotification: SnsNotification = readSnsNotification(snsMessageBody) snsNotification.message to { deleteQueueMessage(snsMessage.receiptHandle(), queueUrl) } }} |

The way this works is that the block passed to the “Flux.generate” operator is repeatedly called – similar to the while loop, in each loop one item is expected to be added to the stream. In this instance, the item added to the stream happens to be a list which like before is broken down into individual messages.

How does backpressure work in this scenario –

So again consider the case where the downstream consumer is processing at a slower rate than the generating end. In this case, Flux itself would slow down at the rate at which the generate operator is called, thus being considerate of the throughput of the downstream system.

Conclusion

This should set up a good pipeline for processing messages from SQS, there are a few more nuances to this to process messages in parallel later in the stream which I will cover in a future post.

The codebase of this example is available in my github repository

here – https://github.com/bijukunjummen/boot-with-sns-sqs. The code has a complete pipeline which includes processing the message and deleting it once processed.

Published on Java Code Geeks with permission by Biju Kunjummen, partner at our JCG program. See the original article here: Processing SQS Messages using Spring Boot and Project Reactor Opinions expressed by Java Code Geeks contributors are their own. |