How to build Graal-enabled JDK8 on CircleCI?

Citation: the feature image on the blog can be found on Flickr and was created by Luca Galli. The image in one of the sections below can also be found on Flickr and was created by fklv (Obsolete hipster).

The GraalVM compiler is a replacement for HotSpot’s server-side JIT compiler, widely known as the C2 compiler. It is written in Java with the goal of better performance (among other goals) compared to the C2 compiler. Changes introduced in Java 9 mean that we can now plug our own hand-written compiler into the JVM in place of C2, thanks to JVMCI. The researchers and engineers at Oracle Labs have created a variant of JDK8 with JVMCI enabled, which can be used to build the GraalVM compiler. The GraalVM compiler is open source and available on GitHub (along with the HotSpot JVMCI sources needed to build the GraalVM compiler). This gives us the ability to fork or clone it and build our own version of the GraalVM compiler.

In this post, we are going to build the GraalVM compiler with JDK8 on CircleCI. The resulting artifacts are going to be:

– JDK8 embedded with the GraalVM compiler, and

– a zip archive containing Graal & Truffle modules/components.

Note: this post does not cover how to build the whole of the GraalVM suite; that can be done in another post. These scripts can, however, be used to do that, and there is a branch which contains the rest of the steps.

Why use a CI tool to build the GraalVM compiler?

Continuous integration (CI) and continuous deployment (CD) tools have many benefits. One of the greatest is the ability to check the health of the code-base. Seeing why your builds are failing provides you with an opportunity to make a fix faster. For this project, it is important that we are able to verify and validate the scripts required to build the GraalVM compiler for Linux and macOS, both locally and in a Docker container.

A CI/CD tool lets us add automated tests to ensure that we get the desired outcome from our scripts when every PR is merged. In addition to ensuring that our new code does not introduce a breaking change, another great feature of CI/CD tools is that we can automate the creation of binaries and the automatic deployment of those binaries, making them available for open source distribution.

Let’s get started

During the process of researching CircleCI as a CI/CD solution to build the GraalVM compiler, I learned that we could run builds via two different approaches, namely:

– A CircleCI build with a standard Docker container (longer build time, longer config script)

– A CircleCI build with a pre-built, optimised Docker container (shorter build time, shorter config script)

We will now go through the two approaches mentioned above and see the pros and cons of both of them.

Approach 1: using a standard Docker container

For this approach, CircleCI requires a docker image that is available in Docker Hub or another public/private registry it has access to. We will have to install the necessary dependencies in this environment for the build to succeed. We expect the build to run longer the first time and then, depending on the level of caching, to speed up.

To understand how this is done, we will go through the CircleCI configuration file (stored in .circleci/config.yml) section by section. See config.yml in .circleci for the full listing and commit df28ee7 for the source changes.

Explaining sections of the config file

The below lines in the configuration file will ensure that our installed applications are cached (referring to the two specific directories) so that we don’t have to reinstall the dependencies each time a build occurs:

    dependencies:
      cache_directories:
        - "vendor/apt"
        - "vendor/apt/archives"

We will be referring to the docker image by its full name (as available on http://hub.docker.com under the account name used – adoptopenjdk). In this case, it is a standard docker image containing JDK8 made available by the good folks behind the Adopt OpenJDK build farm. In theory, we can use any image as long as it supports the build process. It will act as the base layer on which we will install the necessary dependencies:

    docker:
      - image: adoptopenjdk/openjdk8:jdk8u152-b16

Next, in the pre-Install Os dependencies step, we restore the cache if it already exists (this may look a bit odd, but for unique key labels the implementation below is recommended by the docs):

    - restore_cache:
        keys:
          - os-deps-{{ arch }}-{{ .Branch }}-{{ .Environment.CIRCLE_SHA1 }}
          - os-deps-{{ arch }}-{{ .Branch }}
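The two keys above form a fallback chain: the most specific key (including the commit SHA) is tried first, and the shorter key acts as a prefix that can match a cache saved by an earlier commit on the same branch. The following is a minimal shell sketch of that lookup order, not CircleCI's implementation. The function name `pick_cache_key` and the sample key values are hypothetical, and the sketch returns the first listed match, whereas CircleCI prefers the most recently saved one.

```bash
# Hypothetical helper: mimic how a list of restore_cache keys is walked.
# $1  = space-separated list of keys already saved in the cache
# $2+ = candidate keys, most specific first
pick_cache_key() {
  saved_keys=$1
  shift
  for candidate in "$@"; do
    for saved in $saved_keys; do
      case $saved in
        # A candidate matches when it is a prefix of a saved key.
        "$candidate"*) printf '%s\n' "$saved"; return 0 ;;
      esac
    done
  done
  return 1  # no cache found; the step would simply continue without one
}

# Sample values (made up for illustration): two caches from older commits.
saved="os-deps-x86_64-master-aaa111 os-deps-x86_64-master-bbb222"

# The exact key for the current commit misses, the branch-level prefix hits.
pick_cache_key "$saved" "os-deps-x86_64-master-ccc333" "os-deps-x86_64-master"
# prints os-deps-x86_64-master-aaa111
```

This is why the first build on a new commit can still reuse the dependencies cached by a previous commit on the same branch.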

Then, in the Install Os dependencies step, we run the respective shell script to install the dependencies needed. We have set this step to time out if the operation takes longer than 2 minutes to complete (see docs for timeout):

    - run:
        name: Install Os dependencies
        command: ./build/x86_64/linux_macos/osDependencies.sh
        timeout: 2m

Then, in the post-Install Os dependencies step, we save the results of the previous step, i.e. the layer from the above run step (the key name is formatted to ensure uniqueness, and the specific paths to save are included):

    - save_cache:
        key: os-deps-{{ arch }}-{{ .Branch }}-{{ .Environment.CIRCLE_SHA1 }}
        paths:
          - vendor/apt
          - vendor/apt/archives

Then, in the pre-Build and install make via script step, we restore the cache, if one already exists:

    - restore_cache:
        keys:
          - make-382-{{ arch }}-{{ .Branch }}-{{ .Environment.CIRCLE_SHA1 }}
          - make-382-{{ arch }}-{{ .Branch }}

Then, in the Build and install make via script step, we run the shell script to install a specific version of make; the step is set to time out if it takes longer than 1 minute to finish:

    - run:
        name: Build and install make via script
        command: ./build/x86_64/linux_macos/installMake.sh
        timeout: 1m

Then, in the post Build and install make via script step, we save the results of the above action to the cache:

    - save_cache:
        key: make-382-{{ arch }}-{{ .Branch }}-{{ .Environment.CIRCLE_SHA1 }}
        paths:
          - /make-3.82/
          - /usr/bin/make
          - /usr/local/bin/make
          - /usr/share/man/man1/make.1.gz
          - /lib/

Then, we define environment variables to update JAVA_HOME and PATH at runtime. Here the environment variables are sourced so that they are remembered by all subsequent steps until the end of the build process (please keep this in mind):

    - run:
        name: Define Environment Variables and update JAVA_HOME and PATH at Runtime
        command: |
          echo '....'   # a number of echo commands displaying env variable values
          source ${BASH_ENV}

Then, in the step to Display Hardware, Software, Runtime environment and dependency versions, as best practice we display environment-specific information and record it into the logs for posterity (also useful during debugging when things go wrong):

    - run:
        name: Display HW, SW, Runtime env. info and versions of dependencies
        command: ./build/x86_64/linux_macos/lib/displayDependencyVersion.sh
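The contents of displayDependencyVersion.sh are not reproduced in this post. As a rough idea of what such a step can record, here is a minimal sketch; the helper name `show_version` and the choice of tools to probe are assumptions for illustration, not the actual script.

```bash
# Hypothetical helper: print the first line of a tool's version banner,
# or note that the tool is missing (tools such as java write to stderr,
# hence the 2>&1).
show_version() {
  tool=$1
  shift
  if command -v "$tool" >/dev/null 2>&1; then
    echo "${tool}: $("$tool" "$@" 2>&1 | head -n 1)"
  else
    echo "${tool}: not installed"
  fi
}

uname -a                      # kernel and architecture of the build instance
show_version java -version    # the JDK the build starts from
show_version make --version   # the make version installed by an earlier step
show_version python --version # mx is driven by Python
```

Having this output in the build log makes it easier to tell an environment problem from a script problem when a build fails.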

Then, we run the step to set up MX. This is important from the point of view of the GraalVM compiler (mx is a specialised build system created to facilitate compiling and building Graal/GraalVM and its components):

    - run:
        name: Setup MX
        command: ./build/x86_64/linux_macos/lib/setupMX.sh ${BASEDIR}

Then, we run the important step Build JDK JVMCI (we build the JDK with JVMCI enabled here), which times out if the process takes longer than 15 minutes without any output or longer than 20 minutes in total to finish:

    - run:
        name: Build JDK JVMCI
        command: ./build/x86_64/linux_macos/lib/build_JDK_JVMCI.sh ${BASEDIR} ${MX}
        timeout: 20m
        no_output_timeout: 15m
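When running the same scripts outside CircleCI (locally or in a Docker container), an overall time limit can be approximated with the `timeout` command from GNU coreutils, which stops the command and exits with status 124 when the limit is exceeded. This is a sketch under that assumption; the wrapper name `run_with_limit` is made up, and there is no direct local equivalent of the no-output timeout here.

```bash
# Hypothetical wrapper: run a command with an overall time limit.
# Assumes GNU coreutils `timeout` is on the PATH.
run_with_limit() {
  limit=$1
  shift
  timeout "$limit" "$@"
  status=$?
  if [ "$status" -eq 124 ]; then
    echo "step exceeded ${limit} and was stopped" >&2
  fi
  return "$status"
}

# Quick demonstration with a command that finishes well within the limit.
run_with_limit 5 echo "step finished in time"

# Against the real script it could look like this (not executed here):
#   run_with_limit 20m ./build/x86_64/linux_macos/lib/build_JDK_JVMCI.sh "${BASEDIR}" "${MX}"
```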

Then, we run the step Run JDK JVMCI Tests, which runs tests as part of the sanity check after building the JDK JVMCI:

    - run:
        name: Run JDK JVMCI Tests
        command: ./build/x86_64/linux_macos/lib/run_JDK_JVMCI_Tests.sh ${BASEDIR} ${MX}

Then, we run the step Setting up environment and Build GraalVM Compiler, to set up the build environment with the necessary environment variables which will be used by the steps to follow:

    - run:
        name: Setting up environment and Build GraalVM Compiler
        command: |
          echo ">>>> Currently JAVA_HOME=${JAVA_HOME}"
          JDK8_JVMCI_HOME="$(cd ${BASEDIR}/graal-jvmci-8/ && ${MX} --java-home ${JAVA_HOME} jdkhome)"
          echo "export JVMCI_VERSION_CHECK='ignore'" >> ${BASH_ENV}
          echo "export JAVA_HOME=${JDK8_JVMCI_HOME}" >> ${BASH_ENV}
          source ${BASH_ENV}

Then, we run the step Build the GraalVM Compiler and embed it into the JDK (JDK8 with JVMCI enabled), which times out if the process takes longer than 7 minutes without any output or longer than 10 minutes in total to finish:

    - run:
        name: Build the GraalVM Compiler and embed it into the JDK (JDK8 with JVMCI enabled)
        command: |
          echo ">>>> Using JDK8_JVMCI_HOME as JAVA_HOME (${JAVA_HOME})"
          ./build/x86_64/linux_macos/lib/buildGraalCompiler.sh ${BASEDIR} ${MX} ${BUILD_ARTIFACTS_DIR}
        timeout: 10m
        no_output_timeout: 7m

Then, we run the simple sanity checks to verify the validity of the artifacts created once a build has been completed, just before archiving the artifacts:

    - run:
        name: Sanity check artifacts
        command: |
          ./build/x86_64/linux_macos/lib/sanityCheckArtifacts.sh ${BASEDIR} ${JDK_GRAAL_FOLDER_NAME}
        timeout: 3m
        no_output_timeout: 2m

Then, we run the step Archiving artifacts (this means compressing and copying the final artifacts into a separate folder), which times out if the process takes longer than 2 minutes without any output or longer than 3 minutes in total to finish:

    - run:
        name: Archiving artifacts
        command: |
          ./build/x86_64/linux_macos/lib/archivingArtifacts.sh ${BASEDIR} ${MX} ${JDK_GRAAL_FOLDER_NAME} ${BUILD_ARTIFACTS_DIR}
        timeout: 3m
        no_output_timeout: 2m

For posterity and debugging purposes, we capture the generated logs from the various folders and archive them:

    - run:
        name: Collecting and archiving logs (debug and error logs)
        command: |
          ./build/x86_64/linux_macos/lib/archivingLogs.sh ${BASEDIR}
        timeout: 3m
        no_output_timeout: 2m
        when: always
    - store_artifacts:
        name: Uploading logs
        path: logs/

Finally, we store the generated artifacts at a specified location – the below lines will make the location available on the CircleCI interface (we can download the artifacts from here):

    - store_artifacts:
        name: Uploading artifacts in jdk8-with-graal-local
        path: jdk8-with-graal-local/

Approach 2: using a pre-built optimised Docker container

For approach 2, we will be using a pre-built docker container that has been created and built locally with all the necessary dependencies; the docker image is saved and then pushed to a remote registry, e.g. Docker Hub. We then reference this docker image in the CircleCI environment via the configuration file. This saves us the time and effort of running all the commands that install the necessary dependencies and create the environment (see the detailed steps in the Approach 1 section).

We expect the build to run for a shorter time than the previous build, and this speedup is a result of the pre-built docker image (see the Steps to build the pre-built docker image section to see how this is done). An additional speed benefit comes from the fact that CircleCI caches the docker image layers, which in turn results in a quicker startup of the build environment.

We will go through the CircleCI configuration file (stored in .circleci/config.yml) section by section for this approach. See config.yml in .circleci for the full listing and commit e5916f1 for the source changes.

Explaining sections of the config file

Here again, we will be referring to the docker image by its full name. It is a pre-built docker image neomatrix369/graalvm-suite-jdk8 made available by neomatrix369. It was built and uploaded to Docker Hub before the CircleCI build was started. It contains the necessary dependencies for the GraalVM compiler to be built:

    docker:
      - image: neomatrix369/graal-jdk8:${IMAGE_VERSION:-python-2.7}
    steps:
      - checkout

All the sections below do exactly the same tasks (and for the same purpose) as in Approach 1; see the Explaining sections of the config file section.

The exception is that we have removed the sections below, as they are no longer required for Approach 2:

    - restore_cache:
        keys:
          - os-deps-{{ arch }}-{{ .Branch }}-{{ .Environment.CIRCLE_SHA1 }}
          - os-deps-{{ arch }}-{{ .Branch }}
    - run:
        name: Install Os dependencies
        command: ./build/x86_64/linux_macos/osDependencies.sh
        timeout: 2m
    - save_cache:
        key: os-deps-{{ arch }}-{{ .Branch }}-{{ .Environment.CIRCLE_SHA1 }}
        paths:
          - vendor/apt
          - vendor/apt/archives
    - restore_cache:
        keys:
          - make-382-{{ arch }}-{{ .Branch }}-{{ .Environment.CIRCLE_SHA1 }}
          - make-382-{{ arch }}-{{ .Branch }}
    - run:
        name: Build and install make via script
        command: ./build/x86_64/linux_macos/installMake.sh
        timeout: 1m
    - save_cache:
        key: make-382-{{ arch }}-{{ .Branch }}-{{ .Environment.CIRCLE_SHA1 }}
        paths:
          - /make-3.82/
          - /usr/bin/make
          - /usr/local/bin/make
          - /usr/share/man/man1/make.1.gz

In the following section, I will go through the steps that show how to build the pre-built docker image. This involves running the bash scripts ./build/x86_64/linux_macos/osDependencies.sh and ./build/x86_64/linux_macos/installMake.sh to install the necessary dependencies as part of building a docker image, and finally pushing the image to Docker Hub (it can be pushed to any other remote registry of your choice).

Steps to build the pre-built docker image

– Run build-docker-image.sh (see bash script source), which depends on the presence of a Dockerfile (see docker script source). The Dockerfile does all the necessary work of installing the dependencies inside the container, i.e. it runs the bash scripts ./build/x86_64/linux_macos/osDependencies.sh and ./build/x86_64/linux_macos/installMake.sh:

    $ ./build-docker-image.sh

– Once the image has been built successfully, run push-graal-docker-image-to-hub.sh after setting USER_NAME and IMAGE_NAME (see source code); otherwise it will use the default values set in the bash script:

    $ USER_NAME="[your docker hub username]" IMAGE_NAME="[any image name]" \
        ./push-graal-docker-image-to-hub.sh
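The two scripts themselves are linked rather than listed here. As a rough illustration of the flow they wrap, the sketch below composes an image reference from USER_NAME and IMAGE_NAME and prints the corresponding `docker build` and `docker push` commands instead of running them. The default values, the IMAGE_VERSION default and the function names are placeholders chosen for this example, not the values used in the real scripts.

```bash
# Placeholder defaults (assumptions); the real scripts define their own.
USER_NAME=${USER_NAME:-example-user}
IMAGE_NAME=${IMAGE_NAME:-graal-jdk8}
IMAGE_VERSION=${IMAGE_VERSION:-latest}

# Compose the full image reference: <user>/<image>:<version>
image_ref() {
  printf '%s/%s:%s\n' "$USER_NAME" "$IMAGE_NAME" "$IMAGE_VERSION"
}

# Dry run: print the docker commands rather than executing them.
print_docker_commands() {
  ref=$(image_ref)
  echo "docker build -t ${ref} ."   # build from the Dockerfile in the current folder
  echo "docker push ${ref}"         # requires a prior 'docker login'
}

print_docker_commands
```

The same image reference is what the `image:` entry in the CircleCI configuration file would then point to.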

CircleCI config file statistics: Approach 1 versus Approach 2

| Areas of interest | Approach 1 | Approach 2 |
|---|---|---|
| Config file (full source list) | build-on-circleci | build-using-prebuilt-docker-image |
| Commit point (sha) | df28ee7 | e5916f1 |
| Lines of code (loc) | 110 lines | 85 lines |
| Source lines (sloc) | 110 sloc | 85 sloc |
| Steps (steps: section) | 19 | 15 |
| Performance (see Performance section) | Some speedup due to caching, but slower than Approach 2 | Speedup due to the pre-built docker image, and also due to caching at different steps. Faster than Approach 1. Ensure DLC layering is enabled (it’s a paid feature) |

What not to do?

Approach 1 issues

I came across things that wouldn’t work initially, but were later fixed with changes to the configuration file or the scripts:

- please make sure the .circleci/config.yml file always sits under the root directory of the project

- when using the store_artifacts directive in the .circleci/config.yml file, set the path to a fixed folder name, i.e. jdk8-with-graal-local/. In our case, setting the path to ${BASEDIR}/project/jdk8-with-graal didn’t create the resulting artifact once the build had finished, hence the fixed path name suggestion.

- environment variables: keep in mind that each command runs in its own shell, so values assigned to environment variables inside one shell are not visible outside it. Follow the method used in this post: set the environment variables in such a way that all the commands can see the values they need, to avoid misbehaviour or unexpected results at the end of each step.

- caching: read about the caching functionality before using it; for more details on CircleCI caching, refer to the caching docs, and see how it has been implemented in this post. This will help avoid confusion and make better use of the functionality provided by CircleCI.

Approach 2 issues

- Caching: check the docs when trying to use the Docker Layer Caching (DLC) option, as it is a paid feature. Once this is known, doubts such as “why does CircleCI keep downloading all the layers during each build” are cleared up; for Docker Layer Caching details, refer to the docs. It also explains why, in non-paid mode, my build is still not as fast as I would like it to be.

General note:

- Light-weight instances: to avoid the pitfall of thinking we can run heavy-duty builds, check the documentation on the technical specifications of the instances. If we run the standard Linux commands to probe the technical specifications of the instance, we may be misled into thinking that they are high-specification machines. See the step that lists the hardware and software details of the instance (see the Display HW, SW, Runtime env. info and versions of dependencies section). The instances are actually virtual machines or container-like environments with resources like 2CPU/4096MB. This means we can’t run long-running or heavy-duty builds like building the GraalVM suite. Maybe there is another way to handle these kinds of builds, or maybe such builds need to be decomposed into smaller parts.

- Global environment variables: as each run line in config.yml runs in its own shell context, environment variables set in one context are not accessible from the others. To overcome this, we have adopted two methods:

  - pass the variables as parameters to the bash/shell scripts being called, so that the scripts can access the values held in the environment variables

  - use the source command as a run step to make environment variables accessible globally
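The second method can be illustrated outside CircleCI with two throwaway shells standing in for two run steps. This is a minimal sketch: a temporary file plays the role of the file that CircleCI exposes through BASH_ENV, and the variable name is borrowed from the configuration above.

```bash
# Make sure the demo starts from a clean slate.
unset JVMCI_VERSION_CHECK

# Stand-in for the file CircleCI exposes as BASH_ENV (assumption: a temp file).
ENV_FILE=$(mktemp)

# "Step 1": a separate shell exports a value; it is lost when that shell exits.
sh -c 'export JVMCI_VERSION_CHECK=ignore'

# "Step 2": a fresh shell does not see the value.
step2=$(sh -c 'echo "${JVMCI_VERSION_CHECK:-unset}"')
echo "without env file: ${step2}"

# Instead, persist the export line in the shared file...
echo "export JVMCI_VERSION_CHECK='ignore'" >> "$ENV_FILE"

# ...and source that file at the start of the next "step".
step3=$(ENV_FILE="$ENV_FILE" sh -c '. "$ENV_FILE"; echo "${JVMCI_VERSION_CHECK:-unset}"')
echo "with env file: ${step3}"

rm -f "$ENV_FILE"
```

The first echo reports `unset` and the second reports `ignore`, which is the behaviour the `echo "export ..." >> ${BASH_ENV}` lines in the configuration rely on.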

End result and summary

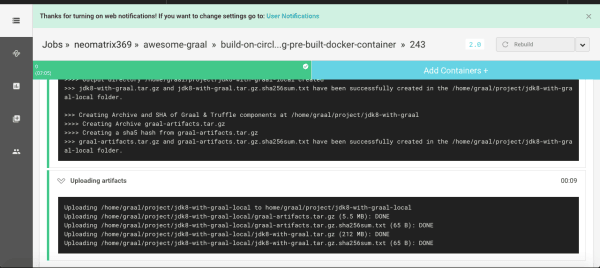

We see the below screen (the last step i.e. Updating artifacts enlists where the artifacts have been copied), after a build has been successfully finished:

The artifacts are now placed in the right folder for download. We are mainly concerned about the jdk8-with-graal.tar.gz artifact.

Performance

Before writing this post, I ran multiple passes of both the approaches and jotted down the time taken to finish the builds, which can be seen below:

– Approach 1: standard CircleCI build (caching enabled)

  – 13 mins 28 secs

  – 13 mins 59 secs

  – 14 mins 52 secs

  – 10 mins 38 secs

  – 10 mins 26 secs

  – 10 mins 23 secs

– Approach 2: using pre-built docker image (caching enabled, DLC feature unavailable)

  – 13 mins 15 secs

  – 15 mins 16 secs

  – 15 mins 29 secs

  – 15 mins 58 secs

  – 10 mins 20 secs

  – 9 mins 49 secs

Note: Approach 2 should show better performance on a paid tier, as Docker Layer Caching is available as part of that plan.
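To compare the two sets of timings at a glance, the runs listed above can be averaged. The small sketch below (the function name `average_time` is made up for this example) converts each run to seconds and prints the integer mean per approach.

```bash
# Takes "minutes seconds" pairs and prints the integer mean as "Xm Ys".
average_time() {
  total=0
  count=0
  while [ "$#" -ge 2 ]; do
    total=$((total + $1 * 60 + $2))
    count=$((count + 1))
    shift 2
  done
  mean=$((total / count))
  echo "$((mean / 60))m $((mean % 60))s"
}

echo "Approach 1: $(average_time 13 28 13 59 14 52 10 38 10 26 10 23)"
echo "Approach 2: $(average_time 13 15 15 16 15 29 15 58 10 20 9 49)"
# prints:
#   Approach 1: 12m 17s
#   Approach 2: 13m 21s
```

On these twelve runs, Approach 2 averages about a minute longer than Approach 1, which fits the note that Docker Layer Caching was unavailable; the fastest single run (9 mins 49 secs) still belongs to Approach 2. With only six runs each, these numbers are indicative rather than conclusive.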

Sanity check

In order to be sure that by using both the above approaches we have actually built a valid JDK embedded with the GraalVM compiler, we perform the following steps with the created artifact:

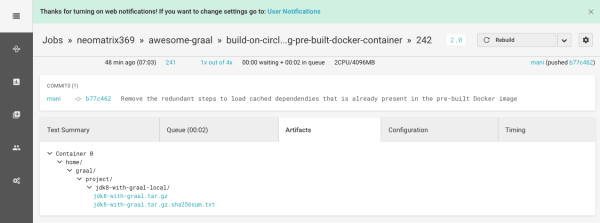

– Firstly, download the jdk8-with-graal.tar.gz artifact from under the Artifacts tab on the CircleCI dashboard (needs sign-in):

– Then, unzip the .tar.gz file and do the following:

1 | tar xvf jdk8-with-graal.tar.gz |

– Thereafter, run the below command to check the JDK binary is valid:

    cd jdk8-with-graal
    ./bin/java -version

– And finally check if we get the below output:

    openjdk version "1.8.0-internal"
    OpenJDK Runtime Environment (build 1.8.0-internal-jenkins_2017_07_27_20_16-b00)
    OpenJDK 64-Bit Graal:compiler_ab426fd70e30026d6988d512d5afcd3cc29cd565:compiler_ab426fd70e30026d6988d512d5afcd3cc29cd565 (build 25.71-b01-internal-jvmci-0.46, mixed mode)

– Similarly, to confirm that the JRE is valid and has the GraalVM compiler built in, we do this:

    ./jre/bin/java -version

– And check if we get a similar output as above:

    openjdk version "1.8.0-internal"
    OpenJDK Runtime Environment (build 1.8.0-internal-jenkins_2017_07_27_20_16-b00)
    OpenJDK 64-Bit Graal:compiler_ab426fd70e30026d6988d512d5afcd3cc29cd565:compiler_ab426fd70e30026d6988d512d5afcd3cc29cd565 (build 25.71-b01-internal-jvmci-0.46, mixed mode)
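The manual check above can also be scripted. The sketch below looks for both "Graal" and "jvmci" in the version banner; the function name `has_graal_banner` and the choice of these two markers are assumptions based on the sample output shown here, not the logic of the sanityCheckArtifacts.sh script.

```bash
# Hypothetical check: does the version banner mention Graal and JVMCI?
has_graal_banner() {
  banner=$1
  case $banner in
    *Graal*jvmci*) return 0 ;;
    *) return 1 ;;
  esac
}

# Against a real binary (java -version writes to stderr, hence 2>&1):
#   has_graal_banner "$(./bin/java -version 2>&1)" && echo "Graal-enabled JDK"

# Demonstration using the last line of the sample output from this post.
sample='OpenJDK 64-Bit Graal:compiler_ab426fd70e30026d6988d512d5afcd3cc29cd565:compiler_ab426fd70e30026d6988d512d5afcd3cc29cd565 (build 25.71-b01-internal-jvmci-0.46, mixed mode)'
if has_graal_banner "$sample"; then
  echo "Graal-enabled JDK"
fi
```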

With this, we have successfully built JDK8 with the GraalVM compiler embedded in it and also bundled the Graal and Truffle components in an archive file, both of which are available for download via the CircleCI interface.

Note: you will notice that we perform sanity checks on the binaries just before packing them into compressed archives, as part of the build steps (see the bottom of the CircleCI configuration file sections above).

Nice badges!

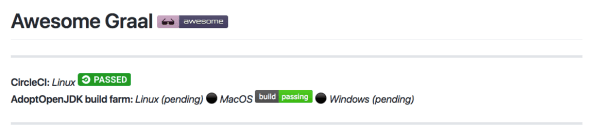

We all like to show-off and also like to know the current status of our build jobs. A green-colour, build status icon is a nice indication of success, which looks like the below on a markdown README page:

We can very easily embed both of these status badges displaying the build status of our project (branch-specific i.e. master or another branch you have created) built on CircleCI (see docs) on how to do that).
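As an illustration, the markdown for a branch-specific badge can be generated with a small helper. The URL pattern below (circleci.com/gh/&lt;org&gt;/&lt;repo&gt;/tree/&lt;branch&gt;.svg?style=svg) is an assumption based on the badge format documented by CircleCI for GitHub projects at the time of writing, so check the docs for the current form; the function name `badge_markdown` is made up.

```bash
# Hypothetical helper: print README markdown for a branch-specific status badge.
badge_markdown() {
  org=$1
  repo=$2
  branch=$3
  base="https://circleci.com/gh/${org}/${repo}/tree/${branch}"
  # The image links back to the build page for the same branch.
  printf '[![CircleCI](%s.svg?style=svg)](%s)\n' "$base" "$base"
}

# Example using the repository and branch of Approach 1 from this post.
badge_markdown neomatrix369 awesome-graal build-on-circleci
```

The printed line can be pasted into a README as-is.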

Conclusions

We explored two approaches to building the GraalVM compiler in the CircleCI environment. They were good experiments for comparing performance between the two approaches and for seeing how easily each can be done. We also saw a number of things to avoid, and how useful some of the CircleCI features are. The documentation and forums are a good help when trying to make a build work or when you get stuck with something.

Once we know the CircleCI environment, it’s pretty easy to use and gives us consistent behaviour every time we run it. Its ephemeral nature means we are guaranteed a clean environment before each run and a clean-up after it finishes. We can also set up checks on build time for every step of the build, and abort a build if the time taken to finish a step exceeds the threshold.

The ability to use pre-built docker images coupled with Docker Layer Caching on CircleCI can be a major performance boost (it saves us the build time needed to reinstall the necessary dependencies at every build). Additional speedups are available on CircleCI through caching of the build steps; this again saves build time by not having to re-run the same steps if they haven’t changed.

There are a lot of useful features available on CircleCI, with plenty of documentation, and everyone on the community forum is helpful; questions are answered pretty much instantly.

Next, let’s build the same and more on another build environment/build farm. Hint, hint, are you thinking the same as me? The Adopt OpenJDK build farm? We can give it a try!

Thanks and credits to Ron Powell from CircleCI and Oleg Šelajev from Oracle Labs for proof-reading and giving constructive feedback.

Please do let me know if this is helpful by dropping a line in the comments below or by tweeting at @theNeomatrix369. I would also welcome feedback (see how you can reach me), and above all, please check out the links mentioned above.

Useful resources

– Links to useful CircleCI docs

  – About Getting started | Videos

  – About Docker

  – Docker Layer Caching

  – About Caching

  – About Debugging via SSH

  – CircleCI cheatsheet

– CircleCI Community (Discussions)

  – Latest community topics

– CircleCI configuration and supporting files

  – Approach 1: https://github.com/neomatrix369/awesome-graal/tree/build-on-circleci (config file and other supporting files i.e. scripts, directory layout, etc…)

  – Approach 2: https://github.com/neomatrix369/awesome-graal/tree/build-on-circleci-using-pre-built-docker-container (config file and other supporting files i.e. scripts, directory layout, etc…)

– Scripts to build Graal on Linux, macOS and inside the Docker container

– Truffle served in a Holy Graal: Graal and Truffle for polyglot language interpretation on the JVM

– Learning to use Wholly GraalVM!

– Building Wholly Graal with Truffle!

Published on Java Code Geeks with permission by Mani Sarkar, partner at our JCG program. See the original article here: How to build Graal-enabled JDK8 on CircleCI? Opinions expressed by Java Code Geeks contributors are their own.
