Project Loom

WHY LOOM?

One of the drivers behind streams in Java 8 was concurrent programming. In your stream pipeline, you specify what you want to have done, and your tasks are automatically distributed onto the available processors:

    var result = myData
        .parallelStream()
        .map(someBusyOperation)
        .reduce(someAssociativeBinOp)
        .orElse(someDefault);

Parallel streams work great when the data structure is cheap to split into parts and the operations keep the processors busy. That’s what they were designed for.
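
To make the pipeline above concrete, here is a minimal sketch. The names `ParallelSumDemo`, `busySquare`, and `sumOfSquares` are made up for illustration; `busySquare` merely stands in for an operation that keeps a processor busy.

```java
import java.util.List;
import java.util.stream.Collectors;
import java.util.stream.IntStream;

class ParallelSumDemo {
    // Hypothetical stand-in for someBusyOperation: a deliberately slow way to square n.
    static long busySquare(int n) {
        long result = 0;
        for (int i = 0; i < n; i++) result += n; // n added n times
        return result;
    }

    static long sumOfSquares(List<Integer> data) {
        return data.parallelStream()
            .map(ParallelSumDemo::busySquare)
            .reduce(Long::sum) // addition is associative, so partial sums can be combined freely
            .orElse(0L);       // default for an empty list
    }

    public static void main(String[] args) {
        List<Integer> data = IntStream.rangeClosed(1, 1000).boxed().collect(Collectors.toList());
        System.out.println(sumOfSquares(data));
    }
}
```

An `ArrayList` like this one is cheap to split, which is exactly the situation where parallel streams pay off.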

But this doesn’t help you if your workload consists of tasks that mostly block. That’s your typical web application, serving many requests, with each request spending much of its time waiting for the result of a REST service, a database query, and so on.

In 1998, it was amazing that the Sun Java Web Server (the precursor of Tomcat) ran each request in a separate thread, and not an OS process. It was able to serve thousands of concurrent requests this way! Nowadays, that’s not so amazing. Each thread takes up a significant amount of memory, and you can’t have millions of threads on a typical server.

That’s why the modern mantra of server-side programming is: “Never block!” Instead, you specify what should happen once the data is available.

This asynchronous programming style is great for servers, allowing them to handily support millions of concurrent requests. It isn’t so great for programmers.

Here is an asynchronous request with the HttpClient API:

    HttpClient.newBuilder()
        .build()
        .sendAsync(request, HttpResponse.BodyHandlers.ofString())
        .thenAccept(response -> . . .)
        .thenApply(. . .)
        .exceptionally(. . .);

What we would normally achieve with statements is now encoded as method calls. If we loved this style of programming, we would not have statements in our programming language and would merrily code in Lisp.
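
A small side-by-side sketch may help. It does not touch the network: `fetch` is a hypothetical stand-in for a slow remote call, and the class and method names are mine. The same two steps plus error handling appear once as statements and once as chained calls.

```java
import java.util.concurrent.CompletableFuture;

class StatementsVersusCalls {
    // Hypothetical stand-in for a slow remote call.
    static String fetch(String key) {
        if (key.isEmpty()) throw new IllegalArgumentException("empty key");
        return "value-for-" + key;
    }

    // Blocking style: sequencing and error handling are ordinary statements.
    static int lengthBlocking(String key) {
        try {
            String value = fetch(key);
            return value.length();
        } catch (IllegalArgumentException ex) {
            return -1;
        }
    }

    // Asynchronous style: the same steps, each encoded as a method call.
    static CompletableFuture<Integer> lengthAsync(String key) {
        return CompletableFuture.supplyAsync(() -> fetch(key))
            .thenApply(String::length)
            .exceptionally(ex -> -1);
    }

    public static void main(String[] args) {
        System.out.println(lengthBlocking("abc"));
        System.out.println(lengthAsync("abc").join());
        System.out.println(lengthAsync("").join());
    }
}
```

The try/catch block has turned into `exceptionally`, and the local variable has disappeared into the chain.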

Languages such as JavaScript and Kotlin give us “async” methods where we write statements that are then transformed to method calls like the ones that you’ve just seen. That’s nice, except it means that there are now two kinds of methods—the regular ones and the transformed ones. And you can’t mix them (the “red pill/blue pill” divide).

Project Loom takes its guidance from languages such as Erlang and Go, where blocking isn’t a big deal. You run tasks in “fibers” or “lightweight threads” or “virtual threads”. The name is up for discussion, but I prefer “fiber” since it nicely denotes the fact that multiple fibers execute in a carrier thread. Fibers are parked when a blocking operation occurs, such as waiting for a lock or for I/O. Parking is relatively cheap. A carrier thread can support a thousand fibers if each of them is parked much of the time.

Keep in mind that Project Loom does not solve all concurrency woes. It does nothing for you if you have computationally intensive tasks and want to keep all processor cores busy. It doesn’t help you with user interfaces that use a single thread (for serializing access to data structures that aren’t thread-safe). Keep using AsyncTask/SwingWorker/JavaFX Task for that use case. Project Loom is useful when you have lots of tasks that spend much of their time blocking.

NB. If you have been around for a very long time, you may remember that early versions of Java had “green threads” that were mapped to OS threads. However, there is a crucial difference. When a green thread blocked, its carrier thread was also blocked, preventing all other green threads on the same carrier thread from making progress.

KICKING THE TIRES

At this point, Project Loom is still very much exploratory. The API keeps changing, so be prepared to adapt to the latest API version when you try out the code after the holiday season.

You can download binaries of Project Loom at http://jdk.java.net/loom/, but they are updated infrequently. However, on a Linux machine or VM, it is easy to build the most current version yourself:

    git clone https://github.com/openjdk/loom
    cd loom
    git checkout fibers
    sh configure
    make images

Depending on what you have already installed, you may have a couple of failures in configure, but the messages tell you what packages you need to install so that you can proceed.

In the current version of the API, a fiber or, as it is called right now, virtual thread, is represented as an object of the Thread class. Here are three ways of producing fibers. First, there is a new factory method that can construct OS threads or virtual threads:

    Thread thread = Thread.newThread(taskname, Thread.VIRTUAL, runnable);

If you need more customization, there is a builder API:

    Thread thread = Thread.builder()
        .name(taskname)
        .virtual()
        .priority(Thread.MAX_PRIORITY)
        .task(runnable)
        .build();

However, manually creating threads has been considered a poor practice for some time, so you probably shouldn’t do either of these. Instead, use an executor with a thread factory:

    ThreadFactory factory = Thread.builder().virtual().factory();
    ExecutorService exec = Executors.newFixedThreadPool(NTASKS, factory);

Now the familiar fixed thread pool will schedule virtual threads from the factory, in the same way as it has always done. Of course there will also be OS-level carrier threads to run those virtual threads, but that’s internal to the virtual thread implementation.

The fixed thread pool will limit the total number of concurrent virtual threads. By default, the mapping from virtual threads to carrier threads is done with a fork join pool that uses as many cores as given by the system property jdk.defaultScheduler.parallelism, or by default, Runtime.getRuntime().availableProcessors(). You can supply your own scheduler in the thread factory:

    factory = Thread.builder().virtual().scheduler(myExecutor).factory();

I don’t know if this is something that one would want to do. Why have more carrier threads than cores?
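
If you are curious what the default parallelism would be on your machine, the rule described above (system property first, processor count otherwise) can be mimicked with standard calls. This is only a sketch of the rule as I described it, not the code that Loom itself runs, and the class name is invented.

```java
class SchedulerParallelism {
    // Use the system property if it is set to an integer,
    // otherwise fall back to the number of available processors.
    static int parallelism() {
        return Integer.getInteger("jdk.defaultScheduler.parallelism",
            Runtime.getRuntime().availableProcessors());
    }

    public static void main(String[] args) {
        System.out.println("Carrier threads: " + parallelism());
    }
}
```

Run it once as is, and once with `-Djdk.defaultScheduler.parallelism=4` on the command line, to see both branches.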

Back to our executor service. You execute tasks on virtual threads just like you used to execute tasks on OS level threads:

    for (int i = 1; i <= NTASKS; i++) {
        String taskname = "task-" + i;
        exec.submit(() -> run(taskname));
    }
    exec.shutdown();
    exec.awaitTermination(delay, TimeUnit.MILLISECONDS);

As a simple test, we can just sleep in each task.

    public static int DELAY = 10_000;

    public static void run(Object obj) {
        try {
            Thread.sleep((int) (DELAY * Math.random()));
        } catch (InterruptedException ex) {
            ex.printStackTrace();
        }
        System.out.println(obj);
    }

If you now set NTASKS to 1_000_000 and comment out the .virtual() in the factory builder, the program will fail with an out of memory error. A million OS level threads take a lot of memory. But with virtual threads, it works.

At least, it should work, and it did work for me with previous builds of Loom. Unfortunately, with the build I downloaded on December 5, I got a core dump. That has happened to me on and off as I experimented with Loom. Hopefully it will be fixed by the time you try this.
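
If you don’t have a Loom build at hand, here is a self-contained variant of the sleep test that runs on a stock JDK. It deliberately avoids the prototype API shown above. Instead it looks up `Executors.newVirtualThreadPerTaskExecutor` by reflection (that method became standard in JDK 21, long after this article) and falls back to a cached pool of OS threads when it is missing or unavailable. The class name, the shortened delay, and the task count are my choices.

```java
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicInteger;

class SleepTest {
    static final int DELAY = 200; // milliseconds, much shorter than in the article

    // A virtual-thread-per-task executor if this JDK offers one,
    // otherwise an ordinary cached pool of OS threads.
    static ExecutorService newExecutor() {
        try {
            return (ExecutorService) Executors.class
                .getMethod("newVirtualThreadPerTaskExecutor").invoke(null);
        } catch (ReflectiveOperationException ex) {
            return Executors.newCachedThreadPool();
        }
    }

    // Runs ntasks sleeping tasks and returns how many of them finished.
    static int runTasks(int ntasks) throws InterruptedException {
        AtomicInteger completed = new AtomicInteger();
        ExecutorService exec = newExecutor();
        for (int i = 1; i <= ntasks; i++) {
            exec.submit(() -> {
                try {
                    Thread.sleep((long) (DELAY * Math.random()));
                    completed.incrementAndGet();
                } catch (InterruptedException ex) {
                    Thread.currentThread().interrupt();
                }
            });
        }
        exec.shutdown();
        exec.awaitTermination(1, TimeUnit.MINUTES);
        return completed.get();
    }

    public static void main(String[] args) throws InterruptedException {
        System.out.println(runTasks(1_000) + " tasks completed");
    }
}
```

Keep the task count modest when the fallback is in use, since every task then gets its own OS thread.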

Now you are ready to try something more complex. Heinz Kabutz recently presented a puzzler with a program that loaded thousands of Dilbert cartoon images. For each calendar day, there is a page such as https://dilbert.com/strip/2011-06-05. The program read those pages, located the URL of the cartoon image in each page, and loaded each image. It was a mess of completable futures, somewhat like:

    CompletableFuture
        .completedFuture(getUrlForDate(date))
        .thenComposeAsync(this::readPage, executor)
        .thenApply(this::getImageUrl)
        .thenComposeAsync(this::readPage)
        .thenAccept(this::process);

With fibers, the code is much clearer:

    exec.submit(() -> {
        String page = new String(readPage(getUrlForDate(date)));
        byte[] image = readPage(getImageUrl(page));
        process(image);
    });

Sure, each call to readPage blocks, but with fibers, we don’t care.
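
Here is a runnable sketch of that blocking style. To keep it self-contained, the “web” is a hypothetical in-memory map with made-up example.com URLs, and `readPage`, `getUrlForDate`, and `getImageUrl` are simple stand-ins for the helpers in the puzzler. It uses a plain executor, so it shows the shape of the code, not the cheapness of blocking.

```java
import java.nio.charset.StandardCharsets;
import java.util.HashMap;
import java.util.Map;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;

class BlockingPipeline {
    // Hypothetical in-memory "web": URL -> content.
    static final Map<String, byte[]> WEB = new HashMap<>();
    static {
        WEB.put("https://example.com/strip/2011-06-05",
            "<img src=\"https://example.com/img/1.gif\">".getBytes(StandardCharsets.UTF_8));
        WEB.put("https://example.com/img/1.gif", new byte[] { 71, 73, 70 });
    }

    static String getUrlForDate(String date) { return "https://example.com/strip/" + date; }

    // Stands in for a blocking HTTP read.
    static byte[] readPage(String url) { return WEB.get(url); }

    // Extracts the value of the first src attribute.
    static String getImageUrl(String page) {
        int start = page.indexOf("src=\"") + 5;
        return page.substring(start, page.indexOf('"', start));
    }

    // Two dependent blocking reads, written as plain statements inside one task.
    static int loadImageSize(String date) throws Exception {
        ExecutorService exec = Executors.newSingleThreadExecutor();
        try {
            Future<Integer> size = exec.submit(() -> {
                String page = new String(readPage(getUrlForDate(date)), StandardCharsets.UTF_8);
                byte[] image = readPage(getImageUrl(page));
                return image.length;
            });
            return size.get();
        } finally {
            exec.shutdown();
        }
    }

    public static void main(String[] args) throws Exception {
        System.out.println(loadImageSize("2011-06-05"));
    }
}
```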

Try this out with something you care about. Read a large number of web pages, process them, do more blocking reads, and enjoy the fact that blocking is cheap with fibers.

STRUCTURED CONCURRENCY

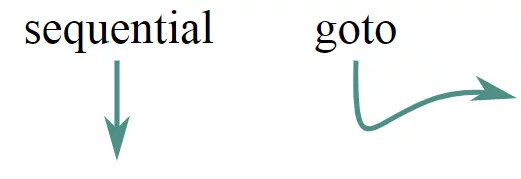

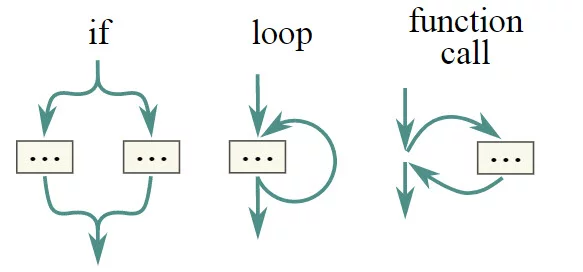

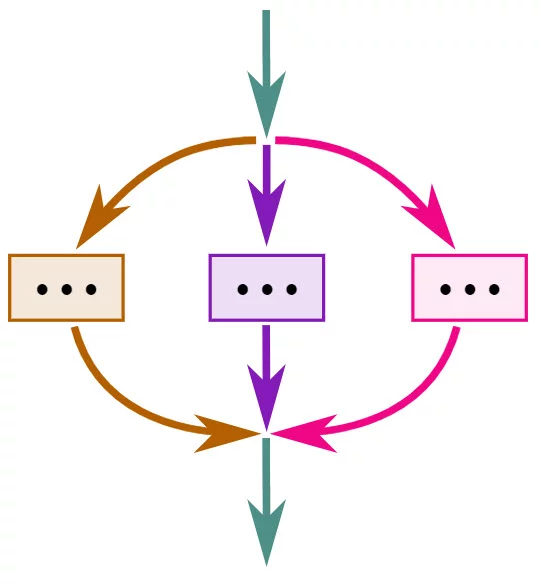

The initial motivation for Project Loom was to implement fibers, but earlier this year, the project embarked on an experimental API for structured concurrency. In this highly recommended article (from which the images in this section are taken), Nathaniel Smith proposes structured forms of concurrency. Here is his central argument. Launching a task in a new thread is really no better than programming with GOTO, i.e. harmful:

    new Thread(runnable).start();

When multiple threads run without coordination, it’s spaghetti code all over again. In the 1960s, structured programming replaced goto with branches, loops, and functions.

Now the time has come for structured concurrency. When launching concurrent tasks, we should know, from reading the program text, when they have all finished.

That way we can control the resources that the tasks use.

By summer 2019, Project Loom had an API to express structured concurrency. Unfortunately, that API is currently in tatters because of the more recent experiment in unifying the thread and fiber APIs, but you can try it with the prototype at http://jdk.java.net/loom/.

Here we schedule a number of tasks:

    FiberScope scope = FiberScope.open();
    for (int i = 0; i < NTASKS; i++) {
        int n = i; // a lambda can only capture effectively final variables
        scope.schedule(() -> run(n));
    }
    scope.close();

The call scope.close() blocks until all fibers finish. Remember—blocking is not a problem with fibers. Once the scope is closed, you know for sure that the fibers have finished.

A FiberScope is autocloseable, so you can use a try-with-resources statement:

    try (var scope = FiberScope.open()) {
        ...
    }
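
Since FiberScope only exists in the prototype, here is a rough imitation of the idea with standard classes. `TaskScope` is a hypothetical helper of my own, not part of Loom: it wraps an ordinary executor, and its `close` method blocks until every scheduled task has finished.

```java
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicInteger;

class TaskScopeDemo {
    // Hypothetical helper: close() waits for all scheduled tasks.
    static class TaskScope implements AutoCloseable {
        private final ExecutorService exec = Executors.newCachedThreadPool();

        void schedule(Runnable task) { exec.execute(task); }

        @Override public void close() {
            exec.shutdown(); // accept no new tasks, let the scheduled ones finish
            boolean interrupted = false;
            while (true) {
                try {
                    if (exec.awaitTermination(1, TimeUnit.SECONDS)) break;
                } catch (InterruptedException ex) {
                    interrupted = true; // keep waiting, restore the flag afterwards
                }
            }
            if (interrupted) Thread.currentThread().interrupt();
        }
    }

    static int runAll(int ntasks) {
        AtomicInteger finished = new AtomicInteger();
        try (TaskScope scope = new TaskScope()) {
            for (int i = 0; i < ntasks; i++) {
                scope.schedule(finished::incrementAndGet);
            }
        } // leaving the block waits for all tasks
        return finished.get();
    }

    public static void main(String[] args) {
        System.out.println(runAll(100));
    }
}
```

The point is visible in the program text: after the closing brace of the try block, no task from the scope is still running.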

But what if one of the tasks never finishes?

You can create a scope with a deadline (Instant) or timeout (Duration):

    try (var scope = FiberScope.open(Instant.now().plusSeconds(30))) {
        for (...)
            scope.schedule(...);
    }

All fibers that haven’t finished by the deadline/timeout are canceled. How? Read on.
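
The standard library already has something similar in spirit, though with OS threads: `ExecutorService.invokeAll` with a timeout cancels every task that has not completed when the time is up. The sketch below uses that existing method, not the FiberScope prototype, and the class and task names are invented.

```java
import java.util.Arrays;
import java.util.List;
import java.util.concurrent.Callable;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;
import java.util.concurrent.TimeUnit;

class TimeoutDemo {
    static Callable<String> sleeper(String name, long millis) {
        return () -> { Thread.sleep(millis); return name; };
    }

    // Returns how many tasks were cancelled because they missed the timeout.
    static long countCancelled(long timeoutMillis) throws InterruptedException {
        ExecutorService exec = Executors.newCachedThreadPool();
        try {
            List<Callable<String>> tasks = Arrays.asList(
                sleeper("fast", 10), sleeper("slow", 60_000));
            // Blocks until all tasks are done or the timeout expires, whichever comes first.
            List<Future<String>> results =
                exec.invokeAll(tasks, timeoutMillis, TimeUnit.MILLISECONDS);
            return results.stream().filter(Future::isCancelled).count();
        } finally {
            exec.shutdownNow();
        }
    }

    public static void main(String[] args) throws InterruptedException {
        System.out.println(countCancelled(500));
    }
}
```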

CANCELLATION

Cancellation has always been a pain in Java. By convention, you cancel a thread by interrupting it. If the thread is blocking, the blocking operation terminates with an InterruptedException. Otherwise the interrupted status flag is set. Getting the checks right is tedious. It is not helpful that the interrupted status can be reset, or that InterruptedException is a checked exception.
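
Here is what those checks look like in a minimal sketch (class and method names are mine). The worker has to handle both cases: the flag check for when it is running, and the exception for when it is blocked.

```java
class InterruptDemo {
    static volatile String outcome = "running";

    static void work() {
        try {
            while (!Thread.currentThread().isInterrupted()) { // non-blocking case: poll the flag
                Thread.sleep(10);                              // blocking case: throws if interrupted
            }
            outcome = "saw interrupted flag";
        } catch (InterruptedException ex) {
            // The exception clears the flag; restore it for code further up the stack.
            Thread.currentThread().interrupt();
            outcome = "caught InterruptedException";
        }
    }

    static String cancelWorker() throws InterruptedException {
        outcome = "running";
        Thread worker = new Thread(InterruptDemo::work);
        worker.start();
        Thread.sleep(100);  // let the worker get going
        worker.interrupt(); // request cancellation
        worker.join();
        return outcome;
    }

    public static void main(String[] args) throws InterruptedException {
        System.out.println(cancelWorker());
    }
}
```

Which of the two messages you see depends on where the worker happens to be when the interrupt arrives, which is part of the tedium.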

Treatment of cancellation in java.util.concurrent has been inconsistent. Consider ExecutorService.invokeAny. If any task yields a result, the others are cancelled. But CompletableFuture.anyOf lets all tasks run to completion, even though their results will be ignored.
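
You can observe the difference with a small experiment. This is a sketch with invented names; the latches are only there to make the outcome independent of thread timing.

```java
import java.util.Arrays;
import java.util.concurrent.Callable;
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.TimeUnit;

class AnyDemo {
    // invokeAny: once the fast task yields a result, the slow one is interrupted.
    static boolean slowTaskInterrupted() throws Exception {
        CountDownLatch started = new CountDownLatch(1);
        CountDownLatch interrupted = new CountDownLatch(1);
        Callable<String> slow = () -> {
            started.countDown();
            try {
                Thread.sleep(60_000);
            } catch (InterruptedException ex) {
                interrupted.countDown();
                throw ex;
            }
            return "slow";
        };
        Callable<String> fast = () -> {
            started.await(); // make sure the slow task is really running
            return "fast";
        };
        ExecutorService exec = Executors.newFixedThreadPool(2);
        try {
            String winner = exec.invokeAny(Arrays.asList(slow, fast));
            return winner.equals("fast") && interrupted.await(5, TimeUnit.SECONDS);
        } finally {
            exec.shutdownNow();
        }
    }

    // anyOf: the slow future keeps running and still completes with its own result.
    static String slowFutureResult() {
        CompletableFuture<String> fast = CompletableFuture.supplyAsync(() -> "fast");
        CompletableFuture<String> slow = CompletableFuture.supplyAsync(() -> {
            try {
                Thread.sleep(200);
            } catch (InterruptedException ex) {
                return "interrupted";
            }
            return "slow";
        });
        CompletableFuture.anyOf(fast, slow).join(); // returns as soon as one future is done
        return slow.join();
    }

    public static void main(String[] args) throws Exception {
        System.out.println(slowTaskInterrupted());
        System.out.println(slowFutureResult());
    }
}
```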

The Summer 2019 Project Loom API tackled cancellation. In that version, fibers have a cancel operation, similar to interrupt, but cancellation is irrevocable. The static Fiber.cancelled method returns true if the current fiber has been canceled.

When a scope times out, its fibers get cancelled.

Cancellation can be controlled by the following options in the FiberScope constructor.

- CANCEL_AT_CLOSE: Closing scope cancels all scheduled fibers instead of blocking
- PROPAGATE_CANCEL: If owning fiber is canceled, any newly scheduled fibers automatically canceled
- IGNORE_CANCEL: Scheduled fibers can’t be canceled

All these options are unset at the top level. The PROPAGATE_CANCEL and IGNORE_CANCEL options are inherited from the parent scope.

As you can see, there was a fair amount of tweakability. We’ll have to see what comes back when this issue is revisited. For structured concurrency, it must be automatic to cancel all fibers in the scope when the scope times out or is forcibly closed.

THREAD LOCALS

It came as a surprise to me that one of the pain points for the Project Loom implementors is ThreadLocal variables, as well as more esoteric things such as context class loaders and AccessControlContext. I had no idea so much was riding along on threads.

If you have a data structure that isn’t safe for concurrent access, you can sometimes use an instance per thread. The classic example is SimpleDateFormat. Sure, you could keep constructing new formatter objects, but that’s not efficient. So you want to share one. But a global

    public static final SimpleDateFormat dateFormat = new SimpleDateFormat("yyyy-MM-dd");

won’t work. If two threads access it concurrently, the formatting can get mangled.

So, it makes sense to have one of them per thread:

    public static final ThreadLocal<SimpleDateFormat> dateFormat =
        ThreadLocal.withInitial(() -> new SimpleDateFormat("yyyy-MM-dd"));

To access an actual formatter, call

    String dateStamp = dateFormat.get().format(new Date());

The first time you call get in a given thread, the lambda passed to withInitial is called. From then on, the get method returns the instance belonging to the current thread.
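
A quick way to convince yourself is to compare the instances that two threads see. The sketch below does that; the class and method names are made up.

```java
import java.text.SimpleDateFormat;
import java.util.Date;

class ThreadLocalDemo {
    static final ThreadLocal<SimpleDateFormat> dateFormat =
        ThreadLocal.withInitial(() -> new SimpleDateFormat("yyyy-MM-dd"));

    // True if repeated calls in this thread return the same formatter
    // while a second thread gets a different one.
    static boolean otherThreadGetsOwnInstance() throws InterruptedException {
        SimpleDateFormat mine = dateFormat.get();
        SimpleDateFormat[] theirs = new SimpleDateFormat[1];
        Thread other = new Thread(() -> theirs[0] = dateFormat.get());
        other.start();
        other.join(); // join makes the write to theirs[0] visible here
        return mine == dateFormat.get() && mine != theirs[0];
    }

    public static void main(String[] args) throws InterruptedException {
        System.out.println(dateFormat.get().format(new Date()));
        System.out.println(otherThreadGetsOwnInstance());
    }
}
```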

For threads, that is accepted practice. But do you really want to have a million instances when there are a million fibers?

This hasn’t been an issue for me because it seems easier to use something threadsafe, like a java.time formatter. But Project Loom has been pondering “scope local” objects, once those FiberScopes are reactivated.
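
For the date stamp example, the threadsafe alternative is short. `DateTimeFormatter` is documented as immutable and thread-safe, so a single shared constant does the job. The class name and the sample date are mine.

```java
import java.time.LocalDate;
import java.time.format.DateTimeFormatter;

class SharedFormatter {
    // Immutable and thread-safe: one instance can serve any number of threads or fibers.
    static final DateTimeFormatter DATE_FORMAT = DateTimeFormatter.ofPattern("yyyy-MM-dd");

    static String stamp(LocalDate date) {
        return DATE_FORMAT.format(date);
    }

    public static void main(String[] args) {
        System.out.println(stamp(LocalDate.of(2019, 12, 5)));
    }
}
```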

Thread locals have also been used as an approximation for processor locality, in situations where there are about as many threads as processors. This could be supported with an API that actually models user intent.

STATE OF THE PROJECT

Developers who want to use Project Loom are naturally preoccupied with the API which, as you have seen, is not settled. However, a lot of the implementation work is under the hood.

A crucial part is to enable parking of fibers when an operation blocks. This has been done for networking, so you can connect to web sites, databases and so on, within fibers. Parking when local file operations block is not currently supported.

In fact, reimplementations of these libraries are already in JDK 11, 12, and 13—a tribute to the utility of frequent releases.

Blocking on monitors (synchronized blocks and methods) is not yet supported, but it needs to be eventually. ReentrantLock is ok now.

If a fiber blocks in a native method, that will “pin” the thread, and none of its fibers will make progress. There is nothing that Project Loom can do about that.

Method.invoke needs more work to be supported.

Work on debugging and monitoring support is ongoing.

As already mentioned, stability is still an issue.

Most importantly, performance has a way to go. Parking and unparking fibers is not a free lunch. A section of the runtime stack needs to be replaced each time.

There has been a lot of progress in all these areas, so let’s cycle back to what developers care about: the API. This is a really good time to look at Project Loom and think about how you want to use it.

Is it of value to you that the same class represents threads and fibers? Or would you prefer some of the baggage of Thread to be chucked out? Do you buy into the promise of structured concurrency?

Take Project Loom out for a spin and see how it works with your applications and frameworks, and provide feedback for the intrepid development team!

Published on Java Code Geeks with permission by Cay Horstmann, partner at our JCG program. See the original article here: Project Loom. Opinions expressed by Java Code Geeks contributors are their own.
