Architecture Metrics

Last time we saw how major tech projects continue to be difficult to schedule. One thing that can keep momentum going for a long-running initiative is the appropriate use of metrics. Scores that improve over time make progress visible and help you stay motivated to keep going.

Let’s look at some metrics for software architectures.

Architecture is the art of making trade-offs between the quality attributes that matter most in a given context. A quality attribute is a measurable or testable property of a system that is used to indicate how well the system satisfies the needs of its stakeholders. An architecture metric should therefore be a combination of measurements of quality attributes deemed important for the architecture in question.

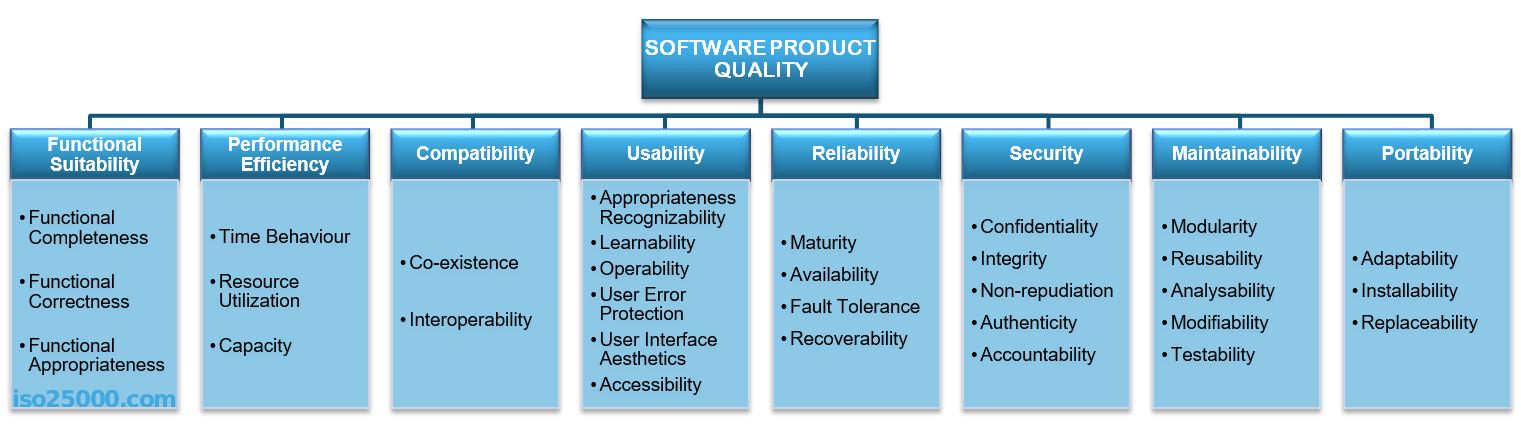

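There are many potential quality attributes to choose from. The ISO/IEC 25010 standard describes 8 categories with a total of 31 quality attributes. The categories are functional suitability, performance efficiency, compatibility, usability, reliability, security, maintainability, and portability.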

Nothing is stopping you from including additional quality attributes, of course, if they make sense in your context.

However, you should not measure all possible quality attributes. Some of them won’t make much sense in your situation. Others would be prohibitively expensive to measure. Having too many will also dilute your message. When in doubt, err on the side of leaving some out; you can always add them later. Start small and iterate as you learn.

Quality Storming is one way to determine which quality attributes are important enough to be included in your architecture metric.

Once you’ve decided what quality attributes to measure, you need to define how you will measure them. Good metrics are comparative and understandable, and they are usually ratios.

How you measure a certain quality attribute also depends on your context.

For example, McCabe’s cyclomatic complexity or Uncle Bob Martin’s distance from the main sequence are valid candidate metrics for a monolith. But those make little sense for a Microservices Architecture (MSA), since the complexity in an MSA moves from inside a service to the dependencies between the services. So if you’re living in an MSA world, maybe you should look instead at things like how much of the services’ data is exposed to other services, or what percentage of service calls cross domain boundaries.
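As a rough illustration of the last suggestion, the percentage of service calls that cross domain boundaries can be computed from a list of observed calls and a mapping from service to domain. The sketch below is only that: the function name `cross_domain_call_ratio`, the service names, and the domains are all hypothetical, and where the call data comes from (tracing, API gateway logs, diagrams) is left open.

```python
from typing import Iterable, Mapping, Tuple


def cross_domain_call_ratio(
    calls: Iterable[Tuple[str, str]],
    domain_of: Mapping[str, str],
) -> float:
    """Return the fraction of calls whose caller and callee live in
    different domains (0.0 when there are no calls at all)."""
    calls = list(calls)
    if not calls:
        return 0.0
    crossing = sum(
        1 for caller, callee in calls
        if domain_of[caller] != domain_of[callee]
    )
    return crossing / len(calls)


# Hypothetical services and domains, for illustration only.
DOMAIN_OF = {
    "orders": "sales",
    "invoicing": "sales",
    "inventory": "logistics",
    "shipping": "logistics",
}
CALLS = [
    ("orders", "invoicing"),    # stays within sales
    ("orders", "inventory"),    # crosses into logistics
    ("shipping", "inventory"),  # stays within logistics
    ("invoicing", "shipping"),  # crosses into logistics
]

ratio = cross_domain_call_ratio(CALLS, DOMAIN_OF)
print(f"{ratio:.0%} of calls cross a domain boundary")  # prints 50% ...
```

Because the result is a ratio between 0 and 1, it stays comparable as the number of services grows, which is harder to achieve with a raw count of cross-domain calls.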

When defining how to measure a quality attribute, think about how you’re going to collect the required data. If at all possible, automate the data collection. This will allow you to update the metrics more often, and it will probably also be less error-prone.

Some data could be collected by scanning the source code, e.g. for cyclomatic complexity. Other data could come from architecture diagrams, in particular container diagrams. Having a standard for those allows you to parse the diagrams for automated data collection. A threat model is a good source of data for security-related metrics.
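To make the source-scanning idea concrete, here is a minimal sketch that approximates cyclomatic complexity for Python functions using the standard library’s `ast` module. It is a simplification under stated assumptions: it counts only a handful of branching constructs, it attributes the branches of nested functions to the enclosing function as well, and the helper name `cyclomatic_complexity` is made up for this example. In practice you would more likely rely on an existing static analysis tool for your language.

```python
import ast

# Node types that each add one decision point. Comprehensions and
# match statements are ignored in this simplified count.
_BRANCH_NODES = (ast.If, ast.For, ast.While, ast.ExceptHandler, ast.IfExp)


def cyclomatic_complexity(source: str) -> dict:
    """Map each function name in `source` to an approximate complexity."""
    result = {}
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, (ast.FunctionDef, ast.AsyncFunctionDef)):
            complexity = 1  # one path through a function without branches
            for child in ast.walk(node):
                if isinstance(child, _BRANCH_NODES):
                    complexity += 1
                elif isinstance(child, ast.BoolOp):
                    # `a and b and c` adds two extra decision points
                    complexity += len(child.values) - 1
            result[node.name] = complexity
    return result


SAMPLE = '''
def classify(n):
    if n < 0:
        return "negative"
    for d in range(2, n):
        if n % d == 0 and d > 1:
            return "composite"
    return "other"
'''

print(cyclomatic_complexity(SAMPLE))  # prints {'classify': 5}
```

A script like this can run in a build pipeline, so the metric is refreshed on every commit rather than collected by hand.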

Once you’ve defined how to measure all the quality attributes, the last step is to merge all those numbers into a single number that describes the overall quality of the architecture. You will most likely want to calculate a weighted average. To perform this calculation, you’ll need to define weights that indicate the relative importance of the quality attributes. The outcomes of Quality Storming can help with setting those weights.
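The weighted average itself is simple arithmetic. The sketch below assumes that every quality attribute has already been normalized to the same 0 to 1 scale, where higher is better; that normalization step is an assumption of this example and not something the calculation does for you. The attribute names, scores, and weights are invented for illustration.

```python
def architecture_score(scores: dict, weights: dict) -> float:
    """Weighted average of per-attribute scores on a common 0-1 scale."""
    if set(scores) != set(weights):
        raise ValueError("scores and weights must cover the same attributes")
    total_weight = sum(weights.values())
    if total_weight <= 0:
        raise ValueError("weights must sum to a positive number")
    weighted_sum = sum(scores[name] * weights[name] for name in scores)
    return weighted_sum / total_weight


# Hypothetical measurements and weights, for illustration only.
SCORES = {"maintainability": 0.6, "security": 0.8, "performance": 0.5}
WEIGHTS = {"maintainability": 3, "security": 2, "performance": 1}

# (0.6 * 3 + 0.8 * 2 + 0.5 * 1) / (3 + 2 + 1) = 3.9 / 6
print(f"{architecture_score(SCORES, WEIGHTS):.2f}")  # prints 0.65
```

Dividing by the sum of the weights means the weights do not have to add up to 1, so they can be expressed as simple relative priorities.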

What do you think? Do you define metrics for your architectures? If so, how? And in what way have they helped or hindered you? Please leave a comment below.

| Published on Java Code Geeks with permission by Remon Sinnema, partner at our JCG program. See the original article here: Architecture Metrics. Opinions expressed by Java Code Geeks contributors are their own. |