A Gentle Introduction to Kubernetes

Kubernetes (often abbreviated as “K8s”) is an open-source platform for managing containerized workloads and services. It was originally designed by Google and is now maintained by the Cloud Native Computing Foundation (CNCF).

Kubernetes allows developers to deploy, scale, and manage containerized applications and services across a cluster of computers. It provides a number of features that make it easier to manage containerized applications, including automated scaling, self-healing, load balancing, and service discovery.

Kubernetes is particularly popular in the world of cloud computing, where it is used to manage containerized workloads across multiple cloud providers and on-premises data centers. It is often used in conjunction with other cloud-native technologies like Docker, Prometheus, and Istio to create modern, scalable, and resilient cloud applications.

1. Kubernetes Architecture

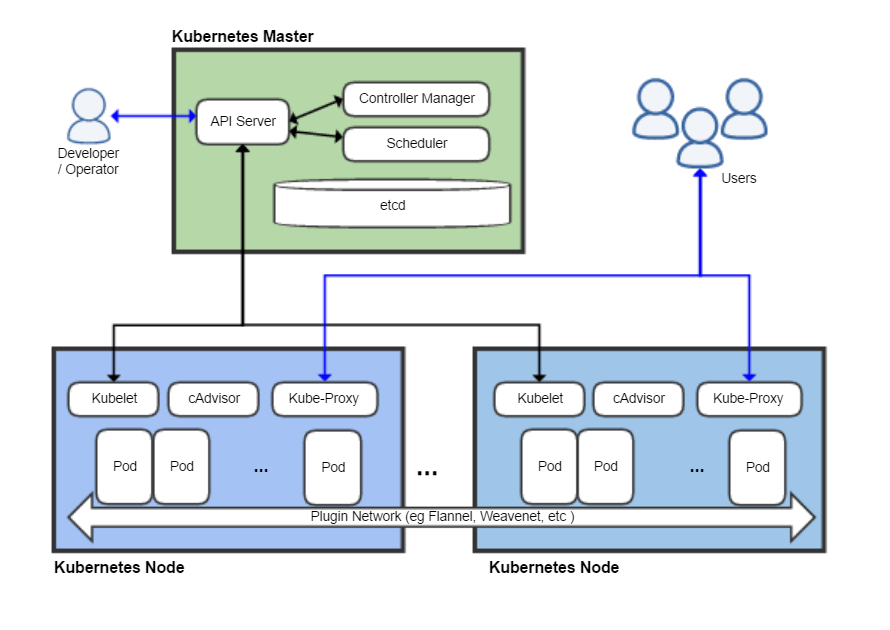

Kubernetes has a distributed architecture designed to manage containerized workloads and services across a cluster of machines. At a high level, it consists of a set of master nodes (called control plane nodes in current Kubernetes documentation) that control a set of worker nodes. Here is a brief overview of the key components in the Kubernetes architecture:

- Master nodes: The master nodes are responsible for managing the overall state of the Kubernetes cluster. They run a number of components, including the Kubernetes API server, etcd (a distributed key-value store), the Kubernetes controller manager, and the Kubernetes scheduler. These components work together to manage the deployment, scaling, and monitoring of containerized applications.

- Worker nodes: The worker nodes are responsible for running containerized workloads and services. Each worker node runs a container runtime (such as containerd or CRI-O) and a Kubernetes agent (known as the kubelet) that communicates with the master nodes.

- Kubernetes API server: The Kubernetes API server is the central component of the Kubernetes control plane. It exposes a RESTful API that allows administrators and developers to manage the Kubernetes cluster.

- etcd: etcd is a distributed key-value store that is used by Kubernetes to store cluster state information. This allows the master nodes to share information about the state of the cluster and coordinate actions.

- Kubernetes controller manager: The Kubernetes controller manager runs the controllers that maintain the desired state of the cluster. For example, the ReplicaSet controller (the successor to the older replication controller) ensures that a specified number of replicas of a given pod are running at all times.

- Kubernetes scheduler: The Kubernetes scheduler is responsible for scheduling pods (the smallest deployable units in Kubernetes) onto worker nodes. It takes into account factors such as resource availability, pod affinity/anti-affinity, and workload balancing when making scheduling decisions.

- Kubelet: The kubelet is the primary node agent that runs on each worker node. It communicates with the Kubernetes API server and manages the state of the pods running on that node.

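- Container runtime: The container runtime is the software responsible for running containers on a given node. Kubernetes works with any runtime that implements the Container Runtime Interface (CRI), such as containerd and CRI-O. Built-in Docker Engine support (dockershim) was removed in Kubernetes 1.24, so Docker Engine now requires the cri-dockerd adapter, and rkt is no longer maintained.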

In summary, the Kubernetes architecture is designed to provide a scalable and reliable platform for managing containerized workloads and services. Its distributed nature allows it to manage large clusters of machines, while its modular design allows it to be customized to meet the specific needs of a wide variety of applications.
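The scheduler bullet above describes a decision based on resource availability. A minimal sketch of that idea, assuming a two-step "filter, then score" approach, might look like the following. The `Node` and `PodRequest` classes, their field names, and the scoring rule are illustrative assumptions, not the real scheduler's data model or algorithm.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Node:
    name: str
    free_cpu_millis: int   # CPU not yet reserved by other Pods, in millicores
    free_memory_mib: int   # memory not yet reserved by other Pods, in MiB


@dataclass
class PodRequest:
    name: str
    cpu_millis: int
    memory_mib: int


def schedule(pod: PodRequest, nodes: List[Node]) -> Optional[str]:
    """Return the name of the chosen node, or None if no node can fit the pod."""
    # Filter step: drop nodes that cannot satisfy the pod's resource requests.
    feasible = [
        n for n in nodes
        if n.free_cpu_millis >= pod.cpu_millis
        and n.free_memory_mib >= pod.memory_mib
    ]
    if not feasible:
        return None  # in a real cluster the Pod would stay Pending

    # Score step (hypothetical rule): prefer the node left with the most
    # spare CPU after placement, breaking ties on spare memory.
    best = max(
        feasible,
        key=lambda n: (n.free_cpu_millis - pod.cpu_millis,
                       n.free_memory_mib - pod.memory_mib),
    )
    return best.name


nodes = [
    Node("node-a", free_cpu_millis=500, free_memory_mib=1024),
    Node("node-b", free_cpu_millis=2000, free_memory_mib=4096),
    Node("node-c", free_cpu_millis=4000, free_memory_mib=512),
]
print(schedule(PodRequest("web", cpu_millis=1000, memory_mib=1024), nodes))
```

Here only `node-b` has enough of both resources, so it is the one selected. The real scheduler applies many more filters and scoring plugins (affinity, taints, topology spread, and so on).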

2. K8s Master Server Components

The master server components in a Kubernetes cluster are responsible for managing the overall state of the cluster, and for providing the API that clients use to interact with the cluster. Here are the key components that run on a Kubernetes master server:

- Kubernetes API server: The API server is the central component of the Kubernetes control plane. It exposes the Kubernetes API that clients use to interact with the cluster. It receives requests from clients (such as the kubectl command-line tool), validates them, and updates the etcd data store with the new state of the cluster.

- etcd: etcd is a distributed key-value store that is used by Kubernetes to store all of its state information. It is the source of truth for the state of the entire cluster, and all changes to the state of the cluster are stored in etcd. The API server communicates with etcd to read and write cluster state information.

- Kubernetes controller manager: The controller manager is responsible for running a set of controllers that help to maintain the desired state of the cluster. These controllers include the ReplicaSet controller, the Deployment controller, and the Service controller, among others. Each controller is responsible for ensuring that a particular aspect of the cluster (such as the number of replicas of a particular pod) is always in the desired state.

- Kubernetes scheduler: The scheduler is responsible for assigning pods to nodes in the cluster. It takes into account various factors such as resource availability, node affinity/anti-affinity, and workload balancing when making scheduling decisions.

Together, these components provide the core functionality of the Kubernetes control plane and continuously drive the cluster toward its desired state. In addition to these core components, clusters usually run add-ons such as the cluster DNS server, the Kubernetes Dashboard, and a Prometheus monitoring stack. These add-ons typically run as ordinary Pods inside the cluster rather than as part of the control plane itself.
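The controllers described above all follow the same pattern: compare the desired state with the observed state and act on the difference. The sketch below illustrates that reconcile step for a replica count. The function name, the `web-N` naming scheme, and the choice of which Pods to delete are assumptions made for illustration; real ReplicaSet Pods get generated names and the real controller uses its own deletion ordering.

```python
from typing import List, Tuple


def reconcile(desired_replicas: int,
              running_pods: List[str],
              name_prefix: str = "web") -> Tuple[List[str], List[str]]:
    """Return (pods_to_create, pods_to_delete) that close the gap
    between the desired replica count and the Pods currently running."""
    diff = desired_replicas - len(running_pods)

    if diff > 0:
        # Too few Pods: pick unused names until the gap is closed.
        existing = set(running_pods)
        to_create: List[str] = []
        index = 0
        while len(to_create) < diff:
            candidate = f"{name_prefix}-{index}"
            if candidate not in existing:
                to_create.append(candidate)
            index += 1
        return to_create, []

    if diff < 0:
        # Too many Pods: remove the surplus (here, the last names in sorted order).
        return [], sorted(running_pods)[diff:]

    # Observed state already matches desired state: nothing to do.
    return [], []


# One Pod is running but three are wanted, so two more are requested.
print(reconcile(3, ["web-0"]))
```

A real controller runs this comparison repeatedly, triggered by changes it observes through the API server, so the cluster converges on the desired state even after failures.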

3. Node Server Components

In a Kubernetes cluster, a Node is a worker machine that runs Pods. Nodes are responsible for running the containerized applications and providing the necessary resources (such as CPU, memory, and storage) to run those applications. Here are the key components that run on a Kubernetes Node:

- kubelet: The kubelet is an agent that runs on each Node in the cluster. It is responsible for starting, stopping, and monitoring the Pods that are scheduled on the Node. The kubelet communicates with the Kubernetes API server to receive instructions about which Pods to run, and it reports the status of the Pods back to the API server.

- kube-proxy: kube-proxy runs on each Node and implements the Service abstraction. It watches Services and their backing Pods and maintains network rules (for example, iptables or IPVS rules) that use network address translation (NAT) to forward traffic sent to a Service’s virtual IP address to one of those Pods.

- Container runtime: The container runtime is the software that runs the containers that make up the Pods. Kubernetes supports runtimes that implement the Container Runtime Interface (CRI), including containerd and CRI-O. Docker Engine can be used through the cri-dockerd adapter.

- Pod: A Pod is the smallest deployable unit in Kubernetes. It consists of one or more containers that share the same network namespace and storage volumes. Pods run on Nodes, and they can be scheduled on any Node in the cluster based on resource availability.

- cAdvisor: The Container Advisor (cAdvisor) collects resource usage and performance metrics for each container running on the Node. It is built into the kubelet rather than deployed as a separate agent. These metrics feed monitoring and autoscaling; the scheduler places Pods based on their declared resource requests, not on live usage.

Together, these components provide the core functionality of a Kubernetes Node. The kubelet manages the Pods scheduled to the Node, kube-proxy handles Service traffic, the container runtime runs the containers that make up each Pod, and cAdvisor reports resource usage and performance metrics for those containers.
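To make the Pod description above more concrete, the following sketch builds a Pod manifest with two containers that share one storage volume. The Pod name, container names, image tags, and mount paths are placeholders chosen for illustration. The manifest is emitted as JSON, which `kubectl apply -f` accepts alongside YAML.

```python
import json
from typing import Any, Dict


def make_pod_manifest(name: str, app_image: str, sidecar_image: str) -> Dict[str, Any]:
    """Build a Pod manifest whose two containers mount the same emptyDir volume."""
    shared_volume = "shared-logs"  # hypothetical volume name
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name, "labels": {"app": name}},
        "spec": {
            # An emptyDir volume lives as long as the Pod does.
            "volumes": [{"name": shared_volume, "emptyDir": {}}],
            "containers": [
                {
                    "name": "app",
                    "image": app_image,
                    "volumeMounts": [
                        {"name": shared_volume, "mountPath": "/var/log/app"}
                    ],
                },
                {
                    "name": "log-reader",
                    "image": sidecar_image,
                    "volumeMounts": [
                        {"name": shared_volume, "mountPath": "/logs", "readOnly": True}
                    ],
                },
            ],
        },
    }


manifest = make_pod_manifest("web", "nginx:1.27", "busybox:1.36")
print(json.dumps(manifest, indent=2))
```

Because both containers belong to the same Pod, they also share a network namespace, so they can reach each other on `localhost`.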

4. How Services interact with Pod networking in a Kubernetes cluster

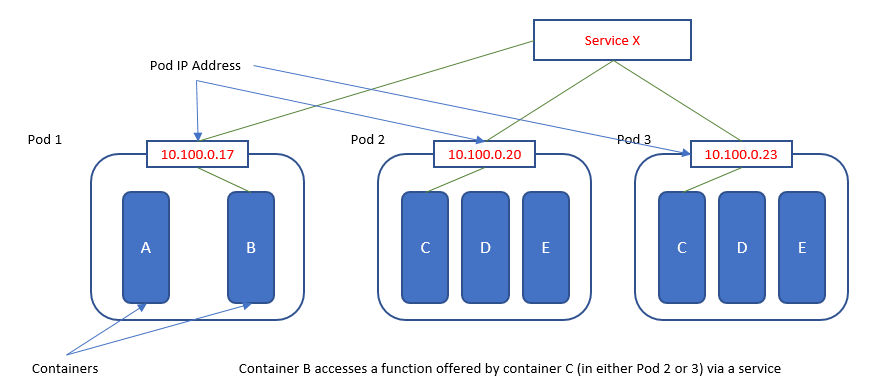

In a Kubernetes cluster, a Service gives clients a single, stable IP address and DNS name for a set of Pods, even as the underlying Pods are created and destroyed. By default (type ClusterIP), a Service is reachable only from inside the cluster; the NodePort and LoadBalancer types, or an Ingress, make it reachable from outside.

Here’s how Services interact with Pod networking in a Kubernetes cluster:

- Pods are created and managed by Kubernetes Deployments or ReplicaSets. Each Pod is assigned a unique IP address, which is used for inter-Pod communication within the cluster.

- Services provide a way to expose a set of Pods to the network. When a Service is created, Kubernetes assigns it a stable IP address and DNS name.

- The Service uses labels to select a set of Pods to expose. When a client sends a request to the Service’s IP address, the request is forwarded to one of the selected Pods. This allows the client to access the Pods through a single, stable IP address and DNS name, even as the underlying Pods change.

- kube-proxy implements the Service’s forwarding behavior, by default with iptables rules on Linux (an IPVS mode is also available). When a request is sent to the Service’s IP address, the rules redirect it to one of the selected Pods chosen at random, or to the Pod that previously served the same client IP if the Service sets session affinity to ClientIP.

- The load balancing algorithm is not configured on the Service itself. In iptables mode, backends are chosen at random. When kube-proxy runs in IPVS mode, the cluster administrator can choose a scheduling algorithm such as round-robin, least connections, or source hashing, and that choice applies to all Services.

In summary, Services provide a way to expose a set of Pods to the network, and allow clients to access the Pods using a single, stable IP address and DNS name. kube-proxy implements the Service’s forwarding behavior with iptables or IPVS rules, and the way traffic is distributed among the selected Pods depends on the kube-proxy mode and on the Service’s session affinity setting.
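The selection and forwarding steps above can be sketched in a few lines. This is a simplified in-memory model, assuming random backend selection and optional ClientIP session affinity; the `ServiceSketch` class and the Pod dictionaries are hypothetical and do not reflect how kube-proxy is implemented.

```python
import random
from typing import Dict, List, Optional


def select_pods(selector: Dict[str, str], pods: List[dict]) -> List[str]:
    """Return the IPs of Pods whose labels contain every selector key/value pair."""
    return [
        p["ip"] for p in pods
        if all(p["labels"].get(k) == v for k, v in selector.items())
    ]


class ServiceSketch:
    def __init__(self, selector: Dict[str, str],
                 session_affinity: str = "None",
                 rng: Optional[random.Random] = None) -> None:
        self.selector = selector
        self.session_affinity = session_affinity  # "None" or "ClientIP"
        self._sticky: Dict[str, str] = {}          # client IP -> backend Pod IP
        self._rng = rng or random.Random()

    def route(self, client_ip: str, pods: List[dict]) -> Optional[str]:
        """Pick the Pod IP that should receive a request from client_ip."""
        backends = select_pods(self.selector, pods)
        if not backends:
            return None  # no Pod currently matches the selector
        if self.session_affinity == "ClientIP":
            remembered = self._sticky.get(client_ip)
            if remembered in backends:
                return remembered  # same client keeps the same backend
        choice = self._rng.choice(backends)
        if self.session_affinity == "ClientIP":
            self._sticky[client_ip] = choice
        return choice


pods = [
    {"ip": "10.244.1.5", "labels": {"app": "web"}},
    {"ip": "10.244.2.7", "labels": {"app": "web"}},
    {"ip": "10.244.3.9", "labels": {"app": "db"}},
]
service = ServiceSketch({"app": "web"}, session_affinity="ClientIP")
print(service.route("192.0.2.10", pods))
```

Because the backend list is recomputed from labels on every call, a replaced Pod with a new IP is picked up automatically, which is the property that lets clients keep using one stable address.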

5. Kubernetes Objects and Workloads

In Kubernetes, objects are the basic building blocks used to define and deploy applications. Workloads are a type of object that define how applications should be deployed and managed in the cluster. Here are the most common Kubernetes objects and workloads:

- Pods: A Pod is the smallest deployable unit in Kubernetes. It consists of one or more containers that share the same network namespace and storage volumes. Pods are used to deploy and run individual containers, and they are managed by Kubernetes controllers such as Deployments or ReplicaSets.

- Deployments: A Deployment is a higher-level object that manages the creation and scaling of Pods through ReplicaSets. It ensures that a specified number of replica Pods are running at all times, and it can roll out updates gradually by creating a new ReplicaSet and scaling down the old one, which allows updates without downtime.

- ReplicaSets: A ReplicaSet is a Kubernetes object that ensures a specified number of replica Pods are running at all times. It is used to provide fault tolerance and scaling for stateless applications.

- StatefulSets: A StatefulSet is a Kubernetes object that manages the deployment and scaling of stateful applications. It gives each Pod a unique, stable network identity and stable persistent storage. By default it creates and deletes Pods in order, and parallel Pod management can be enabled instead.

- DaemonSets: A DaemonSet is a Kubernetes object that ensures that a copy of a Pod is running on each Node in the cluster. It is used for deploying system-level services such as logging and monitoring agents.

- Jobs: A Job is a Kubernetes object that creates one or more Pods to run a batch task. It tracks the Pods until the required number complete successfully, retrying failed Pods as needed, and is then marked complete.

- CronJobs: A CronJob is a Kubernetes object that creates Jobs on a schedule. It is used to run periodic or scheduled jobs.

- Services: A Service is a Kubernetes object that provides a stable IP address and DNS name for a set of Pods. It gives clients a single, stable endpoint for reaching those Pods from inside the cluster and, depending on the Service type, from outside it.

- ConfigMaps: A ConfigMap is a Kubernetes object that provides a way to store configuration data as key-value pairs. It is used to separate application configuration from application code.

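- Secrets: A Secret is a Kubernetes object that provides a way to store sensitive data such as passwords and API keys separately from Pod definitions and container images. Secret values are only base64-encoded and are stored unencrypted in etcd by default, so protecting them requires enabling encryption at rest and restricting access with RBAC.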
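Values under the `data` field of a Secret manifest are base64-encoded, which is an encoding and not encryption. The short sketch below shows this; the Secret name, key, and value are made up for illustration.

```python
import base64
from typing import Any, Dict


def make_secret(name: str, values: Dict[str, str]) -> Dict[str, Any]:
    """Build a Secret manifest with base64-encoded values under 'data'."""
    return {
        "apiVersion": "v1",
        "kind": "Secret",
        "metadata": {"name": name},
        "type": "Opaque",
        "data": {
            key: base64.b64encode(value.encode("utf-8")).decode("ascii")
            for key, value in values.items()
        },
    }


def read_secret_value(secret: Dict[str, Any], key: str) -> str:
    """Decode a value again; no key or password is needed to do so."""
    return base64.b64decode(secret["data"][key]).decode("utf-8")


secret = make_secret("db-credentials", {"password": "hunter2"})
print(secret["data"]["password"])              # encoded form stored in the manifest
print(read_secret_value(secret, "password"))   # anyone with read access can decode it
```

Anyone who can read the Secret object can recover the original value, which is why access control and encryption at rest matter.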

In summary, Kubernetes objects are the basic building blocks used to define and deploy applications, while workloads define how applications should be deployed and managed in the cluster. The most common Kubernetes objects and workloads include Pods, Deployments, ReplicaSets, StatefulSets, DaemonSets, Jobs, CronJobs, Services, ConfigMaps, and Secrets.
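As an illustration of how a workload object is declared, here is a sketch that builds a Deployment manifest and checks that its label selector matches the labels on its Pod template, which the API requires. The helper names, the `app` label, and the image tag are assumptions for this example.

```python
import json
from typing import Any, Dict


def make_deployment(name: str, image: str, replicas: int) -> Dict[str, Any]:
    """Build a minimal apps/v1 Deployment manifest."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name},
        "spec": {
            "replicas": replicas,
            # The selector tells the Deployment which Pods belong to it.
            "selector": {"matchLabels": dict(labels)},
            "template": {
                "metadata": {"labels": dict(labels)},
                "spec": {"containers": [{"name": name, "image": image}]},
            },
        },
    }


def selector_matches_template(deployment: Dict[str, Any]) -> bool:
    """Check that every selector label is present on the Pod template."""
    wanted = deployment["spec"]["selector"]["matchLabels"]
    actual = deployment["spec"]["template"]["metadata"]["labels"]
    return all(actual.get(key) == value for key, value in wanted.items())


deployment = make_deployment("web", "nginx:1.27", replicas=3)
assert selector_matches_template(deployment)
print(json.dumps(deployment, indent=2))
```

The printed JSON can be saved to a file and submitted with `kubectl apply -f`, after which the Deployment creates a ReplicaSet that keeps three Pods running.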

6. Kubernetes Networking Components

In a Kubernetes cluster, networking is a crucial component that enables communication between different Pods and Services. Here are the key networking components in Kubernetes:

- Cluster network: The cluster network is the underlying network that connects all the Nodes in the cluster. It is provided by the infrastructure the cluster runs on, and network plugins such as Flannel, Calico, or Weave Net build the Pod network on top of it.

- Pod network: The Pod network is a virtual network that connects all the Pods in the cluster. Each Pod is assigned a unique IP address within the Pod network. The Pod network is implemented using a container network interface (CNI) plugin, which creates virtual network interfaces for each Pod.

- Service network: The Service network is a virtual network that provides a stable IP address and DNS name for a set of Pods. Each Service is assigned a unique IP address within the Service network, which is used to route traffic to the Pods that the Service is responsible for.

- kube-proxy: kube-proxy is a network proxy that runs on each Node in the cluster. It watches Services and their backing Pods and maintains network rules that use network address translation (NAT) to forward traffic sent to a Service’s virtual IP address to one of those Pods.

- Ingress: An Ingress is a Kubernetes object that provides external access to Services in the cluster. It exposes a set of Services through a single IP address and hostname, with routing rules based on the host and path of HTTP and HTTPS requests. An Ingress has no effect on its own: an Ingress controller (for example, one based on NGINX) must be running in the cluster to act on it.

- Network policies: Network policies are a Kubernetes feature that allows you to define rules for network traffic within the cluster. A policy selects Pods by label and restricts their incoming and outgoing traffic based on Pod labels, namespaces, IP address ranges, ports, and protocols. Policies are enforced only if the cluster’s network plugin supports them.
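To make the Pod network bullet above more concrete: one common arrangement, assumed here, is to give each Node its own slice of a cluster-wide Pod address range so that every Pod IP is unique. The sketch below uses Python's `ipaddress` module; the `10.244.0.0/16` range, the `/24` slice size, and the node names are illustrative choices, and not every network plugin allocates addresses this way.

```python
import ipaddress
from typing import Dict, List


def allocate_pod_cidrs(cluster_cidr: str,
                       node_names: List[str],
                       node_prefix: int = 24) -> Dict[str, ipaddress.IPv4Network]:
    """Assign each node a distinct subnet carved out of the cluster Pod range."""
    network = ipaddress.ip_network(cluster_cidr)
    subnets = network.subnets(new_prefix=node_prefix)
    allocation = dict(zip(node_names, subnets))
    if len(allocation) < len(node_names):
        raise ValueError("cluster CIDR is too small for this many nodes")
    return allocation


cidrs = allocate_pod_cidrs("10.244.0.0/16", ["node-a", "node-b", "node-c"])
for node, cidr in cidrs.items():
    print(node, cidr)

# A Pod IP identifies which node's range it came from.
pod_ip = ipaddress.ip_address("10.244.1.5")
print([node for node, cidr in cidrs.items() if pod_ip in cidr])
```

With non-overlapping ranges per Node, routing a packet to a Pod reduces to routing it to the Node that owns the matching range.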

Together, these components provide the core networking functionality of a Kubernetes cluster. The cluster network connects all the Nodes in the cluster, while the Pod network provides a virtual network that connects all the Pods. The Service network provides a stable IP address and DNS name for a set of Pods, and the kube-proxy manages network traffic between different Pods and Services. Ingress provides external access to Services, and network policies can be used to define rules for network traffic within the cluster.
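The host and path routing that an Ingress describes can be sketched as a small lookup. This is a simplified model, assuming prefix matching on whole path elements with the longest matching path winning; the rule format, hostnames, and Service names are hypothetical, and real Ingress controllers support more options.

```python
from typing import Dict, List, Optional


def path_matches(prefix: str, request_path: str) -> bool:
    """Prefix match on whole path elements: /api matches /api/v1 but not /apiary."""
    prefix_parts = [part for part in prefix.split("/") if part]
    path_parts = [part for part in request_path.split("/") if part]
    return path_parts[:len(prefix_parts)] == prefix_parts


def route(rules: List[Dict[str, str]], host: str, request_path: str) -> Optional[str]:
    """Return the Service name for a request, or None if no rule matches."""
    candidates = [
        rule for rule in rules
        if rule["host"] == host and path_matches(rule["path"], request_path)
    ]
    if not candidates:
        return None
    # When several paths match, the longest one takes precedence.
    return max(candidates, key=lambda rule: len(rule["path"]))["service"]


rules = [
    {"host": "shop.example.com", "path": "/", "service": "frontend"},
    {"host": "shop.example.com", "path": "/api", "service": "api"},
]
print(route(rules, "shop.example.com", "/api/v1/items"))
print(route(rules, "shop.example.com", "/apiary"))
```

The first request goes to the `api` Service and the second falls back to `frontend`, because `/apiary` does not match `/api` on a path-element boundary.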

7. Conclusion

Kubernetes is a powerful open-source container orchestration platform that helps automate the deployment, scaling, and management of containerized applications. It provides a rich set of features and components, including a master server, node components, networking components, and a wide range of objects and workloads, to help streamline the management and deployment of complex containerized applications. With Kubernetes, developers and IT teams can easily deploy and manage applications across multiple environments, including on-premises data centers, public clouds, and hybrid environments. Overall, Kubernetes offers a flexible and powerful solution for deploying and managing containerized applications at scale.