Java Quarkus LangChain4j – Building a Chatbot

Quarkus is a modern Java framework designed for cloud-native applications, offering fast startup times and efficient resource usage. LangChain4j, inspired by LangChain, simplifies integrating Large Language Models (LLMs) into Java applications. By combining Java, Quarkus, and LangChain4j, developers can build AI-driven solutions that are both powerful and scalable, deploying natural language capabilities such as chatbots and text analysis inside robust, high-performance applications.

1. Overview

In the rapidly evolving landscape of artificial intelligence, chatbots have become indispensable tools for enhancing user experiences across various domains, from customer support to personal assistants. At the heart of these advanced conversational agents are Large Language Models (LLMs), which bring a new level of sophistication to human-computer interactions.

This tutorial shows how to use two tools, Quarkus and LangChain4j, to create chatbots that are efficient and scalable and that can understand and generate human-like responses. Quarkus, with its fast startup times and optimized memory usage, is a strong choice for developing reactive microservices. LangChain4j, in turn, provides a common API over many LLM providers together with higher-level building blocks such as AI services and chat memory, which makes it a natural fit for working with LLMs.

Whether you are a seasoned developer or a newcomer to the world of chatbots, this tutorial will provide you with the knowledge and practical skills needed to build intelligent and responsive chatbots. We will start by introducing the fundamentals of Quarkus and LangChain4j, then move on to integrating these tools to harness the full potential of LLMs. By the end of this journey, you will be equipped to deploy chatbots that can seamlessly handle a wide range of conversational scenarios.

Let’s embark on this exciting journey to build the next generation of chatbots!

2. LLMs

Large Language Models (LLMs) are advanced AI systems designed to understand, interpret, and generate human language. These models are trained on vast amounts of text data, enabling them to grasp complex linguistic patterns, context, and even subtle nuances in language. LLMs can perform a wide range of tasks, such as translating languages, answering questions, summarizing texts, and engaging in conversations. They have significantly advanced the field of natural language processing, making interactions with machines more intuitive and human-like, and they are changing how we interact with technology across many domains.

LangChain4j is a Java library inspired by LangChain that simplifies integrating these LLMs into Java applications. It supports a variety of model providers, including OpenAI GPT models and models hosted on Hugging Face, and offers building blocks for text generation, document classification, and conversational AI. The library also supports embedding models and embedding (document) stores, which adds flexibility when building AI solutions. By incorporating LLMs through LangChain4j, developers can create intelligent, responsive, and scalable applications across diverse domains.

Quarkus is a modern Java framework designed to optimize Java applications for Kubernetes and cloud environments. It stands out due to its fast startup times and low memory footprint, which make it well suited to serverless and microservices architectures. Quarkus achieves these efficiencies through build-time processing, optional ahead-of-time compilation to native executables, and container-first principles, allowing developers to build and deploy applications quickly. With a strong emphasis on developer productivity, Quarkus offers features like live coding and a large ecosystem of extensions, enabling rapid development and easy integration of various technologies. This makes Quarkus a compelling choice for hosting and deploying high-performance, cloud-native Java applications such as LLM-backed services.

By leveraging Quarkus and LangChain4j together, developers can create robust and scalable AI-driven applications. LangChain4j makes it easy to connect with various LLMs, enabling functionalities like natural language understanding, content generation, and conversational agents.

Together, Quarkus and LangChain4j let developers build sophisticated applications for many use cases, including:

- Customer Service

- Content Creation

- Digital Assistants

- Creative Work

- Branding and Advertising

- Virtual Assistants

Docker for Quarkus

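Let us first look at the Dockerfile, src/main/docker/Dockerfile.jvm, which starts from the Red Hat UBI 8 OpenJDK 17 image. The Maven build produces the quarkus-run.jar launcher together with its lib, app, and quarkus directories under target/quarkus-app; the Dockerfile copies each of these into /deployments as a separate layer and runs quarkus-run.jar, exposing port 8080.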

```dockerfile
FROM registry.access.redhat.com/ubi8/openjdk-17:1.11

ENV LANGUAGE='en_US:en'

# We make four distinct layers so if there are application changes the library layers can be re-used
COPY --chown=185 target/quarkus-app/lib/ /deployments/lib/
COPY --chown=185 target/quarkus-app/*.jar /deployments/
COPY --chown=185 target/quarkus-app/app/ /deployments/app/
COPY --chown=185 target/quarkus-app/quarkus/ /deployments/quarkus/

EXPOSE 8080
USER 185
ENV JAVA_OPTS="-Dquarkus.http.host=0.0.0.0 -Djava.util.logging.manager=org.jboss.logmanager.LogManager"
ENV JAVA_APP_JAR="/deployments/quarkus-run.jar"
```

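Now, let us look at the Docker Compose file, docker-compose.yml. It starts a Redis container, which stores the chat history, and builds the chatbot image from the Dockerfile. Replace the placeholder OpenAI API key and organization ID with your own values before starting it.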

```yaml
services:
  redis:
    image: redis:7-alpine
    hostname: cacheq
    ports:
      - "6379:6379"
  chatbot:
    hostname: chatbot
    build:
      context: .
      dockerfile: src/main/docker/Dockerfile.jvm
    # Start Redis before the chatbot, since chat memory is stored there
    depends_on:
      - redis
    environment:
      QUARKUS_REDIS_HOSTS: redis://cacheq:6379
      # Replace the placeholders with your own OpenAI credentials
      QUARKUS_LANGCHAIN4J_OPENAI_API_KEY: "xxxx"
      QUARKUS_LANGCHAIN4J_OPENAI_ORGANIZATION_ID: "xxxxx"
    ports:
      - "8080:8080"
networks:
  default:
```
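The environment variables in the compose file are ordinary Quarkus configuration properties written in environment-variable form. Quarkus follows the MicroProfile Config rule: every character that is not a letter or digit becomes an underscore, and the result is upper-cased. The sketch below applies that rule with the JDK only. The dotted property names it converts (quarkus.redis.hosts, quarkus.langchain4j.openai.api-key, quarkus.langchain4j.openai.organization-id) are our assumption of the properties these variables set, and EnvVarNames is a hypothetical class name.

```java
import java.util.Locale;

/**
 * Sketch of the MicroProfile Config environment-variable naming rule:
 * replace each non-alphanumeric character with '_' and upper-case the result.
 */
public class EnvVarNames {

    static String toEnvVarName(String propertyName) {
        StringBuilder sb = new StringBuilder(propertyName.length());
        for (char c : propertyName.toCharArray()) {
            // Keep ASCII letters and digits; map '.', '-', and anything else to '_'
            boolean alnum = (c >= 'a' && c <= 'z') || (c >= 'A' && c <= 'Z') || (c >= '0' && c <= '9');
            sb.append(alnum ? c : '_');
        }
        return sb.toString().toUpperCase(Locale.ROOT);
    }

    public static void main(String[] args) {
        // Assumed property names behind the variables in docker-compose.yml
        String[] properties = {
                "quarkus.redis.hosts",
                "quarkus.langchain4j.openai.api-key",
                "quarkus.langchain4j.openai.organization-id"
        };
        for (String property : properties) {
            System.out.println(property + " -> " + toEnvVarName(property));
        }
    }
}
```

The same keys could equally be placed in application.properties; passing them as environment variables keeps the API key out of the source tree.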

3. Chatbot Application

Combining Quarkus and LangChain4j makes it straightforward to build advanced chatbot applications that integrate Large Language Models (LLMs) into Java, putting natural language understanding and generation within easy reach.

Quarkus chatbots benefit from the framework's fast startup times and low memory footprint, which suit deployment in cloud and microservices environments. By integrating with libraries and services for natural language processing, machine learning, and real-time communication, they can serve high volumes of interactions, which makes them suitable for uses ranging from customer support to interactive user engagement. The framework's developer-friendly features, such as live coding, also shorten the cycle between iteration and deployment.

Backed by an LLM, such a chatbot can understand user queries, answer them, and even carry out complex tasks while keeping the conversation natural and engaging. Because Quarkus applications run efficiently and scale out easily in cloud-native environments, the same setup can handle many conversations simultaneously.

ChatBot Application

Let us look at the application.properties file for the Quarkus chatbot. It sets the timeout for LangChain4j model calls to 60 seconds and the log level to INFO.

```properties
quarkus.langchain4j.timeout=60s
quarkus.log.level=INFO
```
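A value such as 60s uses Quarkus's short duration syntax. The following sketch loads the two properties with java.util.Properties and converts the timeout to a java.time.Duration. It roughly mirrors that syntax (a number followed by ms, s, m, h, or d; a bare number meaning seconds; or an ISO-8601 value) but is a simplified stand-in, not the converter Quarkus actually uses; TimeoutConfig is a hypothetical name.

```java
import java.io.IOException;
import java.io.StringReader;
import java.time.Duration;
import java.util.Locale;
import java.util.Properties;

public class TimeoutConfig {

    // Simplified parser for "60s", "500ms", "2m", "1h", "1d", bare numbers (seconds),
    // and ISO-8601 durations such as "PT60S"
    static Duration parseDuration(String value) {
        String v = value.trim().toLowerCase(Locale.ROOT);
        if (v.isEmpty()) {
            throw new IllegalArgumentException("Empty duration");
        }
        if (v.startsWith("p")) {
            return Duration.parse(v.toUpperCase(Locale.ROOT));
        }
        if (v.endsWith("ms")) {
            return Duration.ofMillis(Long.parseLong(v.substring(0, v.length() - 2)));
        }
        char unit = v.charAt(v.length() - 1);
        if (Character.isDigit(unit)) {
            return Duration.ofSeconds(Long.parseLong(v));
        }
        long amount = Long.parseLong(v.substring(0, v.length() - 1));
        return switch (unit) {
            case 's' -> Duration.ofSeconds(amount);
            case 'm' -> Duration.ofMinutes(amount);
            case 'h' -> Duration.ofHours(amount);
            case 'd' -> Duration.ofDays(amount);
            default -> throw new IllegalArgumentException("Unsupported duration: " + value);
        };
    }

    public static void main(String[] args) throws IOException {
        Properties props = new Properties();
        // Same content as the application.properties shown above
        props.load(new StringReader("quarkus.langchain4j.timeout=60s\nquarkus.log.level=INFO\n"));
        Duration timeout = parseDuration(props.getProperty("quarkus.langchain4j.timeout"));
        System.out.println("timeout = " + timeout.toSeconds() + "s, log level = "
                + props.getProperty("quarkus.log.level"));
    }
}
```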

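Now let us look at the chatbot itself. QuarkusChatBot is an AI service: a Java interface annotated with @RegisterAiService, for which Quarkus LangChain4j generates the implementation. The @SystemMessage tells the model to act as a LangChain specialist and stay on LangChain topics, and the @UserMessage template asks it to favor information from the LangChain websites listed. Without additional tools or retrieval, the model does not fetch these URLs; it answers from what it learned during training. The @MemoryId parameter identifies the conversation.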

```java
package org.javacodegeeks.quarkus.langchain4j;

import dev.langchain4j.service.MemoryId;
import dev.langchain4j.service.SystemMessage;
import dev.langchain4j.service.UserMessage;
import io.quarkiverse.langchain4j.RegisterAiService;
import jakarta.inject.Singleton;

import java.util.UUID;

// Quarkus LangChain4j generates the implementation of this interface
@Singleton
@RegisterAiService
public interface QuarkusChatBot {

    // Instructions sent to the model for every exchange in the conversation
    @SystemMessage("""
            During the whole chat please behave like a LangChain specialist and only answer directly related to LangChain, its documentation, features and components.
            Nothing else that has no direct relation to LangChain.
            """)
    // Prompt template: {question} is filled in from the method parameter of the same name
    @UserMessage("""
            From the best of your knowledge answer the question below regarding LangChain.
            Please favor information from the following sources:
            - https://www.langchain.com/langchain
            - https://js.langchain.com/docs/tutorials/
            And their subpages. Then, answer:

            ---
            {question}
            ---
            """)
    // memoryId selects the conversation whose history is sent along with the prompt
    String chat(@MemoryId UUID memoryId, String question);
}
```
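Quarkus LangChain4j renders the @UserMessage text as a template (using the Qute engine) and replaces {question} with the argument of the matching parameter. To make that step concrete, here is a deliberately simplified, JDK-only renderer that substitutes {name} placeholders from a map. It does not support Qute expressions or sections, and PromptTemplateSketch is a hypothetical name.

```java
import java.util.Map;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

/**
 * Simplified stand-in for prompt-template rendering: replaces {name}
 * placeholders with values from a map and fails on unknown names.
 */
public class PromptTemplateSketch {

    private static final Pattern PLACEHOLDER = Pattern.compile("\\{([A-Za-z_][A-Za-z0-9_]*)\\}");

    static String render(String template, Map<String, ?> variables) {
        Matcher m = PLACEHOLDER.matcher(template);
        StringBuilder out = new StringBuilder();
        while (m.find()) {
            String name = m.group(1);
            if (!variables.containsKey(name)) {
                throw new IllegalArgumentException("No value for placeholder: " + name);
            }
            // quoteReplacement stops '$' or '\' in user input from being read as group references
            m.appendReplacement(out, Matcher.quoteReplacement(String.valueOf(variables.get(name))));
        }
        m.appendTail(out);
        return out.toString();
    }

    public static void main(String[] args) {
        String template = """
                From the best of your knowledge answer the question below regarding LangChain.
                ---
                {question}
                ---
                """;
        System.out.print(render(template, Map.of("question", "What is a retriever?")));
    }
}
```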

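Let us look at the QuarkusChatExample REST resource, which exposes the chatbot at POST /chat. It takes the question from the q query parameter and an optional conversation id from the id parameter, generating a random UUID when none is supplied. That id is passed to the bot as its @MemoryId, so the questions and answers of each conversation are recorded in Redis under a unique chatId and can be retrieved, and the conversation continued, by sending the same id again. The response contains the model's answer and the chatId.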

```java
package org.javacodegeeks.quarkus.langchain4j;

import jakarta.ws.rs.Consumes;
import jakarta.ws.rs.POST;
import jakarta.ws.rs.Path;
import jakarta.ws.rs.Produces;
import jakarta.ws.rs.QueryParam;
import jakarta.ws.rs.core.MediaType;

import java.util.UUID;

@Path("/chat")
@Produces(MediaType.APPLICATION_JSON)
@Consumes(MediaType.APPLICATION_JSON)
public class QuarkusChatExample {

    private final QuarkusChatBot qchatBot;

    // The AI service is injected through the constructor
    public QuarkusChatExample(QuarkusChatBot qchatBot) {
        this.qchatBot = qchatBot;
    }

    @POST
    public Answer message(@QueryParam("q") String question, @QueryParam("id") UUID chatId) {
        // Start a new conversation when the client does not send an id
        chatId = chatId == null ? UUID.randomUUID() : chatId;
        String answer = qchatBot.chat(chatId, question);
        return new Answer(answer, chatId);
    }

    // Serialized to JSON as {"message": ..., "chatId": ...}
    public record Answer(String message, UUID chatId) {}
}
```
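The memory behind @MemoryId works roughly like a map from chat id to a bounded list of recent messages, similar in spirit to LangChain4j's message-window chat memory. The sketch below keeps that structure in an in-process ConcurrentHashMap purely for illustration; in this application the Redis memory-store extension persists the messages in Redis instead. The class and record names and the window size of 4 used in main are arbitrary assumptions.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Deque;
import java.util.List;
import java.util.Map;
import java.util.UUID;
import java.util.concurrent.ConcurrentHashMap;

/**
 * In-memory sketch of per-conversation chat memory keyed by chat id.
 * Each conversation keeps only its most recent maxMessages entries.
 */
public class ChatMemorySketch {

    record Message(String role, String text) {}

    private final int maxMessages;
    private final Map<UUID, Deque<Message>> conversations = new ConcurrentHashMap<>();

    ChatMemorySketch(int maxMessages) {
        this.maxMessages = maxMessages;
    }

    void add(UUID chatId, Message message) {
        Deque<Message> window = conversations.computeIfAbsent(chatId, id -> new ArrayDeque<>());
        synchronized (window) {
            window.addLast(message);
            // Evict the oldest messages once the window is full
            while (window.size() > maxMessages) {
                window.removeFirst();
            }
        }
    }

    List<Message> history(UUID chatId) {
        Deque<Message> window = conversations.get(chatId);
        if (window == null) {
            return List.of();
        }
        synchronized (window) {
            return new ArrayList<>(window);
        }
    }

    public static void main(String[] args) {
        ChatMemorySketch memory = new ChatMemorySketch(4);
        UUID chatId = UUID.randomUUID();
        memory.add(chatId, new Message("user", "What is LangChain?"));
        memory.add(chatId, new Message("ai", "(answer from the model)"));
        memory.add(chatId, new Message("user", "What was my last question?"));
        // A different id starts an independent conversation
        memory.add(UUID.randomUUID(), new Message("user", "Hello"));
        memory.history(chatId).forEach(m -> System.out.println(m.role() + ": " + m.text()));
    }
}
```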

Testing the Chatbot

Build the application with Maven; Docker Compose then builds the chatbot image and starts it together with Redis. Once the containers are running, you can test the application with Postman. The commands are shown below:

```shell
mvn clean package
docker compose -f docker-compose.yml up --build
```
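Besides Postman, any HTTP client can call the endpoint. The following sketch uses the JDK's java.net.http.HttpClient and assumes the service is reachable at http://localhost:8080, the port published in docker-compose.yml. It URL-encodes the question for the q parameter and, when given a chatId, adds it as the id parameter to continue an earlier conversation. ChatClient and chatUri are hypothetical names; the Content-Type header is set because the resource declares @Consumes(MediaType.APPLICATION_JSON).

```java
import java.net.URI;
import java.net.URLEncoder;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.nio.charset.StandardCharsets;
import java.util.UUID;

public class ChatClient {

    // Builds /chat?q=...[&id=...]; spaces are encoded as %20 rather than '+'
    static URI chatUri(String baseUrl, String question, UUID chatId) {
        String q = URLEncoder.encode(question, StandardCharsets.UTF_8).replace("+", "%20");
        StringBuilder uri = new StringBuilder(baseUrl).append("/chat?q=").append(q);
        if (chatId != null) {
            // Reuse an existing conversation; omit the id to start a new one
            uri.append("&id=").append(chatId);
        }
        return URI.create(uri.toString());
    }

    public static void main(String[] args) throws Exception {
        HttpClient client = HttpClient.newHttpClient();
        HttpRequest request = HttpRequest.newBuilder(chatUri("http://localhost:8080", "What is LangChain?", null))
                .header("Content-Type", "application/json")
                .POST(HttpRequest.BodyPublishers.noBody())
                .build();
        HttpResponse<String> response = client.send(request, HttpResponse.BodyHandlers.ofString());
        // The body is the JSON form of the Answer record, containing message and chatId
        System.out.println(response.statusCode() + " " + response.body());
    }
}
```

Passing null as the chatId starts a new conversation; for a follow-up question, pass the chatId from the previous JSON response.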

The output when the above commands are executed is shown below:

```
bhagvanarch@Bhagvans-MacBook-Air quarkus-langchain4j % sudo docker compose -f docker-compose.yml up --build
Password:
[+] Building 1.6s (10/10) FINISHED docker:desktop-linux
 => [chatbot internal] load .dockerignore 0.0s
 => => transferring context: 2B 0.0s
 => [chatbot internal] load build definition from Dockerfile.jvm 0.0s
 => => transferring dockerfile: 831B 0.0s
 => [chatbot internal] load metadata for registry.access.redhat.com/ubi8/ 1.4s
 => [chatbot 1/5] FROM registry.access.redhat.com/ubi8/openjdk-17:1.11@sh 0.0s
 => [chatbot internal] load build context 0.0s
 => => transferring context: 869.04kB 0.0s
 => CACHED [chatbot 2/5] COPY --chown=185 target/quarkus-app/lib/ /deploy 0.0s
 => [chatbot 3/5] COPY --chown=185 target/quarkus-app/*.jar /deployments/ 0.0s
 => [chatbot 4/5] COPY --chown=185 target/quarkus-app/app/ /deployments/a 0.0s
 => [chatbot 5/5] COPY --chown=185 target/quarkus-app/quarkus/ /deploymen 0.0s
 => [chatbot] exporting to image 0.0s
 => => exporting layers 0.0s
 => => writing image sha256:6b06e4b6d5dc321f581d7d0b4f680e661e07895ce30f5 0.0s
 => => naming to docker.io/library/quarkus-langchain4j-chatbot 0.0s
[+] Running 2/0
 ✔ Container quarkus-langchain4j-redis-1 Created 0.0s
 ✔ Container quarkus-langchain4j-chatbot-1 Recreated 0.0s
Attaching to chatbot-1, redis-1
redis-1   | 1:C 29 Nov 2024 17:32:51.099 # WARNING Memory overcommit must be enabled! Without it, a background save or replication may fail under low memory condition. Being disabled, it can also cause failures without low memory condition, see https://github.com/jemalloc/jemalloc/issues/1328. To fix this issue add 'vm.overcommit_memory = 1' to /etc/sysctl.conf and then reboot or run the command 'sysctl vm.overcommit_memory=1' for this to take effect.
redis-1   | 1:C 29 Nov 2024 17:32:51.100 * oO0OoO0OoO0Oo Redis is starting oO0OoO0OoO0Oo
redis-1   | 1:C 29 Nov 2024 17:32:51.100 * Redis version=7.4.1, bits=64, commit=00000000, modified=0, pid=1, just started
redis-1   | 1:C 29 Nov 2024 17:32:51.100 # Warning: no config file specified, using the default config. In order to specify a config file use redis-server /path/to/redis.conf
redis-1   | 1:M 29 Nov 2024 17:32:51.100 * monotonic clock: POSIX clock_gettime
redis-1   | 1:M 29 Nov 2024 17:32:51.101 * Running mode=standalone, port=6379.
redis-1   | 1:M 29 Nov 2024 17:32:51.101 * Server initialized
redis-1   | 1:M 29 Nov 2024 17:32:51.101 * Loading RDB produced by version 7.4.1
redis-1   | 1:M 29 Nov 2024 17:32:51.101 * RDB age 142 seconds
redis-1   | 1:M 29 Nov 2024 17:32:51.101 * RDB memory usage when created 1.04 Mb
redis-1   | 1:M 29 Nov 2024 17:32:51.101 * Done loading RDB, keys loaded: 7, keys expired: 0.
redis-1   | 1:M 29 Nov 2024 17:32:51.101 * DB loaded from disk: 0.000 seconds
redis-1   | 1:M 29 Nov 2024 17:32:51.101 * Ready to accept connections tcp
chatbot-1 | Starting the Java application using /opt/jboss/container/java/run/run-java.sh ...
chatbot-1 | INFO exec java -Dquarkus.http.host=0.0.0.0 -Djava.util.logging.manager=org.jboss.logmanager.LogManager -XX:+UseParallelGC -XX:MinHeapFreeRatio=10 -XX:MaxHeapFreeRatio=20 -XX:GCTimeRatio=4 -XX:AdaptiveSizePolicyWeight=90 -XX:+ExitOnOutOfMemoryError -cp "." -jar /deployments/quarkus-run.jar
chatbot-1 | __  ____  __  _____   ___  __ ____  ______
chatbot-1 |  --/ __ \/ / / / _ | / _ \/ //_/ / / / __/
chatbot-1 |  -/ /_/ / /_/ / __ |/ , _/ ,< / /_/ /\ \
chatbot-1 | --\___\_\____/_/ |_/_/|_/_/|_|\____/___/
chatbot-1 | 2024-11-29 17:32:51,809 INFO [io.quarkus] (main) quarkus-langchain4j 0.1-SNAPSHOT on JVM (powered by Quarkus 3.14.2) started in 0.600s. Listening on: http://0.0.0.0:8080
chatbot-1 | 2024-11-29 17:32:51,810 INFO [io.quarkus] (main) Profile prod activated.
chatbot-1 | 2024-11-29 17:32:51,810 INFO [io.quarkus] (main) Installed features: [cdi, langchain4j, langchain4j-memory-store-redis, langchain4j-openai, qute, redis-client, rest, rest-client, rest-client-jackson, rest-jackson, smallrye-context-propagation, vertx]
^CGracefully stopping... (press Ctrl+C again to force)
[+] Stopping 2/2
 ✔ Container quarkus-langchain4j-chatbot-1 Stopped 0.2s
 ✔ Container quarkus-langchain4j-redis-1 Stopped 0.2s
```

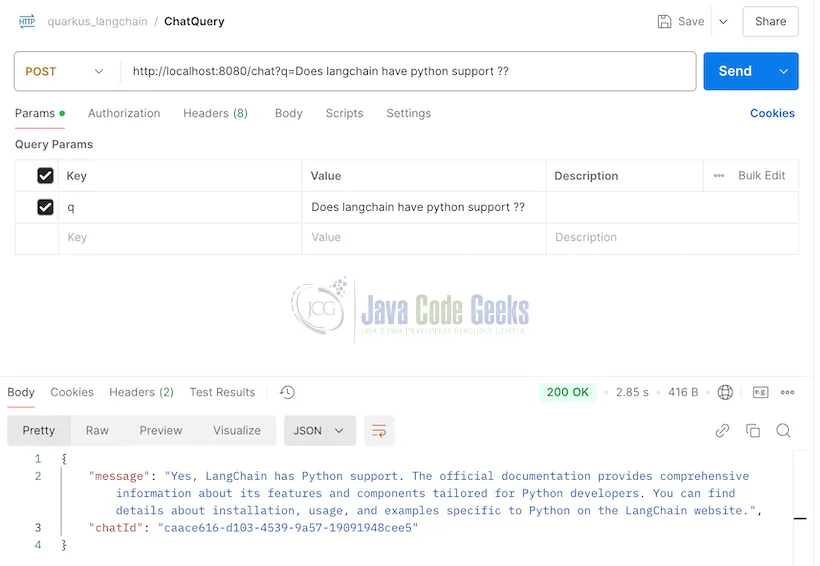

When you invoke the chat request from the Postman collection, the endpoint returns the LLM's answer, as shown in the image below.

Quarkus Chat Query and Response

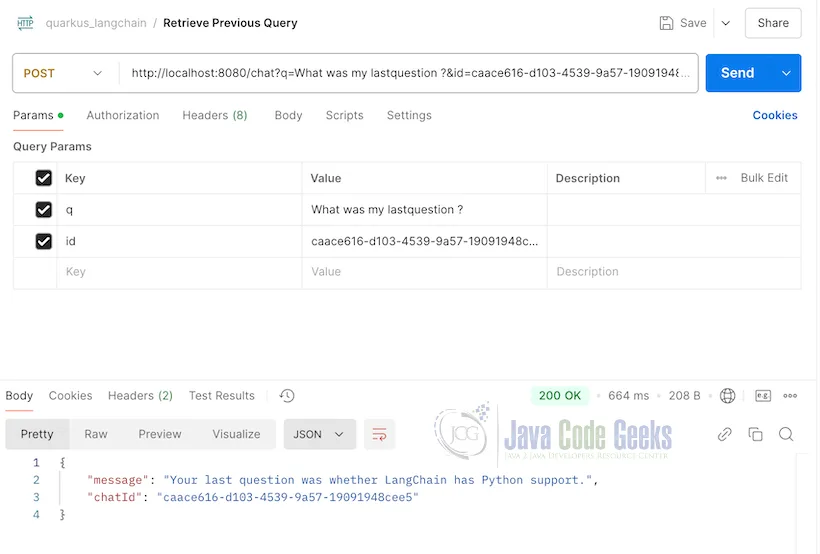

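Now let us ask a follow-up question, here about the last question asked, and pass the chatId returned by the previous response in the id query parameter. The chat history is stored in Redis, which runs in its own container started by Docker Compose rather than inside the chatbot image, so the bot can see the earlier messages of that conversation.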

Output for Chat Query – Last Question Asked

4. Conclusion

In conclusion, integrating LangChain4j with Quarkus offers a robust and efficient foundation for developing advanced AI-driven applications. In this tutorial, we containerized a Quarkus application with Docker and Docker Compose, configured the LangChain4j OpenAI integration, defined an AI service with a system prompt and a user-message template, exposed it through a REST endpoint, and kept per-conversation chat history in Redis. By combining the performance and scalability of Quarkus with the versatility of LangChain4j, developers can create powerful, responsive, and resource-efficient solutions with little boilerplate.

5. Download

You can download the full source code of this example here: Java Quarkus LangChain4j – Building a Chatbot