Clustering reactmq with akka-cluster

In the last two posts on reactmq, I described how to write a reactive, persistent message queue. The queue has the following characteristics:

- there is a single broker storing the messages, with potentially multiple clients, either sending or receiving messages

- it provides at-least-once delivery: receiving a message blocks it, and unless it is deleted within some time, it becomes available for delivery again

- sending & receiving messages is reactive thanks to akka-streams: back-pressure is applied if any of the components cannot keep up with the load

- messages are persistent, using akka-persistence: all send/receive/delete events are persisted in a journal, and when the system is restarted the state of the queue actor is re-created

The event store can be replicated by using a replicated journal implementation, e.g. Cassandra. However, there is one piece missing: clustering the broker itself.

Luckily, akka-cluster is there to help! Let’s see how we can use it to make sure that there’s always a broker running in our cluster.

Cluster setup

First of all we need a working cluster. We will need two new dependencies in our build.sbt file, akka-cluster and akka-contrib, as we will be using some contributed extensions.
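As a rough sketch, the two additions to build.sbt could look like the fragment below. The version number is an assumption (the post does not state one); it should match whatever Akka version the rest of the project already uses.

```scala
// build.sbt (fragment)
// Assumption: the Akka version. Align it with the version used elsewhere in the build.
val akkaVersion = "2.3.4"

libraryDependencies ++= Seq(
  "com.typesafe.akka" %% "akka-cluster" % akkaVersion,
  "com.typesafe.akka" %% "akka-contrib" % akkaVersion
)
```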

Secondly, we need to provide configuration for the ActorSystem with clustering enabled. It’s good to make it clear what the purpose of a config file is, hence the cluster-broker-template.conf file:

akka {
  actor.provider = "akka.cluster.ClusterActorRefProvider"

  remote.netty.tcp.port = 0 // should be overridden
  remote.netty.tcp.hostname = "127.0.0.1"

  cluster {
    seed-nodes = [
      "akka.tcp://broker@127.0.0.1:9171",
      "akka.tcp://broker@127.0.0.1:9172",
      "akka.tcp://broker@127.0.0.1:9173"
    ]

    auto-down-unreachable-after = 10s

    roles = [ "broker" ]
    role.broker.min-nr-of-members = 2
  }

  extensions = [ "akka.contrib.pattern.ClusterReceptionistExtension" ]
}

Going through the settings:

- we need to use the ClusterActorRefProvider to communicate with actors on other cluster nodes

- as we will be using the same config file for multiple nodes (for local testing), the port will be overridden in code. Apart from the port, we also need to specify the hostname to which cluster communication will bind.

- the node needs an initial list of seed nodes, with which it will try to communicate on start-up to form a cluster

- we’re using auto-downing, which causes unreachable nodes to be declared down after 10 seconds. This can cause partitions; however, we also specify that there need to be at least 2 nodes alive for the cluster fragment to be operative (we will have 3 nodes in total, so with 2 we are safe against partitions)

- finally, we are declaring that any cluster nodes started using the config file will have the broker role and use the cluster receptionist extension, but more on that later.
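The counting argument behind "with 2 we are safe" can be checked by brute force. The sketch below (all names are hypothetical) enumerates every way a three-node cluster can split into two groups and confirms that both groups can never reach the minimum of 2 at once. It illustrates only the arithmetic; how and when akka-cluster applies the setting is not modelled here.

```scala
object QuorumCheck {
  // Values taken from the configuration above: 3 nodes in total, a minimum of 2.
  val totalNodes = 3
  val minMembers = 2

  // Every two-way split of the cluster: (size of side A, size of side B).
  val splits: Seq[(Int, Int)] = (0 to totalNodes).map(a => (a, totalNodes - a))

  // A split is dangerous if both sides reach the minimum, as each could then run a broker.
  val dangerousSplits: Seq[(Int, Int)] =
    splits.filter { case (a, b) => a >= minMembers && b >= minMembers }

  // Generalisation: the minimum has to be a strict majority of the cluster size.
  def isStrictMajority(min: Int, total: Int): Boolean = 2 * min > total
}
```

With four nodes and the same minimum of 2, a 2/2 split would satisfy the condition on both sides, which is why the minimum should grow with the cluster size.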

Actually starting a clustered actor system is now very simple, see the BrokerManager class:

class BrokerManager(clusterPort: Int) {
  // …

  val conf = ConfigFactory
    .parseString(s"akka.remote.netty.tcp.port=$clusterPort")
    .withFallback(ConfigFactory.load("cluster-broker-template"))

  val system = ActorSystem("broker", conf)

  // ...
}

To specify the port, we create a configuration from a string with only the port specified; for all other settings, we fall back to the configuration from the template file.
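To see the override order in isolation, here is a small sketch that needs only the Typesafe Config library (which Akka already depends on). The template is inlined as a string instead of being loaded from cluster-broker-template.conf so that the example is self-contained; PortOverrideSketch and configFor are hypothetical names.

```scala
import com.typesafe.config.{Config, ConfigFactory}

object PortOverrideSketch {
  // Stand-in for cluster-broker-template.conf, inlined to keep the sketch self-contained.
  val template: Config = ConfigFactory.parseString(
    """
      |akka.remote.netty.tcp.port = 0
      |akka.remote.netty.tcp.hostname = "127.0.0.1"
    """.stripMargin)

  // Same pattern as in BrokerManager: the override comes first, the template is the fallback.
  def configFor(clusterPort: Int): Config =
    ConfigFactory
      .parseString(s"akka.remote.netty.tcp.port=$clusterPort")
      .withFallback(template)
}
```

Values present in the first config win; anything it does not define, such as the hostname here, is taken from the fallback.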

Starting cluster nodes is just a matter of starting three simple applications:

object ClusteredBroker1 extends App {
  new BrokerManager(9171).run()
}

Cluster singleton

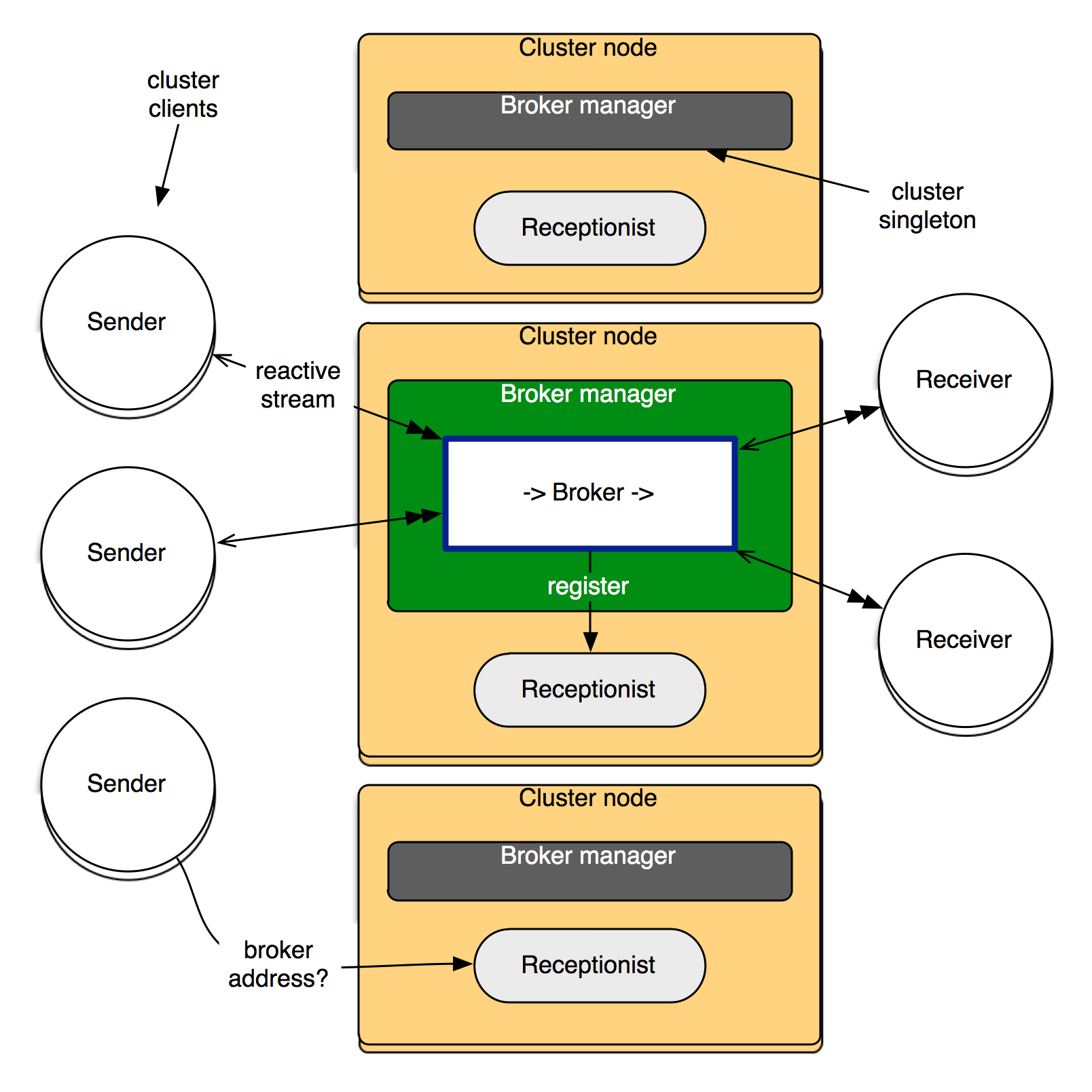

Having the cluster ready, we now have to start the broker on one of the nodes. At any time, there should be only one broker running – otherwise the queue would get corrupted. For that the Cluster Singleton contrib extension is perfect.

To use the extension we need to create an actor, which will be managed by the singleton extension and started on only one cluster node. Hence we create the BrokerManagerActor and we can now start the singleton:

def run(): Unit = {
  // …

  system.actorOf(ClusterSingletonManager.props(
    singletonProps = Props(classOf[BrokerManagerActor], clusterPort),
    singletonName = "broker",
    terminationMessage = PoisonPill,
    role = Some("broker")),
    name = "broker-manager")
}

class BrokerManagerActor(clusterPort: Int) extends Actor {
  val sendServerAddress = new InetSocketAddress(
    "localhost", clusterPort + 10)

  val receiveServerAddress = new InetSocketAddress(
    "localhost", clusterPort + 20)

  override def preStart(): Unit = {
    super.preStart()
    new Broker(sendServerAddress, receiveServerAddress)(context.system)
      .run()
  }

  override def receive = { case _ => }
}

In the ClusterSingletonManager properties, we specify the actor to run, the message that can be used to terminate the actor, and the cluster role on which the actor can run (our cluster’s nodes have only one role, broker).

The BrokerManagerActor takes a port (which should be unique to each node if we want to run several on localhost) and derives two addresses from it: one on which the socket for queue-message-sending clients will listen, and another for the listening socket of queue-message-receiving clients.
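To make the port arithmetic concrete, the sketch below repeats the +10 and +20 offsets from BrokerManagerActor in a plain helper and lists the ports a local three-node setup would occupy. BrokerPorts and LocalBrokerPorts are hypothetical names used only for illustration.

```scala
import java.net.InetSocketAddress

// Hypothetical helper mirroring the address arithmetic in BrokerManagerActor.
final case class BrokerPorts(clusterPort: Int) {
  val sendServerAddress = new InetSocketAddress("localhost", clusterPort + 10)
  val receiveServerAddress = new InetSocketAddress("localhost", clusterPort + 20)
}

object LocalBrokerPorts {
  // The three local nodes used in this post.
  val localNodes: Seq[BrokerPorts] = Seq(9171, 9172, 9173).map(p => BrokerPorts(p))

  // All ports a local three-node setup would occupy: cluster, send and receive.
  val allPorts: Seq[Int] = localNodes.flatMap { n =>
    Seq(n.clusterPort, n.sendServerAddress.getPort, n.receiveServerAddress.getPort)
  }
}
```

For the node on port 9171, senders would connect to 9181 and receivers to 9191; none of the nine ports of the three nodes collide.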

Cluster client & receptionist: cluster side

We now have a single broker running in the cluster, but how can clients find out the address of the singleton? Well, we can just ask the BrokerManagerActor for that! This can be done by a simple message exchange:

case object GetBrokerAddresses

case class BrokerAddresses(sendServerAddress: InetSocketAddress,
  receiveServerAddress: InetSocketAddress)

class BrokerManagerActor(clusterPort: Int) extends Actor {
  // as above, plus:

  override def receive = {
    case GetBrokerAddresses => sender() ! BrokerAddresses(
      sendServerAddress, receiveServerAddress)
  }
}

One problem remains, though. The clients that want to use our message queue need not be members of the cluster. Here the Cluster Client contrib extension can help.

On the cluster node side, the client extension provides a receptionist, with which actors that want to be visible outside the cluster can register. And that’s what our BrokerManagerActor does on startup:

class BrokerManagerActor(clusterPort: Int) extends Actor {
  // …

  override def preStart() = {
    // ...

    ClusterReceptionistExtension(context.system)
      .registerService(self)
  }
}

Cluster client & receptionist: client side

As for the clients themselves, they also need some configuration to communicate with the cluster:

akka {
  actor.provider = "akka.remote.RemoteActorRefProvider"

  remote.netty.tcp.port = 0
  remote.netty.tcp.hostname = "127.0.0.1"
}

cluster.client.initial-contact-points = [
  "akka.tcp://broker@127.0.0.1:9171",
  "akka.tcp://broker@127.0.0.1:9172",
  "akka.tcp://broker@127.0.0.1:9173"
]

Again going through the settings:

- to communicate with remote actors (which live in the cluster), we need to use the RemoteActorRefProvider (the ClusterARP is a richer version of the RemoteARP)

- similarly to the seed nodes, we need to provide seed contact points, so that the client has some way of initiating communication with the cluster
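Each contact point is an actor system address, and the client looks up the receptionist actor under /user/receptionist on each of them. The sketch below uses only java.net.URI to show what such an address is made of; it is not how Akka parses addresses internally, and ContactPointSketch and ContactPoint are hypothetical names.

```scala
import java.net.URI

object ContactPointSketch {
  // Hypothetical holder for the parts of a contact point address.
  final case class ContactPoint(protocol: String, system: String, host: String, port: Int) {
    // Path of the receptionist actor on that node.
    def receptionistPath: String = s"$protocol://$system@$host:$port/user/receptionist"
  }

  // Splits an address such as akka.tcp://broker@127.0.0.1:9171 into its parts.
  def parse(address: String): ContactPoint = {
    val uri = new URI(address)
    ContactPoint(uri.getScheme, uri.getUserInfo, uri.getHost, uri.getPort)
  }
}
```

Note that the actor system name in the address (broker) has to match the name the cluster nodes were started with.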

Actually initiating a cluster client is quite straightforward. We need to create an actor system and an actor which will communicate with the cluster:

val conf = ConfigFactory.load("cluster-client")

implicit val system = ActorSystem(name, conf)

val initialContacts = conf
  .getStringList("cluster.client.initial-contact-points")
  .asScala.map {
    case AddressFromURIString(addr) => system.actorSelection(
      RootActorPath(addr) / "user" / "receptionist")
  }.toSet

val clusterClient = system.actorOf(
  ClusterClient.props(initialContacts), "cluster-client")

To start a client of our message queue (we have two types of clients: one sends messages to the queue, the other receives messages from it), we need to find out the address of the broker. To do that, we ask (using Akka’s ask pattern) the broker registered with the receptionist for its address:

(clusterClient ? ClusterClient.Send(
  "/user/broker-manager/broker",
  GetBrokerAddresses,
  localAffinity = false))
  .mapTo[BrokerAddresses]
  .flatMap { ba =>
    logger.info(s"Connecting a $name using broker address $ba.")
    runClient(ba, system)
  }

Finally, when the client stream completes (e.g. because a broker is down), we try to restart it after 1 second. Some exponential back-off mechanism would probably be useful here.
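One possible shape for such a back-off is sketched below. It is not part of reactmq: the doubling factor and the 30 second cap are assumptions, and Backoff and delayFor are hypothetical names. Only the 1 second starting delay comes from the post.

```scala
import scala.concurrent.duration._

object Backoff {
  // Assumed parameters: start at the 1 second used in the post, double each time, cap at 30 seconds.
  val initialDelay: FiniteDuration = 1.second
  val maxDelay: FiniteDuration = 30.seconds

  // Delay before restart attempt number `attempt` (0-based): initial * 2^attempt, capped at maxDelay.
  def delayFor(attempt: Int): FiniteDuration = {
    require(attempt >= 0, "attempt must be non-negative")
    // Cap the exponent so the multiplication cannot overflow.
    val factor = 1L << math.min(attempt, 20)
    val candidate = initialDelay * factor
    if (candidate > maxDelay) maxDelay else candidate
  }
}
```

The restart logic would then wait delayFor(attempt) instead of a fixed second, and reset the attempt counter once a connection to the broker succeeds.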

The runnable application for running the queue-message senders and receivers uses the same code as the single-node one, the difference being that the broker address is obtained from the cluster:

object ClusterReceiver extends App with ClusterClientSupport {
  start("receiver", (ba, system) =>
    new Receiver(ba.receiveServerAddress)(system).run())
}

Running

If you run ClusterReceiver (any number), ClusterSender (any number), ClusteredBroker1, ClusteredBroker2 and ClusteredBroker3, you will see that the messages flow from senders, through a single running broker, to the receivers. You can kill a broker node, and after a couple of seconds another one will be started on another cluster node, and the senders/receivers will re-connect.

I’d say that’s quite nice for the pretty small amount of code we’ve written!

Summing up

Our message queue now is:

- reactive, using akka-streams

- persistent, using akka-persistence

- clustered, using akka-cluster

And the best part is that in the code we don’t have to deal with any details of back-pressure handling, storing messages to disk or communicating and reaching consensus in the cluster. We now have a truly reactive application.

Thanks to Endre from the Akka team for helping out with adding error handling to the streams. The whole code is available on GitHub. Enjoy!

Reference: Clustering reactmq with akka-cluster from our JCG partner Adam Warski at the Blog of Adam Warski.