Java EE 7 and WildFly on Kubernetes using Vagrant

This tip will show how to run a Java EE 7 application deployed in WildFly and hosted using Kubernetes and Docker. If you want to learn more about the basics, then this blog has already published quite a bit of content on the topic. A sampling of some of the content is given below:

- Get started with Docker

- How to create your own Docker image

- Pushing your Docker images to registry

- Java EE 7 Hands-on Lab on WildFly and Docker

- WildFly/JavaEE7 and MySQL linked on two Docker containers

- Docker container linking across multiple hosts

- Key Concepts of Kubernetes

- Build Kubernetes on Mac OS X

- Vagrant with Docker provider, using WildFly and Java EE 7 image

Let's get started!

Start Kubernetes cluster

A Kubernetes cluster can easily be started on a Linux machine using the usual scripts. There are Getting Started Guides for different platforms such as Fedora, CoreOS, Amazon Web Services, and others. Running a Kubernetes cluster on Mac OS X requires the Vagrant image, which is also explained in Getting Started with Vagrant. This blog will use the Vagrant box.

- By default, the Kubernetes cluster management scripts assume you are running on Google Compute Engine. Kubernetes can be configured to run with a variety of providers: gce, gke, aws, azure, vagrant, local, vsphere. So let's set our provider to vagrant as:

export KUBERNETES_PROVIDER=vagrant

This means your Kubernetes cluster will run inside Fedora VMs created by Vagrant.

- Start the cluster as:

kubernetes> ./cluster/kube-up.sh
Starting cluster using provider: vagrant
... calling verify-prereqs
... calling kube-up
Using credentials: vagrant:vagrant
Bringing machine 'master' up with 'virtualbox' provider...
Bringing machine 'minion-1' up with 'virtualbox' provider...
. . .
Running: ./cluster/../cluster/vagrant/../../cluster/../cluster/vagrant/../../_output/dockerized/bin/darwin/amd64/kubectl --auth-path=/Users/arungupta/.kubernetes_vagrant_auth create -f -
skydns
... calling setup-logging
TODO: setup logging
Done

Notice that this command is run from the kubernetes directory, where Kubernetes has already been compiled as explained in Build Kubernetes on Mac OS X.

By default, the Vagrant setup will create a single kubernetes-master and one kubernetes-minion. This involves creating Fedora VMs, installing dependencies, creating the master and minion, setting up connectivity between them, and a whole lot of other things. As a result, this step can take a few minutes (~10 mins on my machine).
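Both settings used in this post, KUBERNETES_PROVIDER here and NUM_MINIONS (which controls the number of minions), are plain environment variables read by kube-up.sh, so the startup is easy to script. A minimal Python sketch, assuming it is run against the compiled kubernetes directory; kube_up_env and kube_up are hypothetical helper names, not part of Kubernetes:

```python
import os
import subprocess

# Providers listed in this post for the cluster management scripts.
SUPPORTED_PROVIDERS = {"gce", "gke", "aws", "azure", "vagrant", "local", "vsphere"}


def kube_up_env(provider="vagrant", num_minions=None, base_env=None):
    """Return a copy of the environment with the variables kube-up.sh reads."""
    if provider not in SUPPORTED_PROVIDERS:
        raise ValueError(f"unknown provider: {provider!r}")
    env = dict(os.environ if base_env is None else base_env)
    env["KUBERNETES_PROVIDER"] = provider
    if num_minions is not None:
        if num_minions < 1:
            raise ValueError("num_minions must be a positive integer")
        env["NUM_MINIONS"] = str(num_minions)
    return env


def kube_up(provider="vagrant", num_minions=None, kube_dir="."):
    """Run ./cluster/kube-up.sh from the kubernetes directory; raises on failure."""
    subprocess.run(
        ["./cluster/kube-up.sh"],
        cwd=kube_dir,
        env=kube_up_env(provider, num_minions),
        check=True,
    )
```

For example, kube_up(num_minions=2, kube_dir="kubernetes") would start a master and two minions. Building the environment in a separate function keeps the provider check testable without starting any VMs.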

Verify Kubernetes cluster

Now that the cluster has started, let's verify that it does everything it is supposed to.

- Verify that your Vagrant VMs are up correctly as:

kubernetes> vagrant status
Current machine states:

master running (virtualbox)
minion-1 running (virtualbox)

This environment represents multiple VMs. The VMs are all listed above with their current state. For more information about a specific VM, run `vagrant status NAME`.

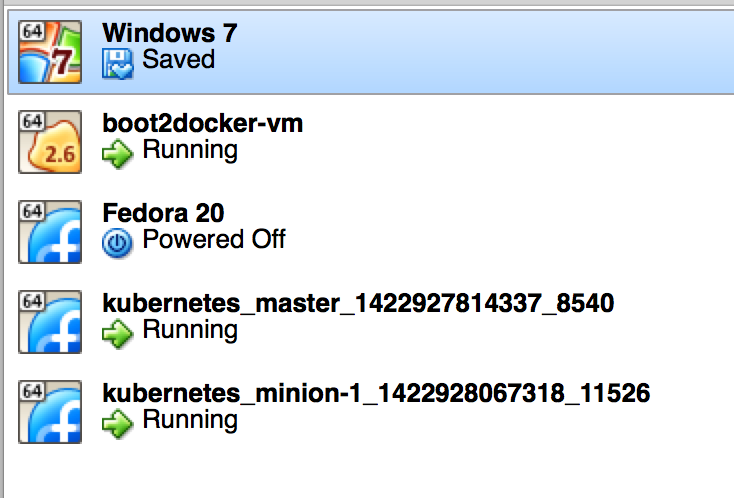

This can also be verified by verifying the status in Virtual Box console as shown:

Kubernetes Virtual Machines in Virtual Box boot2docker-vm is the Boot2Docker VM. Then there is Kubernetes master and minion VM. Two additional VMs are shown here but they not relevant to the example.

- Log in to the master as:

kubernetes> vagrant ssh master
Last login: Fri Jan 30 21:35:34 2015 from 10.0.2.2
[vagrant@kubernetes-master ~]$

Verify that the different Kubernetes components have started up correctly. Start with the Kubernetes API server:

[vagrant@kubernetes-master ~]$ sudo systemctl status kube-apiserver
kube-apiserver.service - Kubernetes API Server
Loaded: loaded (/usr/lib/systemd/system/kube-apiserver.service; enabled)
Active: active (running) since Fri 2015-01-30 21:34:25 UTC; 7min ago
Docs: https://github.com/GoogleCloudPlatform/kubernetes
Main PID: 3506 (kube-apiserver)
CGroup: /system.slice/kube-apiserver.service
└─3506 /usr/local/bin/kube-apiserver --address=127.0.0.1 --etcd_servers=http://10.245.1.2:4001 --cloud_provider=vagrant --admission_c...
. . .

Then the Kube Controller Manager:

[vagrant@kubernetes-master ~]$ sudo systemctl status kube-controller-manager
kube-controller-manager.service - Kubernetes Controller Manager
Loaded: loaded (/usr/lib/systemd/system/kube-controller-manager.service; enabled)
Active: active (running) since Fri 2015-01-30 21:34:27 UTC; 8min ago
Docs: https://github.com/GoogleCloudPlatform/kubernetes
Main PID: 3566 (kube-controller)
CGroup: /system.slice/kube-controller-manager.service
└─3566 /usr/local/bin/kube-controller-manager --master=127.0.0.1:8080 --minion_regexp=.* --cloud_provider=vagrant --v=2
. . .

Similarly, you can verify etcd and nginx as well.

Docker and Kubelet are running on the minion and can be verified by logging in to the minion and using systemctl as:

kubernetes> vagrant ssh minion-1
Last login: Fri Jan 30 21:37:05 2015 from 10.0.2.2
[vagrant@kubernetes-minion-1 ~]$ sudo systemctl status docker
docker.service - Docker Application Container Engine
Loaded: loaded (/usr/lib/systemd/system/docker.service; enabled)
Active: active (running) since Fri 2015-01-30 21:39:05 UTC; 8min ago
Docs: http://docs.docker.com
Main PID: 13056 (docker)
CGroup: /system.slice/docker.service
├─13056 /usr/bin/docker -d -b=kbr0 --iptables=false --selinux-enabled
└─13192 docker-proxy -proto tcp -host-ip 0.0.0.0 -host-port 4194 -container-ip 10.246.0.3 -container-port 8080
. . .
[vagrant@kubernetes-minion-1 ~]$ sudo systemctl status kubelet
kubelet.service - Kubernetes Kubelet Server
Loaded: loaded (/usr/lib/systemd/system/kubelet.service; enabled)
Active: active (running) since Fri 2015-01-30 21:36:57 UTC; 10min ago
Docs: https://github.com/GoogleCloudPlatform/kubernetes
Main PID: 3118 (kubelet)
CGroup: /system.slice/kubelet.service
└─3118 /usr/local/bin/kubelet --etcd_servers=http://10.245.1.2:4001 --api_servers=https://10.245.1.2:6443 --auth_path=/var/lib/kubele...
. . .

- Check the minions as:

kubernetes> ./cluster/kubectl.sh get minions
Running: ./cluster/../cluster/vagrant/../../_output/dockerized/bin/darwin/amd64/kubectl --auth-path=/Users/arungupta/.kubernetes_vagrant_auth get minions
NAME LABELS STATUS
10.245.1.3 <none> Ready

Only one minion is created. This can be changed by setting the NUM_MINIONS environment variable to an integer before invoking the kube-up.sh script.

- Finally, check the pods as:

kubernetes> ./cluster/kubectl.sh get pods
Running: ./cluster/../cluster/vagrant/../../_output/dockerized/bin/darwin/amd64/kubectl --auth-path=/Users/arungupta/.kubernetes_vagrant_auth get pods
POD IP CONTAINER(S) IMAGE(S) HOST LABELS STATUS
22d4a478-a8c8-11e4-a61e-0800279696e1 10.246.0.2 etcd quay.io/coreos/etcd:latest 10.245.1.3/10.245.1.3 k8s-app=skydns Running
kube2sky kubernetes/kube2sky:1.0
skydns kubernetes/skydns:2014-12-23-001

This shows that a single pod is created by default, with three containers running:

- skydns: SkyDNS is a distributed service for announcement and discovery of services built on top of etcd. It utilizes DNS queries to discover available services.

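- etcd: A distributed, consistent key-value store for shared configuration and service discovery, with a focus on being simple, secure, fast, and reliable. In this pod it serves as the store behind SkyDNS; the Kubernetes API server keeps its own state in the etcd instance on the master (--etcd_servers=http://10.245.1.2:4001 in the kube-apiserver output above).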

- kube2sky: A bridge between Kubernetes and SkyDNS. This will watch the kubernetes API for changes in Services and then publish those changes to SkyDNS through etcd.

- No pods have been created by our application so far; let's do that next.
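The manual checks in this section (vagrant status and kubectl.sh get minions) can be scripted by parsing the command output. A rough Python sketch; it assumes the output layout shown above, which may differ across Vagrant and Kubernetes versions, and the function names are hypothetical:

```python
def parse_vagrant_status(output):
    """Map machine name -> state from the 'Current machine states:' block."""
    states = {}
    in_block = False
    for line in output.splitlines():
        if line.startswith("Current machine states:"):
            in_block = True
            continue
        if not in_block:
            continue
        if not line.strip():
            if states:
                break  # the blank line after the machine list ends the block
            continue
        name, state = line.split(None, 1)
        states[name] = state.strip()
    return states


def parse_minions(output):
    """Map minion name -> STATUS from `kubectl.sh get minions` output."""
    lines = [l for l in output.splitlines() if l.strip()]
    # kubectl.sh first echoes a "Running: ..." line; the table starts at NAME.
    start = next((i for i, l in enumerate(lines) if l.split()[0] == "NAME"), None)
    if start is None:
        raise ValueError("no NAME header found in kubectl output")
    status_idx = lines[start].split().index("STATUS")
    return {l.split()[0]: l.split()[status_idx] for l in lines[start + 1:]}


def cluster_ready(vagrant_output, minions_output):
    """True when every VM is running and every minion reports Ready."""
    vms = parse_vagrant_status(vagrant_output)
    minions = parse_minions(minions_output)
    return (bool(vms) and all(s.startswith("running") for s in vms.values())
            and bool(minions) and all(s == "Ready" for s in minions.values()))
```

Keeping the parsing separate from running the commands makes it easy to feed in output captured with subprocess.run(..., capture_output=True, text=True).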

Start WildFly and Java EE 7 application Pod

A pod is created by using the kubectl script and providing the details in a JSON configuration file. The source code for our configuration file is available at github.com/arun-gupta/kubernetes-java-sample, and looks like:

{
  "id": "wildfly",
  "kind": "Pod",
  "apiVersion": "v1beta1",
  "desiredState": {
    "manifest": {
      "version": "v1beta1",
      "id": "wildfly",
      "containers": [{
        "name": "wildfly",
        "image": "arungupta/javaee7-hol",
        "cpu": 100,
        "ports": [{
          "containerPort": 8080,
          "hostPort": 8080
        },
        {
          "containerPort": 9090,
          "hostPort": 9090
        }]
      }]
    }
  },
  "labels": {
    "name": "wildfly"
  }
}
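If you want to vary the image or the ports without hand-editing the file, the configuration can be generated. The sketch below reproduces the JSON above as a Python dictionary in the v1beta1 schema used in this post; wildfly_pod and write_manifest are illustrative names, not part of the sample repository:

```python
import json


def wildfly_pod(image="arungupta/javaee7-hol", ports=(8080, 9090), cpu=100):
    """Build the v1beta1 Pod manifest shown above as a Python dict."""
    return {
        "id": "wildfly",
        "kind": "Pod",
        "apiVersion": "v1beta1",
        "desiredState": {
            "manifest": {
                "version": "v1beta1",
                "id": "wildfly",
                "containers": [{
                    "name": "wildfly",
                    "image": image,
                    "cpu": cpu,
                    # Map each container port to the same port on the minion.
                    "ports": [{"containerPort": p, "hostPort": p} for p in ports],
                }],
            }
        },
        "labels": {"name": "wildfly"},
    }


def write_manifest(path, manifest):
    """Write the manifest as indented JSON, ready for `kubectl.sh create -f`."""
    with open(path, "w") as f:
        json.dump(manifest, f, indent=2)
```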

The exact payload and attributes of this configuration file are documented at kubernetes.io/third_party/swagger-ui/#!/v1beta1/createPod_0. Complete docs of all the possible APIs are at kubernetes.io/third_party/swagger-ui/. The key attributes in this file are:

- A pod is created. The API also allows other types, such as “service” and “replicationController”, to be created.

- The version of the API is “v1beta1”.

- The Docker image arungupta/javaee7-hol is used to run the container.

- Ports 8080 and 9090 are exposed, as they are originally exposed in the base image Dockerfile. This requires further investigation into how the list of ports can be cleaned up.

- The pod is given the label name=wildfly. It is not used much here, but will be more meaningful when services are created in a subsequent blog.
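One way to approach the port clean-up is to compare the manifest with the ports the image declares. The docker inspect output later in this post lists ExposedPorts 8080/tcp, 9090/tcp and 9990/tcp; the hypothetical helpers below compute which of those the pod maps to the minion. This is an exploratory sketch, not part of the sample repository:

```python
def published_ports(manifest):
    """Return {containerPort: hostPort} across all containers of a v1beta1 pod."""
    containers = manifest["desiredState"]["manifest"]["containers"]
    return {p["containerPort"]: p.get("hostPort")
            for c in containers for p in c.get("ports", [])}


def unpublished(exposed_ports, manifest):
    """Image ports (given as e.g. '9990/tcp') that the pod does not map."""
    mapped = set(published_ports(manifest))
    exposed = {int(p.split("/")[0]) for p in exposed_ports}
    return sorted(exposed - mapped)
```

Here the result would be [9990], the WildFly management port. Note that the WildFly log later in this post shows the management interface bound to 127.0.0.1:9990, so mapping that port alone would not make it reachable from outside the container.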

As mentioned earlier, this tech tip will spin up a single pod, with one container. Our container will be using a pre-built image (arungupta/javaee7-hol) that deploys a typical 3-tier Java EE 7 application to WildFly.

Start the WildFly pod as:

kubernetes> ./cluster/kubectl.sh create -f ../kubernetes-java-sample/javaee7-hol.json

Check the status of the created pod as:

kubernetes> ./cluster/kubectl.sh get pods

Running: ./cluster/../cluster/vagrant/../../_output/dockerized/bin/darwin/amd64/kubectl --auth-path=/Users/arungupta/.kubernetes_vagrant_auth get pods

POD IP CONTAINER(S) IMAGE(S) HOST LABELS STATUS

4c283aa1-ab47-11e4-b139-0800279696e1 10.246.0.2 etcd quay.io/coreos/etcd:latest 10.245.1.3/10.245.1.3 k8s-app=skydns Running

kube2sky kubernetes/kube2sky:1.0

skydns kubernetes/skydns:2014-12-23-001

wildfly 10.246.0.5 wildfly arungupta/javaee7-hol 10.245.1.3/10.245.1.3 name=wildfly Running

The WildFly pod is now created and shown in the list. The HOST column shows the IP address on which the application is accessible.
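Picking the HOST and STATUS out of this table by eye is easy with one pod, but a script needs to cope with the continuation lines that list a pod's extra containers. A Python sketch, assuming the seven-column layout shown above (a row with an empty LABELS value would break it) and a hypothetical function name:

```python
def parse_pods(output):
    """Parse `kubectl.sh get pods` output into a list of pod dicts."""
    pods = []
    header_seen = False
    for line in output.splitlines():
        fields = line.split()
        if not fields:
            continue
        if fields[0] == "POD":
            header_seen = True
            continue
        if not header_seen:
            continue  # skip the "Running: ..." echo printed by kubectl.sh
        if len(fields) == 7:
            pod, ip, container, image, host, labels, status = fields
            pods.append({
                "pod": pod,
                "ip": ip,
                "host": host.split("/")[0],  # HOST is shown as name/IP
                "labels": labels,
                "status": status,
                "containers": [(container, image)],
            })
        elif len(fields) == 2 and pods:
            # Continuation line: another container in the previous pod.
            pods[-1]["containers"].append((fields[0], fields[1]))
    return pods
```

For the output above, the wildfly entry would carry host 10.245.1.3 and status Running, and the skydns pod would list its three containers.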

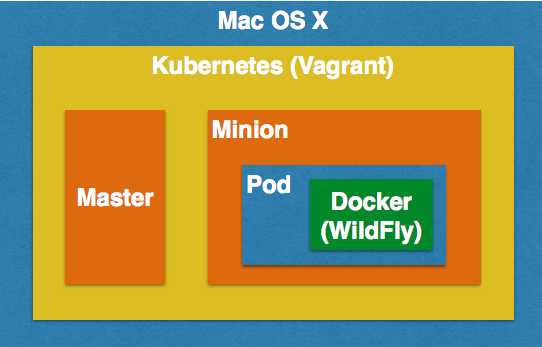

The image below explains how all the components fit with each other:

As only one minion is created by default, this pod will be created on that minion. A subsequent blog will show how multiple minions can be created. Kubernetes, of course, picks the minion on which the pods are created.

Running the pod ensures that the Java EE 7 application is deployed to WildFly.

Access Java EE 7 Application

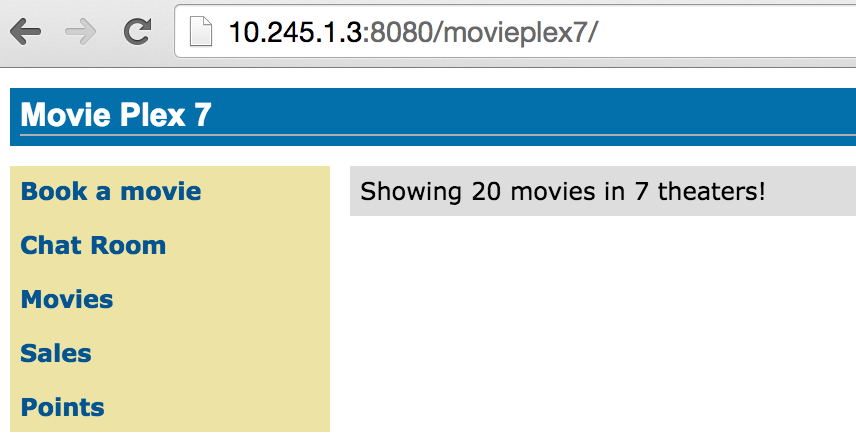

From the kubectl.sh get pods output, HOST column shows the IP address where the application is externally accessible. In our case, the IP address is 10.245.1.3. So, access the application in the browser to see output as:

This confirms that your Java EE 7 application is now accessible.
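The same check can be done without a browser. The context root /movieplex7 comes from the WildFly log shown later (Registered web context: /movieplex7) and port 8080 from the hostPort in the pod configuration; combined with the HOST IP they give the URL. A small Python sketch, assuming those values and that the application answers with HTTP 200 on its context root (an assumption, not something this post verifies); the function names are hypothetical:

```python
import urllib.request


def app_url(host_ip, host_port=8080, context="/movieplex7"):
    """Build the application URL from the pod's HOST IP and hostPort."""
    return f"http://{host_ip}:{host_port}{context}/"


def is_up(url, timeout=5.0):
    """Return True if the URL answers with HTTP 200 within `timeout` seconds."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            return resp.status == 200
    except OSError:  # URLError, HTTPError and timeouts are all OSError subclasses
        return False
```

For example, is_up(app_url("10.245.1.3")) should return True from the Mac once the pod is Running and the deployment has finished.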

Kubernetes Debugging Tips

Once the Kubernetes cluster is created, you’ll need to debug it and see what’s going on under the hood.

First of all, let's log in to the minion:

kubernetes> vagrant ssh minion-1
Last login: Tue Feb 3 01:52:22 2015 from 10.0.2.2

List of Docker containers on Minion

Let's take a look at all the Docker containers running on minion-1:

[vagrant@kubernetes-minion-1 ~]$ docker ps
CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES
3f7e174b82b1 arungupta/javaee7-hol:latest "/opt/jboss/wildfly/ 16 minutes ago Up 16 minutes k8s_wildfly.a78dc60_wildfly.default.api_59be80fa-ab48-11e4-b139-0800279696e1_75a4a7cb
1c464e71fb69 kubernetes/pause:go "/pause" 20 minutes ago Up 20 minutes 0.0.0.0:8080->8080/tcp, 0.0.0.0:9090->9090/tcp k8s_net.7946daa4_wildfly.default.api_59be80fa-ab48-11e4-b139-0800279696e1_e74d3d1d
7bdd763df691 kubernetes/skydns:2014-12-23-001 "/skydns -machines=h 21 minutes ago Up 21 minutes k8s_skydns.394cd23c_4c283aa1-ab47-11e4-b139-0800279696e1.default.api_4c283aa1-ab47-11e4-b139-0800279696e1_3352f6bd
17a140aaabbe google/cadvisor:0.7.1 "/usr/bin/cadvisor" 22 minutes ago Up 22 minutes k8s_cadvisor.68f5108e_cadvisor-agent.file-6bb810db-kubernetes-minion-1.file_65235067df34faf012fd8bb088de6b73_86e59309
a5f8cf6463e9 kubernetes/kube2sky:1.0 "/kube2sky -domain=k 22 minutes ago Up 22 minutes k8s_kube2sky.1cbba018_4c283aa1-ab47-11e4-b139-0800279696e1.default.api_4c283aa1-ab47-11e4-b139-0800279696e1_126d4d7a
28e6d2e67a92 kubernetes/fluentd-elasticsearch:1.0 "/bin/sh -c '/usr/sb 23 minutes ago Up 23 minutes k8s_fluentd-es.6361e00b_fluentd-to-elasticsearch.file-8cd71177-kubernetes-minion-1.file_a190cc221f7c0766163ed2a4ad6e32aa_a9a369d3
5623edf7decc quay.io/coreos/etcd:latest "/etcd /etcd -bind-a 25 minutes ago Up 25 minutes k8s_etcd.372da5db_4c283aa1-ab47-11e4-b139-0800279696e1.default.api_4c283aa1-ab47-11e4-b139-0800279696e1_8b658811
3575b562f23e kubernetes/pause:go "/pause" 25 minutes ago Up 25 minutes 0.0.0.0:4194->8080/tcp k8s_net.beddb979_cadvisor-agent.file-6bb810db-kubernetes-minion-1.file_65235067df34faf012fd8bb088de6b73_8376ce8e
094d76c83068 kubernetes/pause:go "/pause" 25 minutes ago Up 25 minutes k8s_net.3e0f95f3_fluentd-to-elasticsearch.file-8cd71177-kubernetes-minion-1.file_a190cc221f7c0766163ed2a4ad6e32aa_6931ca22
f8b9cd5af169 kubernetes/pause:go "/pause" 25 minutes ago Up 25 minutes k8s_net.3d64b7f6_4c283aa1-ab47-11e4-b139-0800279696e1.default.api_4c283aa1-ab47-11e4-b139-0800279696e1_b0ebce5a

The first container is specific to our application; everything else is started by Kubernetes.
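The container names encode which pod each container belongs to. Judging only from the names in this listing (an inference, not a documented format, and it may differ in other Kubernetes releases), they look like k8s_CONTAINER.HASH_POD.NAMESPACE.SOURCE_PODUID_SUFFIX, where the net containers run the kubernetes/pause image that holds each pod's port bindings. A Python sketch that splits them; the helper names are hypothetical:

```python
from typing import NamedTuple


class K8sContainerName(NamedTuple):
    container: str  # name from the pod spec; "net" is the pause container
    pod: str
    namespace: str
    source: str     # where the pod came from, e.g. "api" or "file"
    pod_uid: str


def parse_k8s_name(name):
    """Split a kubelet-created Docker container name into its parts."""
    name = name.lstrip("/")  # docker inspect prefixes names with "/"
    prefix, container_part, pod_part, pod_uid, _suffix = name.split("_")
    if prefix != "k8s":
        raise ValueError(f"not a Kubernetes-managed container: {name}")
    container = container_part.split(".")[0]  # drop the hash after the dot
    pod, namespace, source = pod_part.split(".")
    return K8sContainerName(container, pod, namespace, source, pod_uid)


def group_by_pod(names):
    """Group container names by (namespace, pod)."""
    pods = {}
    for n in names:
        parsed = parse_k8s_name(n)
        pods.setdefault((parsed.namespace, parsed.pod), []).append(parsed.container)
    return pods
```

Applied to the listing above, the wildfly pod groups the application container 3f7e174b82b1 with the pause container 1c464e71fb69, which is the one publishing ports 8080 and 9090.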

Details about each Docker container

More details about each container can be found by using its container ID as:

docker inspect <CONTAINER_ID>

In our case, the output is shown as:

[vagrant@kubernetes-minion-1 ~]$ docker inspect 3f7e174b82b1

[{

"AppArmorProfile": "",

"Args": [

"-c",

"standalone-full.xml",

"-b",

"0.0.0.0"

],

"Config": {

"AttachStderr": false,

"AttachStdin": false,

"AttachStdout": false,

"Cmd": [

"/opt/jboss/wildfly/bin/standalone.sh",

"-c",

"standalone-full.xml",

"-b",

"0.0.0.0"

],

"CpuShares": 102,

"Cpuset": "",

"Domainname": "",

"Entrypoint": null,

"Env": [

"KUBERNETES_PORT_443_TCP_PROTO=tcp",

"KUBERNETES_RO_PORT_80_TCP=tcp://10.247.82.143:80",

"SKYDNS_PORT_53_UDP=udp://10.247.0.10:53",

"KUBERNETES_PORT_443_TCP=tcp://10.247.92.82:443",

"KUBERNETES_PORT_443_TCP_PORT=443",

"KUBERNETES_PORT_443_TCP_ADDR=10.247.92.82",

"KUBERNETES_RO_PORT_80_TCP_PROTO=tcp",

"SKYDNS_PORT_53_UDP_PROTO=udp",

"KUBERNETES_RO_PORT_80_TCP_ADDR=10.247.82.143",

"SKYDNS_SERVICE_HOST=10.247.0.10",

"SKYDNS_PORT_53_UDP_PORT=53",

"SKYDNS_PORT_53_UDP_ADDR=10.247.0.10",

"KUBERNETES_SERVICE_HOST=10.247.92.82",

"KUBERNETES_RO_SERVICE_HOST=10.247.82.143",

"KUBERNETES_RO_PORT_80_TCP_PORT=80",

"SKYDNS_SERVICE_PORT=53",

"SKYDNS_PORT=udp://10.247.0.10:53",

"KUBERNETES_SERVICE_PORT=443",

"KUBERNETES_PORT=tcp://10.247.92.82:443",

"KUBERNETES_RO_SERVICE_PORT=80",

"KUBERNETES_RO_PORT=tcp://10.247.82.143:80",

"PATH=/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin",

"JAVA_HOME=/usr/lib/jvm/java",

"WILDFLY_VERSION=8.2.0.Final",

"JBOSS_HOME=/opt/jboss/wildfly"

],

"ExposedPorts": {

"8080/tcp": {},

"9090/tcp": {},

"9990/tcp": {}

},

"Hostname": "wildfly",

"Image": "arungupta/javaee7-hol",

"MacAddress": "",

"Memory": 0,

"MemorySwap": 0,

"NetworkDisabled": false,

"OnBuild": null,

"OpenStdin": false,

"PortSpecs": null,

"StdinOnce": false,

"Tty": false,

"User": "jboss",

"Volumes": null,

"WorkingDir": "/opt/jboss"

},

"Created": "2015-02-03T02:03:54.882111127Z",

"Driver": "devicemapper",

"ExecDriver": "native-0.2",

"HostConfig": {

"Binds": null,

"CapAdd": null,

"CapDrop": null,

"ContainerIDFile": "",

"Devices": null,

"Dns": [

"10.247.0.10",

"10.0.2.3"

],

"DnsSearch": [

"default.kubernetes.local",

"kubernetes.local",

"c.hospitality.swisscom.com"

],

"ExtraHosts": null,

"IpcMode": "",

"Links": null,

"LxcConf": null,

"NetworkMode": "container:1c464e71fb69adfb2a407217d0c84600a18f755721628ea3f329f48a2cdaa64f",

"PortBindings": {

"8080/tcp": [

{

"HostIp": "",

"HostPort": "8080"

}

],

"9090/tcp": [

{

"HostIp": "",

"HostPort": "9090"

}

]

},

"Privileged": false,

"PublishAllPorts": false,

"RestartPolicy": {

"MaximumRetryCount": 0,

"Name": ""

},

"SecurityOpt": null,

"VolumesFrom": null

},

"HostnamePath": "",

"HostsPath": "/var/lib/docker/containers/1c464e71fb69adfb2a407217d0c84600a18f755721628ea3f329f48a2cdaa64f/hosts",

"Id": "3f7e174b82b1520abdc7f39f34ad4e4a9cb4d312466143b54935c43d4c258e3f",

"Image": "a068decaf8928737340f8f08fbddf97d9b4f7838d154e88ed77fbcf9898a83f2",

"MountLabel": "",

"Name": "/k8s_wildfly.a78dc60_wildfly.default.api_59be80fa-ab48-11e4-b139-0800279696e1_75a4a7cb",

"NetworkSettings": {

"Bridge": "",

"Gateway": "",

"IPAddress": "",

"IPPrefixLen": 0,

"MacAddress": "",

"PortMapping": null,

"Ports": null

},

"Path": "/opt/jboss/wildfly/bin/standalone.sh",

"ProcessLabel": "",

"ResolvConfPath": "/var/lib/docker/containers/1c464e71fb69adfb2a407217d0c84600a18f755721628ea3f329f48a2cdaa64f/resolv.conf",

"State": {

"Error": "",

"ExitCode": 0,

"FinishedAt": "0001-01-01T00:00:00Z",

"OOMKilled": false,

"Paused": false,

"Pid": 17920,

"Restarting": false,

"Running": true,

"StartedAt": "2015-02-03T02:03:55.471392394Z"

},

"Volumes": {},

"VolumesRW": {}

}

]
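A few fields in this output are worth calling out. HostConfig.NetworkMode is container:1c464e71fb69…, so the WildFly container shares the network namespace of the kubernetes/pause container 1c464e71fb69 from the docker ps listing, which is where the 8080 and 9090 port bindings live. The NAME_SERVICE_HOST and NAME_SERVICE_PORT variables in Env are how services such as skydns and the Kubernetes API are advertised to the container. A Python sketch that extracts these fields, assuming the JSON layout of this Docker version; the function name is hypothetical:

```python
import json


def summarize_inspect(inspect_output):
    """Pull a few debugging-relevant fields out of `docker inspect` JSON."""
    info = json.loads(inspect_output)[0]  # docker inspect prints a JSON array
    network_mode = info["HostConfig"]["NetworkMode"]
    # "container:<id>" means the network namespace is borrowed from another
    # container (here, the pod's pause container).
    net_owner = (network_mode.split(":", 1)[1]
                 if network_mode.startswith("container:") else None)
    env = dict(e.split("=", 1) for e in info["Config"]["Env"])
    services = {}
    for key, value in env.items():
        if key.endswith("_SERVICE_HOST"):
            prefix = key[: -len("_SERVICE_HOST")]
            services[prefix] = (value, env.get(prefix + "_SERVICE_PORT"))
    return {
        "name": info["Name"].lstrip("/"),
        "pid": info["State"]["Pid"],
        "network_container": net_owner,
        "services": services,
    }
```

For the output above this would report Pid 17920, network container 1c464e71fb69…, and the KUBERNETES, KUBERNETES_RO and SKYDNS services.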

Logs from the Docker container

Logs from the container can be seen using the command:

docker logs <CONTAINER_ID>

In our case, the output is shown as:

[vagrant@kubernetes-minion-1 ~]$ docker logs 3f7e174b82b1

=========================================================================

JBoss Bootstrap Environment

JBOSS_HOME: /opt/jboss/wildfly

JAVA: /usr/lib/jvm/java/bin/java

JAVA_OPTS: -server -Xms64m -Xmx512m -XX:MaxPermSize=256m -Djava.net.preferIPv4Stack=true -Djboss.modules.system.pkgs=org.jboss.byteman -Djava.awt.headless=true

=========================================================================

. . .

02:04:12,078 INFO [org.jboss.as.jpa] (ServerService Thread Pool -- 57) JBAS011409: Starting Persistence Unit (phase 1 of 2) Service 'movieplex7-1.0-SNAPSHOT.war#movieplex7PU'

02:04:12,128 INFO [org.hibernate.jpa.internal.util.LogHelper] (ServerService Thread Pool -- 57) HHH000204: Processing PersistenceUnitInfo [

name: movieplex7PU

...]

02:04:12,154 INFO [org.hornetq.core.server] (ServerService Thread Pool -- 56) HQ221007: Server is now live

02:04:12,155 INFO [org.hornetq.core.server] (ServerService Thread Pool -- 56) HQ221001: HornetQ Server version 2.4.5.FINAL (Wild Hornet, 124) [f13dedbd-ab48-11e4-a924-615afe337134]

02:04:12,175 INFO [org.hornetq.core.server] (ServerService Thread Pool -- 56) HQ221003: trying to deploy queue jms.queue.ExpiryQueue

02:04:12,735 INFO [org.jboss.as.messaging] (ServerService Thread Pool -- 56) JBAS011601: Bound messaging object to jndi name java:/jms/queue/ExpiryQueue

02:04:12,736 INFO [org.hornetq.core.server] (ServerService Thread Pool -- 60) HQ221003: trying to deploy queue jms.queue.DLQ

02:04:12,749 INFO [org.jboss.as.messaging] (ServerService Thread Pool -- 60) JBAS011601: Bound messaging object to jndi name java:/jms/queue/DLQ

02:04:12,792 INFO [org.hibernate.Version] (ServerService Thread Pool -- 57) HHH000412: Hibernate Core {4.3.7.Final}

02:04:12,795 INFO [org.hibernate.cfg.Environment] (ServerService Thread Pool -- 57) HHH000206: hibernate.properties not found

02:04:12,801 INFO [org.hibernate.cfg.Environment] (ServerService Thread Pool -- 57) HHH000021: Bytecode provider name : javassist

02:04:12,820 INFO [org.jboss.as.connector.deployment] (MSC service thread 1-1) JBAS010406: Registered connection factory java:/JmsXA

02:04:12,997 INFO [org.hornetq.jms.server] (ServerService Thread Pool -- 59) HQ121005: Invalid "host" value "0.0.0.0" detected for "http-connector" connector. Switching to "wildfly". If this new address is incorrect please manually configure the connector to use the proper one.

02:04:13,021 INFO [org.jboss.as.messaging] (ServerService Thread Pool -- 59) JBAS011601: Bound messaging object to jndi name java:jboss/exported/jms/RemoteConnectionFactory

02:04:13,025 INFO [org.jboss.as.messaging] (ServerService Thread Pool -- 58) JBAS011601: Bound messaging object to jndi name java:/ConnectionFactory

02:04:13,072 INFO [org.hornetq.ra] (MSC service thread 1-1) HornetQ resource adaptor started

02:04:13,073 INFO [org.jboss.as.connector.services.resourceadapters.ResourceAdapterActivatorService$ResourceAdapterActivator] (MSC service thread 1-1) IJ020002: Deployed: file://RaActivatorhornetq-ra

02:04:13,078 INFO [org.jboss.as.messaging] (MSC service thread 1-4) JBAS011601: Bound messaging object to jndi name java:jboss/DefaultJMSConnectionFactory

02:04:13,076 INFO [org.jboss.as.connector.deployment] (MSC service thread 1-8) JBAS010401: Bound JCA ConnectionFactory

02:04:13,487 INFO [org.jboss.weld.deployer] (MSC service thread 1-2) JBAS016002: Processing weld deployment movieplex7-1.0-SNAPSHOT.war

02:04:13,694 INFO [org.hibernate.validator.internal.util.Version] (MSC service thread 1-2) HV000001: Hibernate Validator 5.1.3.Final

02:04:13,838 INFO [org.jboss.as.ejb3.deployment.processors.EjbJndiBindingsDeploymentUnitProcessor] (MSC service thread 1-2) JNDI bindings for session bean named ShowTimingFacadeREST in deployment unit deployment "movieplex7-1.0-SNAPSHOT.war" are as follows:

java:global/movieplex7-1.0-SNAPSHOT/ShowTimingFacadeREST!org.javaee7.movieplex7.rest.ShowTimingFacadeREST

java:app/movieplex7-1.0-SNAPSHOT/ShowTimingFacadeREST!org.javaee7.movieplex7.rest.ShowTimingFacadeREST

java:module/ShowTimingFacadeREST!org.javaee7.movieplex7.rest.ShowTimingFacadeREST

java:global/movieplex7-1.0-SNAPSHOT/ShowTimingFacadeREST

java:app/movieplex7-1.0-SNAPSHOT/ShowTimingFacadeREST

java:module/ShowTimingFacadeREST

02:04:13,838 INFO [org.jboss.as.ejb3.deployment.processors.EjbJndiBindingsDeploymentUnitProcessor] (MSC service thread 1-2) JNDI bindings for session bean named TheaterFacadeREST in deployment unit deployment "movieplex7-1.0-SNAPSHOT.war" are as follows:

java:global/movieplex7-1.0-SNAPSHOT/TheaterFacadeREST!org.javaee7.movieplex7.rest.TheaterFacadeREST

java:app/movieplex7-1.0-SNAPSHOT/TheaterFacadeREST!org.javaee7.movieplex7.rest.TheaterFacadeREST

java:module/TheaterFacadeREST!org.javaee7.movieplex7.rest.TheaterFacadeREST

java:global/movieplex7-1.0-SNAPSHOT/TheaterFacadeREST

java:app/movieplex7-1.0-SNAPSHOT/TheaterFacadeREST

java:module/TheaterFacadeREST

02:04:13,839 INFO [org.jboss.as.ejb3.deployment.processors.EjbJndiBindingsDeploymentUnitProcessor] (MSC service thread 1-2) JNDI bindings for session bean named MovieFacadeREST in deployment unit deployment "movieplex7-1.0-SNAPSHOT.war" are as follows:

java:global/movieplex7-1.0-SNAPSHOT/MovieFacadeREST!org.javaee7.movieplex7.rest.MovieFacadeREST

java:app/movieplex7-1.0-SNAPSHOT/MovieFacadeREST!org.javaee7.movieplex7.rest.MovieFacadeREST

java:module/MovieFacadeREST!org.javaee7.movieplex7.rest.MovieFacadeREST

java:global/movieplex7-1.0-SNAPSHOT/MovieFacadeREST

java:app/movieplex7-1.0-SNAPSHOT/MovieFacadeREST

java:module/MovieFacadeREST

02:04:13,840 INFO [org.jboss.as.ejb3.deployment.processors.EjbJndiBindingsDeploymentUnitProcessor] (MSC service thread 1-2) JNDI bindings for session bean named SalesFacadeREST in deployment unit deployment "movieplex7-1.0-SNAPSHOT.war" are as follows:

java:global/movieplex7-1.0-SNAPSHOT/SalesFacadeREST!org.javaee7.movieplex7.rest.SalesFacadeREST

java:app/movieplex7-1.0-SNAPSHOT/SalesFacadeREST!org.javaee7.movieplex7.rest.SalesFacadeREST

java:module/SalesFacadeREST!org.javaee7.movieplex7.rest.SalesFacadeREST

java:global/movieplex7-1.0-SNAPSHOT/SalesFacadeREST

java:app/movieplex7-1.0-SNAPSHOT/SalesFacadeREST

java:module/SalesFacadeREST

02:04:13,840 INFO [org.jboss.as.ejb3.deployment.processors.EjbJndiBindingsDeploymentUnitProcessor] (MSC service thread 1-2) JNDI bindings for session bean named TimeslotFacadeREST in deployment unit deployment "movieplex7-1.0-SNAPSHOT.war" are as follows:

java:global/movieplex7-1.0-SNAPSHOT/TimeslotFacadeREST!org.javaee7.movieplex7.rest.TimeslotFacadeREST

java:app/movieplex7-1.0-SNAPSHOT/TimeslotFacadeREST!org.javaee7.movieplex7.rest.TimeslotFacadeREST

java:module/TimeslotFacadeREST!org.javaee7.movieplex7.rest.TimeslotFacadeREST

java:global/movieplex7-1.0-SNAPSHOT/TimeslotFacadeREST

java:app/movieplex7-1.0-SNAPSHOT/TimeslotFacadeREST

java:module/TimeslotFacadeREST

02:04:14,802 INFO [org.jboss.as.messaging] (MSC service thread 1-1) JBAS011601: Bound messaging object to jndi name java:global/jms/pointsQueue

02:04:14,931 INFO [org.jboss.weld.deployer] (MSC service thread 1-2) JBAS016005: Starting Services for CDI deployment: movieplex7-1.0-SNAPSHOT.war

02:04:15,018 INFO [org.jboss.weld.Version] (MSC service thread 1-2) WELD-000900: 2.2.6 (Final)

02:04:15,109 INFO [org.hornetq.core.server] (ServerService Thread Pool -- 57) HQ221003: trying to deploy queue jms.queue.movieplex7-1.0-SNAPSHOT_movieplex7-1.0-SNAPSHOT_movieplex7-1.0-SNAPSHOT_java:global/jms/pointsQueue

02:04:15,110 INFO [org.jboss.weld.deployer] (MSC service thread 1-6) JBAS016008: Starting weld service for deployment movieplex7-1.0-SNAPSHOT.war

02:04:15,787 INFO [org.jboss.as.jpa] (ServerService Thread Pool -- 57) JBAS011409: Starting Persistence Unit (phase 2 of 2) Service 'movieplex7-1.0-SNAPSHOT.war#movieplex7PU'

02:04:16,189 INFO [org.hibernate.annotations.common.Version] (ServerService Thread Pool -- 57) HCANN000001: Hibernate Commons Annotations {4.0.4.Final}

02:04:17,174 INFO [org.hibernate.dialect.Dialect] (ServerService Thread Pool -- 57) HHH000400: Using dialect: org.hibernate.dialect.H2Dialect

02:04:17,191 WARN [org.hibernate.dialect.H2Dialect] (ServerService Thread Pool -- 57) HHH000431: Unable to determine H2 database version, certain features may not work

02:04:17,954 INFO [org.hibernate.hql.internal.ast.ASTQueryTranslatorFactory] (ServerService Thread Pool -- 57) HHH000397: Using ASTQueryTranslatorFactory

02:04:19,832 INFO [org.hibernate.dialect.Dialect] (ServerService Thread Pool -- 57) HHH000400: Using dialect: org.hibernate.dialect.H2Dialect

02:04:19,833 WARN [org.hibernate.dialect.H2Dialect] (ServerService Thread Pool -- 57) HHH000431: Unable to determine H2 database version, certain features may not work

02:04:19,854 WARN [org.hibernate.jpa.internal.schemagen.GenerationTargetToDatabase] (ServerService Thread Pool -- 57) Unable to execute JPA schema generation drop command [DROP TABLE SALES]

02:04:19,855 WARN [org.hibernate.jpa.internal.schemagen.GenerationTargetToDatabase] (ServerService Thread Pool -- 57) Unable to execute JPA schema generation drop command [DROP TABLE POINTS]

02:04:19,855 WARN [org.hibernate.jpa.internal.schemagen.GenerationTargetToDatabase] (ServerService Thread Pool -- 57) Unable to execute JPA schema generation drop command [DROP TABLE SHOW_TIMING]

02:04:19,855 WARN [org.hibernate.jpa.internal.schemagen.GenerationTargetToDatabase] (ServerService Thread Pool -- 57) Unable to execute JPA schema generation drop command [DROP TABLE MOVIE]

02:04:19,856 WARN [org.hibernate.jpa.internal.schemagen.GenerationTargetToDatabase] (ServerService Thread Pool -- 57) Unable to execute JPA schema generation drop command [DROP TABLE TIMESLOT]

02:04:19,857 WARN [org.hibernate.jpa.internal.schemagen.GenerationTargetToDatabase] (ServerService Thread Pool -- 57) Unable to execute JPA schema generation drop command [DROP TABLE THEATER]

02:04:23,942 INFO [io.undertow.websockets.jsr] (MSC service thread 1-5) UT026003: Adding annotated server endpoint class org.javaee7.movieplex7.chat.ChatServer for path /websocket

02:04:24,975 INFO [javax.enterprise.resource.webcontainer.jsf.config] (MSC service thread 1-5) Initializing Mojarra 2.2.8-jbossorg-1 20140822-1131 for context '/movieplex7'

02:04:26,377 INFO [javax.enterprise.resource.webcontainer.jsf.config] (MSC service thread 1-5) Monitoring file:/opt/jboss/wildfly/standalone/tmp/vfs/temp/temp1267e5586f39ea50/movieplex7-1.0-SNAPSHOT.war-ea3c92cddc1c81c/WEB-INF/faces-config.xml for modifications

02:04:30,216 INFO [org.jboss.resteasy.spi.ResteasyDeployment] (MSC service thread 1-5) Deploying javax.ws.rs.core.Application: class org.javaee7.movieplex7.rest.ApplicationConfig

02:04:30,247 INFO [org.jboss.resteasy.spi.ResteasyDeployment] (MSC service thread 1-5) Adding class resource org.javaee7.movieplex7.rest.TheaterFacadeREST from Application class org.javaee7.movieplex7.rest.ApplicationConfig

02:04:30,248 INFO [org.jboss.resteasy.spi.ResteasyDeployment] (MSC service thread 1-5) Adding class resource org.javaee7.movieplex7.rest.ShowTimingFacadeREST from Application class org.javaee7.movieplex7.rest.ApplicationConfig

02:04:30,248 INFO [org.jboss.resteasy.spi.ResteasyDeployment] (MSC service thread 1-5) Adding class resource org.javaee7.movieplex7.rest.MovieFacadeREST from Application class org.javaee7.movieplex7.rest.ApplicationConfig

02:04:30,248 INFO [org.jboss.resteasy.spi.ResteasyDeployment] (MSC service thread 1-5) Adding provider class org.javaee7.movieplex7.json.MovieWriter from Application class org.javaee7.movieplex7.rest.ApplicationConfig

02:04:30,249 INFO [org.jboss.resteasy.spi.ResteasyDeployment] (MSC service thread 1-5) Adding provider class org.javaee7.movieplex7.json.MovieReader from Application class org.javaee7.movieplex7.rest.ApplicationConfig

02:04:30,267 INFO [org.jboss.resteasy.spi.ResteasyDeployment] (MSC service thread 1-5) Adding class resource org.javaee7.movieplex7.rest.SalesFacadeREST from Application class org.javaee7.movieplex7.rest.ApplicationConfig

02:04:30,267 INFO [org.jboss.resteasy.spi.ResteasyDeployment] (MSC service thread 1-5) Adding class resource org.javaee7.movieplex7.rest.TimeslotFacadeREST from Application class org.javaee7.movieplex7.rest.ApplicationConfig

02:04:31,544 INFO [org.wildfly.extension.undertow] (MSC service thread 1-5) JBAS017534: Registered web context: /movieplex7

02:04:32,187 INFO [org.jboss.as.server] (ServerService Thread Pool -- 31) JBAS018559: Deployed "movieplex7-1.0-SNAPSHOT.war" (runtime-name : "movieplex7-1.0-SNAPSHOT.war")

02:04:34,800 INFO [org.jboss.as] (Controller Boot Thread) JBAS015961: Http management interface listening on http://127.0.0.1:9990/management

02:04:34,859 INFO [org.jboss.as] (Controller Boot Thread) JBAS015951: Admin console listening on http://127.0.0.1:9990

02:04:34,859 INFO [org.jboss.as] (Controller Boot Thread) JBAS015874: WildFly 8.2.0.Final "Tweek" started in 38558ms - Started 400 of 452 services (104 services are lazy, passive or on-demand)

WildFly startup log, including the application deployment, is shown here.
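When the pod is restarted or redeployed, it helps to check the log for the lines that matter rather than scrolling through it. The message IDs below (JBAS017534, JBAS018559, JBAS015874) are copied from the log above; the sketch assumes the docker logs output has been captured as text, and the function name is hypothetical:

```python
import re

# Message IDs taken from the WildFly 8.2 startup log above.
DEPLOYED = re.compile(r'JBAS018559: Deployed "([^"]+)"')
CONTEXT = re.compile(r"JBAS017534: Registered web context: (\S+)")
STARTED = re.compile(r"JBAS015874: (.+?) started in (\d+)ms")


def scan_wildfly_log(text):
    """Summarize deployments, web contexts, startup time and WARN/ERROR lines."""
    summary = {"deployed": [], "contexts": [], "startup_ms": None, "problems": []}
    for line in text.splitlines():
        if m := DEPLOYED.search(line):
            summary["deployed"].append(m.group(1))
        if m := CONTEXT.search(line):
            summary["contexts"].append(m.group(1))
        if m := STARTED.search(line):
            summary["startup_ms"] = int(m.group(2))
        if " WARN " in line or " ERROR " in line:
            summary["problems"].append(line.strip())
    return summary
```

Run against the log above, it would report movieplex7-1.0-SNAPSHOT.war deployed at /movieplex7 with a 38558 ms startup, and flag the Hibernate warnings about the H2 version and the DROP TABLE commands, which are expected on a fresh in-memory database.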

Log into the Docker container

You can also log into the container and look at the WildFly logs there. There are a couple of ways to do that.

The first is to use the container name and exec a bash shell. For that, get the name of the container as:

docker inspect <CONTAINER_ID> | grep Name

In our case, the output is:

[vagrant@kubernetes-minion-1 ~]$ docker inspect 3f7e174b82b1 | grep Name

"Name": ""

"Name": "/k8s_wildfly.a78dc60_wildfly.default.api_59be80fa-ab48-11e4-b139-0800279696e1_75a4a7cb",

Log into the container as:

[vagrant@kubernetes-minion-1 ~]$ docker exec -it k8s_wildfly.a78dc60_wildfly.default.api_59be80fa-ab48-11e4-b139-0800279696e1_75a4a7cb bash
[root@wildfly /]# pwd
/

The other, more classical, way is to get the process ID of the container as:

docker inspect <CONTAINER_ID> | grep Pid

In our case, the output is:

[vagrant@kubernetes-minion-1 ~]$ docker inspect 3f7e174b82b1 | grep Pid

"Pid": 17920,

Log into the container as:

[vagrant@kubernetes-minion-1 ~]$ sudo nsenter -m -u -n -i -p -t 17920 /bin/bash
[root@wildfly /]# pwd
/

And now the complete WildFly distribution is available at:

[root@wildfly /]# cd /opt/jboss/wildfly
[root@wildfly wildfly]# ls -la
total 424
drwxr-xr-x 10 jboss jboss 4096 Dec 5 22:22 .
drwx------ 4 jboss jboss 4096 Dec 5 22:22 ..
drwxr-xr-x 3 jboss jboss 4096 Nov 20 22:43 appclient
drwxr-xr-x 5 jboss jboss 4096 Nov 20 22:43 bin
-rw-r--r-- 1 jboss jboss 2451 Nov 20 22:43 copyright.txt
drwxr-xr-x 4 jboss jboss 4096 Nov 20 22:43 docs
drwxr-xr-x 5 jboss jboss 4096 Nov 20 22:43 domain
drwx------ 2 jboss jboss 4096 Nov 20 22:43 .installation
-rw-r--r-- 1 jboss jboss 354682 Nov 20 22:43 jboss-modules.jar
-rw-r--r-- 1 jboss jboss 26530 Nov 20 22:43 LICENSE.txt
drwxr-xr-x 3 jboss jboss 4096 Nov 20 22:43 modules
-rw-r--r-- 1 jboss jboss 2356 Nov 20 22:43 README.txt
drwxr-xr-x 8 jboss jboss 4096 Feb 3 02:03 standalone
drwxr-xr-x 2 jboss jboss 4096 Nov 20 22:43 welcome-content
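Note that grep Name returns two matches: the empty one is HostConfig.RestartPolicy.Name, and only the second is the container name. Reading the fields from the parsed JSON avoids that ambiguity. A Python sketch, assuming the docker inspect output has been captured as text; the function name is hypothetical, and the returned argument lists mirror the two commands above:

```python
import json


def login_commands(inspect_output, shell="/bin/bash"):
    """Build the docker exec and nsenter commands from `docker inspect` JSON."""
    info = json.loads(inspect_output)[0]
    # Top-level Name is the container's name; RestartPolicy.Name is unrelated.
    name = info["Name"].lstrip("/")
    pid = info["State"]["Pid"]
    return {
        "exec": ["docker", "exec", "-it", name, shell],
        "nsenter": ["sudo", "nsenter", "-m", "-u", "-n", "-i", "-p",
                    "-t", str(pid), shell],
    }
```

Either list can be passed to subprocess.run from an interactive terminal on the minion. docker exec needs Docker 1.3 or later, while nsenter works with any version but needs root.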

Clean up the cluster

The entire Kubernetes cluster can be cleaned up either from the VirtualBox console or from the command line as:

kubernetes> vagrant halt

==> minion-1: Attempting graceful shutdown of VM...

==> minion-1: Forcing shutdown of VM...

==> master: Attempting graceful shutdown of VM...

==> master: Forcing shutdown of VM...

kubernetes> vagrant destroy

minion-1: Are you sure you want to destroy the 'minion-1' VM? [y/N] y

==> minion-1: Destroying VM and associated drives...

==> minion-1: Running cleanup tasks for 'shell' provisioner...

master: Are you sure you want to destroy the 'master' VM? [y/N] y

==> master: Destroying VM and associated drives...

==> master: Running cleanup tasks for 'shell' provisioner...

So we learned how to run a Java EE 7 application deployed in WildFly and hosted using Kubernetes and Docker.

Enjoy!

| Reference: | Java EE 7 and WildFly on Kubernetes using Vagrant from our JCG partner Arun Gupta at the Miles to go 2.0 … blog. |