MongoDB and Java Tutorial

This article is part of our Academy Course titled MongoDB – A Scalable NoSQL DB.

In this course, you will be introduced to MongoDB. You will learn how to install it and how to operate it via its shell. Moreover, you will learn how to access it programmatically from Java and how to leverage MapReduce with it. Finally, more advanced concepts like sharding and replication will be explained.


1. Introduction

In previous parts of the tutorial we have briefly looked at many MongoDB features, its architecture, installation and most of the supported commands. Hopefully, you already see that MongoDB fits your application's needs nicely and would like to make it a part of your software stack.

In this part we are going to cover the integration of MongoDB with applications written in Java. We chose Java because of its popularity, but MongoDB provides bindings (or drivers) for many other languages; for the complete list, please refer to the official documentation.

We are going to develop a simple book store application with the goal of covering most of the use cases you may encounter, emphasizing the MongoDB way of solving them. The solutions will be presented as typical JUnit test cases with fluent assertions provided by AssertJ, occasionally accompanied by MongoDB shell commands as verification steps.

Though Spring Data MongoDB is by far the most popular choice in the Java community, we will use another great library called Morphia: a lightweight, type-safe library for mapping Java objects to and from MongoDB. The latest version of Morphia at the time of writing is 0.106 and, unfortunately, it does not support all MongoDB 2.6 features yet (such as text indexes and full-text search).

2. Extensions

Another good thing about Morphia is its extensibility. In particular, we are very interested in the JSR 303: Bean Validation 1.0 extension, which the Morphia team provides out of the box. It is a very useful addition which helps to ensure that the data objects flowing through the system are valid and meaningful. We will see a couple of examples later on.

3. Dependencies

Our project is going to use Apache Maven for build and dependency management, as it is quite a popular choice in the Java community. Luckily, Morphia releases are available through public Maven repositories, and we are going to use these three modules:

<properties>
    <org.mongodb.morphia.version>0.106</org.mongodb.morphia.version>
</properties>

<dependencies>
    <dependency>
        <groupId>org.mongodb.morphia</groupId>
        <artifactId>morphia</artifactId>
        <version>${org.mongodb.morphia.version}</version>
    </dependency>

    <dependency>
        <groupId>org.mongodb.morphia</groupId>
        <artifactId>morphia-validation</artifactId>
        <version>${org.mongodb.morphia.version}</version>
    </dependency>

    <dependency>
        <groupId>org.mongodb.morphia</groupId>
        <artifactId>morphia-logging-slf4j</artifactId>
        <version>${org.mongodb.morphia.version}</version>
    </dependency>
</dependencies>

The core module provides the annotations, the mappings and basically all the classes required to start building applications on top of MongoDB. The validation module (or extension) provides integration with JSR 303: Bean Validation 1.0, as we mentioned before. Finally, the logging module integrates with the excellent SLF4J framework.

4. Data Model

As we are building a simple book store application, its data domain can be represented by these three classes:

- Author: the book’s author

- Book: the book itself

- Store: the store selling the books

In MongoDB terms, we are going to create a database bookstore with three document collections:

- authors: all known book authors

- books: all available books

- stores: all existing stores selling the books

The transparent mapping between Java classes (Author, Book, Store) and MongoDB collections (authors, books, stores) is one of Morphia's responsibilities. Let us take a look at the Author class first, as it is the simplest one.

package com.javacodegeeks.mongodb;

import org.bson.types.ObjectId;
import org.hibernate.validator.constraints.NotEmpty;
import org.mongodb.morphia.annotations.Entity;
import org.mongodb.morphia.annotations.Id;
import org.mongodb.morphia.annotations.Index;
import org.mongodb.morphia.annotations.Indexes;
import org.mongodb.morphia.annotations.Property;
import org.mongodb.morphia.annotations.Version;

@Entity( value = "authors", noClassnameStored = true )
@Indexes( {
    // Compound index: lastName descending, then firstName descending
    @Index( "-lastName, -firstName" )
} )
public class Author {
    @Id private ObjectId id;
    @Version private long version;
    @Property @NotEmpty private String firstName;
    @Property @NotEmpty private String lastName;

    // No-argument constructor used by Morphia to instantiate the class when loading documents
    public Author() {
    }

    public Author( final String firstName, final String lastName ) {
        this.lastName = lastName;
        this.firstName = firstName;
    }

    public ObjectId getId() {
        return id;
    }

    protected void setId( final ObjectId id ) {
        this.id = id;
    }

    public long getVersion() {
        return version;
    }

    public void setVersion( final long version ) {
        this.version = version;
    }

    public String getLastName() {
        return lastName;
    }

    public void setLastName( final String lastName ) {
        this.lastName = lastName;
    }

    public String getFirstName() {
        return firstName;
    }

    public void setFirstName( final String firstName ) {
        this.firstName = firstName;
    }
}

For Java developers working with JPA and/or Hibernate, such POJOs (plain old Java objects) look very familiar. The @Entity annotation maps the Java class to a MongoDB collection, in this case Author -> authors. A quick note about the noClassnameStored property: by default, Morphia stores the full Java class name inside each MongoDB document. This is actually quite useful if the same collection may contain documents of different types (that is, instances of different Java classes). Our application does not need this feature, so Morphia is instructed not to store this information (which also makes the documents look cleaner).
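To make the effect of noClassnameStored concrete, here is a minimal sketch that models a stored Author document as a plain Java map. It assumes the discriminator key is named className (Morphia's default key name); ClassNameSketch and toDocument are hypothetical names, and neither Morphia nor the driver is involved. The _id and version fields are left out for brevity.

```java
import java.util.LinkedHashMap;
import java.util.Map;

// Illustrative sketch only: how an Author document might look with and without
// the class-name discriminator. This is not Morphia's mapper.
class ClassNameSketch {
    // Assumption: Morphia's default discriminator key is "className".
    static final String DISCRIMINATOR_KEY = "className";

    static Map<String, Object> toDocument(String firstName, String lastName, boolean noClassnameStored) {
        Map<String, Object> doc = new LinkedHashMap<>();
        if (!noClassnameStored) {
            // Default behaviour: record the Java type so mixed collections can be decoded
            doc.put(DISCRIMINATOR_KEY, "com.javacodegeeks.mongodb.Author");
        }
        doc.put("firstName", firstName);
        doc.put("lastName", lastName);
        return doc;
    }

    public static void main(String[] args) {
        // {className=com.javacodegeeks.mongodb.Author, firstName=Kristina, lastName=Chodorow}
        System.out.println(toDocument("Kristina", "Chodorow", false));
        // {firstName=Kristina, lastName=Chodorow}
        System.out.println(toDocument("Kristina", "Chodorow", true));
    }
}
```

With noClassnameStored = true every document in the collection is assumed to be an Author, which is exactly the situation in our book store.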

Next, we declare the MongoDB document fields as properties of the Java class. In the case of Author, these are:

- id (marked with @Id)

- version (marked with @Version), we are going to use this property for optimistic locking in case of concurrent updates

- firstName (marked with @Property), additionally it has the @NotEmpty validation constraint defined which requires this property to be set

- lastName (marked with @Property), additionally it has the @NotEmpty validation constraint defined which requires this property to be set

And lastly, the Author class defines one compound index for lastName and firstName properties (the indexing concepts will be discussed in depth in Part 7. MongoDB Security, Profiling, Indexing, Cursors and Bulk Operations Guide).
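To visualize the key order this compound index keeps, here is a small plain-Java sketch: "-lastName, -firstName" means last names in descending order and, for equal last names, first names in descending order. CompoundIndexOrderSketch and Name are hypothetical names used only for illustration; nothing here talks to MongoDB.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Comparator;
import java.util.List;

// Sketch: the ordering of index keys for an index declared as "-lastName, -firstName".
class CompoundIndexOrderSketch {
    static final class Name {
        final String first;
        final String last;
        Name(String first, String last) { this.first = first; this.last = last; }
        @Override public String toString() { return last + ", " + first; }
    }

    // lastName descending, then firstName descending for equal last names
    static final Comparator<Name> INDEX_ORDER =
        Comparator.comparing((Name n) -> n.last).reversed()
                  .thenComparing(Comparator.comparing((Name n) -> n.first).reversed());

    static List<String> indexOrder(List<Name> names) {
        List<Name> sorted = new ArrayList<>(names);
        sorted.sort(INDEX_ORDER);
        List<String> out = new ArrayList<>();
        for (Name n : sorted) out.add(n.toString());
        return out;
    }

    public static void main(String[] args) {
        List<Name> authors = Arrays.asList(
            new Name("Kristina", "Chodorow"),
            new Name("Martin", "Fowler"),
            new Name("Pramod J", "Sadalage"),
            new Name("Kyle", "Banker"),
            new Name("Rick", "Copeland"));
        // Prints Sadalage, Fowler, Copeland, Chodorow, Banker (one per line, with first names)
        for (String n : indexOrder(authors)) System.out.println(n);
    }
}
```

Because MongoDB can walk an index in either direction, an index in this order can also serve sorts on { lastName: 1, firstName: 1 }, but not sorts with mixed directions such as { lastName: 1, firstName: -1 }.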

Let us move on to slightly more complex examples involving the two other classes, Book and Store. Here is the Book class.

package com.javacodegeeks.mongodb;

import java.util.ArrayList;
import java.util.Date;
import java.util.List;
import java.util.Set;
import java.util.TreeSet;

import javax.validation.Valid;
import javax.validation.constraints.NotNull;

import org.bson.types.ObjectId;
import org.hibernate.validator.constraints.NotEmpty;
import org.mongodb.morphia.annotations.Embedded;
import org.mongodb.morphia.annotations.Entity;
import org.mongodb.morphia.annotations.Id;
import org.mongodb.morphia.annotations.Indexed;
import org.mongodb.morphia.annotations.Property;
import org.mongodb.morphia.annotations.Reference;
import org.mongodb.morphia.annotations.Version;
import org.mongodb.morphia.utils.IndexDirection;

@Entity( value = "books", noClassnameStored = true )
public class Book {
    @Id private ObjectId id;
    @Version private long version;
    @Property @Indexed( IndexDirection.DESC ) @NotEmpty private String title;
    // Only references to the author documents are stored inside the book document
    @Reference @Valid private List< Author > authors = new ArrayList<>();
    // Stored under the field name "published"
    @Property( "published" ) @NotNull private Date publishedDate;
    @Property( concreteClass = TreeSet.class ) @Indexed
    private Set< String > categories = new TreeSet<>();
    // The whole Publisher object is stored inside the book document
    @Embedded @Valid @NotNull private Publisher publisher;

    public Book() {
    }

    public Book( final String title ) {
        this.title = title;
    }

    public ObjectId getId() {
        return id;
    }

    protected void setId( final ObjectId id ) {
        this.id = id;
    }

    public long getVersion() {
        return version;
    }

    public void setVersion( final long version ) {
        this.version = version;
    }

    public String getTitle() {
        return title;
    }

    public void setTitle( final String title ) {
        this.title = title;
    }

    public List< Author > getAuthors() {
        return authors;
    }

    public void setAuthors( final List< Author > authors ) {
        this.authors = authors;
    }

    public Date getPublishedDate() {
        return publishedDate;
    }

    public void setPublishedDate( final Date publishedDate ) {
        this.publishedDate = publishedDate;
    }

    public Set< String > getCategories() {
        return categories;
    }

    public void setCategories( final Set< String > categories ) {
        this.categories = categories;
    }

    public Publisher getPublisher() {
        return publisher;
    }

    public void setPublisher( final Publisher publisher ) {
        this.publisher = publisher;
    }
}

We have already seen most of these annotations, but there are a couple of new ones:

- publishedDate (marked with @Property and stored under the name published in the document)

- publisher (marked with @Embedded, so the whole object is stored inside each book document); additionally, it has the @Valid and @NotNull validation constraints, which require this property to be set and to be valid (satisfying its own validation constraints)

- authors (marked with @Reference, so only references to the author documents are stored inside each book document); additionally, it has the @Valid validation constraint, which requires each author in the collection to be valid (satisfying its own validation constraints)
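The difference between embedding and referencing is easiest to see in the shape of the stored document. The plain-Java sketch below builds an approximate Book document from maps; it assumes that @Reference is stored as a DBRef-style { $ref, $id } pair and uses placeholder strings where MongoDB would hold ObjectIds. BookDocumentShapeSketch and its methods are hypothetical names.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Approximate shape of a stored Book document, built from plain maps.
// Assumptions: references are DBRef-style {$ref, $id} pairs; ids are placeholders.
class BookDocumentShapeSketch {
    static Map<String, Object> authorRef(String authorId) {
        Map<String, Object> ref = new LinkedHashMap<>();
        ref.put("$ref", "authors"); // target collection
        ref.put("$id", authorId);   // only the key is stored, not the author's fields
        return ref;
    }

    static Map<String, Object> bookDocument(String title, String publisherName, List<String> authorIds) {
        Map<String, Object> publisher = new LinkedHashMap<>();
        publisher.put("name", publisherName); // @Embedded: a full copy lives inside the book

        List<Object> authors = new ArrayList<>();
        for (String id : authorIds) authors.add(authorRef(id)); // @Reference: pointers only

        Map<String, Object> doc = new LinkedHashMap<>();
        doc.put("title", title);
        doc.put("authors", authors);
        doc.put("publisher", publisher);
        return doc;
    }

    public static void main(String[] args) {
        System.out.println(bookDocument("MongoDB: The Definitive Guide", "O'Reilly",
                Arrays.asList("author-1")));
    }
}
```

The trade-off follows from the shape: changing an author touches a single document in authors, while renaming a publisher would mean updating every book that embeds it.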

The title and categories properties have their own indexes, defined using the @Indexed annotation. The Publisher class is not annotated with @Entity and, being a simple Java bean, is not mapped to a MongoDB collection of its own. That being said, the Publisher class can still declare indexes, which become part of the MongoDB collection it is embedded in (books).

package com.javacodegeeks.mongodb;

import org.hibernate.validator.constraints.NotBlank;
import org.mongodb.morphia.annotations.Indexed;
import org.mongodb.morphia.annotations.Property;
import org.mongodb.morphia.utils.IndexDirection;

// Not an @Entity: instances are embedded in Book documents
public class Publisher {
    // This index is created on the books collection (as publisher.name)
    @Property @Indexed( IndexDirection.DESC ) @NotBlank private String name;

    public Publisher() {
    }

    public Publisher( final String name ) {
        this.name = name;
    }

    public String getName() {
        return name;
    }

    public void setName( final String name ) {
        this.name = name;
    }
}

Lastly, let us take a look at the Store class. This class basically glues together all the concepts we have seen in the Author and Book classes.

package com.javacodegeeks.mongodb;

import java.util.ArrayList;
import java.util.List;

import javax.validation.Valid;

import org.bson.types.ObjectId;
import org.hibernate.validator.constraints.NotEmpty;
import org.mongodb.morphia.annotations.Embedded;
import org.mongodb.morphia.annotations.Entity;
import org.mongodb.morphia.annotations.Id;
import org.mongodb.morphia.annotations.Indexed;
import org.mongodb.morphia.annotations.Property;
import org.mongodb.morphia.annotations.Version;
import org.mongodb.morphia.utils.IndexDirection;

@Entity( value = "stores", noClassnameStored = true )
public class Store {
    @Id private ObjectId id;
    @Version private long version;
    @Property @Indexed( IndexDirection.DESC ) @NotEmpty private String name;
    // Each Stock entry is embedded and validated (e.g. @Min( 0 ) on its quantity)
    @Embedded @Valid private List< Stock > stock = new ArrayList<>();
    // Geospatial (2d) index on the embedded { lon, lat } pair
    @Embedded @Indexed( IndexDirection.GEO2D ) private Location location;

    public Store() {
    }

    public Store( final String name ) {
        this.name = name;
    }

    public ObjectId getId() {
        return id;
    }

    protected void setId( final ObjectId id ) {
        this.id = id;
    }

    public String getName() {
        return name;
    }

    public void setName( final String name ) {
        this.name = name;
    }

    public List< Stock > getStock() {
        return stock;
    }

    public void setStock( final List< Stock > stock ) {
        this.stock = stock;
    }

    public Location getLocation() {
        return location;
    }

    public void setLocation( final Location location ) {
        this.location = location;
    }

    public long getVersion() {
        return version;
    }

    public void setVersion( final long version ) {
        this.version = version;
    }
}

The only new thing this class introduces is the location property with a geospatial index, marked with @Indexed( IndexDirection.GEO2D ). In MongoDB there are several ways to represent location coordinates:

- as an array of coordinates: [ 55.5 , 42.3 ]

- as an embedded object with two properties: { lon : 55.5 , lat : 42.3 }
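To build some intuition for what a 2d index enables, the sketch below mimics a $near query with a maximum distance in plain Java: for a 2d index, distance is planar (Euclidean) and measured in coordinate units (degrees here), and results come back nearest first. The store coordinates are the ones used in this tutorial, stored longitude first; NearQuerySketch, Place and near are hypothetical names, not driver or Morphia APIs.

```java
import java.util.ArrayList;
import java.util.Comparator;
import java.util.List;

// Plain-Java illustration of $near with a maximum distance on a 2d index:
// planar distance in coordinate units, nearest results first.
class NearQuerySketch {
    static final class Place {
        final String name;
        final double lon; // x coordinate
        final double lat; // y coordinate
        Place(String name, double lon, double lat) { this.name = name; this.lon = lon; this.lat = lat; }
    }

    static double planarDistance(Place p, double lon, double lat) {
        return Math.hypot(p.lon - lon, p.lat - lat);
    }

    static List<String> near(List<Place> places, double lon, double lat, double maxDistance) {
        List<Place> hits = new ArrayList<>();
        for (Place p : places) {
            if (planarDistance(p, lon, lat) <= maxDistance) hits.add(p);
        }
        hits.sort(Comparator.comparingDouble(p -> planarDistance(p, lon, lat)));
        List<String> names = new ArrayList<>();
        for (Place p : hits) names.add(p.name);
        return names;
    }

    public static void main(String[] args) {
        List<Place> stores = new ArrayList<>();
        stores.add(new Place("Waterstones Piccadilly", -0.135484, 51.50957));
        stores.add(new Place("Barnes & Noble", -73.978693, 40.786277));
        // Query point in central London (longitude first), within 1.0 degree
        System.out.println(near(stores, -0.128016, 51.508035, 1.0)); // [Waterstones Piccadilly]
    }
}
```

Passing latitude first silently queries a completely different point, so the argument order has to match the way coordinates are stored.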

The Location class embedded inside the Store class uses the second option and is as simple as a regular Java bean, similar to the Publisher class. Note that the field order matters for the 2d index: longitude comes first, then latitude.

package com.javacodegeeks.mongodb;

import org.mongodb.morphia.annotations.Property;

// Embedded coordinate pair: longitude first, then latitude
public class Location {
    @Property private double lon;
    @Property private double lat;

    public Location() {
    }

    public Location( final double lon, final double lat ) {
        this.lon = lon;
        this.lat = lat;
    }

    public double getLon() {
        return lon;
    }

    public void setLon( final double lon ) {
        this.lon = lon;
    }

    public double getLat() {
        return lat;
    }

    public void setLat( final double lat ) {
        this.lat = lat;
    }
}

The Stock class holds a book and its available quantity in a store, and is very similar to the Publisher and Location classes.

package com.javacodegeeks.mongodb;

import javax.validation.Valid;
import javax.validation.constraints.Min;

import org.mongodb.morphia.annotations.Property;
import org.mongodb.morphia.annotations.Reference;

public class Stock {
    // Reference to a document in the books collection
    @Reference @Valid private Book book;
    @Property @Min( 0 ) private int quantity;

    public Stock() {
    }

    public Stock( final Book book, final int quantity ) {
        this.book = book;
        this.quantity = quantity;
    }

    public Book getBook() {
        return book;
    }

    public void setBook( final Book book ) {
        this.book = book;
    }

    public int getQuantity() {
        return quantity;
    }

    public void setQuantity( final int quantity ) {
        this.quantity = quantity;
    }
}

The quantity property has the validation constraint @Min( 0 ) defined, which states that the value of this property must never be negative.
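The constraints used in the model (@NotEmpty, @NotBlank, @Min and the cascading @Valid) are enforced by the Bean Validation provider, not by our code. To show roughly what each rule checks, here is a hand-written approximation in plain Java. It is not the Bean Validation API: ConstraintSketch, its methods and its messages are hypothetical, and the cascade into Book is simplified to the title check only.

```java
import java.util.ArrayList;
import java.util.List;

// Hand-written approximation of the constraint checks used by the model classes.
// Not the Bean Validation API; names and messages are hypothetical.
class ConstraintSketch {
    // @NotEmpty on a String: non-null with at least one character
    static boolean notEmpty(String s) { return s != null && !s.isEmpty(); }

    // @NotBlank: non-null and containing something other than whitespace
    static boolean notBlank(String s) { return s != null && !s.trim().isEmpty(); }

    // @Min(min) on an int: the value must be >= min
    static boolean min(int value, long min) { return value >= min; }

    // Mirrors Author: both names are @NotEmpty
    static List<String> validateAuthor(String firstName, String lastName) {
        List<String> violations = new ArrayList<>();
        if (!notEmpty(firstName)) violations.add("firstName: may not be empty");
        if (!notEmpty(lastName)) violations.add("lastName: may not be empty");
        return violations;
    }

    // Mirrors Stock: @Valid cascades into the book (simplified to its title), @Min(0) on quantity
    static List<String> validateStock(String bookTitle, int quantity) {
        List<String> violations = new ArrayList<>();
        if (!notEmpty(bookTitle)) violations.add("book.title: may not be empty");
        if (!min(quantity, 0)) violations.add("quantity: must be greater than or equal to 0");
        return violations;
    }

    public static void main(String[] args) {
        System.out.println(validateAuthor("Kristina", ""));         // [lastName: may not be empty]
        System.out.println(validateStock("MongoDB in Action", -1)); // [quantity: must be greater than or equal to 0]
        System.out.println(notEmpty("   ") + " " + notBlank("   ")); // true false
    }
}
```

The last line highlights why Publisher.name uses @NotBlank: a whitespace-only string passes @NotEmpty but fails @NotBlank.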

5. Connection

Now that the data model for the book store is complete, it is time to see how we can apply it to a real MongoDB instance. In the following sections we assume that you have a local MongoDB instance up and running on localhost, listening on the default port 27017.

With Morphia, the first step is to create an instance of the Morphia class and initialize it with the desired extensions (in our case, the validation extension).

final Morphia morphia = new Morphia();
// Registers JSR 303 validation, so constraint violations are reported on save
new ValidationExtension( morphia );

With this step done, an instance of the Datastore class (effectively, a MongoDB database) can be obtained from the Morphia instance; MongoDB creates the database lazily if it does not exist yet. A MongoDB connection is required at this stage and is provided by a MongoClient instance. The mapped entities (Author, Book, Store) can be registered with Morphia at the same time, so that it knows how to map them to their respective collections.

final MongoClient client = new MongoClient( "localhost", 27017 );
final Datastore dataStore = morphia
    .map( Store.class, Book.class, Author.class )
    .createDatastore( client, "bookstore" );

When the data store (or database) is created from scratch, it is handy to ask Morphia to create all indexes and capped collections as well.

dataStore.ensureIndexes();
dataStore.ensureCaps();

Great: with the data store instance in place, we are ready to create new documents, run queries and perform complex updates and aggregations.

6. Creating Documents

It makes sense to start by creating a couple of documents and persisting them in MongoDB. Our first test case does exactly that by creating a new author.

@Test

public void testCreateNewAuthor() {

assertThat( dataStore.getCollection( Author.class ).count() ).isEqualTo( 0 );

final Author author = new Author( "Kristina", "Chodorow" );

dataStore.save( author );

assertThat( dataStore.getCollection( Author.class ).count() ).isEqualTo( 1 );

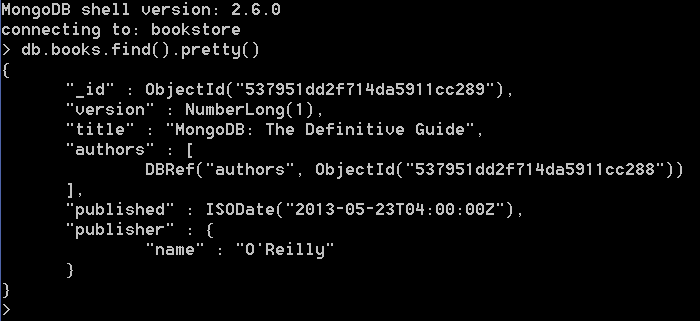

}For curious readers, let us run MongoDB shell and double check that authors collection really contains the newly created author: bin/mongo bookstore

Well, that was easy. But what happens if the author we are about to persist violates the validation constraints? In this case, the save() method call fails with a VerboseJSR303ConstraintViolationException, as our next test case demonstrates.

@Test( expected = VerboseJSR303ConstraintViolationException.class )
public void testCreateNewAuthorWithEmptyLastName() {
    // An empty last name violates the @NotEmpty constraint on Author.lastName
    final Author author = new Author( "Kristina", "" );
    dataStore.save( author );
}

Without writing a single line of validation code, just by declaring constraints on the fields, we get fairly complex validation literally for free. In the next test case we are going to do slightly more work to persist a new book.

@Test
public void testCreateNewBook() {
    assertThat( dataStore.getCollection( Author.class ).count() ).isEqualTo( 0 );
    assertThat( dataStore.getCollection( Book.class ).count() ).isEqualTo( 0 );

    final Publisher publisher = new Publisher( "O'Reilly" );
    final Author author = new Author( "Kristina", "Chodorow" );
    final Book book = new Book( "MongoDB: The Definitive Guide" );
    book.getAuthors().add( author );
    book.setPublisher( publisher );
    book.setPublishedDate( new LocalDate( 2013, 5, 23 ).toDate() );

    // The author is saved first, so that the book can store a reference to it
    dataStore.save( author );
    dataStore.save( book );

    assertThat( dataStore.getCollection( Author.class ).count() ).isEqualTo( 1 );
    assertThat( dataStore.getCollection( Book.class ).count() ).isEqualTo( 1 );
}

Through the MongoDB shell, let us make sure that the newly created book is stored inside the books collection and that its authors property references documents from the authors collection:

bin/mongo bookstore

To see some validation constraint violations, let us try to store a new book without the publisher property set. It should result in a VerboseJSR303ConstraintViolationException.

@Test( expected = VerboseJSR303ConstraintViolationException.class )
public void testCreateNewBookWithEmptyPublisher() {
    final Author author = new Author( "Kristina", "Chodorow" );
    final Book book = new Book( "MongoDB: The Definitive Guide" );
    book.getAuthors().add( author );
    book.setPublishedDate( new LocalDate( 2013, 5, 23 ).toDate() );
    // No publisher set: violates @NotNull on Book.publisher

    dataStore.save( author );
    dataStore.save( book );
}

The final test cases demonstrate the creation of a new store, as well as validation at work when a book is stocked with a negative quantity.

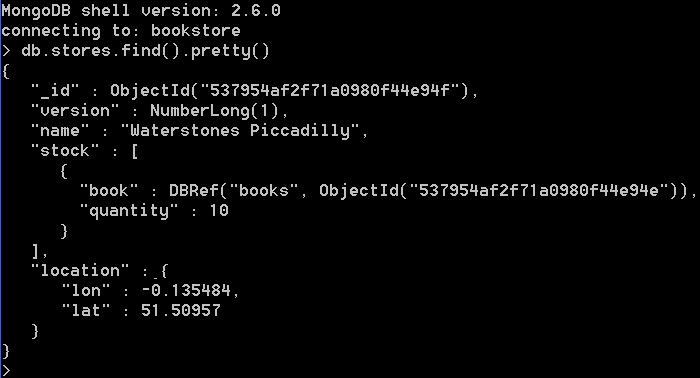

@Test
public void testCreateNewStore() {
    assertThat( dataStore.getCollection( Author.class ).count() ).isEqualTo( 0 );
    assertThat( dataStore.getCollection( Book.class ).count() ).isEqualTo( 0 );
    assertThat( dataStore.getCollection( Store.class ).count() ).isEqualTo( 0 );

    final Publisher publisher = new Publisher( "O'Reilly" );
    final Author author = new Author( "Kristina", "Chodorow" );
    final Book book = new Book( "MongoDB: The Definitive Guide" );
    book.setPublisher( publisher );
    book.setPublishedDate( new LocalDate( 2013, 5, 23 ).toDate() );
    book.getAuthors().add( author );
    book.getCategories().addAll( Arrays.asList( "Databases", "Programming", "NoSQL" ) );

    final Store store = new Store( "Waterstones Piccadilly" );
    store.setLocation( new Location( -0.135484, 51.50957 ) );
    store.getStock().add( new Stock( book, 10 ) );

    dataStore.save( author );
    dataStore.save( book );
    dataStore.save( store );

    assertThat( dataStore.getCollection( Author.class ).count() ).isEqualTo( 1 );
    assertThat( dataStore.getCollection( Book.class ).count() ).isEqualTo( 1 );
    assertThat( dataStore.getCollection( Store.class ).count() ).isEqualTo( 1 );
}

@Test( expected = VerboseJSR303ConstraintViolationException.class )
public void testCreateNewStoreWithNegativeBookQuantity() {
    final Author author = new Author( "Kristina", "Chodorow" );
    final Book book = new Book( "MongoDB: The Definitive Guide" );
    book.getAuthors().add( author );
    book.setPublisher( new Publisher( "O'Reilly" ) );
    book.setPublishedDate( new LocalDate( 2013, 5, 23 ).toDate() );

    final Store store = new Store( "Waterstones Piccadilly" );
    // A negative quantity violates @Min( 0 ) on Stock.quantity
    store.getStock().add( new Stock( book, -1 ) );

    dataStore.save( author );
    dataStore.save( book );
    dataStore.save( store );
}

7. Querying Documents

Now that we know how to create new documents and store them in MongoDB using Morphia, we are ready to look at querying existing documents. As we are going to see very soon, Morphia provides a fluent, easy-to-use and (where it makes sense) strongly typed query API. To keep the following test cases simple, these authors and books are pre-populated before each test run:

- MongoDB: The Definitive Guide by Kristina Chodorow, published by O’Reilly (May 23, 2013) in categories Databases, Programming, NoSQL

- MongoDB Applied Design Patterns by Rick Copeland, published by O’Reilly (March 19, 2013) in categories Databases, Programming, NoSQL, Patterns

- MongoDB in Action by Kyle Banker, published by Manning (December 16, 2011) in categories Databases, Programming, NoSQL

- NoSQL Distilled: A Brief Guide to the Emerging World of Polyglot Persistence by Pramod J Sadalage and Martin Fowler, published by Addison Wesley (August 18, 2012) in categories Databases, NoSQL

along with these stores:

- Waterstones Piccadilly, located at latitude 51.50957, longitude -0.135484, and stocked with:
    - MongoDB: The Definitive Guide in quantity of 10
    - MongoDB Applied Design Patterns in quantity of 45
    - MongoDB in Action in quantity of 2
    - NoSQL Distilled in quantity of 0

- Barnes & Noble, located at latitude 40.786277, longitude -73.978693, and stocked with:
    - MongoDB: The Definitive Guide in quantity of 7
    - MongoDB Applied Design Patterns in quantity of 12
    - MongoDB in Action in quantity of 15
    - NoSQL Distilled in quantity of 2

The test case we are going to start with queries all the books that contain the word ‘mongodb’ in the title, using a case-insensitive comparison.

@Test
public void testFindBooksByName() {
    final List< Book > books = dataStore.createQuery( Book.class )
        .field( "title" ).containsIgnoreCase( "mongodb" )
        .order( "title" )
        .asList();

    assertThat( books ).hasSize( 3 );
    assertThat( books ).extracting( "title" )
        .containsExactly(
            "MongoDB Applied Design Patterns",
            "MongoDB in Action",
            "MongoDB: The Definitive Guide"
        );
}

This test case uses one of the flavors of the Morphia query API. There is another one, using the less verbose filter() method, which the next test case demonstrates by looking up books by their author.

@Test
public void testFindBooksByAuthor() {
    final Author author = dataStore.createQuery( Author.class )
        .filter( "lastName =", "Banker" )
        .get();

    // Matches books whose authors list contains a reference to this author
    final List< Book > books = dataStore.createQuery( Book.class )
        .field( "authors" ).hasThisElement( author )
        .order( "title" )
        .asList();

    assertThat( books ).hasSize( 1 );
    assertThat( books ).extracting( "title" ).containsExactly( "MongoDB in Action" );
}

The next test case uses slightly more complex compound criteria: it queries all the books that belong to both the NoSQL and Databases categories and were published after January 1, 2013. The result is also sorted by the book's title in descending order ("-title").

@Test
public void testFindBooksByCategoryAndPublishedDate() {
    final Query< Book > query = dataStore.createQuery( Book.class ).order( "-title" );
    query.and(
        query.criteria( "categories" )
            .hasAllOf( Arrays.asList( "NoSQL", "Databases" ) ),
        query.criteria( "published" )
            .greaterThan( new LocalDate( 2013, 1, 1 ).toDate() )
    );

    final List< Book > books = query.asList();

    assertThat( books ).hasSize( 2 );
    assertThat( books ).extracting( "title" )
        .containsExactly(
            "MongoDB: The Definitive Guide",
            "MongoDB Applied Design Patterns"
        );
}

If by any chance you are in London and would like to buy a MongoDB book in the closest store, the next test case shows off the geospatial query capabilities of MongoDB by looking up a book store near the city center.

@Test
public void testFindStoreClosestToLondon() {
    // The 2d index treats the first coordinate as x (longitude), matching the Location class
    final List< Store > stores = dataStore
        .createQuery( Store.class )
        .field( "location" ).near( -0.128016, 51.508035, 1.0 )
        .asList();

    assertThat( stores ).hasSize( 1 );
    assertThat( stores ).extracting( "name" )
        .containsExactly( "Waterstones Piccadilly" );
}

And lastly, it would be nice to know whether the book store you are about to visit has enough copies for you to buy (assuming you would like to buy at least 10 of them).

@Test
public void testFindStoreWithEnoughBookQuantity() {
    final Book book = dataStore.createQuery( Book.class )
        .field( "title" ).equal( "MongoDB in Action" )
        .get();

    final List< Store > stores = dataStore
        .createQuery( Store.class )
        // Both conditions must hold for the same stock element ($elemMatch)
        .field( "stock" ).hasThisElement(
            dataStore.createQuery( Stock.class )
                .field( "quantity" ).greaterThanOrEq( 10 )
                .field( "book" ).equal( book )
                .getQueryObject() )
        // Fetch only the store name
        .retrievedFields( true, "name" )
        .asList();

    assertThat( stores ).hasSize( 1 );
    assertThat( stores ).extracting( "name" ).containsExactly( "Barnes & Noble" );
}

This test case demonstrates a very important technique for querying embedded (or inner) documents: the $elemMatch operator, which hasThisElement() produces here. Because each store has its own stock (represented as a list of Stock objects), we want to find the store which has at least 10 copies of the MongoDB in Action book in a single stock entry. Additionally, we retrieve only the name of the store from MongoDB, filtering out all other properties with retrievedFields(). Also, each stock element contains a reference to the book, so the book in question has to be retrieved beforehand and passed to the query criteria.
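Why $elemMatch matters is easiest to see by contrast. Without it, conditions on fields of an embedded array are evaluated independently, so one element may satisfy the book condition while a different element satisfies the quantity condition. The plain-Java sketch below compares the two interpretations on the Waterstones stock listed earlier; ElemMatchSketch, Entry and the method names are hypothetical.

```java
import java.util.Arrays;
import java.util.List;

// Contrasts two ways of matching conditions against an array of embedded documents.
class ElemMatchSketch {
    static final class Entry {
        final String book;
        final int quantity;
        Entry(String book, int quantity) { this.book = book; this.quantity = quantity; }
    }

    // $elemMatch semantics: a single element must satisfy every condition
    static boolean elemMatch(List<Entry> stock, String book, int minQuantity) {
        return stock.stream().anyMatch(e -> e.book.equals(book) && e.quantity >= minQuantity);
    }

    // Separate dotted-path conditions: each may be satisfied by a different element
    static boolean independentConditions(List<Entry> stock, String book, int minQuantity) {
        return stock.stream().anyMatch(e -> e.book.equals(book))
            && stock.stream().anyMatch(e -> e.quantity >= minQuantity);
    }

    public static void main(String[] args) {
        List<Entry> waterstones = Arrays.asList(
            new Entry("MongoDB: The Definitive Guide", 10),
            new Entry("MongoDB Applied Design Patterns", 45),
            new Entry("MongoDB in Action", 2),
            new Entry("NoSQL Distilled", 0));
        System.out.println(elemMatch(waterstones, "MongoDB in Action", 10));             // false
        System.out.println(independentConditions(waterstones, "MongoDB in Action", 10)); // true
    }
}
```

With independent conditions, Waterstones would wrongly qualify because it has plenty of copies of a different book, which is exactly what the $elemMatch query avoids.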

8. Updating Documents

Document updates (or modifications) are among the most interesting and powerful features of MongoDB. They heavily leverage the querying capabilities we have seen in the Querying Documents section, as well as the rich update semantics we are about to explore.

Unsurprisingly, Morphia provides several ways to perform in-place document updates, using the save()/merge(), update()/updateFirst() and findAndModify() method families. We are going to start with a test case using the save() method, as we have already seen it in action.

@Test
public void testSaveStoreWithNewLocation() {
    final Store store = dataStore
        .createQuery( Store.class )
        .field( "name" ).equal( "Waterstones Piccadilly" )
        .get();

    assertThat( store.getVersion() ).isEqualTo( 1 );

    // Location takes longitude first, then latitude
    store.setLocation( new Location( -0.135484, 50.50957 ) );
    final Key< Store > key = dataStore.save( store );
    final Store updated = dataStore.getByKey( Store.class, key );

    // Each successful save() increments the version
    assertThat( updated.getVersion() ).isEqualTo( 2 );
}

This is the most straightforward way to perform modifications if you are already working with document instances retrieved from MongoDB: you just call regular Java setters to update some properties and pass the updated object to the save() method. Please notice that each call to save() increments the version of the document: if the version of the object being saved does not match the one in the database, a ConcurrentModificationException is thrown (as the test case below simulates).
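The version check behind this behaviour can be pictured as a compare-and-increment: a save succeeds only if the stored version still equals the version the caller originally read, and every successful save bumps the version. The in-memory sketch below illustrates that idea; it is not Morphia's implementation, VersionedStoreSketch and its methods are hypothetical, and java.util.ConcurrentModificationException is used only to mirror the exception in the tests. In a real database the check must be atomic, which is typically achieved by including the expected version in the update's query criteria.

```java
import java.util.ConcurrentModificationException;
import java.util.HashMap;
import java.util.Map;

// In-memory sketch of optimistic locking with a version field (hypothetical names).
class VersionedStoreSketch {
    static final class Doc {
        final String name;
        final long version;
        Doc(String name, long version) { this.name = name; this.version = version; }
    }

    private final Map<String, Doc> collection = new HashMap<>();

    // The first stored version is 1, as in the test above
    synchronized Doc insert(String id, String name) {
        Doc doc = new Doc(name, 1);
        collection.put(id, doc);
        return doc;
    }

    // Saves a change based on a copy read at readVersion; rejects stale copies
    synchronized Doc save(String id, String newName, long readVersion) {
        Doc current = collection.get(id);
        if (current == null || current.version != readVersion) {
            throw new ConcurrentModificationException("stale version " + readVersion + " for " + id);
        }
        Doc updated = new Doc(newName, readVersion + 1);
        collection.put(id, updated);
        return updated;
    }

    public static void main(String[] args) {
        VersionedStoreSketch store = new VersionedStoreSketch();
        Doc original = store.insert("s1", "Waterstones Piccadilly");
        long seenByReader1 = original.version; // both readers load version 1
        long seenByReader2 = original.version;
        store.save("s1", "New Store 1", seenByReader1); // succeeds, version becomes 2
        try {
            store.save("s1", "New Store 2", seenByReader2); // still based on version 1
        } catch (ConcurrentModificationException e) {
            System.out.println("rejected: " + e.getMessage()); // rejected: stale version 1 for s1
        }
    }
}
```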

@Test( expected = ConcurrentModificationException.class )
public void testSaveStoreWithConcurrentUpdates() {
    final Query< Store > query = dataStore
        .createQuery( Store.class )
        .filter( "name =", "Waterstones Piccadilly" );

    // Two independent copies of the same document, both with the same version
    final Store store1 = query.get();
    final Store store2 = query.cloneQuery().get();

    store1.setName( "New Store 1" );
    dataStore.save( store1 );
    assertThat( store1.getName() ).isEqualTo( "New Store 1" );

    // store2 still carries the old version, so this save is rejected
    store2.setName( "New Store 2" );
    dataStore.save( store2 );
}

Another way to perform single or mass document modifications is the update() method. By default, update() modifies all documents matching the query criteria; if you would like to limit the scope to one document only, use the updateFirst() method instead.

@Test
public void testUpdateStoreLocation() {
    final UpdateResults< Store > results = dataStore.update(
        dataStore
            .createQuery( Store.class )
            .field( "name" ).equal( "Waterstones Piccadilly" ),
        dataStore
            .createUpdateOperations( Store.class )
            // Location takes longitude first, then latitude
            .set( "location", new Location( -0.135484, 50.50957 ) )
    );

    assertThat( results.getUpdatedCount() ).isEqualTo( 1 );
}

The return value of the update() method is not a document (or documents) but a more generic operation status: how many documents have been updated or inserted, and so on. This is very useful, and typically cheaper than the other options, when you do not need the updated documents returned to you and only want to make sure that something has actually been updated.
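The contract of update() versus updateFirst() boils down to how many matching documents get modified, and both report a count rather than the documents themselves. The plain-Java sketch below models that contract over an in-memory list; updateAll and updateFirst are hypothetical names that mirror the Morphia methods' behaviour as described above, not their implementation.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.function.Predicate;
import java.util.function.UnaryOperator;

// Models "update all matches" vs. "update the first match" and their returned counts.
class UpdateCountSketch {
    // Applies change to every matching element; returns how many were updated
    static <T> int updateAll(List<T> docs, Predicate<T> query, UnaryOperator<T> change) {
        int updated = 0;
        for (int i = 0; i < docs.size(); i++) {
            if (query.test(docs.get(i))) {
                docs.set(i, change.apply(docs.get(i)));
                updated++;
            }
        }
        return updated;
    }

    // Applies change to the first matching element only; returns 0 or 1
    static <T> int updateFirst(List<T> docs, Predicate<T> query, UnaryOperator<T> change) {
        for (int i = 0; i < docs.size(); i++) {
            if (query.test(docs.get(i))) {
                docs.set(i, change.apply(docs.get(i)));
                return 1;
            }
        }
        return 0;
    }

    public static void main(String[] args) {
        List<String> names = new ArrayList<>(Arrays.asList("Waterstones Piccadilly", "Barnes & Noble"));
        System.out.println(updateFirst(names, n -> true, n -> n.toUpperCase())); // 1
        System.out.println(updateAll(names, n -> true, n -> n.toUpperCase()));   // 2
        System.out.println(names); // [WATERSTONES PICCADILLY, BARNES & NOBLE]
    }
}
```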

findAndModify() is the most powerful and feature-rich operation. Like updateFirst(), it modifies a single matching document, but it can also return either the updated document (after the modifications have been applied) or the old one (before the modifications). Additionally, it may create a new document if none matched the query (a so-called upsert).

@Test
public void testFindAndModifyStoreWithEnoughBookQuantity() {
    final Book book = dataStore.createQuery( Book.class )
        .field( "title" ).equal( "MongoDB in Action" )
        .get();

    final Store store = dataStore.findAndModify(
        dataStore
            .createQuery( Store.class )
            .field( "stock" ).hasThisElement(
                dataStore.createQuery( Stock.class )
                    .field( "quantity" ).greaterThanOrEq( 10 )
                    .field( "book" ).equal( book )
                    .getQueryObject() ),
        dataStore
            .createUpdateOperations( Store.class )
            // "stock.$.quantity" is not a plain Java field path, so field validation is disabled
            .disableValidation()
            .inc( "stock.$.quantity", -10 ),
        false // return the document as it is after the modification
    );

    assertThat( store ).isNotNull();
    // comparator: a Comparator< Stock > defined elsewhere in the test class (not shown here)
    assertThat( store.getStock() )
        .usingElementComparator( comparator )
        .contains( new Stock( book, 5 ) );
}

The test case above finds a store which has at least 10 copies of the book MongoDB in Action and decrements its stock by that amount (leaving only 5 copies available out of the initial 15). The stock.$.quantity construct targets the update at the exact stock element that matched the query criteria. Passing false as the last argument asks for the modified document rather than the original one, and the returned store confirms that only 5 copies of MongoDB in Action are left in stock.
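The positional operator can be pictured as: remember the index of the first array element that satisfied the query condition, then apply the update to that element only. The plain-Java sketch below models that for the inc() call above, using the Barnes & Noble stock listed earlier; PositionalUpdateSketch, Entry and incMatched are hypothetical names.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.Predicate;

// Sketch of positional ($) update semantics: only the first matching array element changes.
class PositionalUpdateSketch {
    static final class Entry {
        final String book;
        int quantity;
        Entry(String book, int quantity) { this.book = book; this.quantity = quantity; }
    }

    // Returns the modified index, or -1 when no element matched (nothing is updated)
    static int incMatched(List<Entry> stock, Predicate<Entry> elemMatch, int delta) {
        for (int i = 0; i < stock.size(); i++) {
            if (elemMatch.test(stock.get(i))) {
                stock.get(i).quantity += delta; // like {$inc: {"stock.$.quantity": delta}}
                return i;
            }
        }
        return -1;
    }

    public static void main(String[] args) {
        List<Entry> barnesAndNoble = new ArrayList<>();
        barnesAndNoble.add(new Entry("MongoDB: The Definitive Guide", 7));
        barnesAndNoble.add(new Entry("MongoDB Applied Design Patterns", 12));
        barnesAndNoble.add(new Entry("MongoDB in Action", 15));
        barnesAndNoble.add(new Entry("NoSQL Distilled", 2));

        int index = incMatched(barnesAndNoble,
            e -> e.book.equals("MongoDB in Action") && e.quantity >= 10, -10);
        System.out.println(index + " -> " + barnesAndNoble.get(index).quantity); // 2 -> 5
    }
}
```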

9. Deleting Documents

Similarly to updating, deleting documents from a collection can be done in several ways, depending on the use case. For example, if you already have a document instance retrieved from MongoDB, it can be passed directly to the delete() method.

@Test
public void testDeleteStore() {
    final Store store = dataStore
        .createQuery( Store.class )
        .field( "name" ).equal( "Waterstones Piccadilly" )
        .get();

    final WriteResult result = dataStore.delete( store );
    assertThat( result.getN() ).isEqualTo( 1 );
}

The method returns an operation status with the number of documents affected. As we are deleting a single store instance, the expected number of affected documents is 1.

For mass removal, the delete() method also accepts a query and likewise returns a status with the number of documents affected. The following test case deletes all stores (we have created only two).

@Test
public void testDeleteAllStores() {
    final WriteResult result = dataStore.delete( dataStore.createQuery( Store.class ) );
    assertThat( result.getN() ).isEqualTo( 2 );
}

Additionally, there is a findAndDelete() method which accepts a query and returns the deleted document that satisfies the criteria (the first one in case of multiple matches). If no document matches the criteria, findAndDelete() returns null.

@Test
public void testFindAndDeleteBook() {
    final Book book = dataStore.findAndDelete(
        dataStore
            .createQuery( Book.class )
            .field( "title" ).equal( "MongoDB in Action" )
    );

    assertThat( book ).isNotNull();
    // Three of the four pre-populated books remain
    assertThat( dataStore.getCollection( Book.class ).count() ).isEqualTo( 3 );
}

10. Aggregations

Aggregations are the most complex part of MongoDB operations because of the flexibility they provide for manipulating documents. Morphia tries hard to simplify working with aggregations from Java code, but you may still find them a bit difficult to work with; the examples below use the underlying driver collection returned by dataStore.getCollection().

We are going to start with a simple example: grouping books by publisher. The result of the operation is the publisher's name and the number of books.

@Test
public void testGroupBooksByPublisher() {
    final DBObject result = dataStore
        .getCollection( Book.class )
        .group(
            new BasicDBObject( "publisher.name", 1 ),          // key: the field(s) to group by
            new BasicDBObject(),                               // cond: no filtering
            new BasicDBObject( "total", 0 ),                   // initial: per-group accumulator
            "function ( curr, result ) { result.total += 1 }"  // reduce: count the documents
        );

    assertThat( result ).isInstanceOf( BasicDBList.class );

    final BasicDBList groups = ( BasicDBList )result;
    assertThat( groups ).hasSize( 3 );
    assertThat( groups ).containsExactly(
        new BasicDBObject( "publisher.name", "O'Reilly" ).append( "total", 2.0 ),
        new BasicDBObject( "publisher.name", "Manning" ).append( "total", 1.0 ),
        new BasicDBObject( "publisher.name", "Addison Wesley" ).append( "total", 1.0 )
    );
}

As you can see, even though this is a Java method call, the group() arguments closely resemble the parameters of the group command we have seen in Part 2. MongoDB Shell Guide – Operations and Commands. The totals come back as doubles (2.0, 1.0) because the reduce function runs in JavaScript, where all numbers are floating point.
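Conceptually, group() partitions the documents by the key fields, starts every group from a copy of the initial document and folds each member into it with the reduce function. Here is the same grouping by publisher expressed in plain Java, as an illustration of the semantics rather than of how the server executes it; GroupByPublisherSketch is a hypothetical name and the book data comes from the list earlier in this article.

```java
import java.util.LinkedHashMap;
import java.util.Map;

// Plain-Java version of group() with key {"publisher.name": 1}, initial {total: 0}
// and reduce function(curr, result) { result.total += 1 }.
class GroupByPublisherSketch {
    // Each row is {title, publisher}; totals are doubles, like JavaScript numbers
    static Map<String, Double> groupByPublisher(String[][] books) {
        Map<String, Double> totals = new LinkedHashMap<>(); // keeps first-seen group order
        for (String[] book : books) {
            String publisher = book[1];
            totals.putIfAbsent(publisher, 0.0);        // the "initial" document
            totals.merge(publisher, 1.0, Double::sum); // the reduce step
        }
        return totals;
    }

    public static void main(String[] args) {
        String[][] books = {
            {"MongoDB: The Definitive Guide", "O'Reilly"},
            {"MongoDB Applied Design Patterns", "O'Reilly"},
            {"MongoDB in Action", "Manning"},
            {"NoSQL Distilled", "Addison Wesley"}};
        System.out.println(groupByPublisher(books)); // {O'Reilly=2.0, Manning=1.0, Addison Wesley=1.0}
    }
}
```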

To finish up with aggregations, let us tackle a more interesting task: grouping books by category. The group() command cannot group by the individual elements of an array property (which categories is). Luckily, we can build an aggregation pipeline (which we have also touched on in Part 2. MongoDB Shell Guide – Operations and Commands) to achieve the desired result.

@Test
public void testGroupBooksByCategories() {
    final DBCollection collection = dataStore.getCollection( Book.class );

    final AggregationOutput output = collection
        .aggregate(
            Arrays.< DBObject >asList(
                // Keep only the title and categories fields
                new BasicDBObject( "$project", new BasicDBObject( "title", 1 )
                    .append( "categories", 1 ) ),
                // Emit one document per element of the categories array
                new BasicDBObject( "$unwind", "$categories" ),
                // Count the documents per category
                new BasicDBObject( "$group", new BasicDBObject( "_id", "$categories" )
                    .append( "count", new BasicDBObject( "$sum", 1 ) ) )
            )
        );

    assertThat( output.results() ).hasSize( 4 );
    // $group does not guarantee the order of its output, so only the content is checked
    assertThat( output.results() ).containsOnly(
        new BasicDBObject( "_id", "Patterns" ).append( "count", 1 ),
        new BasicDBObject( "_id", "Programming" ).append( "count", 3 ),
        new BasicDBObject( "_id", "NoSQL" ).append( "count", 4 ),
        new BasicDBObject( "_id", "Databases" ).append( "count", 4 )
    );
}

The first thing the aggregation pipeline does is project only the title and categories fields from the books collection. Then it applies an $unwind operation to the categories array, which, for every book document, produces as many intermediate documents as the book has categories, each holding a single category value. Finally, the grouping is performed, counting the documents per category.
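The same three stages map naturally onto Java streams, which may help when reasoning about pipeline output: flatMap plays the role of $unwind and groupingBy with counting() plays the role of $group with $sum: 1. The sketch below is plain Java, not a driver call; UnwindGroupSketch and its nested Book class are hypothetical, and a TreeMap is used because $group itself does not promise any output order.

```java
import java.util.Arrays;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;
import java.util.function.Function;
import java.util.stream.Collectors;

// Stream analogue of $project -> $unwind -> $group on the categories array.
class UnwindGroupSketch {
    // $project: this model only carries the title and categories fields
    static final class Book {
        final String title;
        final List<String> categories;
        Book(String title, String... categories) {
            this.title = title;
            this.categories = Arrays.asList(categories);
        }
    }

    static Map<String, Long> countByCategory(List<Book> books) {
        return books.stream()
            .flatMap(b -> b.categories.stream())      // $unwind: one value per category
            .collect(Collectors.groupingBy(           // $group: _id = category
                Function.identity(), TreeMap::new,    // sorted keys for a stable result
                Collectors.counting()));              // count: {$sum: 1}
    }

    public static void main(String[] args) {
        List<Book> books = Arrays.asList(
            new Book("MongoDB: The Definitive Guide", "Databases", "Programming", "NoSQL"),
            new Book("MongoDB Applied Design Patterns", "Databases", "Programming", "NoSQL", "Patterns"),
            new Book("MongoDB in Action", "Databases", "Programming", "NoSQL"),
            new Book("NoSQL Distilled", "Databases", "NoSQL"));
        System.out.println(countByCategory(books)); // {Databases=4, NoSQL=4, Patterns=1, Programming=3}
    }
}
```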

11. What’s next

This part gave us a first look at MongoDB from an application developer's point of view. In the next part of the tutorial we are going to cover MongoDB's sharding capabilities.
