Cassandra At The Heart Of Globo’s Live Streaming Platform

A couple of years ago my friend Juarez Bochi wrote a post here on Planet Cassandra about the challenges we faced implementing Globo.com’s live streaming platform, especially the migration from Redis to Cassandra.

For those not familiar with it, Globo.com is the internet branch of Grupo Globo, one of the five largest media conglomerates in the world, which produces content such as TV series, telenovelas, TV shows and news shows and exports it worldwide. The live streaming platform plays an essential role in ingesting and streaming this content using the HTTP Live Streaming (HLS) protocol, allowing web, mobile and smart TV clients to play video seamlessly over HTTP.

As Juarez pointed out in his post, we implemented a Digital Video Recorder (DVR) feature on the platform for the 2014 World Cup, allowing users to pause and rewind the video whenever they like. This solution was first implemented using Redis as the underlying storage for the HLS files, as we wanted fast, cache-like reads. That was a great solution at the time, since we were streaming at most a couple of matches simultaneously. A few months later, with the presidential and gubernatorial elections in Brazil, we needed to scale out this architecture to broadcast all 27 governor debates live and simultaneously. That was when we started considering other storage alternatives, looking especially for high availability and scalability, and we decided to give Cassandra a try.

At first it felt a bit awkward to persist binary data and serve it straight from the database, since most implementations I had seen before relied on file system storage, but the graphs of response times, reads and writes just blew me away.

For every channel streaming on our platform we have an encoder, Elemental in most cases, that encodes the video signal in H.264, producing six streams at bitrates ranging from 264 kbps to 2864 kbps for adaptive bitrate streaming. Every stream is then pushed to our segmenter (Nginx RTMP) using the Real Time Messaging Protocol (RTMP), which creates the HLS video chunks and playlists and writes them to disk. The Ingest Service listens for disk activity and stores the video chunks and playlists in Cassandra, setting their time to live (TTL) to the DVR duration. So if a stream is configured with a DVR duration of 1 hour, allowing users to rewind up to one hour, each video chunk expires after that period. All of this is configurable internally through APIs.
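To make the TTL mechanism concrete, here is a minimal Python sketch that mimics per-write expiry in memory so it runs without a cluster. The table `hls_chunks`, its columns and the `InMemoryChunkStore` class are hypothetical, not Globo's actual schema or Ingest Service code; the CQL string only shows the standard `USING TTL` clause such a write would carry.

```python
import time
from typing import Callable, Dict, Optional, Tuple

# Hypothetical table and columns; "USING TTL ?" binds the TTL (in seconds) per write.
INSERT_CHUNK_CQL = (
    "INSERT INTO hls_chunks (stream, name, data) VALUES (?, ?, ?) USING TTL ?"
)


class InMemoryChunkStore:
    """Illustrates per-write TTL expiry in memory; this is not a Cassandra client."""

    def __init__(self, clock: Callable[[], float] = time.monotonic) -> None:
        self._clock = clock
        # (stream, chunk name) -> (payload, absolute expiry time)
        self._rows: Dict[Tuple[str, str], Tuple[bytes, float]] = {}

    def put(self, stream: str, name: str, data: bytes, dvr_seconds: int) -> None:
        # The TTL equals the stream's DVR duration, so a chunk disappears
        # once it falls outside the window viewers can rewind into.
        self._rows[(stream, name)] = (data, self._clock() + dvr_seconds)

    def get(self, stream: str, name: str) -> Optional[bytes]:
        row = self._rows.get((stream, name))
        if row is None:
            return None
        data, expires_at = row
        if self._clock() >= expires_at:
            # Expired data reads as absent, like an expired Cassandra cell.
            del self._rows[(stream, name)]
            return None
        return data


# Usage with a fake clock so expiry can be observed without waiting an hour.
now = [0.0]
store = InMemoryChunkStore(clock=lambda: now[0])
store.put("channel1_2864k", "segment_100.ts", b"ts-bytes", dvr_seconds=3600)
now[0] = 3599.0
print(store.get("channel1_2864k", "segment_100.ts"))  # still inside the DVR window
now[0] = 3600.0
print(store.get("channel1_2864k", "segment_100.ts"))  # expired
```

Letting the database expire data this way means no cleanup job is needed: old chunks simply stop existing once they leave the rewind window.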

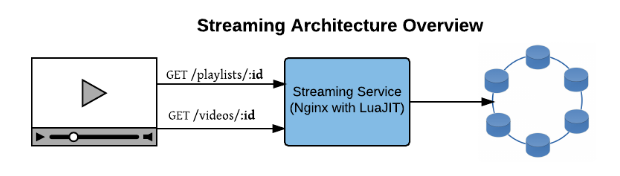
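Because each chunk lives exactly as long as the DVR window, the storage a single stream needs can be bounded from the encoder figures given earlier: six renditions, the lowest at 264 kbps and the highest at 2864 kbps. The four middle bitrates are not stated, so this Python sketch only computes lower and upper bounds, assuming 1 kbps = 1000 bits/s and ignoring container overhead and playlist files.

```python
from typing import Tuple

RENDITIONS = 6
MIN_KBPS = 264   # lowest rendition, from the text
MAX_KBPS = 2864  # highest rendition, from the text


def dvr_bytes(total_kbps: float, dvr_seconds: int, replication_factor: int = 1) -> float:
    """Bytes held for one stream's DVR window: bitrate x duration x replicas."""
    return total_kbps * 1000 / 8 * dvr_seconds * replication_factor


def dvr_bounds(dvr_seconds: int, replication_factor: int = 1) -> Tuple[float, float]:
    # The ladder contains both endpoints; the other renditions lie between them.
    middle = RENDITIONS - 2
    low_kbps = MIN_KBPS + MAX_KBPS + middle * MIN_KBPS
    high_kbps = MIN_KBPS + MAX_KBPS + middle * MAX_KBPS
    return (
        dvr_bytes(low_kbps, dvr_seconds, replication_factor),
        dvr_bytes(high_kbps, dvr_seconds, replication_factor),
    )


low, high = dvr_bounds(3600)
print(f"{low / 1e9:.2f} GB to {high / 1e9:.2f} GB per stream, 1-hour DVR")
```

For a 1-hour DVR this gives roughly 1.9 to 6.6 GB per stream before replication; passing `replication_factor` scales the result by the number of copies kept.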

We use a player called Clappr, which was developed internally and is now open source. Clappr supports the HLS protocol, so playing a live stream became as simple as making a GET request to the Streaming Service to fetch the playlist for a specific broadcast and then, from the playlist, making subsequent GET requests, one for each video chunk. The Streaming Service runs on Nginx servers with LuaJIT, and for Cassandra interactions it uses a Lua driver we developed internally.
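The two-step flow (fetch the playlist, then one GET per chunk) can be sketched on the client side in Python. This parses an HLS media playlist following the RFC 8216 line format, resolves each segment URI against the playlist URL, and sums the `#EXTINF` durations, which approximates how far back a viewer can seek, assuming the playlist lists the whole DVR window. The URL and segment names are made up, and this is not how Clappr itself is implemented.

```python
from typing import List, Tuple
from urllib.parse import urljoin


def parse_media_playlist(text: str) -> Tuple[List[str], float]:
    """Return (segment URIs, total duration in seconds) for an HLS media playlist."""
    lines = [line.strip() for line in text.splitlines()]
    if not lines or lines[0] != "#EXTM3U":
        raise ValueError("not an HLS playlist: missing #EXTM3U header")
    uris: List[str] = []
    total = 0.0
    for line in lines[1:]:
        if line.startswith("#EXTINF:"):
            # Format: #EXTINF:<duration>,[<title>]
            total += float(line[len("#EXTINF:"):].split(",", 1)[0])
        elif line and not line.startswith("#"):
            # Any non-blank line that is not a tag or comment is a segment URI.
            uris.append(line)
    return uris, total


SAMPLE = """#EXTM3U
#EXT-X-VERSION:3
#EXT-X-TARGETDURATION:6
#EXT-X-MEDIA-SEQUENCE:100
#EXTINF:6.000,
segment_100.ts
#EXTINF:6.000,
segment_101.ts
#EXTINF:5.500,
segment_102.ts
"""

# Hypothetical playlist URL; relative segment URIs resolve against it.
PLAYLIST_URL = "http://streaming.example.com/channel1/playlist.m3u8"
uris, seconds = parse_media_playlist(SAMPLE)
chunk_urls = [urljoin(PLAYLIST_URL, uri) for uri in uris]
print(chunk_urls, seconds)
```

Each entry in `chunk_urls` is then a separate GET, which is what lets the Streaming Service answer every request with a single lookup by chunk name.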

Putting the new architecture to the test

After the Brazilian elections we were confident enough to put this new architecture to the test on our two main products, Globosat Play (http://globosatplay.com) and Globo Play (http://globoplay.com). That resulted in 168 new streams, taking up 88 GB of disk space, being ingested and streamed on the platform. Both products are a huge success, with more than 14 million users watching videos monthly on desktops, mobile phones, tablets and smart TVs. The decision to use Cassandra seems to have been the right one for us, as you can see from a sampling of our metrics below.

Getting ready for the Olympic Games

Now, with the Olympic Games starting in a couple of months, we need to scale our architecture even further, as we are adding 324 new streams to be broadcast. That’s more than three times our current workload! We are configuring another Cassandra datacenter, in a different physical datacenter, and adding it to the same cluster. The automatic multi-datacenter replication is just beautiful! We are also migrating to DataStax Enterprise so we can rely on 24/7 support.
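On the schema side, replicating to an additional datacenter mostly comes down to the keyspace's replication settings: with `NetworkTopologyStrategy`, each datacenter is listed with its own replication factor, and Cassandra keeps that many copies of every row in each listed datacenter. This Python sketch only renders the `ALTER KEYSPACE` statement. The keyspace name `live`, the datacenter names `DC1`/`DC2` and the factor of two per datacenter are assumptions; real datacenter names must match what the cluster's snitch reports, and the usual follow-up is running `nodetool rebuild` on the new datacenter's nodes to stream existing data.

```python
from typing import Mapping


def alter_keyspace_cql(keyspace: str, rf_per_dc: Mapping[str, int]) -> str:
    """Render an ALTER KEYSPACE statement using NetworkTopologyStrategy."""
    if not rf_per_dc:
        raise ValueError("at least one datacenter is required")
    for dc, rf in rf_per_dc.items():
        if rf < 1:
            raise ValueError(f"replication factor for {dc} must be >= 1")
    # Each datacenter name maps to the number of replicas kept in it.
    options = ", ".join(f"'{dc}': {rf}" for dc, rf in rf_per_dc.items())
    return (
        f"ALTER KEYSPACE {keyspace} WITH replication = "
        f"{{'class': 'NetworkTopologyStrategy', {options}}};"
    )


# Hypothetical names: keyspace "live", the existing DC1 plus the new DC2.
print(alter_keyspace_cql("live", {"DC1": 2, "DC2": 2}))
```

Because replication is declared per datacenter, the new datacenter can later be given a different factor without touching the existing one.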

Looking ahead…

One of the features built into Globo Play is the ability to broadcast regional TV shows, produced by Globo’s affiliates across the country, to users based on their geographic location, so users in one region watch different content from users in other regions. Currently all this data is stored in a single Cassandra datacenter, and one of the things we are looking to improve is spreading it across region-based datacenters, together with some kind of backup strategy between them. The idea is to improve end-user response time, distribute content better and avoid the unnecessary network hops we have today. For reference, see this article.

Cassandra setup

We are currently running two DataStax Enterprise 4.8 datacenters with four nodes each. Our replication factor is two and our queries use consistency level ONE: since our dataset is never updated, there is no need to check consistency against other nodes, which improves response time. Each node runs RHEL 6.5 and has 24 cores, 64 GB of RAM and a 1 TB SSD.
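The choice of ONE also matters for availability, which the standard quorum rule, floor(RF / 2) + 1, makes visible: with two replicas in a datacenter, a local quorum means both of them, so one node down would fail LOCAL_QUORUM requests for its data, while ONE still succeeds. This Python sketch works through that arithmetic, assuming the replication factor of two applies per datacenter (the text does not say) and using hypothetical datacenter names.

```python
from typing import Mapping

LOCAL_LEVELS = {"LOCAL_ONE", "LOCAL_QUORUM"}


def quorum(rf: int) -> int:
    # A majority of the replicas: floor(rf / 2) + 1.
    return rf // 2 + 1


def required_acks(level: str, rf_per_dc: Mapping[str, int], local_dc: str) -> int:
    """Replica responses the coordinator waits for at the given consistency level."""
    total_rf = sum(rf_per_dc.values())
    local_rf = rf_per_dc[local_dc]
    needed = {
        "ONE": 1,  # any replica, in any datacenter
        "LOCAL_ONE": 1,  # any replica in the coordinator's datacenter
        "QUORUM": quorum(total_rf),  # majority across all datacenters
        "LOCAL_QUORUM": quorum(local_rf),  # majority within the local datacenter
        "ALL": total_rf,
    }
    return needed[level]


def replicas_down_tolerated(level: str, rf_per_dc: Mapping[str, int], local_dc: str) -> int:
    """How many replicas of a row may be unavailable while the request still succeeds."""
    eligible = rf_per_dc[local_dc] if level in LOCAL_LEVELS else sum(rf_per_dc.values())
    return eligible - required_acks(level, rf_per_dc, local_dc)


# Assumes RF 2 in each of two datacenters and a coordinator in "DC1" (hypothetical names).
RF = {"DC1": 2, "DC2": 2}
for level in ("ONE", "LOCAL_ONE", "LOCAL_QUORUM", "QUORUM", "ALL"):
    print(level, required_acks(level, RF, "DC1"), replicas_down_tolerated(level, RF, "DC1"))
```

Since chunks are written once and never modified, a read at ONE cannot return a stale version of a chunk; at worst it misses one that has not replicated yet, which is the trade-off the setup accepts for lower latency.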

Conclusion

I believe Globo nailed it when it chose to adopt Cassandra as the underlying storage for broadcasting its live video content. Fast, easy to scale, highly available… well done! I’m looking forward to the next opportunity to explore it even more.

Reference: Cassandra At The Heart Of Globo’s Live Streaming Platform from our JCG partner Alexandre Martins at the Planet Cassandra blog.