How to Integrate Apache PredictionIO with MapR for Actionable Machine Learning

PredictionIO is an open source machine learning server, and is a recent addition to the Apache family. PredictionIO allows you to:

- Quickly build and deploy an engine as a web service in production with customizable templates

- Respond to dynamic queries in real time once deployed as a web service

- Evaluate and tune multiple engine variants systematically

- Unify data from multiple platforms in batch or in real time for comprehensive predictive analytics

- Speed up machine learning modeling with systematic processes and pre-built evaluation measures

- Support machine learning and data processing libraries such as Spark MLlib and OpenNLP

- Implement your own machine learning models and seamlessly incorporate them into your engine

- Simplify data infrastructure management

PredictionIO is bundled with HBase, and is used as event data storage to manage the data infrastructure for machine learning models. In this integration task, we will use MapR-DB within the MapR Converged Data Platform to replace HBase. MapR-DB is implemented directly in the MapR File System. The resulting advantages is that MapR-DB has no intermediate layers when performing operations on data. MapR-DB runs within the MapR-FS process, and reads/writes to disk directly. Whereas HBase mostly runs on HDFS, which it needs to communicate through JVM and HDFS, and it also communicates with the Linux file system to perform reads/writes. Further advantages could be found here in the MapR documentation.

A few lines of code need to be modified in PredictionIO to work with MapR-DB. I have created a forked version that works with MapR 5.1 and Spark 1.6.1. The Github link is here.

Preparation

The prerequisite is that you have a MapR 5.1 cluster running, with Spark 1.6.1 and an ElasticSearch server installed. We use MapR-DB (1.1.1) for event data storage, ElasticSearch for metadata storage, and MapR-FS for model data storage. In MapR-DB, there is no HBase namespace concept, so the table hierarchy is based on the hierarchy of the MapR File System. But MapR supports namespace mapping for HBase (the detailed link is here). Please note that the core-site.xml is at “/opt/mapr/hadoop/hadoop-2.7.0/etc/hadoop/” as of MapR 5.1 and you should modify core-site.xml and add a configuration as below. Also, please create a dedicated MapR volume at the path of your choice.

<property> <name>hbase.table.namespace.mappings</name> <value>*:/hbase_tables</value> </property>

Then we download and compile PredictionIO:

git clone https://github.com/mengdong/mapr-predictionio.git cd mapr-predictionio git checkout mapr ./make-distribution.sh

After it compiles, there should be a file “PredictionIO-0.10.0-SNAPSHOT.tar.gz” that was created. Copy it to a temporary path and extract it there, and copy back the jar file “pio-assembly-0.10.0-SNAPSHOT.jar” into “lib” directory under your “mapr-predictionio” folder.

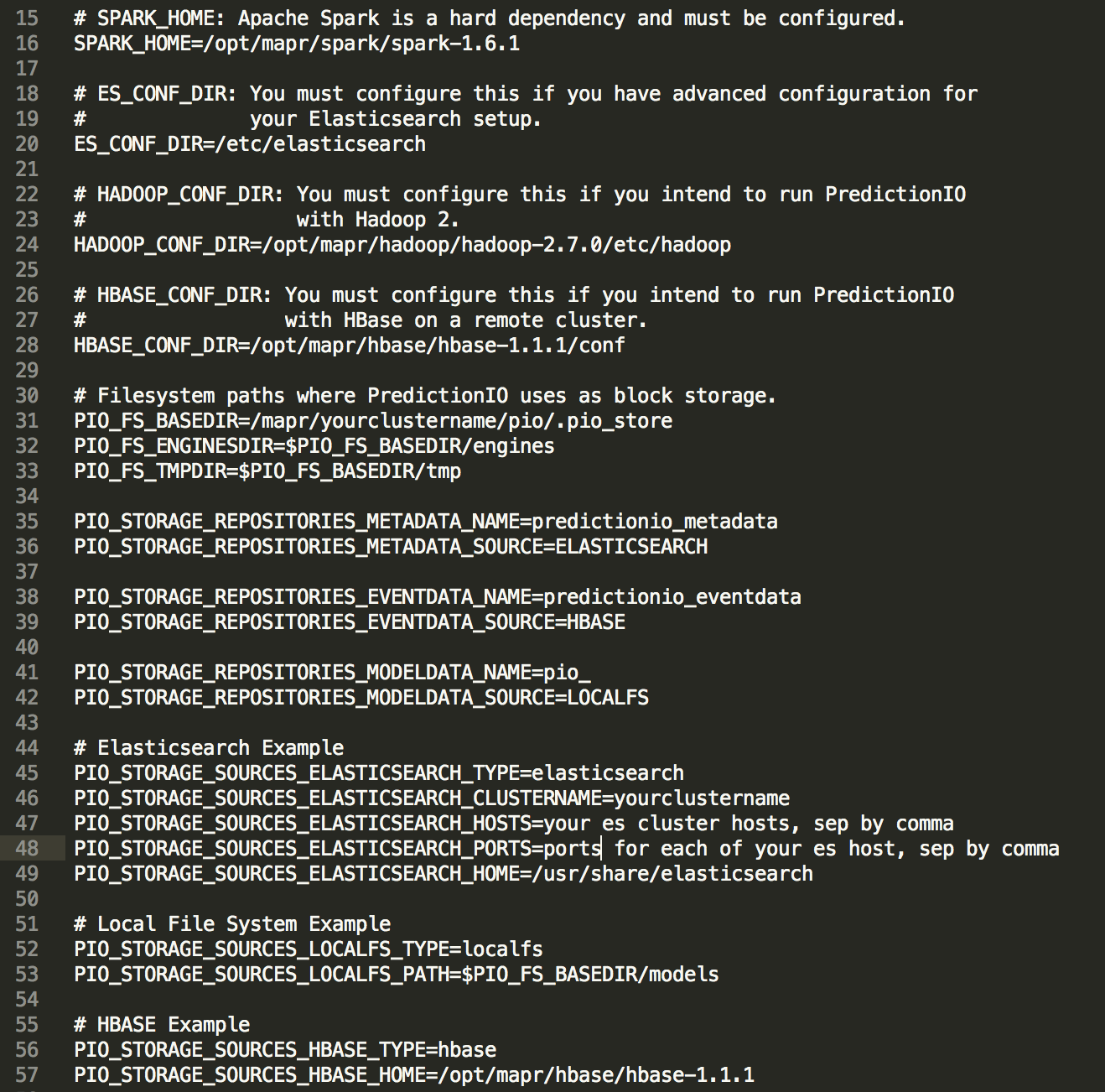

Since we want to work with MapR 5.1, we want to make sure the proper classpath is included. I have edited “bin/pio-class” in my repo to include the necessary change, but your environment could vary, so please edit accordingly. “conf/pio-env.sh” also needs to be created. I have a template for reference:

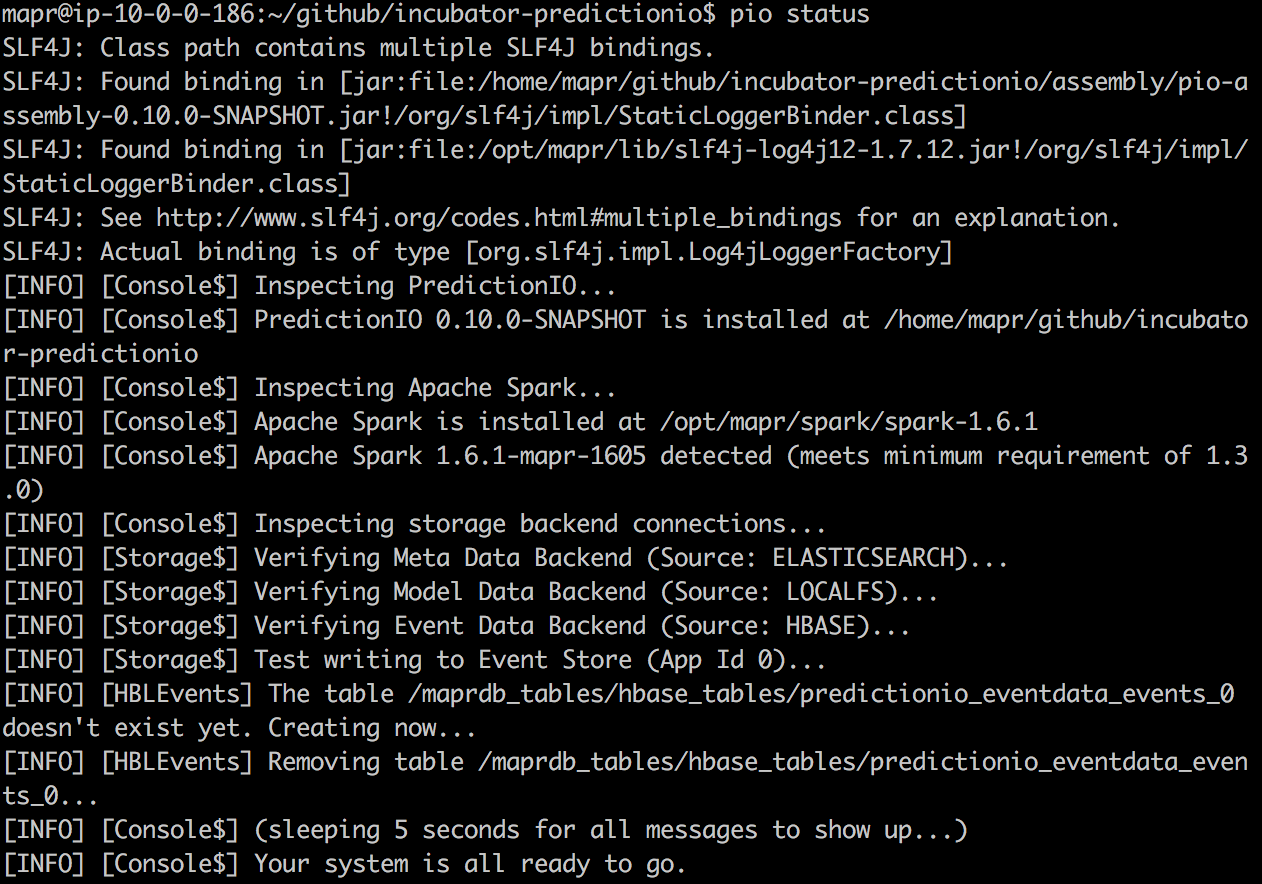

At this point, the preparation is almost finished. We should add “bin” folder of your PredictionIO to your path. Simply run “pio status” to find out if your setup is successful. If everything works out, you should observe the following log:

This means it is ready to run “bin/pio-start-all” to start your PredictionIO console. If it runs successfully, you can just run “jps” and you should observe a “console” jvm.

Deploy Machine Learning

One excellent feature of PredictionIO is the ease of developing/training/deploying your machine learning application and performing model update and model governance. There are many templates available to demo; for example: http://predictionio.incubator.apache.org/demo/textclassification/.

However, due to its recent migration to the Apache family, the links are broken. I have created a forked repo in order to make a couple of templates work. One https://github.com/mengdong/template-scala-parallel-classification is for http://predictionio.incubator.apache.org/demo/textclassification/, which is a logistic regression trained to do binary spam email classification.

Another one https://github.com/mengdong/template-scala-parallel-similarproduct is for http://predictionio.incubator.apache.org/templates/similarproduct/quickstart/, which is a recommendation engine for users and items. You can either clone my forked repo instead of using “pio template get” or you can copy “src” folder and “build.sbt” over to your “pio template get” location. Please modify the package name in your Scala code to match your input during template get if you do a copy over.

Everything else works in the predictionIO tutorial. I believe the links will be fixed very soon as well. Just follow the tutorial to register your engine to a PredictionIO application. Then train the machine learning model and further deploy the model and use it through the REST service or SDK (currently supporting python/java/php/ruby). You can also use Spark and PredictionIO to develop your own model to use MapR-DB to serve as the backend.

| Reference: | How to Integrate Apache PredictionIO with MapR for Actionable Machine Learning from our JCG partner Dong Meng at the Mapr blog. |