Short DNS Record TTL And Centralization Are Serious Risks For The Internet

Yesterday Dyn, a DNS provider, went down after a massive DDoS attack. That left many popular websites inaccessible, including Twitter, LinkedIn, eBay and others. The internet seemed to be “crawling on its knees”.

We’ll probably read an interesting post-mortem from Dyn, but why did that happen? First, DDoS capacity is increasing, fueled by insecure and infected IoT devices with access to the internet. Huge volumes of fake requests are poured onto a given server or set of servers until they become inaccessible, either because the servers cannot cope with the requests or because the network path to them doesn’t have enough throughput to accommodate the traffic.

But why did “the internet” stop because a single DNS provider was under attack? First, because of centralization. The internet is supposed to be decentralized (although I’ve argued that exactly because of DNS, it is pseudo-decentralized). But services like Dyn, UltraDNS, Amazon Route53 and also Akamai and CloudFlare centralize DNS. I can’t tell how exactly, but out of the top 500 websites according to Moz.com, 181 use one of the above 5 services as their DNS provider. Add 25 Google services that use their own nameservers, and you get just over 200 out of 500 concentrated in just 6 entities.

But centralization of the authoritative nameservers alone would not have led to yesterday’s problem. A big part of the problem, I think, is the TTL (time to live) of the DNS records, that is, the records which contain the mapping between domain name and IP address(es). The idea is that you should not always hit the authoritative nameserver (Dyn’s server(s) in this case); you should hit it only if there is no cached entry anywhere along the way of your request. Your operating system may have a cache, but more importantly, your ISP has a cache. So when subscribers of one ISP all make requests to Twitter, the requests should not go to the nameserver, but should instead be resolved by looking them up in the cache of the ISP.

If that were the case, most users would be able to access all services regardless of whether Dyn was down, because the IPs would already be cached and resolved locally. And that’s the proper distributed mode that the internet should function in.
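The caching behaviour described above can be sketched as a toy model. This is only an illustration, not how any real ISP resolver is implemented: the `TtlCache` class, the `authoritative` lookup function and the fixed answer `192.0.2.1` (a documentation address) are all made up for the example, and time is simulated rather than read from a clock.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.function.Function;

/** Toy model of a caching resolver (hypothetical, for illustration only). */
class TtlCache {
    /** A cached answer plus the simulated time (in seconds) at which it expires. */
    private static final class Entry {
        final String ip;
        final long expiresAt;

        Entry(String ip, long expiresAt) {
            this.ip = ip;
            this.expiresAt = expiresAt;
        }
    }

    private final Map<String, Entry> cache = new HashMap<>();
    private final Function<String, String> authoritative; // stand-in for the upstream nameserver
    private final long ttlSeconds;
    private int upstreamQueries = 0;

    TtlCache(Function<String, String> authoritative, long ttlSeconds) {
        this.authoritative = authoritative;
        this.ttlSeconds = ttlSeconds;
    }

    /** Resolves a name at simulated time nowSeconds; asks upstream only on a miss or after expiry. */
    String resolve(String name, long nowSeconds) {
        Entry cached = cache.get(name);
        if (cached != null && nowSeconds < cached.expiresAt) {
            return cached.ip; // served from cache, the nameserver is not contacted
        }
        upstreamQueries++;
        String ip = authoritative.apply(name);
        cache.put(name, new Entry(ip, nowSeconds + ttlSeconds));
        return ip;
    }

    int upstreamQueries() {
        return upstreamQueries;
    }

    public static void main(String[] args) {
        // One client request every 10 seconds for an hour, with a 5-minute TTL.
        TtlCache isp = new TtlCache(name -> "192.0.2.1", 300);
        for (long t = 0; t < 3600; t += 10) {
            isp.resolve("example.com", t);
        }
        System.out.println("client requests: 360, upstream queries: " + isp.upstreamQueries());
    }
}
```

In this simulation the 360 client requests turn into only 12 upstream queries, one per expired TTL window; the cache absorbs the rest.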

However, it has become a common practice to set a very short TTL on DNS records – just a few minutes. So after the few minutes expire, your browser has to ask the nameserver “what IP should I connect to in order to access twitter.com?”. That’s why the attack was so successful – because no information was cached and everyone repeatedly turned to Dyn to get the IP corresponding to the requested domain.

That practice is highly questionable, to say the least. This article explains in detail the issues of short TTLs, but let me quote some important bits:

The lower the TTL the more frequently the DNS is accessed. If not careful DNS reliability may become more important than the reliability of, say, the corporate web server.

The increasing use of very low TTLs (sub one minute) is extremely misguided if not fundamentally flawed. The most charitable explanation for the trend to lower TTL value may be to try and create a dynamic load-balancer or a fast fail-over strategy. More likely the effect will be to break the nameserver through increased load.
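To put rough numbers on “the lower the TTL the more frequently the DNS is accessed”, here is a small back-of-the-envelope calculation. It assumes a caching resolver that receives steady traffic for a name and honours the TTL exactly, so it has to refresh the record once per TTL window; the class and method names are invented for the example.

```java
/** Back-of-the-envelope arithmetic: upstream lookups per name per day for one busy resolver. */
class RefreshRate {
    static final long SECONDS_PER_DAY = 86_400;

    /** Number of TTL windows that start within one day (ceiling division). */
    static long refreshesPerDay(long ttlSeconds) {
        if (ttlSeconds <= 0) {
            throw new IllegalArgumentException("TTL must be positive");
        }
        return (SECONDS_PER_DAY + ttlSeconds - 1) / ttlSeconds;
    }

    public static void main(String[] args) {
        long[] ttls = {60, 300, 3_600, 86_400};
        for (long ttl : ttls) {
            System.out.printf("TTL %6d s -> %4d upstream lookups per name per day%n",
                    ttl, refreshesPerDay(ttl));
        }
    }
}
```

Under these assumptions a one-minute TTL means 1440 lookups per day from every busy resolver, a five-minute TTL means 288, and a 24-hour TTL means a single one.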

So we knew the risks. And it was inevitable that this problematic practice would be abused. I decided to analyze how big the problem actually is. So I took the aforementioned top 500 websites as a representative sample, fetched their A, AAAA (IPv6), CNAME and NS records, and put them into a table. You can find the code in this gist (uses the dnsjava library).

The resulting CSV can be seen here. And if you want to play with it in Excel, here is the Excel file.

Some other things that I collected: how many websites have AAAA (IPv6) records (only 79 out of 500), whether the TTLs between IPv4 and IPv6 differ (they do for 4), which DNS provider each site uses, taken from the NS records (which is how I got the figures mentioned above), and how many use CNAME instead of A records (just a few). I also collected the number of A/AAAA records, in order to see how many (potentially) utilize round-robin DNS (187). It is worth mentioning that the A records served to me may differ from those served to other users, which is also a way to do load balancing.

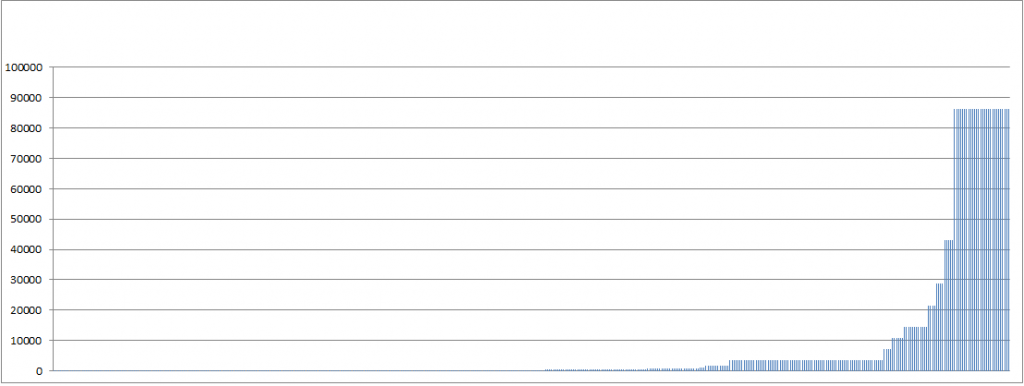

The results are a bit scary. The average TTL is only around 7600 seconds (2 hours and 6 minutes). But it gets worse when you look at the lower half of the distribution (sort the values by TTL and take the lowest 250). The average there is just 215 seconds. This means the DNS servers are hit constantly, which turns them into a real single point of failure, and “the internet goes down” after just a few minutes of DDoS.
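For readers who want to reproduce this kind of summary on their own data, the two figures (overall average and average of the lowest half) can be computed roughly as follows. This is a minimal sketch and not the code from the gist; the `TtlStats` class and the six sample TTL values are made up for illustration and are not taken from the collected CSV.

```java
import java.util.Arrays;

/** Summary statistics over a list of TTL values in seconds (illustrative sketch). */
class TtlStats {
    /** Arithmetic mean, or NaN for an empty array. */
    static double mean(long[] values) {
        return Arrays.stream(values).average().orElse(Double.NaN);
    }

    /** Mean of the lowest half of the values: sort ascending, keep the first n/2, average them. */
    static double lowerHalfMean(long[] ttls) {
        long[] sorted = ttls.clone(); // leave the caller's array untouched
        Arrays.sort(sorted);
        return mean(Arrays.copyOf(sorted, sorted.length / 2));
    }

    public static void main(String[] args) {
        long[] sample = {60, 300, 300, 3_600, 86_400, 30}; // made-up TTLs, not real measurements
        System.out.println("mean of all values:     " + mean(sample));
        System.out.println("mean of the lower half: " + lowerHalfMean(sample));
    }
}
```

Even in this tiny made-up sample a single 24-hour TTL pulls the overall mean far above what most entries look like, which is why the average of the lower half is the more telling number.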

Just a few websites have a high TTL, as can be seen from this simple chart (all 500 sites are on the X axis, the TTL is on the Y axis):

What are the benefits of the short TTL? Not many, actually. You have the flexibility to change your IP address, but you don’t do that very often, and besides, it doesn’t automatically mean all users will be pointed to the new IP, as some ISPs, routers and operating systems may ignore the TTL value and keep the cache alive for longer periods. You could do round-robin DNS, which is basically using the DNS provider as a load balancer, which sounds wrong in most cases. A short TTL can also be used for geolocation routing, serving a different IP depending on the geographical area of the request, but that doesn’t necessarily require a low TTL: if caching happens closer to the user than to the authoritative DNS server, then the user will be pointed to the nearest IP anyway, regardless of whether that value gets refreshed often or not.

A short TTL is very useful with internal infrastructure: when pointing to your internal components (e.g. a message queue, or a particular service if using microservices), low TTLs may be better. But that’s not about your main domain being accessed from the internet.

Overlay networks like BitTorrent and Bitcoin use DNS round-robin for seeding new clients with a list of peers that they can connect to (your first use of a torrent client connects you to one of several domains that each point to a number of nodes that are supposed to be always on). But that’s again a rare use case.

Overall, I think most services should go for higher TTLs. 24 hours is not too much, and you will need to keep your old IP serving requests for 24 hours anyway, because of caches that ignore the TTL value. That way services won’t care if the authoritative nameserver is down or not. And that would in turn mean that DNS providers would be a less interesting target for attacks.
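A rough way to see why a longer TTL makes an outage less painful is the simple model below. It rests on assumptions that are not in the measurements above: every resolver honours the TTL exactly, serves nothing stale, and last refreshed its entry at a uniformly random moment within one TTL before the outage began. Under those assumptions the share of resolvers that still hold a valid entry after an outage of length d is max(0, 1 - d / TTL). The class and method names are hypothetical.

```java
/** Simplified outage model (see the stated assumptions; not a measurement). */
class OutageModel {
    /** Fraction of resolvers whose cached entry is still valid after the outage has lasted outageSeconds. */
    static double fractionStillCached(long ttlSeconds, long outageSeconds) {
        if (ttlSeconds <= 0 || outageSeconds < 0) {
            throw new IllegalArgumentException("TTL must be positive and outage non-negative");
        }
        return Math.max(0.0, 1.0 - (double) outageSeconds / ttlSeconds);
    }

    public static void main(String[] args) {
        long twoHours = 2 * 3_600;
        System.out.printf("TTL 5 min, 2 h outage: %.2f%n", fractionStillCached(300, twoHours));
        System.out.printf("TTL 24 h,  2 h outage: %.2f%n", fractionStillCached(86_400, twoHours));
    }
}
```

In this model a five-minute TTL leaves no resolver with a valid entry after a two-hour outage, while a 24-hour TTL leaves roughly 92% of them unaffected.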

And I understand the flexibility that Dyn and Route53 give us. But maybe we should think of a more distributed way to gain that flexibility. Because yesterday’s attack may be just the beginning.

Reference: Short DNS Record TTL And Centralization Are Serious Risks For The Internet from our JCG partner Bozhidar Bozhanov at the Bozho’s tech blog.