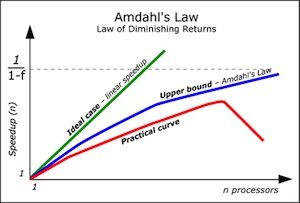

Amdahl’s law illustrated

This article explains Amdahl’s law in simple terms. Using a case study, we demonstrate how throughput and latency change as you change the number of threads performing the tasks. We also help you draw the right conclusions for your own performance tuning task at hand.

First of all, let’s refresh our memory on the definitions.

- Throughput – number of computing tasks completed per time unit. Example – 1,000 credit card payments in a minute.

- Latency – delay between invoking the operation and getting the response. Example – maximum time taken to process a credit card transaction is 25ms.

The case we built simulates a typical Java EE application that does some computation in the JVM and calls an external data source. Just like in many of the Java EE apps out there, a user initiates an HttpRequest, which the application server processes by calling either a relational database or a web service. The simulation achieves this by burning CPU cycles calculating large primes and then forcing the thread to sleep for a while. Even though the sleeping part sounds weird at first glance, it is similar to how threads are handled when waiting for an external resource: sleeping or waiting threads are removed from the list of threads eligible for scheduling until the response arrives.
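To make the idea concrete, here is a minimal sketch of what one such simulated request could look like. It is not the original test code: the class name `SimulatedRequest` and its three parameters are assumptions made up for illustration, and the prime size and count would need tuning on your own machine to land near the CPU time you want.

```java
import java.math.BigInteger;

/** Hypothetical stand-in for one simulated request: CPU work, then a blocking wait. */
final class SimulatedRequest implements Runnable {
    private final int primeBits;    // assumed knob: bit length where the prime search starts
    private final int primeCount;   // assumed knob: how many consecutive primes to find
    private final long sleepMillis; // simulated time spent waiting on the external resource

    SimulatedRequest(int primeBits, int primeCount, long sleepMillis) {
        this.primeBits = primeBits;
        this.primeCount = primeCount;
        this.sleepMillis = sleepMillis;
    }

    /** Burns CPU cycles by walking through consecutive (probable) primes. */
    BigInteger burnCpu() {
        BigInteger candidate = BigInteger.ONE.shiftLeft(primeBits);
        for (int i = 0; i < primeCount; i++) {
            candidate = candidate.nextProbablePrime();
        }
        return candidate;
    }

    @Override
    public void run() {
        burnCpu();
        try {
            // While sleeping, the thread is not competing for a core,
            // much like a thread blocked on a database or web service call.
            Thread.sleep(sleepMillis);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt(); // preserve the interrupt for the caller
        }
    }
}
```

Larger values of `primeBits` and `primeCount` make the CPU-bound part longer, while `sleepMillis` controls the blocked part independently.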

The application we built also contains a thread pool, just as most modern application servers do. We are going to vary the size of the pool and bombard the application with a lot of simulated “HttpRequests” to find out how latency and throughput look under load.
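A test harness along these lines could be sketched as follows. This is an illustration under assumptions, not the code behind the measurements: `PoolBenchmark` and `Result` are hypothetical names, and latency is assumed to be measured from the moment a worker thread picks the task up, so time spent queued in front of the pool is not counted.

```java
import java.util.Arrays;
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;

/** Hypothetical harness: runs the same work on a fixed-size pool and reports two metrics. */
final class PoolBenchmark {

    static final class Result {
        final double throughputPerSecond;
        final long medianLatencyMillis;

        Result(double throughputPerSecond, long medianLatencyMillis) {
            this.throughputPerSecond = throughputPerSecond;
            this.medianLatencyMillis = medianLatencyMillis;
        }
    }

    static Result run(int threads, int tasks, Runnable work) {
        if (threads < 1 || tasks < 1) {
            throw new IllegalArgumentException("threads and tasks must be positive");
        }
        ExecutorService pool = Executors.newFixedThreadPool(threads);
        long[] latenciesNanos = new long[tasks];
        CountDownLatch done = new CountDownLatch(tasks);
        long start = System.nanoTime();
        for (int i = 0; i < tasks; i++) {
            final int index = i;
            pool.execute(() -> {
                long began = System.nanoTime(); // assumption: latency excludes queueing time
                try {
                    work.run();
                } finally {
                    latenciesNanos[index] = System.nanoTime() - began;
                    done.countDown();
                }
            });
        }
        try {
            done.await(); // countDown() happens-before await() returns, so the array is visible
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException("benchmark interrupted", e);
        } finally {
            pool.shutdown();
        }
        long elapsedNanos = System.nanoTime() - start;
        Arrays.sort(latenciesNanos);
        long medianMillis = latenciesNanos[tasks / 2] / 1_000_000;
        double throughput = tasks / (elapsedNanos / 1_000_000_000.0);
        return new Result(throughput, medianMillis);
    }
}
```

Calling `PoolBenchmark.run(threads, 4000, work)` in a loop over different pool sizes would produce one throughput and one median latency figure per pool size.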

But before doing so, can we give our best guess at what the results will look like? The tests were run on a mid-2010 MacBook Pro with a 2.66GHz Intel i7: two cores with hyperthreading enabled, so four virtual cores for our threads to play with. At any given moment, each of our four cores is making progress on one thread.

In a single-threaded environment, the code used in our tests burns CPU cycles for ~50ms and then sleeps for 1,000ms. So there is a 1-to-20 ratio between executing code in the JVM and being blocked on the external call.

Considering that the total request time is 1,050ms, out of which 1,000ms is spent waiting, we can calculate the optimal number of tasks for each core to process. It is equal to 1050/(1050-1000) = 21 tasks, out of which one is currently being processed and 20 are waiting for the external resource. Since we have four cores in total, the optimal number of threads should be close to 4 × 21 = 84.
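The same back-of-the-envelope estimate can be written down as a tiny helper. The class and method names here are made up for illustration, and the formula is only the naive estimate from the paragraph above, not a guarantee of where the real optimum lies.

```java
/** Hypothetical helper for the naive pool size estimate: total time divided by CPU time, per core. */
final class ThreadPoolSizing {

    /** Number of tasks one core can juggle: one running while the others wait. */
    static long threadsPerCore(long cpuMillis, long waitMillis) {
        if (cpuMillis <= 0 || waitMillis < 0) {
            throw new IllegalArgumentException("cpuMillis must be > 0 and waitMillis >= 0");
        }
        // total request time / time actually spent on the CPU
        return Math.round((double) (cpuMillis + waitMillis) / cpuMillis);
    }

    /** Naive pool size estimate for the whole machine. */
    static long poolSize(int cores, long cpuMillis, long waitMillis) {
        return cores * threadsPerCore(cpuMillis, waitMillis);
    }
}
```

With the numbers from this article, `ThreadPoolSizing.poolSize(4, 50, 1000)` gives 84. On another machine, `Runtime.getRuntime().availableProcessors()` can supply the number of logical cores.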

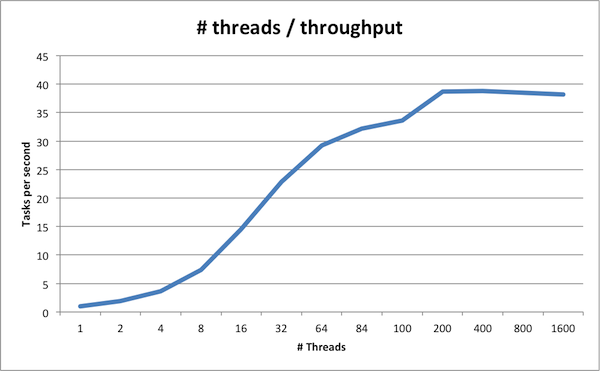

Now after running the tests with 4,000 tasks to be executed and varying the number of threads in the pool between 1 and 3,200, we got the following results:

We have a beautiful case of Amdahl’s law in practice: adding threads has no significant effect on performance beyond 60-100 threads. But it does not exactly confirm our initial guess that the best results would come at 84 threads. Using 84 threads, we are able to complete approximately 32 tasks per second, whereas pools sized between 200 and 1,600 threads were all able to execute approximately 39 tasks per second. Why so? Apparently modern processors are a lot smarter than our naive calculation, and the schedulers are not just applying simple round-robin algorithms to select the threads. If any of our readers can explain the surprise, we are more than eager to listen.
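For reference, the textbook form of Amdahl’s law says that if a fraction p of the work can be spread over n workers, the overall speedup is 1 / ((1 - p) + p / n). The sketch below simply evaluates that formula so you can see the plateau for yourself. The class and method names are made up for illustration, and the value of p for the experiment above is not something the measurements give us directly.

```java
/** Hypothetical helper evaluating the textbook form of Amdahl's law. */
final class Amdahl {

    /**
     * @param parallelFraction share of the work that can run in parallel, between 0 and 1
     * @param workers          number of workers sharing the parallel part, at least 1
     * @return speedup relative to a single worker
     */
    static double speedup(double parallelFraction, int workers) {
        if (parallelFraction < 0.0 || parallelFraction > 1.0 || workers < 1) {
            throw new IllegalArgumentException("need 0 <= parallelFraction <= 1 and workers >= 1");
        }
        double serialFraction = 1.0 - parallelFraction;
        return 1.0 / (serialFraction + parallelFraction / workers);
    }
}
```

As the number of workers grows, the result approaches 1 / (1 - p), so each additional worker buys less than the previous one.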

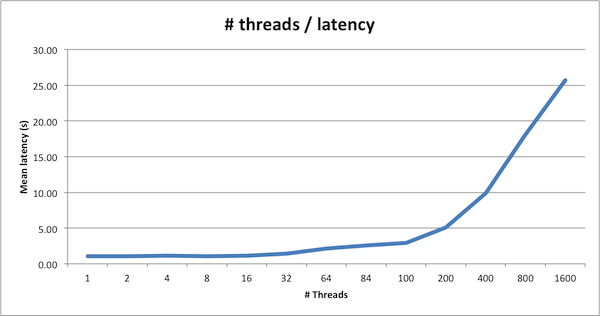

But does this indicate that for this particular case we can throw in a lot more threads than our initial estimate of 84? Not so fast. Let’s look at another metric, latency:

We see two significant bumps in latency. Median latency increases from 1,100ms to 1,500ms when we go from 16 threads to 32. Another, more severe increase occurs when we go from 100 to 200 threads: there the increase is already from 3,200ms to 5,000ms, and it keeps growing quickly, indicating that context switches start taking their toll.

Now in real life this means that it might not be a good decision to throw in 800 threads in this case. Even though the throughput is nice and we are processing approximately 39 requests per second, the average user has to wait 18 seconds for a response to their request.

Now what can we conclude from here? Without actual requirements for throughput and latency we cannot push further. But in real life you have requirements in place, in the form of “No request can take more than 3,000ms and the average request must be completed in less than 2,000ms” and “We must be able to process 30 requests per second”. Using those sample criteria, we see that our sweet spot is anywhere between 64 and 100 threads.

Another important remark: when increasing the number of threads in the pool to 3,200 (actually 2,400 was already enough), we exhausted the available resources and faced the good ol’ java.lang.OutOfMemoryError: unable to create new native thread message, which is the JVM’s way of saying that the OS is running out of resources for your application. Depending on your operating system you can raise those limits (ulimit on Linux, for example), but this goes beyond the scope of this article.

Reference: Amdahl’s law illustrated from our JCG partner Vladimir Sor at the Plumbr Blog.