A walk-through for the Twitter streaming API

Introduction

Analyzing tweets is all the rage, and if you are new to the game you want to know how to get them programmatically. There are many ways to do this, but a great start is to use the Twitter streaming API, a RESTful service that allows you to pull tweets in real time based on criteria you specify. For most people, this will mean having access to the spritzer, which provides only a very small percentage of all the tweets going through Twitter at any given moment. For access to more, you need to have a special relationship with Twitter or pay Twitter or an affiliate like Gnip.

This post provides a basic walk-through for using the Twitter streaming API. You can get all of this from the documentation provided by Twitter, but this will be slightly easier going for those new to such services. (This post is mainly geared toward the first phase of the course project for students in my Applied Natural Language Processing class this semester.) You need to have a Twitter account to do this walk-through, so obtain one now if you don’t have one already.

Accessing a random sample of tweets

First, trying pulling a random sample of tweets using your browser by going to the following link.

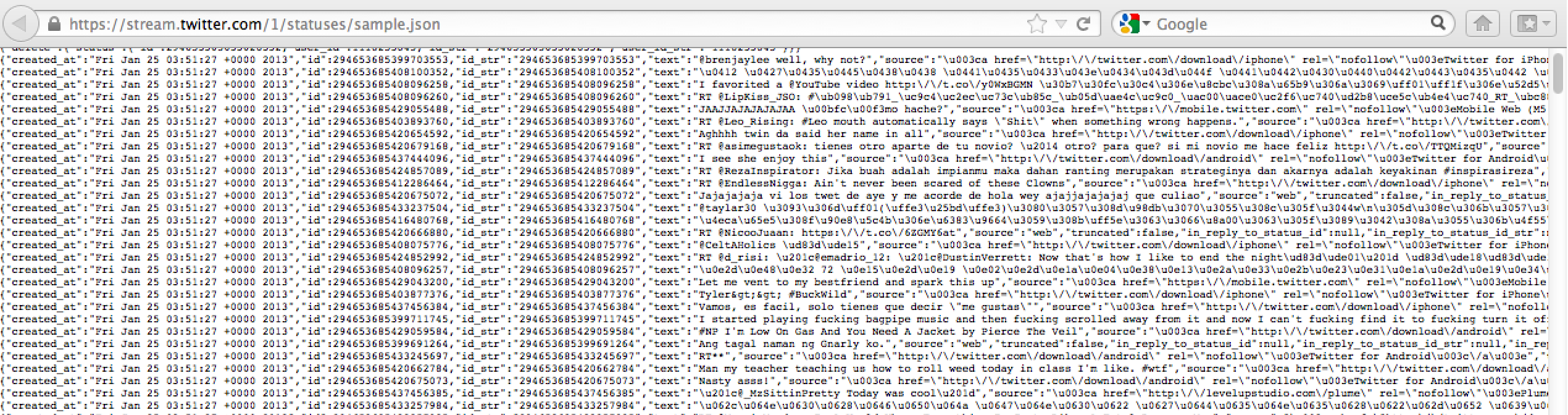

You should see a growing, unwieldy list of raw tweets flowing by. It should look something like the following image.

Here’s an example of a “raw” tweet (which comes in JSON, or JavaScript Object Notation):

{"text":"#LetsGoMavs til the end RT @dallasmavs: Are You ALL IN?","truncated":false,"retweeted":false,"geo":null,"retweet_count":0,"source":"web","in_reply_to_status_id_str":null,"created_at":"Wed Apr 25 15:47:39 +0000 2012","in_reply_to_user_id_str":null,"id_str":"195177260792299521","coordinates":null,"in_reply_to_user_id":null,"favorited":false,"entities":{"hashtags":[{"text":"LetsGoMavs","indices":[0,11]}],"urls":[],"user_mentions":[{"indices":[27,38],"screen_name":"dallasmavs","id_str":"22185437","name":"Dallas Mavericks","id":22185437}]},"contributors":null,"user":{"show_all_inline_media":true,"statuses_count":3101,"following":null,"profile_background_image_url_https":"https:\/\/si0.twimg.com\/profile_background_images\/285480449\/AAC_med500.jpg","profile_sidebar_border_color":"eeeeee","screen_name":"flyingcape","follow_request_sent":null,"verified":false,"listed_count":2,"profile_use_background_image":true,"time_zone":"Mountain Time (US & Canada)","description":"HUGE ROCKETS & MAVS fan. Lets take down the Lakers & beat up on the East. Inaugural member of the FC Dallas - Fort Worth fan club.","profile_text_color":"333333","default_profile":false,"profile_background_image_url":"http:\/\/a0.twimg.com\/profile_background_images\/285480449\/AAC_med500.jpg","created_at":"Thu Oct 21 15:40:21 +0000 2010","is_translator":false,"profile_link_color":"1212cc","followers_count":35,"url":null,"profile_image_url_https":"https:\/\/si0.twimg.com\/profile_images\/1658982184\/204970_10100514487859080_7909803_68807593_5366704_o_normal.jpg","profile_image_url":"http:\/\/a0.twimg.com\/profile_images\/1658982184\/204970_10100514487859080_7909803_68807593_5366704_o_normal.jpg","id_str":"205774740","protected":false,"contributors_enabled":false,"geo_enabled":true,"notifications":null,"profile_background_color":"0a2afa","name":"Mandy","default_profile_image":false,"lang":"en","profile_background_tile":true,"friends_count":48,"location":"ATX \/ FDub. From Galveston !","id":205774740,"utc_offset":-25200,"favourites_count":231,"profile_sidebar_fill_color":"efefef"},"id":195177260792299521,"place":{"bounding_box":{"type":"Polygon","coordinates":[[[-97.938383,30.098659],[-97.56842,30.098659],[-97.56842,30.49685],[-97.938383,30.49685]]]},"country":"United States","url":"http:\/\/api.twitter.com\/1\/geo\/id\/c3f37afa9efcf94b.json","attributes":{},"full_name":"Austin, TX","country_code":"US","name":"Austin","place_type":"city","id":"c3f37afa9efcf94b"},"in_reply_to_screen_name":null,"in_reply_to_status_id":null}

There is a lot of information in there beyond the tweet text itself, which is simply “#LetsGoMavs til the end RT @dallasmavs: Are You ALL IN?” The JSON is basically a map from attributes to values (and values may themselves be such a map, e.g. for the “user” attribute above). You can see how many times the tweet has been retweeted (which will be zero when the tweet is first published), what time it was created, the unique tweet id, the geo-coordinates (if available), and more. If an attribute does not have a value for the tweet, it is ‘null’.
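To make the map-of-maps structure concrete, here is a minimal sketch in Python (my choice for illustration; any language with a JSON library works the same way). It uses only the standard `json` module and a trimmed copy of the tweet above, so most attributes are left out.

```python
import json

# A trimmed copy of the raw tweet above; most attributes are omitted for brevity.
raw = (
    '{"text":"#LetsGoMavs til the end RT @dallasmavs: Are You ALL IN?",'
    '"geo":null,"retweet_count":0,'
    '"created_at":"Wed Apr 25 15:47:39 +0000 2012",'
    '"id_str":"195177260792299521",'
    '"entities":{"hashtags":[{"text":"LetsGoMavs","indices":[0,11]}]},'
    '"user":{"screen_name":"flyingcape","followers_count":35}}'
)

tweet = json.loads(raw)  # the JSON object becomes a Python dict

text = tweet["text"]
screen_name = tweet["user"]["screen_name"]  # "user" is itself a nested map
hashtags = [tag["text"] for tag in tweet["entities"]["hashtags"]]
geo = tweet["geo"]  # JSON null is loaded as None

print(screen_name, "wrote:", text)
print("hashtags:", hashtags, "| retweets:", tweet["retweet_count"], "| geo:", geo)
```

Attributes that are ‘null’ in the JSON come back as `None`, so check for that before using fields such as “geo” or “coordinates”.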

I will return to JSON processing of tweets in a later tutorial, but you can get a head start by seeing my tutorial on using Scala to process JSON in general.

Command line access to tweets

Assuming you were able to view tweets in the browser, we can now proceed to the command line. For this, it will be convenient to first set environment variables for your Twitter username and password.

$ export TWUSER=foo
$ export TWPWD=bar

Obviously, you need to provide your Twitter account details instead of foo and bar…

Next, we’ll use the program curl to interact with the API. Try it out by downloading this blog post.

$ curl http://bcomposes.wordpress.com/2013/01/25/a-walk-through-for-the-twitter-streaming-api/ > bcomposes-twitter-api.html
$ less bcomposes-twitter-api.html

Given that you pulled tweets from the API using your web browser, and that curl can access web pages in this way, it is simple to use curl to get tweets and direct them straight to a file.

$ curl https://stream.twitter.com/1/statuses/sample.json -u$TWUSER:$TWPWD > tweets.json

That’s it: you now have an ever-growing file with randomly sampled tweets. Have a look and try not to lose your faith in humanity.
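If you want to read that file back, the following is a minimal Python sketch. It assumes the stream writes one JSON message per line, possibly interleaved with blank keep-alive lines and non-tweet messages such as deletion notices, and that the last line may be incomplete while curl is still writing. The function name `iter_tweets` is my own, not part of any Twitter library.

```python
import json

def iter_tweets(lines):
    """Yield a dict for each well-formed tweet in an iterable of text lines."""
    for line in lines:
        line = line.strip()
        if not line:
            continue  # blank keep-alive line
        try:
            message = json.loads(line)
        except json.JSONDecodeError:
            continue  # e.g. a partially written final line
        # Keep only messages that look like tweets (they carry a "text" attribute).
        if isinstance(message, dict) and "text" in message:
            yield message

# In-memory stand-in for the file; with real data you would instead do:
#   with open("tweets.json", encoding="utf-8") as f:
#       for tweet in iter_tweets(f): ...
sample_lines = [
    '{"text":"hello world","user":{"screen_name":"someone"}}\n',
    '\n',
    '{"delete":{"status":{"id":1,"user_id":2}}}\n',
    '{"text":"cut off mid-wri',
]
for tweet in iter_tweets(sample_lines):
    print(tweet["user"]["screen_name"], ":", tweet["text"])
```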

Pulling tweets with specific properties

You might want to get the tweets from specific users rather than a random sample. This requires user ids rather than the user names we usually see. The id for a user can be obtained from the Twitter API by looking at the /users/show endpoint. For example, requesting my information from that endpoint gives:

<user>
  <id>119837224</id>
  <name>Jason Baldridge</name>
  <screen_name>jasonbaldridge</screen_name>
  <location>Austin, Texas</location>
  <description>Assoc. Prof., Computational Linguistics, UT Austin. Senior Data Scientist, Converseon. OpenNLP developer. Scala, Java, R, and Python programmer.</description>
  ...MORE...

So, to follow @jasonbaldridge via the Twitter API, you need user id 119837224. You can pull my tweets via the API using the “follow” query parameter.

$ curl -d follow=119837224 https://stream.twitter.com/1/statuses/filter.json -u$TWUSER:$TWPWD

There is a good chance I’m not tweeting right now, so you’ll probably not see anything. Let’s follow more users, which we can do by adding more ids separated by commas.

$ curl -d follow=1344951,5988062,807095,3108351 https://stream.twitter.com/1/statuses/filter.json -u$TWUSER:$TWPWD

This will follow Wired Magazine (@wired), The Economist (@theeconomist), the New York Times (@nytimes), and the Wall Street Journal (@wsj). You can also write those ids to a file and read them from the file. For example:

$ echo 'follow=1344951,5988062,807095,3108351' > following
$ curl -d @following https://stream.twitter.com/1/statuses/filter.json -u$TWUSER:$TWPWD
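If the list of accounts grows, the same file can be generated from a script. The sketch below is a small Python illustration; the `ACCOUNTS` mapping and both function names are hypothetical helpers of mine, and the screen names are kept only for readability since the API needs the numeric ids.

```python
from pathlib import Path

# Screen name -> user id, for the four accounts followed above.
ACCOUNTS = {
    "wired": 1344951,
    "theeconomist": 5988062,
    "nytimes": 807095,
    "wsj": 3108351,
}

def follow_body(user_ids):
    """Return the form body that curl sends with -d, e.g. 'follow=1,2,3'."""
    return "follow=" + ",".join(str(uid) for uid in user_ids)

def write_follow_file(path, user_ids):
    """Write the body to a file that curl can read with -d @path."""
    Path(path).write_text(follow_body(user_ids) + "\n", encoding="utf-8")

print(follow_body(ACCOUNTS.values()))
```

Calling `write_follow_file("following", ACCOUNTS.values())` would produce a file equivalent to the one created with echo.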

You can of course edit the file “following” rather than using echo to create it. Also, the file can be named whatever you like (the name “following” is not important here).

You can search for a particular term in tweets, such as “Scala”, using the “track” query parameter.

$ curl -d track=scala https://stream.twitter.com/1/statuses/filter.json -u$TWUSER:$TWPWD

And, no surprise, you can search for multiple items by using commas to separate them.

$ curl -d track=scala,python,java https://stream.twitter.com/1/statuses/filter.json -u$TWUSER:$TWPWD

However, this only requires that a tweet match at least one of these terms. If you want to ensure that multiple terms match, separate them with spaces instead: a comma acts as OR, while a space within a comma-separated phrase acts as AND. Because the value then contains spaces, it is convenient to write it to a file and then refer to that file. For example, to get tweets that have both “sentiment” and “analysis” OR both “machine” and “learning” OR both “text” and “analytics”, you could do the following:

$ echo 'track=sentiment analysis,machine learning,text analytics' > tracking
$ curl -d @tracking https://stream.twitter.com/1/statuses/filter.json -u$TWUSER:$TWPWD
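The AND/OR behaviour of “track” can be mimicked locally, which is handy for checking a filter against text you already have. The Python sketch below is a simplified approximation of my own, not Twitter's actual matcher: it lowercases the text, splits it into word tokens, and ignores details such as URL and punctuation handling.

```python
import re

def matches_track(text, track):
    """Approximate 'track' matching: commas mean OR, spaces within a phrase mean AND."""
    tokens = set(re.findall(r"\w+", text.lower()))
    for phrase in track.split(","):
        terms = phrase.lower().split()
        # Every term of the phrase must appear somewhere in the text, in any order.
        if terms and all(term in tokens for term in terms):
            return True
    return False

track = "sentiment analysis,machine learning,text analytics"
print(matches_track("Machine learning is fun", track))       # both terms present
print(matches_track("I have a strong sentiment about this", track))  # only one term
```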

You can pull tweets from a specific rectangular area (bounding box) on the Earth’s surface. For example, the following pulls geotagged tweets from Austin, Texas.

$ curl -d locations=-97.8,30.25,-97.65,30.35 https://stream.twitter.com/1/statuses/filter.json -u$TWUSER:$TWPWD

The bounding box is given as longitude (bottom left), latitude (bottom left), longitude (top right), latitude (top right). You can add further bounding boxes to capture more locations. For example, the following captures tweets from Austin, San Francisco, and New York City.

$ curl -d locations=-97.8,30.25,-97.65,30.35,-122.75,36.8,-121.75,37.8,-74,40,-73,41 https://stream.twitter.com/1/statuses/filter.json -u$TWUSER:$TWPWD
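Getting the coordinate order wrong is an easy mistake, since the “locations” parameter expects longitude before latitude, with the southwest corner first. The Python sketch below names each value explicitly and rebuilds the parameter used above; the `BoundingBox` class and `locations_param` function are illustrative names of mine, not part of any Twitter library.

```python
from typing import NamedTuple

class BoundingBox(NamedTuple):
    west: float   # longitude of the bottom-left (southwest) corner
    south: float  # latitude of the bottom-left (southwest) corner
    east: float   # longitude of the top-right (northeast) corner
    north: float  # latitude of the top-right (northeast) corner

    def contains(self, lon, lat):
        """True if the point lies inside the box (edges included)."""
        return self.west <= lon <= self.east and self.south <= lat <= self.north

def locations_param(boxes):
    """Flatten boxes into the comma-separated value for 'locations'."""
    return ",".join(str(value) for box in boxes for value in box)

austin = BoundingBox(-97.8, 30.25, -97.65, 30.35)
san_francisco = BoundingBox(-122.75, 36.8, -121.75, 37.8)
new_york = BoundingBox(-74, 40, -73, 41)

print("locations=" + locations_param([austin, san_francisco, new_york]))
print(austin.contains(-97.74, 30.27))  # a point in central Austin
```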

Conclusion

It’s all pretty straightforward, and quite handy for many kinds of tweet-gathering needs. One of the problems is that Twitter will drop the connection at times, and you’ll end up missing tweets until you start a new process. If you need constant monitoring, see UT Austin’s Twools (Twitter tools) for obtaining a steady stream of tweets that picks up whenever Twitter drops your connection.
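For a sense of what picking up after a dropped connection involves, here is a minimal Python sketch of a reconnect loop with exponential backoff. It is not how Twools works, and the delays are arbitrary choices of mine rather than Twitter's recommended values. The `connect` argument is a hypothetical callable that blocks while the stream is healthy and either returns or raises `OSError` when the connection ends; here it is simulated so the example runs without network access.

```python
import time

def run_with_reconnect(connect, max_attempts, base_delay=1.0, max_delay=60.0,
                       sleep=time.sleep):
    """Call connect() up to max_attempts times, backing off after failures."""
    failures = 0
    for attempt in range(max_attempts):
        try:
            connect()      # blocks for as long as the stream stays open
            failures = 0   # the connection worked, so reset the backoff
        except OSError as err:
            failures += 1
            print("connection failed:", err)
        if attempt < max_attempts - 1:
            # Double the wait after each consecutive failure, up to max_delay.
            sleep(min(base_delay * 2 ** failures, max_delay))

def always_fails():
    # Stand-in for a real streaming call; simulates a dropped connection.
    raise ConnectionError("simulated dropped connection")

waits = []  # record the delays instead of actually sleeping
run_with_reconnect(always_fails, max_attempts=4, base_delay=1.0, max_delay=5.0,
                   sleep=waits.append)
print(waits)
```

Passing `sleep` as a parameter keeps the loop easy to test; in real use you would leave it at the default `time.sleep`.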

Reference: A walk-through for the Twitter streaming API from our JCG partner Jason Baldridge at the Bcomposes blog.
