Database Week at the AWS Loft

Here are my notes:

https://databaseweekoctober2019sf.splashthat.com

Databases on AWS: The Right Tool for the Right Job

I’m not taking super deep notes for many of these talks. I’m focusing on the highlights.

PostgreSQL came after MySQL.

8 categories of DBs on AWS:

- Relational

- Key value

- Document

- In-memory

- Graph

- Search

- Time series

- Ledger

Search for: AWS Database Services

For relational, they have Amazon Aurora. It’s compatible with MySQL and PostgreSQL. It has 5x throughput compared to MySQL (on RDS) and 3x compared to PostgreSQL (on RDS). It can scale out to 15 read replicas. It’s fault tolerant and self-healing. It keeps 6 copies of the data across three AZs. It can provide continuous backup to S3. It has encryption at rest and in transit. It’s fully managed by Amazon. They support cross region (unclear) and multimaster.

Amazon.com itself uses Aurora at least for some stuff.

There’s a database migration service.

They also support other DBs such as SQL Server using RDS.

Next, she covered key-value datastores.

DynamoDB is their key value store. It doesn’t require server provisioning. It provides consistent, single-digit ms response times at any scale. It has unlimited throughput. It’s encrypted at rest and in transit. It supports global DB replication across regions.

Next, she covered document databases. JSON documents are first-class objects in the DB. DocumentDB is their solution. This is good for user profiles, etc. It can scale up to 15 replicas. It provides milisecond latency. It’s MongoDB compatible.

In-memory databases: No persistence. In-memory. Microsecond performance. Strings, hashes, lists, sets, etc. Like Redis. They have ElastiCache. They are Redis and Memcached compatible. You can use shards and replicas to scale.

Full text search: They have Elasticsearch Service. It’s fully managed. It’s replicated across AZs with monitoring and self-healing.

Graph databases: Relationships are first-class objects. Data is modeled and queried as a graph. Vertices are connected by edges. It allows you to create and navigate relations between data easily and quickly. Amazon Neptune is a fully-managed graph database. It provides six replicas of your data. It supports Gremlin and SparkQL for querying it.

Time series data: It’s a sequence of data points recorded over an interval of time. Storing time series data in a relational DB is challenging. Amazon Timestream is their solution. It’s “serverless”.

Ledger: It’s related to block chain. The data is append only. These are ledgers with centralized control. This is great for healthcare. There’s a ledger database. There’s a journal. It’s all cryptographically verifiable. Imagine an insert, an update, and another update. Each step is audited. See Amazon Quantum Ledger Database (QLDB).

Use different DBs for different purposes.

She is going to show demos where most of the stuff is setup using a Cloud Formation template.

(She’s using a Windows laptop.)

Apparently, we were all given AWS credits. Remember to shut down and clean up resources at the end of the day.

See: https://github.com/aws-samples/aws-bookstore-demo-app

I asked what to do for developers’ machines when using Aurora. I was very confused by the answer. They mentioned AWS Control Tower and Landing Zone. You can create DB users or federate users. You can have a single or multiple accounts. You can control things… Someone in the crowd said you could just create a single account that everyone shares. You can federate your ActiveDirectory to AWS and then use that user account. You can create DB users…

Aurora supports a minimal version of MySQL 5.7, etc. You have to verify your version of MySQL compared to Aurora. You want to configure things similarly (buffer sizes, etc.).

I’m not sure if it’s okay for developers to simply using MySQL on their machines and Aurora in production.

Later, we’ll talk about schema migration tools. You can connect to your local MySQL. It’ll sample rows in your local DB. It’ll generate a SQL file for what it’ll look like in Aurora. You can determine the compatibility. Aurora is wire compatible. You can use existing drivers. But there are some specific parameters that might be custom, advanced engine stuff. You need to test for that.

There’s an Aurora session tomorrow afternoon.

Lunch & Workshop: Full-Stack Apps in 15 Minutes or Less

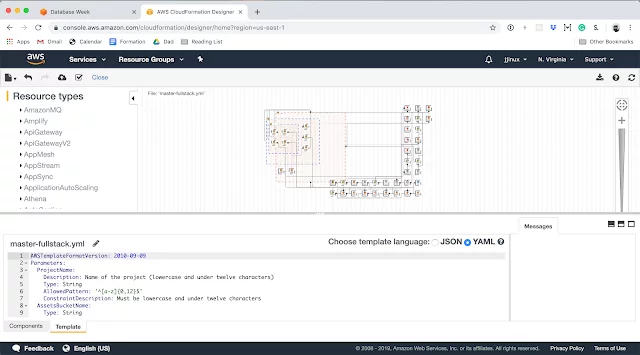

https://github.com/awslabs/aws-full-stack-template

(I really don’t know what 15 minutes or less is supposed to mean. You can’t write this in 15 minutes. You can’t even read the docs and set it up if you’re new in less than 15 minutes.)

Pay attention to which region you launch in.

Pay close attention when naming S3 buckets. Use lowercase. Start with letters and then letters or numbers. Use something like DNS. No underscores. Make it unique. I think it has to be globally unique.

For your project name in CloudFormation, lowercase, letters only, and under ten characters.

The interesting bit is this CloudFormation .yml file. It’s 1100 lines long! There are some interesting things in there. For instance, each individual lambda function has to be configured with which data stores it can access.

It looks like they’re using create-react-app, TypeScript, React Router, React Bootstrap, etc. It’s a client-side app.

AWS Database Migration Service (DMS)

DMS = Database Migration Service. It’s easy and quick. It’s light weight. It’s simple and straightforward.

SCT = Schema Conversion Tool. It’s advanced. It’s useful for data warehouses in Redshift, etc.

You can use them to modernize, migrate, or replicate.

SCT helps covert tables, views, and code.

Here’s what can you migrate from to: https://aws.amazon.com/dms/schema-conversion-tool/

SCT will generate an assessment report.

There are some things that it can’t port; things that are highly engine specific.

There’s a huge guide that talks about all the details of how it handles database features when porting from one type of DB to another.

You can use DMS for 90% of what you need to do.

DMS can even migrate SQL to and from NoSQL.

DMS is a HIPAA certified service.

DMS runs in the cloud. SCT runs as a machine image (I think).

You can even go from cloud to on-prem.

Use DMS + Snowball for large scale usage (over 5TB).

Snowball is a hardened PC in a box. It has a super rugged case. You load it up with your data and then send it back. It can hold up to 72TB. It’s $250. Shipping isn’t included. Note, it’s encrypted, and the keys aren’t on the device.

Ingestion is free. You have to pay for storage and egress.

You can use DMS for replication. You can use it for cross-region read replicas. They even said you can use it to keep your prod/dev in sync.

They’ve migrated over 170,000 databases.

You can migrate from Oracle to RDS for PostgreSQL. (I assume you can use Aurora’s PostgreSQL as well.)

A “snowmobile” is a tractor trailer that is a rugged container which you can use to ship exabytes worth of data.

You can migrate your data using Snowball and then use DMS to replicate recent changes.

It’s easier to go SQL Server to MySQL and Oracle to PostgreSQL.

- Convert or copy your schema.

- Move your data.

You don’t have to bring over all of your data if you don’t want to. You can filter only for the stuff you want.

You can use DMS to go multi-AZ for high availability.

You can map multiple instances to a single target. You can also map from a single source to multiple targets.

S3 is the foundation of most AWS data lake architectures.

Aurora MySQL is ahead of Aurora PostgreSQL.

You can install any legacy or open source DB on EC2. RDS is a managed service for this. They handle patching, the engine, the OS, etc. You define the instance size, etc. Aurora is the next step. He calls it serverless. You provide the data, and they provide the rest.

Workshop: Running SQL Server on Amazon RDS and Migrating to MySQL

Skipped.

Amazon Relational Database Service (RDS)

I missed the Amazon RDS talk. I’m more focused on Aurora anyway since it’s the more advanced, more managed option.

https://github.com/wrbaldwin/db-week

Lunch & Workshop: Getting Started with Amazon RDS

Skipped.

Amazon Aurora

He says that data is the most strategic asset a copy has these days.

They keep saying “‘Lift and shift’ existing apps to the cloud.”

Oracle started charging double the price if you move to the cloud. Hence, people are moving from the “old guard commercial databases” to MySQL, PostgreSQL, and MariaDB.

There’s a lot of tuning required to get scale and performance from a commercial DB.

Aurora is 1/10th the cost of a commercial DB.

He said it has the speed and availability of a commercial DB, but with the simplicity and cost-effectiveness of an open source DB.

It has drop-in compatability with MySQL and PostgreSQL.

It has pay-as-you-go pricing.

It’s a fully-managed service.

Three tenants:

- Scale-out, distributed architecture.

- Service-oriented architecture leveraging AWS.

- Automate admin tasks. It’s a fully-managed service.

They decoupled compute and storage.

It uses a “shared storage pool.”

It’s integrated with Lambda (invoke Lambda events from stored procedures and triggers), S3 (snapshots and backups), IAM, and CloudWatch.

AWS provides automatic failover, backup and recovery, isolation and security, industry compliance, push-button scaling, patching, monitoring, mainenance. You have to take care of schema design, queries, etc.

Compared to open source platforms, it has 5x better performance. It provides better availability and durability.

It’s 5x faster than MySQL (compared to RDS), 3x faster than PostgreSQL (based on SysBench). I asked how it compared to running MySQL on big hardware with local disks. He said that’s not apples to apples. (I think the 5x is kind of BS.)

Compute and storage are completely separate.

They optimize it to do less I/O and minimize network packets.

They take care of connection pooling. You can have up to 5000 connections.

You can have 6 copies across 3 AZs.

They require 4 out of 6 for a write quorum and 3 out of 6 for a read quorum.

They use peer-to-peer replication for repairs.

The volume is striped across hundreds of storage nodes.

You can have up to 15 read replicas. They read from the same shared distributed storage volume.

The undelying storage replays redo records on demand as part of a disk read. This is parallel, distributed, and async. There is no replay for startup. Hence, you get much, much faster recovery from failover. It only takes 10-20s.

He says it’s “cheaper than MySQL” (which is weird to say since MySQL is free). His explanation is that it’s cheaper compared to other managed services (i.e. RDS). It’s cheaper because of the shared storage pool. There’s also no need for an idle, standby instance.

You don’t have to use HA Proxy to fail over to the replica. They make a DNS change for you.

He showed an example cost breakdown:

- He mentioned r5.4XL machines.

- Each replica is $2.32 per hr.

- The instance cost is $6.96 per hr.

- The storage cost is $4.43 per hr.

- The total cost is $11.39 per hr. That’s a 28.7% savings compared to RDS.

In RDS, your standby is separate from your replicas. In Aurora, the replica acts as the standby. You don’t need an idle standby instance.

It’s backed by SSDs.

Amazon.com moved from Oracle to lots of different DBs. Anything needing ACID went to Aurora. Some of their services used the MySQL version. Some used the PostgreSQL version.

(He was using Microsoft Outlook on a Mac.)

He setup autoscaling to automatically add new read replicas. When you autoscale, you have to decide what to autoscale on. He set it up so that if the CPU usage on his replica goes over 20%, he adds another replica. He can also do it based on the number of connections.

There is an “enhanced monitoring” feature. Enhanced monitoring happens at the hypervisor layer. It’s an extra charge, but gives you better granularity.

It can show you threads running, active transactions, innodb_rows_read.avg.

It shows you which query is causing a CPU spike.

We would use binary snapshot ingestion through S3 and catch up via binlog replication.

Alternatively, if you have more complex needs, you can use schema conversion using SCT and data migration via DMS.

This is not NoSQL. It’s ACID compliant.

If you max out your write capacity, you need to beef up your instances. (I’m guessing that it would make sense to move some things out of Aurora into DynamoDB.)

Again, it’s fully managed.

Important: He was saying all reads should go to the read replicas. This is really interesting. You don’t have to worry about replication delay since they read from the same shared storage. There are only microseconds of delay when reading from the read replica instead of the master.

They can apply DB patches with zero downtime most of the time. The only time they can’t do it is if there is never a time where there are no long running transactions.

They have fast DB cloning. Creation of a clone is nearly instantaneous. It’s copy on write. This is great for troubleshooting. You can clone a production DB to run tests. You can have up to 15 clones per copy. However, you can’t clone across AWS regions.

They only support up to 64 TB.

Backtrack is a feature that brings the DB back to a point in time without requiring a restore from backups. It’s not destructive. You can backtrack multiple times to find the right point in time. It’s a rolling 72 hour window. You must enable it when you create the cluster. It has to be done at the database level, not a table level.

It costs $0.012/million change records per hour.

Important: They have support for logical or physical replication cross region for a global DB. Replication is under 1 second and failover is under 1 minute. It’s done at the storage layer, not the compute layer.

Aurora Serverless is for infrequently used applications. You can turn off your DB at night or on the weekends. When it’s shut down, you only pay for storage costs, not compute costs.

1 ACU = 2 gigs of memory.

They can go from 1 ACU to 256 ACUs.

Lots of people move from SQL Server to Aurora. (This time, I think he said the PostgreSQL version, but earlier, I think they said the MySQL version.)

You can use Lambda with Aurora serverless. You would use their API gateway.

There’s an online query editor.

He showed an architecture diagram where each box is an AWS service.

Aurora Serverless isn’t for everyone. It’s useful if you need to turn down your DB for periods of time.

They’re trying to get you out of thinking you need to manage it.

Workshop: Accelerate Database Development and Testing with Amazon Aurora

Skipped.

Matching the Database to the Workload

I missed the first 15 minutes. When I came in, he was talking about RDS. He never talked about Aurora, so I wonder if that was covered in the first 15 minutes.

A lot of this matched the first talk.

DynamoDB is a fast and flexible NoSQL DB service for any scale. You don’t have to worry about servers. Much of amazon.com is built on DynamoDB. It supports event-driven programming (I wonder if this means you can use it to build realtime applications). You can have triggers that execute a lambda function. It’s “fast and consistent”. It’s both a document and a key-value store.

“Local secondary indexes” (LSIs) have to be created when creating the table and cannot be changed.

They have DynamoDB auto scaling that can adopt your throughput to your actual traffic.

Partitions are three-way replicated using 3 DCs within a region.

(DocumentDB is compatible with MongoDB, but it’s home grown.)

Amazon Elasticsearch Service: It’s open source, easy to use, scalable, secure, highly available, and integrated. A document represents entities. The data is in the fields.

DynamoDB and Elasticsearch can work well together. You can have an inverted index for all fields.

Amazon Neptune is a fully-managed graph database. It’s for extremely relational data across a number of tables. It’s great for modelling a social network.

Amazon Redshift is for data warehousing. It provides fast, powerful, and simple data warehousing at 1/10th the cost (compared to what?). It’s massively parallel and provides petabyte scale. It is a columnar data store. It’s an offshoot of PostgreSQL in the backend. It’s for analytical queries. You wouldn’t use it for the online database for a website. It wasn’t built for a bunch of small inserts. You can use different compression algorithms per column. It’s for traditional data warehousing, SaaS analytics, and big data analytics.

Amazon Athena is for interactive analysis. All the data is in S3. It’s built on Hive and Presto. You can even query CSV, wrapping it with a virtual SQL table. It’s compatible with SQL. It’s serverless. Zero infrastructure. Zero administration. It’s for analytical usage or reporting needs.

Quantum Ledger Database (QLDB): It’s a ledger database. It’s pretty new. Once you write to a ledger, you can never make changes to it. Immutable and transparent. Cryptographically verifiable. Highly scalable. Easy to use.

This is different from Amazon Managed Blockchain. Amazon QLDB is owned by a single, trusted authority. With Amazon Managed Blockchain, there is no single owner of the ledger. Instead, you have joint ownership by multiple parties.

He made up the “PIE theorem”: pattern flexibility, infinite scale, efficiency: pick two. He had a couple good info graphics.

He used Kahoot to quiz us in a competitive manner. I came in second. I tried to recruit the guy who came in first ;)

Amazon Crypto no longer exists.

Workshop: Search DynamoDB Data with Amazon Elasticsearch Service

Skipped.

Graph & Amazon Neptune

Skipped.

Workshop: Building Your First Graph Application with Amazon Neptune

Skipped.

Published on Java Code Geeks with permission by Shannon Behrens, partner at our JCG program. See the original article here: Database Week at the AWS Loft Opinions expressed by Java Code Geeks contributors are their own. |