OpenCV Java Object Detection

This article shows how to identify specific objects in real images from Java using the OpenCV DNN (Deep Neural Network) module, which serves as OpenCV's inference engine.

Object detection is now widely used across many fields to identify specific objects in still images and video.

The goal of OpenCV Java Object Detection is to detect real-world objects from different image sources with the DNN module, using both the Mask R-CNN model, trained with TensorFlow, and the YOLO model, implemented in Darknet.

1. OpenCV Java Object Detection Overview

The following figure represents my intended approach to implementing OpenCV Java Object Detection from a programmer’s perspective.

The OpenCV Java Object Detection process works as follows:

- Takes user input for the CNN models and image information.

- Processes object detection based on the provided input.

- Displays the identified objects on the screen.

2. DNN Models For Object Detection

The Mask R-CNN and YOLO models are the essential components of OpenCV Java Object Detection. See the References section for more details.

For YOLO, YOLOv4 remains a strong choice: it is an improved model that can largely reuse YOLOv3 code and is still very effective, even though the latest release is YOLOv7.

Mask R-CNN, on the other hand, is an extension of Faster R-CNN, designed as a deep learning model for both object detection and object segmentation. It performs segmentation by predicting a pixel-level mask for each object, allowing you to obtain not only the bounding box but also the exact shape of the object.

2.1 YOLO Model

YOLO (You Only Look Once) is a single Convolutional Neural Network (CNN) structure:

- Optimizes detection speed by dividing the image into grids.

- Predicts the class (type) and bounding box for each grid cell simultaneously.

- Allows real-time, high-speed detection.

- Achieves approximately 43-50% accuracy (COCO dataset) in object detection.

2.2 Mask R-CNN Model

Based on Faster R-CNN, Mask R-CNN offers higher accuracy than YOLO, but at a lower speed.

- Predicts the bounding box, class (object type), and mask (at the pixel level) for each object.

- Provides more detailed predictions by adopting ROI Align.

- Achieves approximately 60-70% accuracy (COCO dataset) in object detection.

3. Download Pre-trained Model

The OpenCV DNN module is an inference engine in OpenCV that supports various frameworks, including:

- TensorFlow (.pb, .pbtxt)

- Caffe (.prototxt, .caffemodel)

- ONNX (.onnx)

- Darknet (YOLO, .cfg, .weights)

- Torch (.t7)

- OpenVINO Model (.xml, .bin)

To use both YOLO and Mask R-CNN models, the OpenCV DNN module requires downloading pre-trained models for both Darknet and TensorFlow.
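As a rough illustration of the list above, the following sketch maps a model file's extension to the framework it most likely belongs to. The class name `ModelFormat` and the method `frameworkFor` are hypothetical helpers for this article and are not part of OpenCV; the table simply restates the extensions listed above.

```java
import java.util.Locale;
import java.util.Map;

final class ModelFormat {
    // Extension-to-framework table, restating the list in this section.
    private static final Map<String, String> FRAMEWORKS = Map.of(
            "pb", "TensorFlow", "pbtxt", "TensorFlow",
            "prototxt", "Caffe", "caffemodel", "Caffe",
            "onnx", "ONNX",
            "cfg", "Darknet", "weights", "Darknet",
            "t7", "Torch",
            "xml", "OpenVINO", "bin", "OpenVINO");

    /** Returns the framework name for a file name, or "unknown" if the extension is not listed. */
    static String frameworkFor(String fileName) {
        int dot = fileName.lastIndexOf('.');
        if (dot < 0 || dot == fileName.length() - 1) {
            return "unknown"; // no extension at all
        }
        String ext = fileName.substring(dot + 1).toLowerCase(Locale.ROOT);
        return FRAMEWORKS.getOrDefault(ext, "unknown");
    }

    public static void main(String[] args) {
        System.out.println(frameworkFor("yolov4.weights"));
        System.out.println(frameworkFor("model.pb"));
    }
}
```

A check like this could run before loading the network, so that a `.weights` file is never handed to the TensorFlow reader by mistake.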

3.1 Download Pre-trained Model for YOLO

In OpenCV Java Object Detection, the following two files for YOLOv4 must be downloaded:

The yolov4.weights file contains the learned parameters of the Darknet model, while the yolov4.cfg file defines the model’s architecture and training settings. Both files are required as input parameters for OpenCV’s inference engine.

3.2 Download Pre-trained Model for Mask R-CNN

You can use a model in the Frozen Graph (.pb) format, which is compatible with TensorFlow 1.x and can be loaded into the OpenCV DNN module. The latest version of the Mask R-CNN model in this format uses the Inception V2 architecture and was released on January 28, 2018. You can download the model from the following site:

If the download link for the Mask R-CNN frozen model is blocked, copy the link, paste it into a new browser tab, and allow the file to download.

4. Setting Up OpenCV Java Object Detection

Before diving into OpenCV Java Object Detection, we need to set up the development tools and identify the main class to launch.

4.1 Tools for Downloading

The following tools must be downloaded or installed for the development of OpenCV Java Object Detection:

- JDK 17 or higher: The base SDK for OpenCV Java Object Detection.

- IDE: Apache NetBeans or Eclipse can be used. NetBeans includes the Matisse design tool, which makes building GUI applications easier.

- OpenCV: The Computer Vision library for object detection from various image sources.

You can refer to the previous post for instructions on how to set up Apache NetBeans and OpenCV.

4.2 How to Launch the Program

There are several ways to run the OpenCV Java Object Detection program. For example, you can use development tools like JDK with the command line, Eclipse IDE, or any other Java IDE. However, I will explain how to run it using Apache NetBeans IDE, where I wrote and tested the source code. First, you can download and extract the zip file from the download section to any location on your computer.

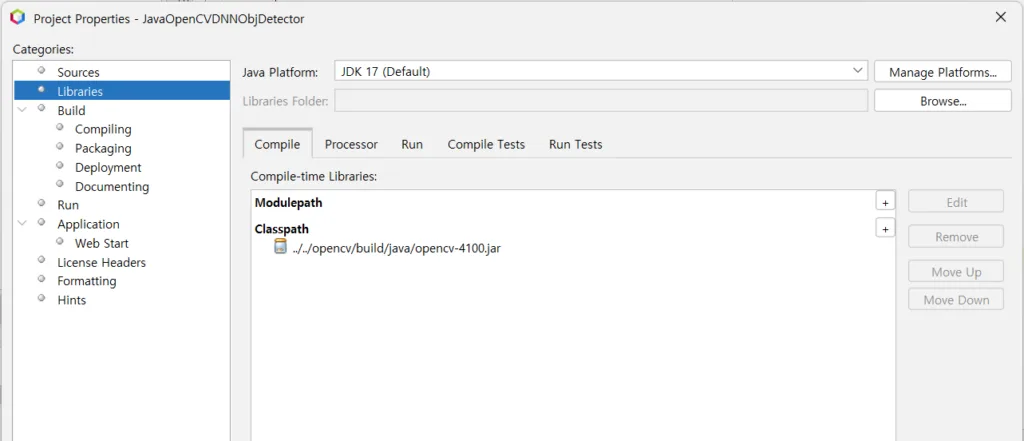

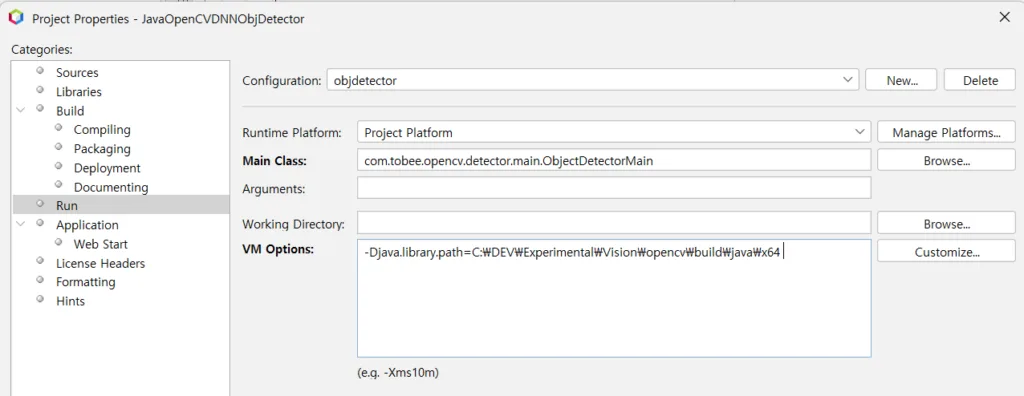

a. Open Properties

Right-click on the project named ‘JavaOpenCVObjDetector’ and select Properties.

b. Add OpenCV Java library

You need to add the OpenCV Java library under the Libraries category.

c. Add Native OpenCV library

In the Run category, the ObjectDetectorMain class is our main class to launch. In addition, you need to set the VM options as follows:

-Djava.library.path=where_to_opencv\opencv\build\java\x64
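If the native library cannot be loaded at startup, it helps to check what `java.library.path` actually contains. The sketch below is a small diagnostic under stated assumptions: the class `NativeLibraryCheck` and the method `findLibrary` are hypothetical, and the `opencv_java` file-name prefix is an assumption based on how the Windows build usually names its DLL (other platforms may add a `lib` prefix).

```java
import java.io.File;

final class NativeLibraryCheck {
    /**
     * Scans every directory on the given library path and returns the first
     * regular file whose name starts with the prefix, or null if none is found.
     */
    static File findLibrary(String libraryPath, String prefix) {
        for (String dir : libraryPath.split(File.pathSeparator)) {
            if (dir.isEmpty()) {
                continue;
            }
            File[] files = new File(dir).listFiles();
            if (files == null) {
                continue; // not a directory, or not readable
            }
            for (File f : files) {
                if (f.isFile() && f.getName().startsWith(prefix)) {
                    return f;
                }
            }
        }
        return null;
    }

    public static void main(String[] args) {
        String path = System.getProperty("java.library.path", "");
        // Assumed prefix of the OpenCV Java native binding on Windows.
        File lib = findLibrary(path, "opencv_java");
        System.out.println(lib == null
                ? "No OpenCV native library found on java.library.path"
                : "Found " + lib.getAbsolutePath());
    }
}
```

Running this class with the same VM options as the project shows quickly whether the path in the `-Djava.library.path` setting points at the right folder.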

5. Implement OpenCV Java Object Detection

OpenCV’s inference engine, the DNN module, supports various deep learning models. Since we have already downloaded the model files for both YOLO and Mask R-CNN, as described in Section 3, ‘Download Pre-trained Model’, we are ready to implement OpenCV Java Object Detection with this module.

5.1 The OpenCV Java Object Detection Process

Before implementing OpenCV Java Object Detection, let's review the steps and the OpenCV methods involved.

a. User Input:

Set up the model, configuration file, and image file from user input.

b. Load CNN Model:

Use different methods depending on the model type:

- readNetFromTensorflow → Load the Mask R-CNN model

- readNetFromDarknet → Load the YOLO model

c. Prepare the Blob for CNN Input:

- blobFromImage: Convert the image into a blob, suitable for CNN input.

- VideoCapture: Extract a frame from an image source (e.g., video).

d. Perform Inference with the Blob:

- setInput: Pass the blob to the network as its input.

- forward: Run the forward pass and collect the results from the output layers.

e. Retrieve the Detection Results:

- Mask R-CNN: Extract Bounding Box and Mask (Segmentation).

- YOLO: Extract Bounding Box Coordinates, Class ID, and Confidence Score.

f. Draw the Detected Objects on the Original Image:

- rectangle: Draw the bounding box.

- putText: Draw the class label and confidence score.

- For Mask R-CNN, render the mask on the image.

g. Save or Display the Output Result Based on User Option:

- imshow: Display the result in a window.

- VideoWriter: Save the result to a video file.
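To make step e more concrete for YOLO, here is a minimal sketch of how one output row can be decoded. It assumes the row layout produced by Darknet YOLOv3/YOLOv4 models in OpenCV: center x, center y, width, height (all normalized to 0..1), an objectness value, then one score per class. The class `YoloRowDecoder` and its nested `Detection` type are hypothetical names for illustration and work on a plain `float[]`, so OpenCV is not needed to run it.

```java
final class YoloRowDecoder {
    /** Decoded result of one row, in pixel coordinates of the original frame. */
    static final class Detection {
        final int classId;
        final float confidence;
        final int left, top, width, height;

        Detection(int classId, float confidence, int left, int top, int width, int height) {
            this.classId = classId;
            this.confidence = confidence;
            this.left = left;
            this.top = top;
            this.width = width;
            this.height = height;
        }
    }

    /** Returns the best-scoring class of the row, or null if it falls below the threshold. */
    static Detection decode(float[] row, int frameWidth, int frameHeight, double threshold) {
        int bestClass = -1;
        float bestScore = 0f;
        for (int i = 5; i < row.length; i++) { // class scores start at index 5
            if (row[i] > bestScore) {
                bestScore = row[i];
                bestClass = i - 5;
            }
        }
        if (bestClass < 0 || bestScore < threshold) {
            return null;
        }
        // Convert the normalized center/size box to a pixel-based left/top box.
        int width = Math.round(row[2] * frameWidth);
        int height = Math.round(row[3] * frameHeight);
        int left = Math.round(row[0] * frameWidth - width / 2f);
        int top = Math.round(row[1] * frameHeight - height / 2f);
        return new Detection(bestClass, bestScore, left, top, width, height);
    }

    public static void main(String[] args) {
        float[] row = {0.5f, 0.5f, 0.25f, 0.5f, 0.9f, 0.1f, 0.75f, 0.2f};
        Detection d = decode(row, 400, 200, 0.5);
        System.out.println(d.classId + " @ (" + d.left + ", " + d.top + ") " + d.width + "x" + d.height);
    }
}
```

In a real detector, each row of every YOLO output `Mat` would be copied into such an array, and the surviving boxes would then go through Non-Maximum Suppression.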

5.2 Configuring Output Layers for Models

Before running detection, we must specify which layers serve as the output layers, that is, the layers whose results we read after the forward pass.

The output layers of the YOLO model can be detected automatically, whereas those of Mask R-CNN cannot be relied on in the same way. Therefore, you need to manually add an output layer named detection_out_final if you choose the Mask R-CNN model.
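The selection logic can be sketched without OpenCV, assuming we already have the list of all layer names and the 1-based indices of the unconnected output layers (the values that `getLayerNames` and `getUnconnectedOutLayers` return in the code of Section 8). The class `OutputLayerNames`, the method `resolve`, and the layer names in `main` are made up for this illustration; unlike the article's code, this sketch compares names exactly rather than by substring.

```java
import java.util.ArrayList;
import java.util.List;

final class OutputLayerNames {
    /**
     * Resolves output layer names from 1-based indices and appends
     * requiredLayer if it was not discovered automatically.
     * Pass null as requiredLayer when nothing has to be added (YOLO).
     */
    static List<String> resolve(List<String> layerNames, int[] unconnectedOutLayers, String requiredLayer) {
        List<String> names = new ArrayList<>();
        for (int index : unconnectedOutLayers) {
            names.add(layerNames.get(index - 1)); // indices are 1-based
        }
        if (requiredLayer != null && !names.contains(requiredLayer)) {
            names.add(requiredLayer);
        }
        return names;
    }

    public static void main(String[] args) {
        // YOLO: the automatically detected layers are enough.
        System.out.println(resolve(List.of("conv_1", "yolo_a", "conv_2", "yolo_b"), new int[]{2, 4}, null));
        // Mask R-CNN: detection_out_final is added by hand when it is missing.
        System.out.println(resolve(List.of("conv_1", "detection_masks"), new int[]{2}, "detection_out_final"));
    }
}
```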

5.2.1 YOLO Model Output Layers

The YOLO model produces its predictions in its unconnected output layers, that is, the layers whose outputs feed no other layer. OpenCV can list these layers automatically, as the getYoloOutputLayer method in Section 8 does, so we can obtain detection outputs even if we do not explicitly name an output layer.

- YOLO predicts objects across multiple output layers, requiring us to select the predictions with the highest confidence scores. To produce the final detection results, we apply the Non-Maximum Suppression (NMS) technique, which eliminates redundant and overlapping bounding boxes.

- The output results can be extracted directly without explicitly defining output layers, because the necessary information is found in these unconnected layers.
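The NMS step mentioned above can be illustrated with a small greedy implementation. This is a sketch of the general technique, not the code used in this project: the class `SimpleNms` and its methods are hypothetical, boxes are plain `{left, top, right, bottom}` arrays, and in practice OpenCV's own `Dnn.NMSBoxes` would normally be used instead.

```java
import java.util.ArrayList;
import java.util.List;

final class SimpleNms {
    /** Intersection over Union of two boxes given as {left, top, right, bottom}. */
    static double iou(double[] a, double[] b) {
        double iw = Math.min(a[2], b[2]) - Math.max(a[0], b[0]);
        double ih = Math.min(a[3], b[3]) - Math.max(a[1], b[1]);
        if (iw <= 0 || ih <= 0) {
            return 0.0; // boxes do not overlap
        }
        double inter = iw * ih;
        double areaA = (a[2] - a[0]) * (a[3] - a[1]);
        double areaB = (b[2] - b[0]) * (b[3] - b[1]);
        return inter / (areaA + areaB - inter);
    }

    /** Returns the indices of the boxes that survive, highest score first. */
    static List<Integer> suppress(List<double[]> boxes, List<Double> scores, double iouThreshold) {
        List<Integer> order = new ArrayList<>();
        for (int i = 0; i < boxes.size(); i++) {
            order.add(i);
        }
        // Sort indices by descending score.
        order.sort((x, y) -> Double.compare(scores.get(y), scores.get(x)));

        List<Integer> kept = new ArrayList<>();
        for (int candidate : order) {
            boolean overlapsKept = false;
            for (int k : kept) {
                if (iou(boxes.get(candidate), boxes.get(k)) > iouThreshold) {
                    overlapsKept = true;
                    break;
                }
            }
            if (!overlapsKept) {
                kept.add(candidate);
            }
        }
        return kept;
    }

    public static void main(String[] args) {
        List<double[]> boxes = List.of(
                new double[]{0, 0, 10, 10},
                new double[]{1, 1, 11, 11},   // overlaps heavily with the first box
                new double[]{20, 20, 30, 30});
        List<Double> scores = List.of(0.9, 0.8, 0.7);
        System.out.println(suppress(boxes, scores, 0.5));
    }
}
```

The second box is dropped because its overlap with the higher-scoring first box exceeds the threshold, while the third box, which overlaps nothing, is kept.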

5.2.2 Output Layers of the Mask R-CNN Model

The Mask R-CNN model performs object detection along with object segmentation.

This model processes two layers simultaneously:

- detection_out_final: Bounding boxes and confidence scores of detected objects.

- detection_masks: Masks for each detected object.

The detection_out_final output layer contains bounding boxes and class information, which identify the locations and types of objects. However, this layer is not considered the output layer for mask predictions. On the other hand, the detection_masks layer is responsible for predicting the masks. This means that mask prediction is crucial for the Mask R-CNN model.

5.2.3 Structure of the detection_out_final Layer

This layer is a 4D array with the shape (1, 1, N, 7). Each axis is described below:

a. Meaning of each dimension:

- First axis (Batch size): Represents the batch size. Mask R-CNN typically processes one image at a time, so the batch size is usually 1.

- Second axis (Number of Channels): Represents the number of channels, which is not used in this context.

- Third axis (Number of Objects): The number of detected objects. Mask R-CNN supports a maximum of 100 objects for detection.

- Fourth axis (Attributes of Objects): Contains information about each detected object, including 7 values:

- detection_out_final[0, 0, i, 0]: Image (batch) ID, which is 0 when a single image is processed

- detection_out_final[0, 0, i, 1]: Class ID

- detection_out_final[0, 0, i, 2]: Confidence score

- detection_out_final[0, 0, i, 3]: Bounding box (x1)

- detection_out_final[0, 0, i, 4]: Bounding box (y1)

- detection_out_final[0, 0, i, 5]: Bounding box (x2)

- detection_out_final[0, 0, i, 6]: Bounding box (y2)

The four bounding box values are normalized to the range 0 to 1, so they must be multiplied by the frame width or height to obtain pixel coordinates.
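As a worked example, the sketch below reads detections from a flat `float[]` holding seven values per object. It assumes the row layout used by OpenCV's detection output (image ID, class ID, confidence score, x1, y1, x2, y2, with normalized coordinates); the class `DetectionRows` and its `Box` type are hypothetical and only stand in for whatever structure the real detector uses.

```java
import java.util.ArrayList;
import java.util.List;

final class DetectionRows {
    static final int VALUES_PER_ROW = 7;

    /** One detection in pixel coordinates of the original frame. */
    static final class Box {
        final int classId;
        final float score;
        final int left, top, right, bottom;

        Box(int classId, float score, int left, int top, int right, int bottom) {
            this.classId = classId;
            this.score = score;
            this.left = left;
            this.top = top;
            this.right = right;
            this.bottom = bottom;
        }
    }

    private static int clamp(int value, int min, int max) {
        return Math.max(min, Math.min(max, value));
    }

    /** Keeps rows whose score reaches the threshold and scales their boxes to the frame size. */
    static List<Box> parse(float[] flat, int frameWidth, int frameHeight, double threshold) {
        List<Box> boxes = new ArrayList<>();
        for (int offset = 0; offset + VALUES_PER_ROW <= flat.length; offset += VALUES_PER_ROW) {
            float score = flat[offset + 2];
            if (score < threshold) {
                continue; // skip low-confidence detections
            }
            int classId = (int) flat[offset + 1];
            int left = clamp(Math.round(flat[offset + 3] * frameWidth), 0, frameWidth - 1);
            int top = clamp(Math.round(flat[offset + 4] * frameHeight), 0, frameHeight - 1);
            int right = clamp(Math.round(flat[offset + 5] * frameWidth), 0, frameWidth - 1);
            int bottom = clamp(Math.round(flat[offset + 6] * frameHeight), 0, frameHeight - 1);
            boxes.add(new Box(classId, score, left, top, right, bottom));
        }
        return boxes;
    }

    public static void main(String[] args) {
        float[] flat = {
            0, 16, 0.9f, 0.25f, 0.5f, 0.75f, 1.0f,  // kept
            0, 3, 0.2f, 0f, 0f, 1f, 1f              // below the threshold
        };
        for (Box b : parse(flat, 200, 100, 0.5)) {
            System.out.println(b.classId + ": " + b.left + "," + b.top + " - " + b.right + "," + b.bottom);
        }
    }
}
```

Clamping keeps every box inside the frame, which matters later when the box is used to cut a region of interest out of the image.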

5.2.4 Structure of the detection_masks Layer

The detection_masks layer is also a 4D array, like the detection_out_final layer. It provides the object masks, which are the distinguishing output of Mask R-CNN. To better understand its function, let’s take a closer look at its structure and dimensions.

a. Background

This layer contains the masks of the objects detected by the Mask R-CNN model. Each mask describes the object within its bounding box at the pixel level. The raw values are per-pixel scores between 0 and 1; after thresholding, a value of 1 indicates the presence of the object, while 0 represents the absence of the object.

b. Structure of the detection_masks Layer

With the OpenCV DNN module, the detection_masks layer has the general shape (N, C, H, W):

- First axis (Number of detected objects):

- One entry per detected object, in the same order as in detection_out_final. The Mask R-CNN model supports a maximum of 100 objects for detection.

- Second axis (Number of classes):

- One mask per class. For each detected object, use the mask at the index of its class ID.

- Third and fourth axes (Size of Mask: H x W):

- These axes represent the size of the mask for each object. The mask covers the bounding box of the object, and its size (H, W) is much smaller than the original image size, so it must be resized to the bounding box before it is drawn.
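The sketch below shows the idea of turning one small mask into a binary mask of the bounding box size. It is a simplified illustration: the class `MaskUtil` and the method `toBinaryMask` are hypothetical, the resize is plain nearest-neighbour on a `float[][]`, and the threshold value is an assumption. A real implementation would more likely call `Imgproc.resize` on the mask `Mat` and then threshold it.

```java
final class MaskUtil {
    /**
     * Resizes a small score mask to boxWidth x boxHeight (nearest neighbour)
     * and marks every pixel whose score exceeds the threshold.
     */
    static boolean[][] toBinaryMask(float[][] mask, int boxWidth, int boxHeight, float threshold) {
        int srcHeight = mask.length;
        int srcWidth = mask[0].length;
        boolean[][] out = new boolean[boxHeight][boxWidth];
        for (int y = 0; y < boxHeight; y++) {
            int srcY = Math.min(srcHeight - 1, y * srcHeight / boxHeight);
            for (int x = 0; x < boxWidth; x++) {
                int srcX = Math.min(srcWidth - 1, x * srcWidth / boxWidth);
                out[y][x] = mask[srcY][srcX] > threshold;
            }
        }
        return out;
    }

    public static void main(String[] args) {
        float[][] mask = {
            {0.9f, 0.1f},
            {0.2f, 0.8f}
        };
        boolean[][] binary = toBinaryMask(mask, 4, 4, 0.3f);
        for (boolean[] row : binary) {
            StringBuilder line = new StringBuilder();
            for (boolean on : row) {
                line.append(on ? '1' : '0');
            }
            System.out.println(line);
        }
    }
}
```

Only the pixels marked as 1 are blended with the mask colour when the object is shaded on the original image.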

5.3 Input Parameters of blobFromImage

The readNetFromDarknet method loads the YOLO model, while the readNetFromTensorflow method loads the Mask R-CNN model; both require a model file and a configuration file.

Once the network is loaded, the input image must be converted into a blob, the 4D array that the CNN takes as input. The blobFromImage function performs this conversion.

Method signature (shown with the parameter names of the Python binding):

```python
blob = cv2.dnn.blobFromImage(image, scalefactor=1/255.0, size=input_size, swapRB=True, crop=False)
```

Let’s look at the typical arguments, including the input size, for the YOLO and Mask R-CNN models.

5.3.1 Arguments of blobFromImage for YOLO Model

- scalefactor=1/255.0: Normalizes pixel values to a range between 0 and 1.

- size=(416, 416): Adjusts the image size for the YOLO model.

- swapRB=true: Converts the image to RGB, as OpenCV uses BGR order by default.

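- crop=false: Resizes the image directly to the target size without cropping. This direct resize does not preserve the aspect ratio, which is why the resizeMatImage method in Section 8 pads the image first.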
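To see what scalefactor and swapRB do to the data, the following sketch applies both to a single 8-bit BGR pixel. It only mimics the arithmetic for illustration: the class `BlobPixel` and the method `preprocess` are hypothetical, and mean subtraction (another blobFromImage parameter) is left out, which corresponds to a mean of zero.

```java
final class BlobPixel {
    /**
     * Scales one 8-bit BGR pixel and optionally swaps the blue and red channels.
     * Returns the three channel values in the order the network receives them.
     */
    static float[] preprocess(int blue, int green, int red, double scaleFactor, boolean swapRB) {
        float b = (float) (blue * scaleFactor);
        float g = (float) (green * scaleFactor);
        float r = (float) (red * scaleFactor);
        return swapRB ? new float[]{r, g, b} : new float[]{b, g, r};
    }

    public static void main(String[] args) {
        // A pure-blue pixel in OpenCV's default BGR order.
        float[] yolo = preprocess(255, 0, 0, 1 / 255.0, true);
        System.out.println(yolo[0] + ", " + yolo[1] + ", " + yolo[2]);
    }
}
```

With scalefactor=1/255.0 every channel ends up between 0 and 1, and with swapRB=true the blue value moves from the first to the last position.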

5.3.2 Arguments of blobFromImage for Mask R-CNN Model

- scalefactor=1.0: No normalization of pixel values (keeps original scale).

- size=(1024, 1024): Adjusts the image size. Common sizes are 800×800 or 1024×1024.

- swapRB=true: Converts the image to RGB, as OpenCV uses BGR order by default.

- crop=false: Resizes the image directly to the target size without cropping (the aspect ratio is not preserved by this resize).

5.4 YOLO Model Size Parameters Explained

The size parameter of the YOLO model defines the input dimensions of the Deep Neural Network (DNN), typically represented as (width, height). As a result, you must resize your images to match the model’s expected input size, which was defined during the training of the Convolutional Neural Network (CNN).

For example:

- YOLOv4 → (416, 416)

- SSD MobileNet → (300, 300)

- ResNet → (224, 224)

Using the correct input size is crucial for achieving accurate results. Furthermore, in YOLO models, the input size is typically fixed and determined by the model’s architecture.

| Model | Input Size (size) |
|---|---|
| YOLOv3 | 416×416 |
| YOLOv4 | 416×416 or 608×608 |
| YOLOv5 | 640×640 |
| YOLOv6 | 640×640 |
| YOLOv7 | 640×640 |
| YOLOv8 | 640×640 |

- The default size for YOLOv3 and YOLOv4 is 416×416, while a size of 608×608 is also available for YOLOv4.

- Starting from YOLOv5, the default size is 640×640.

5.5 Mask R-CNN Model Size Parameters Explained

- Mask R-CNN with the COCO Dataset:

- Mask R-CNN supports various input sizes, but sizes of (800, 800) or (1024, 1024) are commonly used. The exact input size is specified by the Mask R-CNN model type.

- For certain models, 1024×1024 is used as the input size.

- Research has shown that using a 1024×1024 image can lead to higher accuracy.

6. Class Design for OpenCV Java Object Detection

Now that we understand how both the YOLO and Mask R-CNN models work with the OpenCV DNN module, we can move on to the classes involved in our OpenCV Java Object Detection. I use six classes for the implementation.

6.1 Class Diagram and Associations

Here is my concept of the classes for OpenCV Java Object Detection.

The responsibilities of each class are described as follows:

- YoloDetector: The implementation class for object detection using the YOLO model. It inherits from ObjectDetector.

- DNNOption: The user input class that accepts files and options for detection.

- ObjectDetectorMain: The main class that contains the entry point the user must run.

- IObjectDetector: The interface that defines the detectObject method, which subclasses must implement.

- ObjectDetector: The parent class of both YOLO and Mask R-CNN implementations, which has an abstract detectObject method.

- MaskRCNNDetector: The implementation class for object detection using the Mask R-CNN model. It inherits from ObjectDetector.

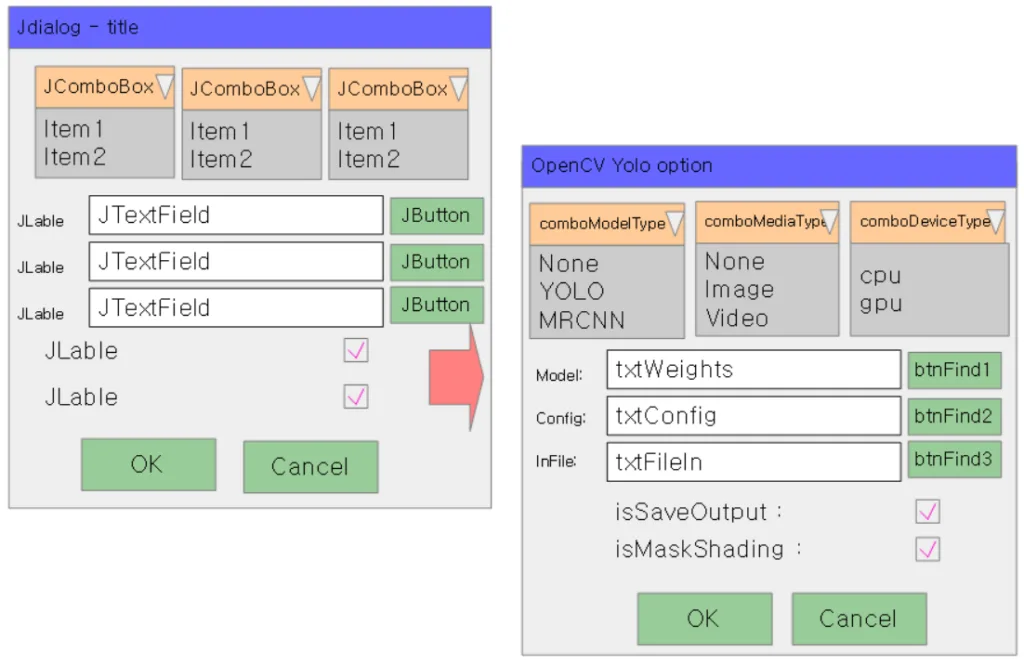
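The relationships in the diagram can be reduced to a few lines of Java. The sketch below uses the same type names as the article but is only a stripped-down stand-in: the constructors, the placeholder method bodies, the `DetectorFactory` helper, and the string values "YOLO" and "MRCNN" are assumptions made for illustration, not the project's actual code.

```java
interface IObjectDetector {
    void detectObject();
}

abstract class ObjectDetector implements IObjectDetector {
    protected final String modelName;

    protected ObjectDetector(String modelName) {
        this.modelName = modelName;
    }
    // detectObject() is deliberately left abstract for the subclasses.
}

final class YoloDetector extends ObjectDetector {
    YoloDetector() {
        super("YOLO");
    }

    @Override
    public void detectObject() {
        System.out.println(modelName + ": placeholder for YOLO detection");
    }
}

final class MaskRCNNDetector extends ObjectDetector {
    MaskRCNNDetector() {
        super("MRCNN");
    }

    @Override
    public void detectObject() {
        System.out.println(modelName + ": placeholder for Mask R-CNN detection");
    }
}

final class DetectorFactory {
    /** Returns the detector for a model name, or null if the name is unknown. */
    static IObjectDetector create(String modelName) {
        if (modelName == null) {
            return null;
        }
        switch (modelName) {
            case "YOLO":
                return new YoloDetector();
            case "MRCNN":
                return new MaskRCNNDetector();
            default:
                return null;
        }
    }

    public static void main(String[] args) {
        IObjectDetector detector = create("YOLO");
        if (detector != null) {
            detector.detectObject();
        }
    }
}
```

Because the caller only sees IObjectDetector, a further model could be added later by writing one more subclass and one more case in the factory.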

7. User Input UI Layout

Using the OpenCV DNN module requires several parameters, which vary depending on the selected model for the OpenCV Java Object Detection implementation. Initially, I considered using a command-line parser to gather input from the user, but this would make the program more complicated. Therefore, I decided to create a simple window for user input. Afterward, we will focus on the methods provided by OpenCV.

Next, we will discuss the components and their associated variables, along with a description table.

| Swing Components | Variable Name | Value | Note |
|---|---|---|---|
| JComboBox | comboModelType | ‘MRCNN’ or ‘YOLO’ | Select the Mask R-CNN model or the YOLO model. |
| JComboBox | comboMediaType | ‘Image’ or ‘Video’ | Select Image or Video. |
| JComboBox | comboDeviceType | ‘cpu’ or ‘gpu’ | Select CPU or GPU. |
| JTextField | txtModel | Path to the .weights file (YOLO) or the .pb file (Mask R-CNN) | The file extension filter follows the comboModelType selection. |
| JTextField | txtConfig | Config file path | Configuration file for the YOLO or Mask R-CNN model. |
| JTextField | txtFileIn | Image or video file path | File path of the image or video file, depending on the media type. |
| JTextField | txtThreshold | Confidence score | Skip objects if the confidence score is below the threshold. Keep objects if the score is above it. The default threshold is 0.5. |
| JCheckBox | isSaveOutput | true or false | Save images or videos with detected objects highlighted. |
| JCheckBox | isMaskShading | true or false | Shade object masks; available only if the Mask R-CNN model is selected. |

7.1 Implementation of User Input Layout

You can save the parameters from the user input GUI to the DNNOption class for later use. In this case, the role of this class is to store the user input.

This is the GUI implementation:

setUpOptions: The main method. It is based on the showConfirmDialog method of JOptionPane and uses a JPanel for user input.

```java
int result = JOptionPane.showConfirmDialog(null, panel, WinName, JOptionPane.OK_CANCEL_OPTION, JOptionPane.PLAIN_MESSAGE);
```

The JPanel uses GridBagLayout for user input. All information entered by the user can be saved when the “OK” button is clicked, as shown below:

```java
if (result == JOptionPane.OK_OPTION) {
    mediaType = comboMediaType.getSelectedItem().toString().trim();
    deviceType = comboDeviceType.getSelectedItem().toString().trim();
    modelPath = txtModel.getText();
    modelConfiguration = txtConfig.getText();
    mediaFile = txtFileIn.getText();
    isSaveOutput = chkSaveVdo.isSelected();
    threshold = Double.parseDouble(txtThreshold.getText());
    return this;
} else {
    //System.out.println("Cancelled");
    return null;
}
```
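One detail worth noting in the snippet above is that `Double.parseDouble` throws a `NumberFormatException` when the threshold field contains text that is not a number. A possible defensive variant is sketched below; the class `ThresholdParser` is hypothetical, and falling back to 0.5 and clamping to the range 0 to 1 are design assumptions based on the default threshold mentioned in the table.

```java
final class ThresholdParser {
    static final double DEFAULT_THRESHOLD = 0.5;

    /** Parses the text as a confidence threshold, falling back to the default on bad input. */
    static double parse(String text) {
        if (text == null) {
            return DEFAULT_THRESHOLD;
        }
        try {
            double value = Double.parseDouble(text.trim());
            if (Double.isNaN(value)) {
                return DEFAULT_THRESHOLD;
            }
            // A confidence score only makes sense between 0 and 1.
            return Math.max(0.0, Math.min(1.0, value));
        } catch (NumberFormatException e) {
            return DEFAULT_THRESHOLD;
        }
    }

    public static void main(String[] args) {
        System.out.println(parse("0.7"));
        System.out.println(parse("not a number"));
    }
}
```

With such a helper, the assignment could read `threshold = ThresholdParser.parse(txtThreshold.getText());`, so a typo in the dialog no longer aborts the program.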

Next, here is the full source code of the setUpOptions method.

```java
// Imports needed by this method (placed at the top of DNNOption.java).
import java.awt.BorderLayout;
import java.awt.Dimension;
import java.awt.FlowLayout;
import java.awt.GridBagConstraints;
import java.awt.GridBagLayout;
import java.awt.Insets;
import java.awt.event.ActionEvent;
import java.io.File;
import javax.swing.*;
import javax.swing.filechooser.FileNameExtensionFilter;

public DNNOption setUpOptions() {
    // Create main panel
    JPanel panel = new JPanel();
    panel.setPreferredSize(new Dimension(400, 350));
    panel.setLayout(new GridBagLayout());
    GridBagConstraints gbc = new GridBagConstraints();
    gbc.insets = new Insets(5, 5, 5, 5);
    gbc.fill = GridBagConstraints.HORIZONTAL;
    gbc.gridx = 0;
    gbc.gridy = 0;

    // Create a JComboBox group: model, media type, device
    JPanel comboBoxGroup = new JPanel(new FlowLayout(FlowLayout.LEFT, 5, 0));
    JComboBox<String> comboModelName = new JComboBox<>(new String[]{
        ObjectDetector.TYPE_NONE, ObjectDetector.MODEL_YOLO, ObjectDetector.MODEL_MASK_RCNN});
    JComboBox<String> comboMediaType = new JComboBox<>(new String[]{
        ObjectDetector.TYPE_NONE, ObjectDetector.TYPE_IMAGE, ObjectDetector.TYPE_VIDEO});
    JComboBox<String> comboDeviceType = new JComboBox<>(new String[]{
        ObjectDetector.TYPE_CPU, ObjectDetector.TYPE_GPU});

    comboBoxGroup.add(comboModelName);
    comboBoxGroup.add(comboMediaType);
    comboBoxGroup.add(comboDeviceType);
    comboModelName.setPreferredSize(new Dimension(100, 30));
    comboMediaType.setPreferredSize(new Dimension(100, 30));
    comboDeviceType.setPreferredSize(new Dimension(100, 30));
    panel.add(comboBoxGroup, gbc);
    comboBoxGroup.setPreferredSize(new Dimension(320, 40));

    // Create JTextField + JButton group: model file
    gbc.gridy++;
    JPanel group1 = new JPanel(new FlowLayout(FlowLayout.LEFT, 5, 0));
    JPanel ingroup1 = new JPanel(new BorderLayout());
    JLabel lblModel = new JLabel("Model: ");
    JTextField txtModel = new JTextField(10);
    JButton btnFind1 = new JButton("Find");
    txtModel.setPreferredSize(new Dimension(200, 30));
    btnFind1.setPreferredSize(new Dimension(60, 30));
    ingroup1.add(lblModel, BorderLayout.WEST);
    ingroup1.add(txtModel, BorderLayout.CENTER);
    ingroup1.add(btnFind1, BorderLayout.EAST);
    ingroup1.setPreferredSize(new Dimension(310, 30));
    group1.setPreferredSize(new Dimension(350, 40));
    group1.add(ingroup1);
    panel.add(group1, gbc);

    // Create JTextField + JButton group: configuration file
    gbc.gridy++;
    JPanel group2 = new JPanel(new FlowLayout(FlowLayout.LEFT, 5, 0));
    JPanel ingroup2 = new JPanel(new BorderLayout());
    group2.setPreferredSize(new Dimension(100, 100));
    JLabel lblConfig = new JLabel("Config: ");
    JTextField txtConfig = new JTextField(10);
    JButton btnFind2 = new JButton("Find");
    txtConfig.setPreferredSize(new Dimension(200, 30));
    btnFind2.setPreferredSize(new Dimension(60, 30));
    ingroup2.add(lblConfig, BorderLayout.WEST);
    ingroup2.add(txtConfig, BorderLayout.CENTER);
    ingroup2.add(btnFind2, BorderLayout.EAST);
    ingroup2.setPreferredSize(new Dimension(310, 30));
    group2.setPreferredSize(new Dimension(350, 40));
    group2.add(ingroup2);
    panel.add(group2, gbc);

    // Create JTextField + JButton group: input image or video file
    gbc.gridy++;
    JPanel group3 = new JPanel(new FlowLayout(FlowLayout.LEFT, 5, 0));
    JPanel ingroup3 = new JPanel(new BorderLayout());
    group3.setPreferredSize(new Dimension(100, 100));
    JLabel lblFileIn = new JLabel("InFile: ");
    JTextField txtFileIn = new JTextField(10);
    JButton btnFind3 = new JButton("Find");
    txtFileIn.setPreferredSize(new Dimension(200, 30));
    btnFind3.setPreferredSize(new Dimension(60, 30));
    ingroup3.add(lblFileIn, BorderLayout.WEST);
    ingroup3.add(txtFileIn, BorderLayout.CENTER);
    ingroup3.add(btnFind3, BorderLayout.EAST);
    ingroup3.setPreferredSize(new Dimension(310, 30));
    group3.setPreferredSize(new Dimension(350, 40));
    group3.add(ingroup3);
    panel.add(group3, gbc);

    // Create JLabel + JTextField group: confidence threshold
    gbc.gridy++;
    JPanel group4 = new JPanel(new FlowLayout(FlowLayout.LEFT, 5, 0));
    JPanel ingroup4 = new JPanel(new BorderLayout());
    group4.setPreferredSize(new Dimension(100, 100));
    JLabel lblThreshold = new JLabel("Threshold: ");
    JTextField txtThreshold = new JTextField();
    txtThreshold.setHorizontalAlignment(JTextField.RIGHT);
    txtThreshold.setText("0.5");
    ingroup4.add(lblThreshold, BorderLayout.WEST);
    ingroup4.add(txtThreshold, BorderLayout.CENTER);
    ingroup4.setPreferredSize(new Dimension(310, 30));
    group4.setPreferredSize(new Dimension(350, 40));
    group4.add(ingroup4);
    panel.add(group4, gbc);

    // Create JLabel + JCheckBox group: save output
    gbc.gridy++;
    JPanel group5 = new JPanel(new FlowLayout(FlowLayout.LEFT, 5, 0));
    JPanel ingroup5 = new JPanel(new BorderLayout());
    JLabel lblChkSaveVdo = new JLabel("Save Output: ");
    JCheckBox chkSaveVdo = new JCheckBox();
    ingroup5.add(lblChkSaveVdo, BorderLayout.CENTER);
    ingroup5.add(chkSaveVdo, BorderLayout.EAST);
    ingroup5.setPreferredSize(new Dimension(310, 30));
    group5.setPreferredSize(new Dimension(350, 40));
    group5.add(ingroup5);
    panel.add(group5, gbc);

    // Create JLabel + JCheckBox group: mask shading (Mask R-CNN only)
    gbc.gridy++;
    JPanel group6 = new JPanel(new FlowLayout(FlowLayout.LEFT, 5, 0));
    JPanel ingroup6 = new JPanel(new BorderLayout());
    //ingroup6.setPreferredSize(new Dimension(100,100));
    JLabel lblChkMaskShade = new JLabel("Shading Mask: ");
    JCheckBox chkhkMaskShade = new JCheckBox();
    chkhkMaskShade.setEnabled(false);
    ingroup6.add(lblChkMaskShade, BorderLayout.CENTER);
    ingroup6.add(chkhkMaskShade, BorderLayout.EAST);
    ingroup6.setPreferredSize(new Dimension(310, 30));
    group6.setPreferredSize(new Dimension(350, 40));
    group6.add(ingroup6);
    panel.add(group6, gbc);

    // The mask shading check box is only enabled for the Mask R-CNN model.
    comboModelName.addActionListener((ActionEvent e) -> {
        modelName = (String) comboModelName.getSelectedItem();
        if (modelName.equals(ObjectDetector.MODEL_MASK_RCNN)) {
            chkhkMaskShade.setEnabled(true);
        } else {
            chkhkMaskShade.setEnabled(false);
        }
    });

    comboMediaType.addActionListener((ActionEvent e) -> {
        mediaType = (String) comboMediaType.getSelectedItem();
    });

    comboDeviceType.addActionListener((ActionEvent e) -> {
        deviceType = (String) comboDeviceType.getSelectedItem();
    });

    // File chooser for the model file (.weights or .pb)
    btnFind1.addActionListener((ActionEvent e) -> {
        JFileChooser fileChooser = new JFileChooser();
        fileChooser.setCurrentDirectory(new File("C:\\"));
        String model = comboModelName.getSelectedItem().toString().trim();

        if (MODEL_YOLO.equals(model)) {
            FileNameExtensionFilter filter = new FileNameExtensionFilter(
                    "YOLO File (.weights)", "weights");
            fileChooser.setFileFilter(filter);
        } else if (MODEL_MASK_RCNN.equals(model)) {
            FileNameExtensionFilter filter = new FileNameExtensionFilter(
                    "MRCNN File (.pb)", "pb");
            fileChooser.setFileFilter(filter);
        }

        int returnValue = fileChooser.showOpenDialog(null);
        if (returnValue == JFileChooser.APPROVE_OPTION) {
            File selectedFile = fileChooser.getSelectedFile();
            txtModel.setText(selectedFile.getAbsolutePath());
        }
    });

    // File chooser for the configuration file (.cfg or .pbtxt)
    btnFind2.addActionListener((ActionEvent e) -> {
        JFileChooser fileChooser = new JFileChooser();
        fileChooser.setCurrentDirectory(new File("C:\\"));
        String model = comboModelName.getSelectedItem().toString().trim();

        if (MODEL_YOLO.equals(model)) {
            FileNameExtensionFilter filter = new FileNameExtensionFilter(
                    "YOLO Config (.cfg)", "cfg");
            fileChooser.setFileFilter(filter);
        } else if (MODEL_MASK_RCNN.equals(model)) {
            // Extensions are given without the leading dot; ".pbtxt" would never match.
            FileNameExtensionFilter filter = new FileNameExtensionFilter(
                    "MRCNN Config (.pbtxt)", "pbtxt");
            fileChooser.setFileFilter(filter);
        }

        int returnValue = fileChooser.showOpenDialog(null);
        if (returnValue == JFileChooser.APPROVE_OPTION) {
            File selectedFile = fileChooser.getSelectedFile();
            txtConfig.setText(selectedFile.getAbsolutePath());
        }
    });

    // File chooser for the input image or video
    btnFind3.addActionListener((ActionEvent e) -> {
        JFileChooser fileChooser = new JFileChooser();
        fileChooser.setCurrentDirectory(new File("C:\\")); // Default directory
        String mType = comboMediaType.getSelectedItem().toString().trim();

        if (TYPE_IMAGE.equals(mType)) {
            FileNameExtensionFilter filter = new FileNameExtensionFilter(
                    "Image Files (JPG, PNG)", "jpg", "jpeg", "png");
            fileChooser.setFileFilter(filter);
        } else if (TYPE_VIDEO.equals(mType)) {
            //chkSaveVdo.setEnabled(true);
            FileNameExtensionFilter filter = new FileNameExtensionFilter(
                    "Video Files (MP4)", "mp4");
            fileChooser.setFileFilter(filter);
        }

        int returnValue = fileChooser.showOpenDialog(null);
        if (returnValue == JFileChooser.APPROVE_OPTION) {
            File selectedFile = fileChooser.getSelectedFile();
            txtFileIn.setText(selectedFile.getAbsolutePath());
        }
    });

    // OK / CANCEL button dialog
    int result = JOptionPane.showConfirmDialog(null, panel, WinName,
            JOptionPane.OK_CANCEL_OPTION, JOptionPane.PLAIN_MESSAGE);

    if (result == JOptionPane.OK_OPTION) {
        mediaType = comboMediaType.getSelectedItem().toString().trim();
        deviceType = comboDeviceType.getSelectedItem().toString().trim();
        modelPath = txtModel.getText();
        modelConfiguration = txtConfig.getText();
        mediaFile = txtFileIn.getText();
        threshold = Double.parseDouble(txtThreshold.getText());
        isSaveOutput = chkSaveVdo.isSelected();
        isMaskRendering = chkhkMaskShade.isSelected();
        return this;
    } else {
        return null;
    }
}
```

7.2 OpenCV Java Object Detection Main Method

The ObjectDetectorMain class contains the main method for OpenCV Java Object Detection. Specifically, this class uses the DNNOption class to store the user input parameters.

Here is the source code:

```java
public static void main(String[] args) {
    DNNOption dnnOption = new DNNOption();
    IObjectDetector detector = null;

    if (dnnOption.setUpOptions() != null && dnnOption.isValid()) {
        if (dnnOption.modelName.equals(ObjectDetector.MODEL_MASK_RCNN)) {
            detector = new MaskRCNNDetector(dnnOption);
        } else if (dnnOption.modelName.equals(ObjectDetector.MODEL_YOLO)) {
            detector = new YoloDetector(dnnOption);
        }

        if (detector != null) {
            detector.detectObject();
        }
    } else {
        JOptionPane.showMessageDialog(null,
                "Please retry after checking your options.",
                "Insufficient option", JOptionPane.WARNING_MESSAGE);
    }
}
```

It calls the setUpOptions method for user input and performs a validation check on the options.

```java
if (dnnOption.setUpOptions() != null && dnnOption.isValid())
```
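The isValid method itself is not listed in this article. As a rough idea of what such a check could cover, here is a hypothetical sketch: the class `OptionValidator`, the list of rules, and the model name strings "YOLO" and "MRCNN" are all assumptions, and the real DNNOption.isValid may work differently.

```java
import java.io.File;
import java.util.ArrayList;
import java.util.List;

final class OptionValidator {
    /** Returns a list of human-readable problems; an empty list means the options look usable. */
    static List<String> validate(String modelName, String modelPath, String configPath,
                                 String mediaFile, double threshold) {
        List<String> problems = new ArrayList<>();
        if (!"YOLO".equals(modelName) && !"MRCNN".equals(modelName)) {
            problems.add("Unknown model: " + modelName);
        }
        checkFile(problems, "Model file", modelPath);
        checkFile(problems, "Config file", configPath);
        checkFile(problems, "Input file", mediaFile);
        if (threshold < 0.0 || threshold > 1.0) {
            problems.add("Threshold must be between 0 and 1: " + threshold);
        }
        return problems;
    }

    private static void checkFile(List<String> problems, String label, String path) {
        if (path == null || path.trim().isEmpty() || !new File(path).isFile()) {
            problems.add(label + " is missing or not a file: " + path);
        }
    }

    public static void main(String[] args) {
        for (String problem : validate("NONE", "", "", "", 2.0)) {
            System.out.println(problem);
        }
    }
}
```

Returning the individual problems rather than a single boolean would also allow the warning dialog to tell the user exactly which option needs attention.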

The MaskRCNNDetector class is instantiated if the model selected by the user is Mask R-CNN, while the YoloDetector class is instantiated if the user selects the YOLO model. In either case, each class must implement the detectObject method inherited from the IObjectDetector interface.

Based on the modelName selected by the user, the appropriate class is instantiated.

```java
if (dnnOption.modelName.equals(ObjectDetector.MODEL_MASK_RCNN)) {
    detector = new MaskRCNNDetector(dnnOption);
} else if (dnnOption.modelName.equals(ObjectDetector.MODEL_YOLO)) {
    detector = new YoloDetector(dnnOption);
}
```

Once the class instantiation is complete, the detectObject method of the implementation class is called.

```java
if (detector != null) {
    detector.detectObject();
}
```

8. ObjectDetector: The Parent Class

The ObjectDetector class is an abstract class that serves as the parent class for both the YOLO and Mask R-CNN detectors. It implements the IObjectDetector interface but leaves the detectObject method abstract, so each subclass must provide its own implementation.

Below is the full source code of the ObjectDetector class:

```java
package com.tobee.opencv.detector;

import com.tobee.opencv.detector.intrfce.IObjectDetector;
import com.tobee.opencv.detector.input.DNNOption;
import conf.CocoLabel;
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import org.opencv.core.Core;
import org.opencv.core.Mat;
import org.opencv.core.MatOfInt;
import org.opencv.core.Rect;
import org.opencv.core.Scalar;
import org.opencv.core.Size;
import org.opencv.dnn.Dnn;
import org.opencv.dnn.Net;
import org.opencv.imgproc.Imgproc;

/**
 *
 * @author Tobee
 */
public abstract class ObjectDetector implements IObjectDetector {
    protected String winName;
    protected String modelName;
    protected String mediaType;
    protected String deviceType;
    protected String mediaFile;
    protected String modelPath;
    protected String modelConfiguration;
    protected boolean isSaveOuput;
    public boolean isMaskRendering;
    protected double threshold;

    private final DNNOption dnnOption;

    static {
        // Load the native OpenCV library once for all detectors.
        System.loadLibrary(Core.NATIVE_LIBRARY_NAME);
    }

    protected ObjectDetector(final DNNOption dnnOption) {
        this.dnnOption = dnnOption;
        setupOption();
        setupLabelSet();
    }

    private void setupLabelSet() {
        if (modelName.equals(MODEL_MASK_RCNN)) {
            CocoLabel.setupCocoLabelSet(MASK_RCNN_LABEL_FILE);
        } else if (modelName.equals(MODEL_YOLO)) {
            CocoLabel.setupCocoLabelSet(YOLO_LABEL_FILE);
        }
    }

    // Copy the user input from DNNOption into this detector.
    private void setupOption() {
        winName = dnnOption.WinName.replace("${Model}", dnnOption.modelName);
        modelName = dnnOption.modelName;
        mediaType = dnnOption.mediaType;
        deviceType = dnnOption.deviceType;
        mediaFile = dnnOption.mediaFile;
        modelPath = dnnOption.modelPath;
        modelConfiguration = dnnOption.modelConfiguration;
        isSaveOuput = dnnOption.isSaveOutput;
        isMaskRendering = dnnOption.isMaskRendering;
        threshold = dnnOption.threshold;
    }

    // Load the network for the selected model and choose the CPU or CUDA target.
    protected Net readDNNetByModelName() {
        Net dnnNet = null;

        if (modelName.equals(MODEL_YOLO)) {
            dnnNet = Dnn.readNetFromDarknet(modelConfiguration, modelPath);
        } else if (modelName.equals(MODEL_MASK_RCNN)) {
            dnnNet = Dnn.readNetFromTensorflow(modelPath, modelConfiguration);
        }

        if (dnnNet == null) return null;

        switch (deviceType) {
            case "cpu" -> {
                dnnNet.setPreferableTarget(Dnn.DNN_TARGET_CPU);
            }
            case "gpu" -> {
                dnnNet.setPreferableBackend(Dnn.DNN_BACKEND_CUDA);
                dnnNet.setPreferableTarget(Dnn.DNN_TARGET_CUDA);
            }
        }

        return dnnNet;
    }

    // NOTE: the generic type arguments of the maps below were lost in the published
    // listing; Map<String, Mat> and Map<String, Object> are assumed here.
    protected Map<String, Mat> getMapOutputLayer(final Net net) {
        if (modelName.equals(MODEL_YOLO)) {
            return getYoloOutputLayer(net);
        } else if (modelName.equals(MODEL_MASK_RCNN)) {
            return getMaskRCnnOutputLayer(net);
        }

        return null;
    }

    protected Map<String, Mat> getMaskRCnnOutputLayer(final Net net) {
        Map<String, Mat> retMap = new HashMap<>();

        // Auto detection of the output layers.
        List<String> layerNames = net.getLayerNames();
        MatOfInt outLayers = net.getUnconnectedOutLayers();
        int[] outLayersArray = outLayers.toArray();

        List<String> outputLayerNames = new ArrayList<>();
        for (int i : outLayersArray) {
            retMap.put(layerNames.get(i - 1), null);
            outputLayerNames.add(layerNames.get(i - 1)); // OpenCV uses 1-based index
        }

        // Generally, both the `detection_out_final` and `detection_masks` layers are used by Mask R-CNN.
        boolean foundDetectionOutFinal = false;
        for (String layer : outputLayerNames) {
            if (layer.contains("detection_out_final")) {
                foundDetectionOutFinal = true;
                break;
            }
        }

        // Add the `detection_out_final` layer if it was not detected automatically.
        if (!foundDetectionOutFinal) {
            outputLayerNames.add("detection_out_final");
            retMap.put("detection_out_final", null);
        }

        return retMap;
    }

    protected Map<String, Mat> getYoloOutputLayer(final Net net) {
        Map<String, Mat> retMap = new HashMap<>();

        // Retrieve all layer names in the Net.
        List<String> layerNames = net.getLayerNames();

        // Get indexes for output layers.
        MatOfInt outLayers = net.getUnconnectedOutLayers();
        int[] outLayersArray = outLayers.toArray();

        // Auto detection of the output layers; add each name to the map.
        for (int i : outLayersArray) {
            retMap.put(layerNames.get(i - 1), null);
        }

        return retMap;
    }

    /**
     * Resizes the image to fit targetSize while keeping the aspect ratio,
     * then pads the remaining area with black borders.
     *
     * @param orgMat the original image
     * @param targetSize the network input size
     * @return java.util.Map with the keys "mat", "padding" and "scale"
     */
    protected Map<String, Object> resizeMatImage(Mat orgMat, Size targetSize) {
        Map<String, Object> retMap = new HashMap<>();
        Rect padding = new Rect();
        int targetWidth = (int) targetSize.width;
        int targetHeight = (int) targetSize.height;
        int originalWidth = orgMat.cols();
        int originalHeight = orgMat.rows();

        // Minimum scale against the original ratio.
        float scaleX = (float) targetWidth / originalWidth;
        float scaleY = (float) targetHeight / originalHeight;
        float minScale = Math.min(scaleX, scaleY);

        int newWidth = (int) (originalWidth * minScale);
        int newHeight = (int) (originalHeight * minScale);

        // Padding value calculation.
        int left = (targetWidth - newWidth) / 2;
        int top = (targetHeight - newHeight) / 2;
        int right = targetWidth - newWidth - left;
        int bottom = targetHeight - newHeight - top;

        // Resizing and padding adjustment.
        Mat resizedMat = new Mat();
        Imgproc.resize(orgMat, resizedMat, new Size(newWidth, newHeight));

        Mat paddedMat = new Mat();
        Core.copyMakeBorder(resizedMat, paddedMat, top, bottom, left, right,
                Core.BORDER_CONSTANT, new Scalar(0, 0, 0));

        // The padding information. Note that width and height hold the
        // right and bottom padding, not a rectangle size.
        padding.x = left;
        padding.y = top;
        padding.width = right;
        padding.height = bottom;

        retMap.put("mat", paddedMat);
        retMap.put("padding", padding);
        retMap.put("scale", minScale); // The scale information.

        return retMap;
    }

    public static Mat getMrcnnROI(Mat frame, DetectedBBox mybbox) {
        int frameHeight = frame.rows();
        int frameWidth = frame.cols();

        Rect bbox = new Rect(mybbox.left, mybbox.top,
                mybbox.right - mybbox.left + 1, mybbox.bottom - mybbox.top + 1);

        // Adjust coordinates to ensure the ROI stays within the frame.
        int top = Math.max(0, Math.min(bbox.y, frameHeight - 1));
        int bottom = Math.max(0, Math.min(bbox.y + bbox.height, frameHeight));
        int left = Math.max(0, Math.min(bbox.x, frameWidth - 1));
        int right = Math.max(0, Math.min(bbox.x + bbox.width, frameWidth));

        // Validate the ROI, ensuring the width and height are greater than 0.
        if (top >= bottom || left >= right) {
            System.err.println("Invalid ROI!");
            return new Mat(); // return an empty Mat.
        }

        // Return the safe sub-matrix (rowStart, rowEnd, colStart, colEnd).
        return frame.submat(top, bottom, left, right);
    }

    protected static class DetectedBBox {
        protected final double confidenceValue;
        protected final int classId;
        protected final int left;
        protected final int top;
        protected final int right;
        protected final int bottom;

        DetectedBBox(final double confidence, final int classId,
                final int left, final int top, final int right, final int bottom) {
            this.confidenceValue = confidence;
            this.classId = classId;
            this.left = left;
            this.right = right;
            this.top = top;
            this.bottom = bottom;
        }
    }
}
```

8.1 Setting Output Layers for Models

The getMapOutputLayer method implements section 5.2, “Configuring Output Layers for Models.” It selects the appropriate output layers based on the model name and returns null for an unrecognized model:

```java
protected Map<String, Mat> getMapOutputLayer(final Net net) {
    if (modelName.equals(MODEL_YOLO)) {
        return getYoloOutputLayer(net);
    } else if (modelName.equals(MODEL_MASK_RCNN)) {
        return getMaskRCnnOutputLayer(net);
    }
    return null;
}
```

8.1.1 Setting Output Layers for YOLO

The getYoloOutputLayer method collects the names of the unconnected output layers in the model’s CNN network.

The signature of the getYoloOutputLayer method is as follows:

```java
protected Map<String, Mat> getYoloOutputLayer(final Net net)
```

The method asks the network for its unconnected output layers, looks up each layer's name, and stores the name as a key in the returned map. The values are null at this point and are filled in after the forward pass.

The implementation code is as follows:

```java
protected Map<String, Mat> getYoloOutputLayer(final Net net) {
    Map<String, Mat> retMap = new HashMap<>();

    // Retrieve all layer names in the Net.
    List<String> layerNames = net.getLayerNames();

    // Get indexes for output layers
    MatOfInt outLayers = net.getUnconnectedOutLayers();
    int[] outLayersArray = outLayers.toArray();

    // Auto detection of the output layers; add each layer name to the map
    for (int i : outLayersArray) {
        retMap.put(layerNames.get(i - 1), null);
    }
    return retMap;
}
```
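The index handling is the part that is easy to get wrong: the IDs returned by getUnconnectedOutLayers are 1-based, while the list of layer names is 0-based. The following is a minimal sketch of just that lookup in plain Java, without OpenCV. The class name, the method name, and the layer names are hypothetical and only illustrate the `i - 1` conversion.

```java
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Hypothetical helper: mirrors the lookup in getYoloOutputLayer without OpenCV types.
final class OutputLayerIndexSketch {

    /** Maps 1-based layer IDs onto names taken from a 0-based list of layer names. */
    static Map<String, Object> namesFor(List<String> layerNames, int[] oneBasedIds) {
        Map<String, Object> result = new LinkedHashMap<>();
        for (int id : oneBasedIds) {
            // The value stays null until a forward pass produces the layer output.
            result.put(layerNames.get(id - 1), null);
        }
        return result;
    }

    public static void main(String[] args) {
        // Made-up layer names, not the names of a real YOLO network.
        List<String> names = List.of("conv_a", "out_a", "conv_b", "out_b");
        System.out.println(namesFor(names, new int[] {2, 4}).keySet());
    }
}
```

Using the ID directly as a list index would select the wrong layer, or throw an IndexOutOfBoundsException for the last layer.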

8.1.2 Setting Output Layers for Mask R-CNN

The getMaskRCnnOutputLayer method implements section 5.2.2, “Output Layers of the Mask R-CNN Model.” Specifically, this method collects the unconnected output layers and makes sure that the detection_out_final layer is among them. This matters because the Mask R-CNN model uses both the detection_out_final and detection_masks layers.

The method signature is:

```java
protected Map<String, Mat> getMaskRCnnOutputLayer(final Net net)
```

The method takes a Net object as a parameter and returns a map keyed by layer name. Each value is null at this stage; the Mat for each layer is stored later, once the blob created by blobFromImage has been passed to the network and the forward pass has run.

The key step is the following check, which adds detection_out_final when it is not among the detected layers:

```java
if (!foundDetectionOutFinal) {
    outputLayerNames.add("detection_out_final");
    retMap.put("detection_out_final", null);
}
```

The full source code of the method is as follows:

```java
protected Map<String, Mat> getMaskRCnnOutputLayer(final Net net) {
    Map<String, Mat> retMap = new HashMap<>();

    // Auto detection of the output layers.
    List<String> layerNames = net.getLayerNames();
    MatOfInt outLayers = net.getUnconnectedOutLayers();
    int[] outLayersArray = outLayers.toArray();

    List<String> outputLayerNames = new ArrayList<>();
    for (int i : outLayersArray) {
        retMap.put(layerNames.get(i - 1), null);
        outputLayerNames.add(layerNames.get(i - 1)); // OpenCV uses 1-based index
    }

    // Generally, both `detection_out_final` and `detection_masks` layers are used by Mask R-CNN
    boolean foundDetectionOutFinal = false;
    for (String layer : outputLayerNames) {
        if (layer.contains("detection_out_final")) {
            foundDetectionOutFinal = true;
            break;
        }
    }

    // Add the `detection_out_final` layer if it was not detected.
    if (!foundDetectionOutFinal) {
        outputLayerNames.add("detection_out_final");
        retMap.put("detection_out_final", null);
    }
    return retMap;
}
```

8.2 CNN Net Initialization

The readDNNetByModelName method creates a DNN from the model and configuration files based on the user input.

The method signature is:

1 | protected Net readDNNetByModelName() |

The implementation code is as follows:

```java
protected Net readDNNetByModelName() {
    Net dnnNet = null;

    if (modelName.equals(MODEL_YOLO)) {
        dnnNet = Dnn.readNetFromDarknet(modelConfiguration, modelPath);
    } else if (modelName.equals(MODEL_MASK_RCNN)) {
        dnnNet = Dnn.readNetFromTensorflow(modelPath, modelConfiguration);
    }

    if (dnnNet == null) return null;

    switch (deviceType) {
        case "cpu" -> {
            dnnNet.setPreferableTarget(Dnn.DNN_TARGET_CPU);
        }
        case "gpu" -> {
            dnnNet.setPreferableBackend(Dnn.DNN_BACKEND_CUDA);
            dnnNet.setPreferableTarget(Dnn.DNN_TARGET_CUDA);
        }
    }
    return dnnNet;
}
```

The readNetFromDarknet method is used for YOLO, and the readNetFromTensorflow method is used for Mask R-CNN during DNN generation. Note that the argument order differs: readNetFromDarknet takes the configuration file first and the weights second, whereas readNetFromTensorflow takes the model file first and the configuration second.

```java
if (modelName.equals(MODEL_YOLO)) {
    dnnNet = Dnn.readNetFromDarknet(modelConfiguration, modelPath);
} else if (modelName.equals(MODEL_MASK_RCNN)) {
    dnnNet = Dnn.readNetFromTensorflow(modelPath, modelConfiguration);
}
```

The rest of the code handles the selection of CPU or GPU for processing.

```java
switch (deviceType) {
    case "cpu" -> {
        dnnNet.setPreferableTarget(Dnn.DNN_TARGET_CPU);
    }
    case "gpu" -> {
        dnnNet.setPreferableBackend(Dnn.DNN_BACKEND_CUDA);
        dnnNet.setPreferableTarget(Dnn.DNN_TARGET_CUDA);
    }
}
```

9. Setting up Labels and Colors

The CocoLabel class stores the appropriate label data based on the selected model and assigns a randomly generated color to each label. Its static data is initialized when the setupLabelSet() method is called in the constructor of the ObjectDetector class, as shown in the following code:

```java
protected ObjectDetector(final DNNOption dnnOption) {
    this.dnnOption = dnnOption;
    setupOption();
    setupLabelSet();
}
```

9.1 Label Initialization

The setupLabelSet method selects YOLO_LABEL_FILE for the YOLO model and MASK_RCNN_LABEL_FILE for the Mask R-CNN model. The selected constant is passed as the argument to the setupCocoLabelSet method of the CocoLabel class.

```java
private void setupLabelSet() {
    if (modelName.equals(MODEL_MASK_RCNN)) {
        CocoLabel.setupCocoLabelSet(MASK_RCNN_LABEL_FILE);
    } else if (modelName.equals(MODEL_YOLO)) {
        CocoLabel.setupCocoLabelSet(YOLO_LABEL_FILE);
    }
}
```

9.2 Save Label and Color

The setupCocoLabelSet method reads the label data file, stores each class ID and its label in the COCO_LABLES map, and then adds one randomly generated color per label to the COLORS list.

```java
public static void setupCocoLabelSet(String labelFileName) {
    COCO_LABLE_FILE = labelFileName;

    // try-with-resources closes the reader once the file has been read
    try (BufferedReader breader = new BufferedReader(
            new InputStreamReader(CocoLabel.class.getResourceAsStream(COCO_LABLE_FILE)))) {
        String line;
        String[] labels;

        while ((line = breader.readLine()) != null) {
            if (line.isBlank() || line.isEmpty()) continue;
            if (line.startsWith("#")) continue;
            labels = line.split("\\:");

            if (labels.length == 2) {
                COCO_LABLES.put(Integer.valueOf(labels[0]), labels[1].trim());
            }
        }
    } catch (IOException ie) {
        ie.printStackTrace();
    }

    // Generate a random color per label in order to draw bounding boxes
    Random random = new Random();
    for (int i = 0; i < COCO_LABLES.size(); i++) {
        COLORS.add(new Scalar(new double[] {
            random.nextInt(255), random.nextInt(255), random.nextInt(255)
        }));
    }
}
```

9.3 Label File

Each detected object has a class ID, which was assigned to its category when the model was trained. The label file maps these class IDs to readable names. Each model has its own label file: coco.names for YOLO and mscoco_labels.names for Mask R-CNN.

Make sure to use the appropriate file for each model to ensure proper functionality.

```java
while ((line = breader.readLine()) != null) {
    if (line.isBlank() || line.isEmpty()) continue;
    if (line.startsWith("#")) continue;
    labels = line.split("\\:");

    if (labels.length == 2) {
        COCO_LABLES.put(Integer.valueOf(labels[0]), labels[1].trim());
    }
}
```

Below is a sample label file that maps the class IDs to their corresponding labels.

```
0:person
1:bicycle
2:car
3:motorcycle
4:airplane
5:bus
6:train
7:truck
8:boat
...
```
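To try the `id:label` format without the CocoLabel class or a resource file, the parsing rules can be sketched in plain Java as below. The class and method names are hypothetical. Unlike the original loop, this sketch trims each line first and splits on the first colon only, so a label that itself contains a colon would be kept rather than skipped; that difference is a deliberate assumption, not the article's behavior.

```java
import java.util.LinkedHashMap;
import java.util.Map;

// Hypothetical stand-alone parser for "classId:label" lines.
final class LabelFileSketch {

    static Map<Integer, String> parse(String content) {
        Map<Integer, String> labels = new LinkedHashMap<>();
        for (String line : content.split("\\R")) {   // \R matches any line break
            String trimmed = line.trim();
            if (trimmed.isEmpty() || trimmed.startsWith("#")) {
                continue;                             // skip blank lines and comments
            }
            String[] parts = trimmed.split(":", 2);   // split on the first colon only
            if (parts.length == 2) {
                labels.put(Integer.valueOf(parts[0].trim()), parts[1].trim());
            }
        }
        return labels;
    }

    public static void main(String[] args) {
        String sample = "# COCO labels\n0:person\n1:bicycle\n\n2:car";
        System.out.println(parse(sample));
    }
}
```

A line whose ID is not a number makes Integer.valueOf throw a NumberFormatException, both here and in the original loop, so a malformed label file fails early instead of producing wrong labels.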

9.4 Colors and Labels for Bounding Box

The following code describes how the labels are matched with colors for the bounding box. Specifically, these colors are randomly generated when the label data is first read from the file.

```java
// Generate a random color per label in order to draw bounding boxes
Random random = new Random();
for (int i = 0; i < COCO_LABLES.size(); i++) {
    COLORS.add(new Scalar(new double[] {
        random.nextInt(255), random.nextInt(255), random.nextInt(255)
    }));
}
```

You can retrieve the generated colors with the getColorsByLabel method as follows:

```java
List<Scalar> colors = CocoLabel.getColorsByLabel();
```
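Two details of the color generation are worth seeing in isolation. First, `Random.nextInt(255)` returns values from 0 to 254, so a channel never reaches 255; `nextInt(256)` covers the full 8-bit range. Second, an unseeded Random gives each label a different color on every run. The sketch below is a hypothetical variant, using plain `double[]` triples instead of Scalar, that takes a seed so the colors are reproducible.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Random;

// Hypothetical variant of the color setup; double[] stands in for org.opencv.core.Scalar.
final class LabelColorSketch {

    /** Creates one color triple per label, each channel in the range 0..255. */
    static List<double[]> colorsFor(int labelCount, long seed) {
        Random random = new Random(seed);            // fixed seed gives stable colors
        List<double[]> colors = new ArrayList<>(labelCount);
        for (int i = 0; i < labelCount; i++) {
            colors.add(new double[] {
                random.nextInt(256), random.nextInt(256), random.nextInt(256)
            });
        }
        return colors;
    }
}
```

Because the colors are looked up by class ID, the list needs at least as many entries as the largest class ID plus one; this sketch assumes the caller passes a suitable labelCount.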

10. Object Detection in Mask R-CNN Model

The mediaType can be either ‘Image’ or ‘Video’. The ‘Image’ type calls the detectObjectOnImage method, while the ‘Video’ type calls the detectObjectOnVideo method.

10.1 User Input Values

The user provides the following input: select ‘MRCNN’ as the model, choose either ‘Image’ or ‘Video’ as the media type, and select either ‘GPU’ or ‘CPU’ as the device in the respective combo boxes. Then choose an ‘InFile’ that matches the selected media type.

Here is the description of each component:

- Model: The file path for frozen_inference_graph.pb. You can select this file by clicking the Find button.

- Config: The file path for mask_rcnn_inception_v2_coco_2018_01_28.pbtxt. You can select this file by clicking the Find button.

- InFile: The media file path, which can either be an image or a video file depending on what you select from the first combo box.

- Save Output: A flag that determines whether to save the result or not. The output will be saved in the ‘out’ folder within the image or video file path selected by the user.

- Shading Mask: A flag that determines whether to shade masks on the original image. If unchecked, the masks will not be shaded on the original image.

You can get some test images from the References section.

a. Masks, labels, and scores are rendered when the checkbox is checked.

b. Labels will be shaded on the image only when the checkbox is checked.

10.2 detectObject Method in Object Detection

This method implements the detectObject method from the IObjectDetector interface.

```java
@Override
public void detectObject() {
    if (!new File(mediaFile).exists()) {
        JOptionPane.showMessageDialog(null,
                String.format("File[%s] Not Found", mediaFile),
                "File Error", JOptionPane.WARNING_MESSAGE);
        return;
    }

    if (!new File(modelPath).exists() || !new File(modelConfiguration).exists()) {
        JOptionPane.showMessageDialog(null,
                String.format("Can not open Model[%s] \n config[%s]", modelPath, modelConfiguration),
                "Model Error", JOptionPane.WARNING_MESSAGE);
        return;
    }

    Net dnnNet = readDNNetByModelName();
    Map<String, Mat> outputLayers = getMapOutputLayer(dnnNet);

    if (mediaType.equals("Image")) {
        detectObjectOnImage(winName, dnnNet, outputLayers, mediaFile, threshold, isSaveOuput, isMaskShading);
    } else if (mediaType.equals("Video")) {
        System.out.println(outputLayers.size());
        detectObjectOnVideo(winName, dnnNet, outputLayers, mediaFile, threshold, isSaveOuput, isMaskShading);
    }
}
```

In the case of ‘Image’, the method calls detectObjectOnImage, while in the case of ‘Video’, it calls detectObjectOnVideo, based on the mediaType variable selected by the user.

```java
if (mediaType.equals("Image")) {
    detectObjectOnImage(winName, dnnNet, outputLayers, mediaFile, threshold, isSaveOuput, isMaskShading);
} else if (mediaType.equals("Video")) {
    System.out.println(outputLayers.size());
    detectObjectOnVideo(winName, dnnNet, outputLayers, mediaFile, threshold, isSaveOuput, isMaskShading);
}
```

10.3 detectObjectOnImage for Image Detection

The detectObjectOnImage method performs object detection on a single image input.

The method signature is as follows:

```java
public void detectObjectOnImage(
        String winName, Net dnnNet, Map<String, Mat> outputLayers,
        String mediaFile, double threshold,
        boolean isSaveOuput, boolean isMaskShading);
```

Description of parameters:

- winName: The title of the window.

- dnnNet: The CNN network loaded from the readDNNetByModelName method.

- outputLayers: The map of output layers returned by the getMaskRCnnOutputLayer method.

- mediaFile: The path to the media file (image or video).

- threshold: The minimum confidence a detection must exceed to be drawn.

- isSaveOuput: A flag indicating whether to save the results.

- isMaskShading: A flag indicating whether to draw masks on the result.

The full source code is as follows:

```java
public void detectObjectOnImage(
        String winName, Net dnnNet, Map<String, Mat> outputLayers,
        String mediaFile, double threshold,
        boolean isSaveOuput, boolean isMaskShading) {
    HighGui.namedWindow(winName, HighGui.WINDOW_NORMAL);

    Mat frame = Imgcodecs.imread(mediaFile);

    int idx = new File(mediaFile).getName().split("\\.").length - 2;
    String outputFile = new File(mediaFile).getParent() + File.separator + "out" + File.separator
            + new File(mediaFile).getName().split("\\.")[idx] + "_mrcnn_out.jpg";

    // Stop if the image could not be read
    if (frame.empty()) {
        System.out.println("can not read!!!");
        System.out.println("Output file is stored as " + outputFile);
        HighGui.waitKey(3000);
        return;
    }

    Map<String, Object> resultMap = resizeMatImage(frame, BLOB_MASK_RCNN_SIZE);
    Mat resizedMat = (Mat) resultMap.get("mat");
    Rect padding = (Rect) resultMap.get("padding");
    float scale = (float) resultMap.get("scale");

    Mat blob = Dnn.blobFromImage(resizedMat, 1.0, BLOB_MASK_RCNN_SIZE, Scalar.all(0), true, false);
    dnnNet.setInput(blob);
    blob.release();

    // Run a forward pass per output layer and keep each result in the map
    for (Iterator<String> iter = outputLayers.keySet().iterator(); iter.hasNext();) {
        String key = iter.next();
        Mat dtectMat = dnnNet.forward(key);
        outputLayers.put(key, dtectMat);
    }

    // Label information.
    Map<Integer, String> cocoLabels = CocoLabel.getCocoLabel();
    // Randomly generated colors used to draw bounding boxes
    List<Scalar> colors = CocoLabel.getColorsByLabel();

    Mat detection = outputLayers.get("detection_out_final");
    Mat detectionMasks = outputLayers.get("detection_masks");

    float[] data = new float[(int) (detection.total())];
    detection.get(0, 0, data);
    int rows = detection.size(2);
    boolean foundObject = false;

    detection = detection.reshape(1, (int) detection.total() / 7);

    // extract confidence value
    int cols = detection.cols();
    data = new float[(int) detection.total()];
    detection.get(0, 0, data); // Mat data to 1D array.

    for (int i = 0; i < rows; i++) {
        // Each row: [batchId, classId, confidence, left, top, right, bottom]
        float confidence = data[i * cols + 2];
        if (confidence > THRESHOLD) {
            foundObject = true;
            int classId = (int) data[i * cols + 1];

            // convert to Bounding Box coordinates
            int left = (int) ((data[i * cols + 3] * MASK_RCNN_SIZE - padding.x) / scale);
            int top = (int) ((data[i * cols + 4] * MASK_RCNN_SIZE - padding.y) / scale);
            int right = (int) ((data[i * cols + 5] * MASK_RCNN_SIZE - padding.x) / scale);
            int bottom = (int) ((data[i * cols + 6] * MASK_RCNN_SIZE - padding.y) / scale);

            DetectedBBox bbox = new DetectedBBox(confidence, classId, left, top, right, bottom);

            String label = String.format("%s: %.2f", cocoLabels.get(bbox.classId), confidence * 100);
            Scalar classColor = colors.get(bbox.classId);

            if (isMaskShading) {
                // 1. Retrieve the mask data of the specific class for this detection.
                Mat rowMat = detectionMasks.row(i).col(bbox.classId);
                // 2. Create the object mask with the size of the mask.
                Mat objectMask = new Mat(rowMat.size(2), rowMat.size(3), CvType.CV_32F);
                rowMat.reshape(1, (int) rowMat.total()).copyTo(objectMask.reshape(1, (int) rowMat.size(2)));
                drawMaskedBBox(frame, bbox, objectMask, label, classColor);
            } else {
                drawBoxSimple(frame, bbox, label, classColor);
            }
            bbox = null;
        }
    }

    // Put efficiency information. The function getPerfProfile returns the overall time
    // for inference (t) and the timings for each of the layers (in layersTimes).
    MatOfDouble layersTimes = new MatOfDouble();
    double freq = Core.getTickFrequency() / 1000;
    double t = dnnNet.getPerfProfile(layersTimes) / freq;
    String label = String.format("Mask-RCNN, Inference time for a frame : %f ms", t);
    Imgproc.putText(frame, label, new Point(0, 15), Imgproc.FONT_HERSHEY_SIMPLEX, 0.5, new Scalar(0, 0, 0));

    if (!foundObject) {
        System.out.println("Object not found");
    }

    HighGui.imshow(winName, frame);

    if (isSaveOuput) {
        // Write the frame with the detection boxes
        Mat detectedFrame = new Mat();
        frame.convertTo(detectedFrame, CvType.CV_8U);
        Imgcodecs.imwrite(outputFile, detectedFrame);
        detectedFrame.release();
    }

    layersTimes.release();

    // Wait for key input
    int key = HighGui.waitKey(0);
    if (key == 27) { // ESC key pressed
        System.out.println("Window closing with ESC key");
    }

    HighGui.destroyAllWindows(); // Destroy all windows.
    System.exit(0); // exit
}
```

10.4 Resizing The Image Source

This implementation feeds the Mask R-CNN model an input of 800 pixels per side; therefore, a source image of any other size is resized before detection.

10.4.1 Resize Mat Object

The resizeMatImage method resizes the source image to fit the 800-pixel input size used for the Mask R-CNN model. To preserve the aspect ratio, it scales the image by the smaller of the width and height ratios and then fills the remaining space with black padding, so the result always has the target dimensions whether the original image is larger or smaller.

```java
/**
 * @param orgMat the original Mat object
 * @param targetSize target size to resize to
 * @return a map holding the padded Mat, the padding, and the scale
 */
protected Map<String, Object> resizeMatImage(Mat orgMat, Size targetSize) {
    Map<String, Object> retMap = new HashMap<>();
    Rect padding = new Rect();

    int targetWidth = (int) targetSize.width;
    int targetHeight = (int) targetSize.height;
    int originalWidth = orgMat.cols();
    int originalHeight = orgMat.rows();

    // Minimum scale against the original ratio.
    float scaleX = (float) targetWidth / originalWidth;
    float scaleY = (float) targetHeight / originalHeight;
    float minScale = Math.min(scaleX, scaleY);

    int newWidth = (int) (originalWidth * minScale);
    int newHeight = (int) (originalHeight * minScale);

    // Padding value calculation.
    int left = (targetWidth - newWidth) / 2;
    int top = (targetHeight - newHeight) / 2;
    int right = targetWidth - newWidth - left;
    int bottom = targetHeight - newHeight - top;

    // Resizing and padding adjustment.
    Mat resizedMat = new Mat();
    Imgproc.resize(orgMat, resizedMat, new Size(newWidth, newHeight));
    Mat paddedMat = new Mat();
    Core.copyMakeBorder(resizedMat, paddedMat, top, bottom, left, right,
            Core.BORDER_CONSTANT, new Scalar(0, 0, 0));

    // The padding information.
    padding.x = left;
    padding.y = top;
    padding.width = right;
    padding.height = bottom;

    retMap.put("mat", paddedMat);
    retMap.put("padding", padding);
    retMap.put("scale", minScale); // The scale information.
    return retMap;
}
```

The method returns a HashMap object containing the following items:

- Adjustment Scale: The scale factor used to resize the original image to the new size.

- Resized Mat Object: The resized image that has been adjusted to the target size.

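- Padding Information: The padding applied around the resized image to maintain the aspect ratio. It is stored in a Rect whose x, y, width, and height fields hold the left, top, right, and bottom padding in pixels, respectively.

```java
retMap.put("mat", paddedMat);
retMap.put("padding", padding);
retMap.put("scale", minScale);
```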
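As a worked example of the scale and padding arithmetic in resizeMatImage, the sketch below repeats the same calculation in plain Java without any OpenCV types. The class name, the method name, and the 1280 x 720 source size are assumptions chosen for illustration.

```java
// Hypothetical stand-alone version of the scale and padding arithmetic.
final class LetterboxSketch {

    /** Returns {scale, newWidth, newHeight, left, top, right, bottom}. */
    static float[] compute(int srcW, int srcH, int dstW, int dstH) {
        // Use the smaller ratio so the whole image fits inside the target.
        float scale = Math.min((float) dstW / srcW, (float) dstH / srcH);
        int newW = (int) (srcW * scale);
        int newH = (int) (srcH * scale);

        // Split the leftover space between the two sides; the second side
        // takes the remainder when the leftover is an odd number of pixels.
        int left = (dstW - newW) / 2;
        int top = (dstH - newH) / 2;
        int right = dstW - newW - left;
        int bottom = dstH - newH - top;
        return new float[] { scale, newW, newH, left, top, right, bottom };
    }

    public static void main(String[] args) {
        float[] r = compute(1280, 720, 800, 800);
        System.out.printf("scale=%.3f size=%dx%d top=%d bottom=%d%n",
                r[0], (int) r[1], (int) r[2], (int) r[4], (int) r[6]);
    }
}
```

For a 1280 x 720 image and an 800 x 800 target, the scale is 800 / 1280 = 0.625, the resized image is 800 x 450, and the remaining 350 rows are split into 175 rows of padding above and 175 below.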

10.5 Find Blob for Mask R-CNN Model

Now, you can use the blobFromImage method to create a blob as the input for the Mask R-CNN model using the resized Mat object, adjusted to an 800-pixel size.

```java
Map<String, Object> resultMap = resizeMatImage(frame, BLOB_MASK_RCNN_SIZE);

Mat resizedMat = (Mat) resultMap.get("mat");
Rect padding = (Rect) resultMap.get("padding");
float scale = (float) resultMap.get("scale");

Mat blob = Dnn.blobFromImage(resizedMat, 1.0, BLOB_MASK_RCNN_SIZE, Scalar.all(0), true, false);
dnnNet.setInput(blob);
blob.release();
```

Next, a forward pass is run for each output layer, and the resulting Mat, which holds the information about the detected objects, is stored in the map under the layer's name.

```java
for (Iterator<String> iter = outputLayers.keySet().iterator(); iter.hasNext();) {
    String key = iter.next();
    Mat dtectMat = dnnNet.forward(key);
    outputLayers.put(key, dtectMat);
}
```

The two results of interest are detection_out_final and detection_masks, the output layers described in section 5.2.2, “Output Layers of the Mask R-CNN Model.”

```java
Mat detection = outputLayers.get("detection_out_final");
Mat detectionMasks = outputLayers.get("detection_masks");
```

Here are sample representations of both the detection_out_final and detection_masks layers:

a. The detection_out_final layer

```
Mat [ 1*1*100*7*CV_32FC1, isCont=true, isSubmat=true, nativeObj=0x1eff1c35870, dataAddr=0x1ef80776080 ]
```

b. The detection_masks layer

```
Mat [ 100*90*15*15*CV_32FC1, isCont=true, isSubmat=true, nativeObj=0x1eff1c3b450, dataAddr=0x1effeedb080 ]
```

The dimensional information for both layers, as shown above, has already been described in sections 5.2.3 (‘Structure of detection_out_final layer’) and 5.2.4 (‘Structure of detection_masks layer’).
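To make the 100 x 90 x 15 x 15 shape concrete, the sketch below shows how the mask of one detection and one class could be located if the tensor were copied into a flat float array. It assumes a contiguous, row-major layout (the printout reports isCont=true); the class and method names are hypothetical, and the code does not use OpenCV.

```java
import java.util.Arrays;

// Hypothetical index arithmetic for a [detections x classes x maskH x maskW] tensor.
final class MaskIndexSketch {

    /** Offset of the first value of the mask for (detection, classId) in the flat array. */
    static int maskOffset(int detection, int classId, int numClasses, int maskH, int maskW) {
        return (detection * numClasses + classId) * maskH * maskW;
    }

    /** Copies the maskH * maskW values that belong to one detection and one class. */
    static float[] sliceMask(float[] flat, int detection, int classId,
                             int numClasses, int maskH, int maskW) {
        int offset = maskOffset(detection, classId, numClasses, maskH, maskW);
        return Arrays.copyOfRange(flat, offset, offset + maskH * maskW);
    }
}
```

With 90 classes and 15 x 15 masks, each detection owns 90 * 225 = 20250 values, so the mask for detection 2 and class 5 starts at offset (2 * 90 + 5) * 225 = 41625.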

10.5.1 Obtain Information of detection_out_final

We can obtain bounding boxes and confidence scores from the detection_out_final layer, and this information is used to generate bounding boxes and labels on the original images.

The following outlines the steps and code involved in this process.

a. Reshape the Mat object into rows of 7 values, one row per detection.

```java
detection = detection.reshape(1, (int) detection.total() / 7);
```

b. Copy the Mat data into a 1D float array so that each field, including the confidence value, can be read by index.

```java
int cols = detection.cols();
float[] data = new float[(int) (detection.total())];
detection.get(0, 0, data);
```

c. Draw the detected objects.

The following code keeps only the detections whose confidence exceeds the threshold, which falls back to a default value of 0.5 when the user-supplied threshold is not positive, and draws their boxes on the image. You can adjust the threshold value if necessary.

```java
for (int i = 0; i < rows; i++) {
    // Each row: [batchId, classId, confidence, left, top, right, bottom]
    float confidence = data[i * cols + 2];
    if (confidence > (threshold <= 0 ? DEFAULT_THRESHOLD : threshold)) {
        foundObject = true;
        int classId = (int) data[i * cols + 1];

        // Convert the normalized coordinates to bounding box coordinates in the original image
        int left = (int) ((data[i * cols + 3] * MASK_RCNN_SIZE - padding.x) / scale);
        int top = (int) ((data[i * cols + 4] * MASK_RCNN_SIZE - padding.y) / scale);
        int right = (int) ((data[i * cols + 5] * MASK_RCNN_SIZE - padding.x) / scale);
        int bottom = (int) ((data[i * cols + 6] * MASK_RCNN_SIZE - padding.y) / scale);

        DetectedBBox bbox = new DetectedBBox(confidence, classId, left, top, right, bottom);

        if (isMaskShading) {
            // 1. Retrieve the mask data of the specific class for this detection.
            Mat rowMat = detectionMasks.row(i).col(bbox.classId);
            // 2. Create the object mask with the size of the mask.
            Mat objectMask = new Mat(rowMat.size(2), rowMat.size(3), CvType.CV_32F);
            rowMat.reshape(1, (int) rowMat.total()).copyTo(objectMask.reshape(1, (int) rowMat.size(2)));
            drawMaskedBBox(frame, bbox, objectMask, confidence);
        } else {
            drawBoxSimple(frame, bbox, confidence);
        }
    }
}
// The values were already copied into `data`, so the Mat can be released after the loop.
detection.release();
```
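The coordinate conversion undoes the resize and padding steps: a normalized coordinate is first multiplied by the input size, then the padding is subtracted, and finally the result is divided by the scale. The sketch below isolates that arithmetic in plain Java. The class and method names are hypothetical, and the row layout [batchId, classId, confidence, left, top, right, bottom] is an assumption based on the indices used in the article's code.

```java
// Hypothetical helper that maps one detection row back to original-image pixels.
final class DetectionRowSketch {

    /** Returns {left, top, right, bottom} in the coordinates of the original image. */
    static int[] toOriginalBox(float[] data, int row, int cols,
                               int inputSize, int padX, int padY, float scale) {
        int base = row * cols;
        int left = (int) ((data[base + 3] * inputSize - padX) / scale);
        int top = (int) ((data[base + 4] * inputSize - padY) / scale);
        int right = (int) ((data[base + 5] * inputSize - padX) / scale);
        int bottom = (int) ((data[base + 6] * inputSize - padY) / scale);
        return new int[] { left, top, right, bottom };
    }

    /** Falls back to a default when the supplied threshold is not positive. */
    static double effectiveThreshold(double threshold, double fallback) {
        return threshold <= 0 ? fallback : threshold;
    }
}
```

For example, with an 800-pixel input, a top padding of 175, and a scale of 0.625 (a 1280 x 720 source), a normalized top coordinate of 0.5 maps to (0.5 * 800 - 175) / 0.625 = 360 pixels in the original image.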

10.5.2 Drawing Bounding Box

In this final step, we draw bounding boxes using either the drawMaskedBBox or drawBoxSimple method. The choice of method is determined by the boolean value isMaskShading, which is set based on the user’s input. Specifically, if isMaskShading is true, the drawMaskedBBox method is used; otherwise, drawBoxSimple is applied.

a. drawMaskedBBox method

This method draws bounding boxes, masks, and labels on the detected objects.

```java
// Draw the predicted bounding box, colorize and show the mask on the image
private boolean drawMaskedBBox(Mat frame, DetectedBBox bbox, Mat objectMask, float confidence) {
    boolean foundObject = true;

    // Label information.
    Map<Integer, String> cocoLabels = CocoLabel.getCocoLabel();
    // Randomly generated colors used to draw bounding boxes
    List<Scalar> colors = CocoLabel.getColorsByLabel();

    String label = String.format("%s: %.2f", cocoLabels.get(bbox.classId), confidence * 100);
    Scalar classColor = colors.get(bbox.classId);

    Rect box = new Rect(bbox.left, bbox.top, bbox.right - bbox.left + 1, bbox.bottom - bbox.top + 1);

    // Display the label at the top of the bounding box
    int[] baseLine = { 0 };
    Size labelSize = Imgproc.getTextSize(label, Imgproc.FONT_HERSHEY_SIMPLEX, 0.5, 1, baseLine);
    box.y = Math.max(box.y, (int) labelSize.height);

    // Draw background for text
    Imgproc.rectangle(frame,
            new Point(box.x, box.y - Math.round(1.5 * labelSize.height)),
            new Point(box.x + Math.round(1.5 * labelSize.width), box.y + baseLine[0]),
            new Scalar(255, 255, 255), Imgproc.FILLED);
    Imgproc.putText(frame, label, new Point(bbox.left, bbox.top - 5),
            Imgproc.FONT_HERSHEY_SIMPLEX, 0.5, classColor, 2);

    // Check if frame is empty
    if (frame.empty()) {
        System.out.println("frame is empty! failed to load an image.");
        return false;
    }

    // Check the frame validity
    int width = frame.cols();
    int height = frame.rows();
    if (width <= 0 || height <= 0) {
        System.out.println("invalid frame size.");
        return false;
    }

    // 3. Binarize the mask with a threshold of 0.5
    Imgproc.threshold(objectMask, objectMask, 0.5, 1.0, Imgproc.THRESH_BINARY);

    // 4. Convert `objectMask` to `CV_8UC1` so that it can be colored
    objectMask.convertTo(objectMask, CvType.CV_8UC1);

    // 5. Expand the gray-scale `objectMask` to three channels
    Mat colorMask = new Mat();
    Imgproc.cvtColor(objectMask, colorMask, Imgproc.COLOR_GRAY2BGR);

    // 6. Apply the class color: multiply the mask by `classColor`
    Core.multiply(colorMask, classColor, colorMask);

    // 7. Smooth the edges
    Mat smoothMask = new Mat();
    Imgproc.GaussianBlur(colorMask, smoothMask, new Size(objectMask.rows(), objectMask.cols()), 0);

    // 8. Get the ROI.
    Mat roi = getMrcnnROI(frame, bbox);

    // 9. If the sizes of smoothMask and roi differ, resize the mask.
    if (roi.size().width != smoothMask.size().width || roi.size().height != smoothMask.size().height) {
        Imgproc.resize(smoothMask, smoothMask, roi.size());
    }

    // 10. Convert the data type of `smoothMask` to `CV_8U` to match `roi`
    smoothMask.convertTo(smoothMask, CvType.CV_8U);

    // 11. Draw the bounding box on the original image
    Imgproc.rectangle(frame, new Point(bbox.left, bbox.top), new Point(bbox.right, bbox.bottom), classColor, 2);

    // 12. Paint the mask onto the original image (alpha blending)
    Core.addWeighted(roi, 0.8, smoothMask, 0.5, 0, roi);

    return foundObject;
}
```
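The blending in step 12 is a per-pixel weighted sum: addWeighted computes `src1 * alpha + src2 * beta + gamma` and saturates the result to the valid range of the data type. The sketch below reproduces that arithmetic for a single 8-bit channel value in plain Java; the class and method names are hypothetical, and the rounding of exact half values may differ slightly from OpenCV's.

```java
// Hypothetical single-channel illustration of the weighted sum used for mask shading.
final class BlendSketch {

    /** Weighted sum of two 8-bit channel values, clamped to the range 0..255. */
    static int blend(int roiValue, int maskValue, double alpha, double beta, double gamma) {
        double v = roiValue * alpha + maskValue * beta + gamma;
        return (int) Math.max(0, Math.min(255, Math.round(v)));
    }

    public static void main(String[] args) {
        System.out.println(blend(100, 200, 0.8, 0.5, 0)); // pixel inside the mask
        System.out.println(blend(100, 0, 0.8, 0.5, 0));   // pixel inside the box, outside the mask
    }
}
```

With the weights 0.8 and 0.5 used above, a pixel inside the box but outside the mask keeps only 80% of its brightness, so the whole ROI appears slightly darker than the rest of the frame, and bright masked pixels can saturate at 255.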

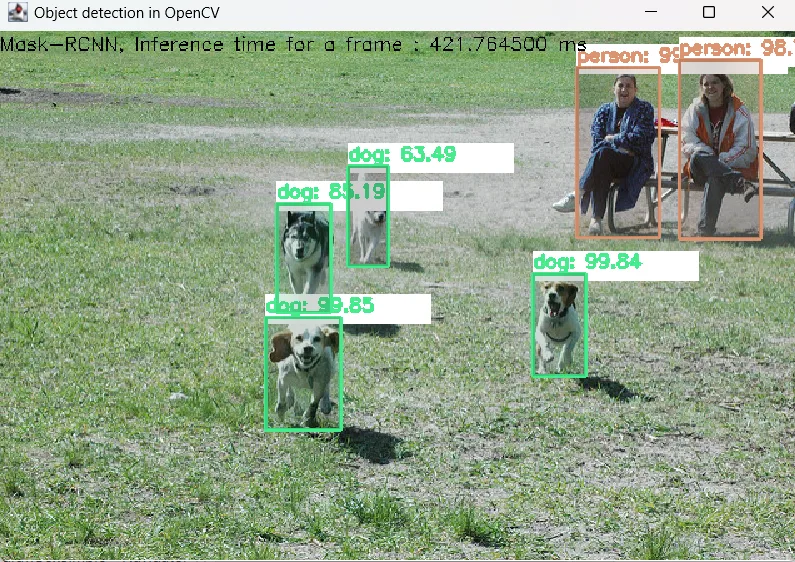

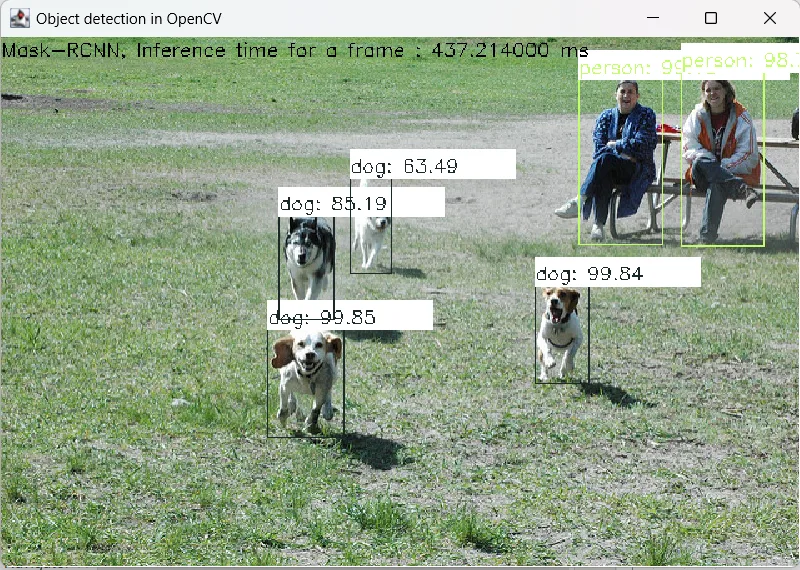

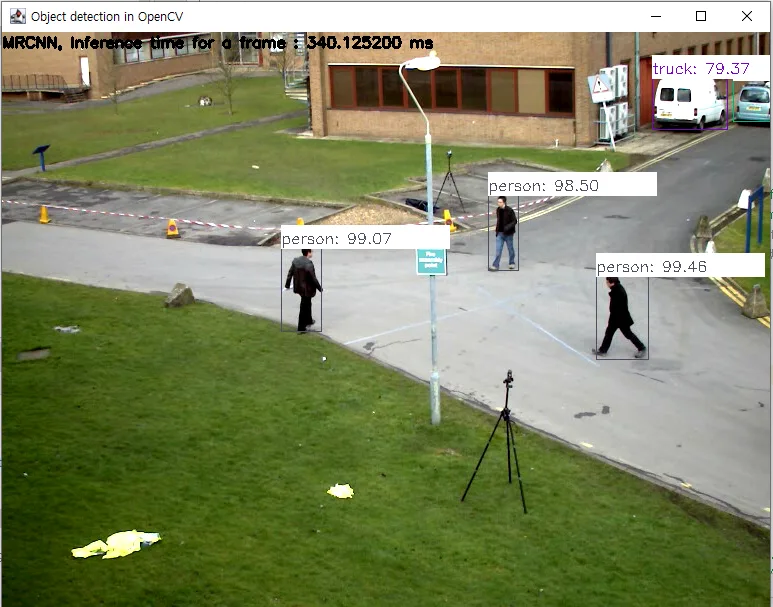

The output is shown in the following figure:

b. Simple Bounding Box Drawing

This method draws bounding boxes and labels around the objects without applying masks.

```java
private static void drawBoxSimple(Mat frame, DetectedBBox bbox, String label, Scalar selectedColor) {
    Rect box = new Rect(bbox.left, bbox.top, bbox.right - bbox.left + 1, bbox.bottom - bbox.top + 1);

    // Draw the bounding box
    Point x_y = new Point(box.x, box.y);
    Point w_h = new Point(box.x + box.width, box.y + box.height);
    Imgproc.rectangle(frame, w_h, x_y, selectedColor, 1);

    int[] baseLine = { 0 };
    Size labelSize = Imgproc.getTextSize(label, Imgproc.FONT_HERSHEY_SIMPLEX, 0.5, 1, baseLine);
    box.y = Integer.max(box.y, (int) labelSize.height);

    // Draw background for text
    Imgproc.rectangle(frame,
            new Point(box.x, box.y - Math.round(1.5 * labelSize.height)),
            new Point(box.x + Math.round(1.5 * labelSize.width), box.y + baseLine[0]),
            new Scalar(255, 255, 255), Imgproc.FILLED);
    Imgproc.putText(frame, label, new Point(box.x, box.y),
            Imgproc.FONT_HERSHEY_SIMPLEX, 0.5, selectedColor, 1);
}
```

The output is slightly different from the previous one:

10.6 Object Detection in Video

The detectObjectOnVideo method detects objects in a video using the same detection technique. The key difference is that the video is processed frame by frame: each frame is read into a Mat object, and objects are then detected in that frame individually.

```java
public void detectObjectOnVideo(
        String winName,
        Net dnnNet,
        Map<String, Mat> outputLayers,
        String mediaFile,
        double threshold,
        boolean isSaveOuput,
        boolean isMaskShading) {

    HighGui.namedWindow(winName, HighGui.WINDOW_NORMAL);

    Mat frame = new Mat();
    VideoCapture capture = new VideoCapture(mediaFile);
    if (capture.open(mediaFile)) {
        capture.read(frame);
        HighGui.imshow(winName, frame);
    } else {
        System.out.println("Capture is not opened!");
        return;
    }

    VideoWriter vwriter = new VideoWriter();
    Mat detectedFrame = new Mat();
    String outputFile = mediaFile.substring(0, mediaFile.length() - 4) + "_yolov4_out.avi";
    Mat detection;
    Mat detectionMasks;

    while (capture.isOpened()) {
        if (HighGui.waitKey(100) == 27) { // ESC key to exit
            System.out.println("[ESC]Done processing !!!");
            HighGui.waitKey(1000);
            break;
        }

        // Get the next frame from the video.
        capture.read(frame);

        // Stop when the end of the video is reached.
        if (frame.empty()) {
            System.out.println("Done processing !!!");
            System.out.println("Output file is stored as " + outputFile);
            HighGui.waitKey(3000);
            if (vwriter.isOpened()) {
                vwriter.release();
            }
            break;
        }

        Map<String, Object> resultMap = resizeMatImage(frame, BLOB_MASK_RCNN_SIZE);
        Mat resizedMat = (Mat) resultMap.get("mat");
        Rect padding = (Rect) resultMap.get("padding");
        float scale = (float) resultMap.get("scale");

        // Create a 4D blob from a frame.
        Mat blob = Dnn.blobFromImage(resizedMat, 1.0, BLOB_MASK_RCNN_SIZE, Scalar.all(0), true, false);

        // Set the input to the network.
        dnnNet.setInput(blob);

        // Run inference once per output layer and store each result under its layer name.
        for (Iterator<String> iter = outputLayers.keySet().iterator(); iter.hasNext();) {
            String key = iter.next();
            Mat dtectMat = dnnNet.forward(key);
            outputLayers.put(key, dtectMat);
        }

        resizedMat.release();
        blob.release();

        detection = outputLayers.get("detection_out_final");
        detectionMasks = outputLayers.get("detection_masks");

        int maxObjCnt = detection.size(2);
        int attrSize = detection.size(3);
        detection = detection.reshape(1, (int) detection.total() / attrSize);

        // Copy the detection Mat to a 1D array.
        // Each row: [batchId, classId, confidence, left, top, right, bottom]
        int cols = detection.cols();
        float[] data = new float[(int) (detection.total())];
        detection.get(0, 0, data);

        for (int i = 0; i < maxObjCnt; i++) {
            // Extract the confidence value.
            float confidence = data[i * cols + 2];
            if (confidence > (threshold <= 0 ? DEFAULT_THRESHOLD : threshold)) {
                int classId = (int) data[i * cols + 1];

                // Convert the normalized coordinates to a bounding box on the original frame.
                int left = (int) ((data[i * cols + 3] * MASK_RCNN_SIZE - padding.x) / scale);
                int top = (int) ((data[i * cols + 4] * MASK_RCNN_SIZE - padding.y) / scale);
                int right = (int) ((data[i * cols + 5] * MASK_RCNN_SIZE - padding.x) / scale);
                int bottom = (int) ((data[i * cols + 6] * MASK_RCNN_SIZE - padding.y) / scale);
                DetectedBBox bbox = new DetectedBBox(confidence, classId, left, top, right, bottom);

                if (isMaskShading) {
                    // 1. Retrieve the mask data of the detected class.
                    Mat rowMat = detectionMasks.row(i).col(bbox.classId);

                    // 2. Set the size of the mask and create the object mask.
                    Mat objectMask = new Mat(rowMat.size(2), rowMat.size(3), CvType.CV_32F);
                    rowMat.reshape(1, (int) rowMat.total())
                            .copyTo(objectMask.reshape(1, (int) rowMat.size(2)));
                    drawMaskedBBox(frame, bbox, objectMask, confidence);
                    objectMask.release();
                    rowMat.release();
                } else {
                    drawBoxSimple(frame, bbox, confidence);
                }
            }
        }
        detection.release();

        // Put efficiency information. getPerfProfile returns the overall time for
        // inference (t) and the timings for each of the layers (in layersTimes).
        MatOfDouble layersTimes = new MatOfDouble();
        double freq = Core.getTickFrequency() / 1000;
        double t = dnnNet.getPerfProfile(layersTimes) / freq;
        layersTimes.release();
        String label = String.format("%s, Inference time for a frame : %f ms", modelName, t);
        Imgproc.putText(frame, label, new Point(0, 15),
                Imgproc.FONT_HERSHEY_SIMPLEX, 0.5, new Scalar(0, 0, 0), 2);

        HighGui.imshow(winName, frame);

        if (isSaveOuput) {
            // Open the writer once, when the first frame is about to be saved.
            if (!vwriter.isOpened()) {
                double fps = capture.get(Videoio.CAP_PROP_FPS);
                if (fps == 0D) {
                    continue;
                }
                Size size = new Size(capture.get(Videoio.CAP_PROP_FRAME_WIDTH),
                        capture.get(Videoio.CAP_PROP_FRAME_HEIGHT));
                int fourccMjpg = VideoWriter.fourcc('M', 'J', 'P', 'G'); // Motion JPEG format
                isSaveOuput = vwriter.open(outputFile, fourccMjpg, fps, size, true);
                if (!isSaveOuput) {
                    JOptionPane.showMessageDialog(null,
                            String.format("Can not open the video file[sz:%s, fps:%f]", size.toString(), fps),
                            "error", JOptionPane.INFORMATION_MESSAGE);
                    break;
                }
            }
            frame.convertTo(detectedFrame, CvType.CV_8U);
            vwriter.write(detectedFrame);
        }
    }

    // Explicit termination of window
    HighGui.destroyAllWindows(); // close all windows
    System.exit(0); // exit
}
```
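The bounding box conversion in this method maps normalized coordinates from the padded network input back to pixels of the original frame: multiply by the network input size, subtract the padding offset, then divide by the resize scale. The sketch below isolates that formula in plain Java. The concrete numbers (a 2048 × 1152 frame scaled by 0.5 into a 1024 × 1024 input with 224 pixels of vertical padding) are assumptions chosen for illustration, and the class name is hypothetical.

```java
// Illustrative helper (hypothetical name): the same arithmetic as
// (data[...] * MASK_RCNN_SIZE - padding) / scale, without OpenCV types.
final class LetterboxCoordsSketch {

    // Maps a normalized coordinate (0..1 within the padded network input)
    // back to a pixel position in the original frame.
    static int toFramePixel(double normalized, int netSize, int paddingOffset, double scale) {
        return (int) ((normalized * netSize - paddingOffset) / scale);
    }

    public static void main(String[] args) {
        int netSize = 1024;  // assumed network input size
        double scale = 0.5;  // assumed: 2048 x 1152 frame resized to 1024 x 576
        int padX = 0;        // no horizontal padding in this example
        int padY = 224;      // (1024 - 576) / 2 rows of padding above the image

        int left = toFramePixel(0.25, netSize, padX, scale);
        int top = toFramePixel(0.5, netSize, padY, scale);
        int right = toFramePixel(0.75, netSize, padX, scale);
        int bottom = toFramePixel(0.75, netSize, padY, scale);
        System.out.printf("left=%d top=%d right=%d bottom=%d%n", left, top, right, bottom);
    }
}
```

In this example the normalized vertical center (0.5) maps to row 576, which is the vertical center of the 1152-pixel-high frame, so the padding offset is being removed as intended.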

Next, you can refer to the following skeleton code to extract frames from a video file, one by one.

```java
public void detectObjectOnVideo(
        String winName,
        Net dnnNet,
        Map<String, Mat> outputLayers,
        String mediaFile,
        double threshold,
        boolean isSaveOuput,
        boolean isMaskShading) {
    ...
    HighGui.namedWindow(winName, HighGui.WINDOW_NORMAL);

    Mat frame = new Mat();
    VideoCapture capture = new VideoCapture(mediaFile);
    if (capture.open(mediaFile)) {
        capture.read(frame);
        HighGui.imshow(winName, frame);
    } else {
        System.out.println("Capture is not opened!");
        return;
    }

    VideoWriter vwriter = new VideoWriter();
    ...
    while (capture.isOpened()) {
        if (HighGui.waitKey(100) == 27) { // ESC key to exit
            ...
            HighGui.waitKey(1000);
            break;
        }

        // Get the next frame from the video.
        capture.read(frame);

        // Stop when the end of the video is reached.
        if (frame.empty()) {
            HighGui.waitKey(3000);
            if (vwriter.isOpened()) {
                vwriter.release();
            }
            break;
        }
        ...
        HighGui.imshow(winName, frame);
        ...
    }

    HighGui.destroyAllWindows();
    System.exit(0);
}
```

The result is:

a. Video rendering with OpenCV, displaying labels, bounding boxes, and masks.

b. Video rendering with OpenCV, displaying labels and bounding boxes without masks.

10.6.1 Threshold Adjustment

In the figure below, a bird appears as a false detection with a confidence score of approximately 0.65. Raising the threshold from 0.5 to 0.655 filters it out, as shown in the following figure.

Now, we have a clean result that no longer contains the falsely detected object, the bird.
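The effect of the threshold can be sketched in plain Java. The confidence values below are made up for illustration (one weak detection at 0.65 among stronger ones), `DEFAULT_THRESHOLD = 0.5` is an assumed value, and the class name is hypothetical. The fallback for non-positive thresholds mirrors the expression `threshold <= 0 ? DEFAULT_THRESHOLD : threshold` used in the detection loop.

```java
// Illustrative helper (hypothetical name): counts detections that survive a threshold.
final class ThresholdFilterSketch {

    // Assumed default; the article's actual constant value is not shown here.
    static final double DEFAULT_THRESHOLD = 0.5;

    static int countKept(double[] confidences, double threshold) {
        // A non-positive threshold falls back to the default, as in the detection loop.
        double effective = threshold <= 0 ? DEFAULT_THRESHOLD : threshold;
        int kept = 0;
        for (double confidence : confidences) {
            if (confidence > effective) {
                kept++;
            }
        }
        return kept;
    }

    public static void main(String[] args) {
        double[] confidences = { 0.98, 0.65, 0.91 }; // hypothetical scores
        System.out.println(countKept(confidences, 0.5));   // the weak detection is kept
        System.out.println(countKept(confidences, 0.655)); // the weak detection is dropped
    }
}
```

A higher threshold removes weak detections such as the bird, but it can also discard true detections with modest scores, so the value is worth tuning per video.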

10.6.2 Check Out of Range ROI

We also need to check whether the ROI (Region of Interest) is out of range when drawing masked bounding boxes. If an out-of-range ROI is not checked, you may encounter an error like this:

```
Exception in thread "main" CvException [org.opencv.core.CvException: cv::Exception: OpenCV(4.10.0) C:\GHA-OCV-1\_work\ci-gha-workflow\ci-gha-workflow\opencv\modules\core\src\matrix.cpp:767: error: (-215:Assertion failed) 0 <= _rowRange.start && _rowRange.start <= _rowRange.end && _rowRange.end <= m.rows in function 'cv::Mat::Mat'
```

The following getMrcnnROI method can eliminate this error:

```java
public static Mat getMrcnnROI(Mat frame, DetectedBBox mybbox) {
    int frameHeight = frame.rows();
    int frameWidth = frame.cols();

    Rect bbox = new Rect(mybbox.left, mybbox.top,
            mybbox.right - mybbox.left + 1, mybbox.bottom - mybbox.top + 1);

    // Adjust coordinates to ensure the ROI stays within the frame.
    int top = Math.max(0, Math.min(bbox.y, frameHeight - 1));
    int bottom = Math.max(0, Math.min(bbox.y + bbox.height, frameHeight));
    int left = Math.max(0, Math.min(bbox.x, frameWidth - 1));
    int right = Math.max(0, Math.min(bbox.x + bbox.width, frameWidth));

    // Validate the ROI, ensuring the width and height are greater than 0.
    if (top >= bottom || left >= right) {
        System.err.println("Invalid ROI!");
        return new Mat(); // return an empty Mat
    }

    // Return the safe sub-matrix.
    return frame.submat(top, bottom, left, right);
}
```
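The clamping arithmetic in getMrcnnROI can be checked without OpenCV. The sketch below repeats the same `Math.max`/`Math.min` steps on plain integers and returns the `{top, bottom, left, right}` range that would be passed to `submat`, or `null` where the original method returns an empty Mat. The class name, the frame size, and the sample boxes are assumptions for illustration.

```java
// Illustrative helper (hypothetical name): the clamping logic of getMrcnnROI on plain ints.
final class RoiClampSketch {

    // Returns {top, bottom, left, right} clamped to the frame, or null when
    // the clamped region is empty.
    static int[] clamp(int left, int top, int right, int bottom, int frameWidth, int frameHeight) {
        int width = right - left + 1;
        int height = bottom - top + 1;

        int t = Math.max(0, Math.min(top, frameHeight - 1));
        int b = Math.max(0, Math.min(top + height, frameHeight));
        int l = Math.max(0, Math.min(left, frameWidth - 1));
        int r = Math.max(0, Math.min(left + width, frameWidth));

        if (t >= b || l >= r) {
            return null; // corresponds to the empty Mat in getMrcnnROI
        }
        return new int[] { t, b, l, r };
    }

    public static void main(String[] args) {
        // Box sticking out of the top-right corner of a 640 x 480 frame.
        int[] roi = clamp(600, -20, 700, 100, 640, 480);
        System.out.println(java.util.Arrays.toString(roi));

        // Box entirely above the frame: no valid region remains.
        System.out.println(clamp(10, -100, 50, -50, 640, 480) == null);
    }
}
```

Callers of the real method should check for the empty Mat before blending, since an empty ROI cannot be combined with the mask.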

11. Object detection by YOLO Model

In this section, we explore the object detection technique using the YOLO model in our OpenCV Java Object Detection.

Similar to the Mask R-CNN approach, detection can be performed using either the detectObjectOnImage or detectObjectOnVideo method, depending on the mediaType value provided by the user, which must be either ‘Image’ or ‘Video’.

11.1 User Input Values

The user fills in the following information. For example, select ‘YOLO’, then ‘Image’ or ‘Video’, and then either ‘GPU’ or ‘CPU’ in the respective combo boxes. Depending on the media type (‘Image’ or ‘Video’), choose the appropriate file for ‘InFile’.

Description of the components:

- Model: The file path for `yolov4.weights`. You can select this file by clicking the Find button.

- Config: The file path for `yolov4.cfg`. You can select this file by clicking the Find button.

- InFile: The path to the media file. The type (image or video) depends on the selection made in the first combo box.

- Save Output: A flag that determines whether to save the result. The output will be saved in the `out` folder within the directory of the selected image or video file.

- Shading Mask: Disabled (applicable only to the Mask R-CNN model).

11.2 Explanation of Detection Results

We can extract a blob using the blobFromImage method, which serves as an argument for the setInput method of the CNN network.

```java
Mat blob = Dnn.blobFromImage(frame, 1 / 255.0, BLOB_YOLO_V4_SIZE,
        new Scalar(new double[] { 0.0, 0.0, 0.0 }), true, false);
dnnNet.setInput(blob);
blob.release();
```
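With a scale factor of `1 / 255.0`, a zero mean, and `swapRB` set to `true`, `blobFromImage` turns 8-bit BGR pixels into float RGB values in the range 0 to 1 and stores them plane by plane (all red values, then all green, then all blue). The plain-Java sketch below illustrates only that value conversion and memory layout on a tiny 2 × 1 image; it does not resize or crop, and the class and method names are hypothetical.

```java
// Illustrative helper (hypothetical name): scaling, BGR-to-RGB swap, and planar
// layout as performed by blobFromImage, shown on plain arrays.
final class BlobLayoutSketch {

    // bgr holds interleaved 8-bit values [B, G, R, B, G, R, ...] for width * height pixels.
    static float[] toPlanarRgb(int[] bgr, int width, int height, double scale) {
        int plane = width * height;
        float[] blob = new float[3 * plane];
        for (int p = 0; p < plane; p++) {
            int b = bgr[3 * p];
            int g = bgr[3 * p + 1];
            int r = bgr[3 * p + 2];
            blob[p] = (float) (r * scale);             // plane 0: red
            blob[plane + p] = (float) (g * scale);     // plane 1: green
            blob[2 * plane + p] = (float) (b * scale); // plane 2: blue
        }
        return blob;
    }

    public static void main(String[] args) {
        // Two pixels: pure blue, then (B=0, G=128, R=255).
        int[] bgr = { 255, 0, 0, 0, 128, 255 };
        float[] blob = toPlanarRgb(bgr, 2, 1, 1 / 255.0);
        System.out.println(java.util.Arrays.toString(blob));
    }
}
```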

Then, the forward method processes the blob and returns a Mat containing information about the detected objects based on the outputLayers names.

```java
for (Iterator<String> iter = outputLayers.keySet().iterator(); iter.hasNext();) {
    String key = iter.next();
    Mat dtectMat = dnnNet.forward(key);
    outputLayers.put(key, dtectMat);
}
```
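The loop writes each result back into the map it is iterating over. With the standard `HashMap` and insertion-ordered `LinkedHashMap`, replacing the value of an existing key is not a structural modification, so the iteration stays valid. An alternative that makes this intent explicit is to iterate over `entrySet()` and call `setValue`. The sketch below shows that pattern with `String` values standing in for `Mat` and a hypothetical `fakeForward` method standing in for `dnnNet.forward`.

```java
// Illustrative helper (hypothetical names): filling a map of output layers in place.
final class OutputLayerLoopSketch {

    // Stand-in for dnnNet.forward(layerName); returns a description instead of a Mat.
    static String fakeForward(String layerName) {
        return "result of " + layerName;
    }

    static void fill(java.util.Map<String, String> outputLayers) {
        for (java.util.Map.Entry<String, String> entry : outputLayers.entrySet()) {
            // setValue replaces the value without adding or removing keys.
            entry.setValue(fakeForward(entry.getKey()));
        }
    }

    public static void main(String[] args) {
        java.util.Map<String, String> layers = new java.util.LinkedHashMap<>();
        layers.put("yolo_139", null);
        layers.put("yolo_150", null);
        layers.put("yolo_161", null);
        fill(layers);
        System.out.println(layers);
    }
}
```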

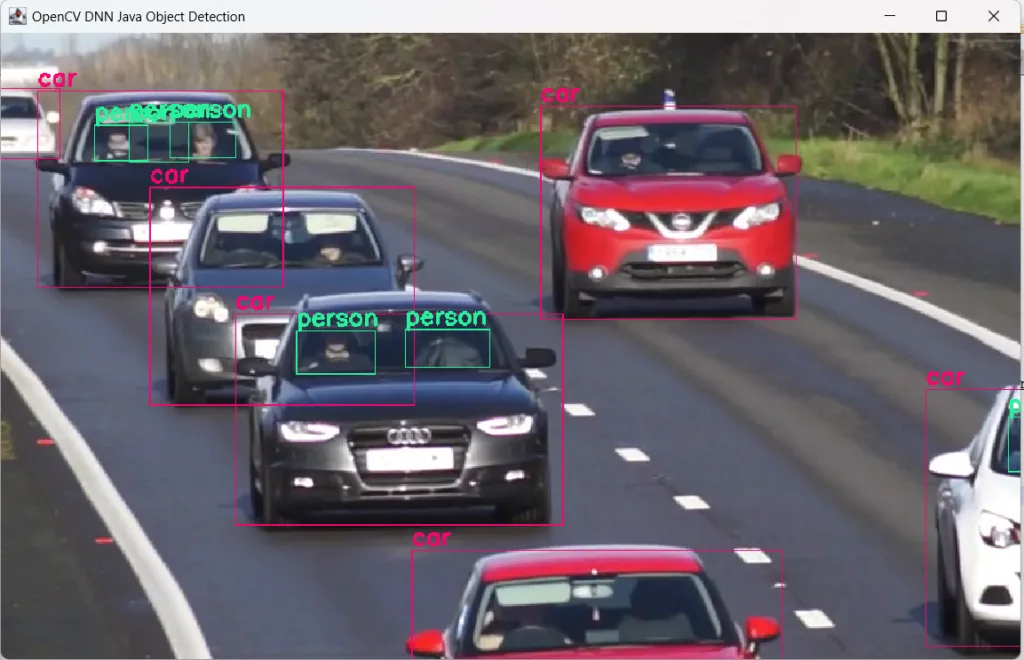

The YOLO model produces three output layers containing detection information. The following table describes the output layers returned by the code above.

| Output Layer | Output Shape (Predicted BBoxes × Values) | Role |
|---|---|---|
| yolo_139 | 17328 × 85 | Small-object detection (finest resolution). |
| yolo_150 | 4332 × 85 | Medium-object detection. |
| yolo_161 | 1083 × 85 | Large-object detection (lowest resolution). |
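The row counts in the table are consistent with a 608 × 608 network input, three anchor boxes per grid cell, and strides of 8, 16, and 32 for the three layers. These values are assumptions inferred from the numbers rather than taken from the article's configuration; the sketch below only checks the arithmetic, and the class name is hypothetical.

```java
// Illustrative helper (hypothetical name): number of predicted boxes per YOLO output layer.
final class YoloRowCountSketch {

    // rows = (inputSize / stride)^2 grid cells * anchors per cell
    static int rows(int inputSize, int stride, int anchorsPerCell) {
        int cellsPerSide = inputSize / stride;
        return cellsPerSide * cellsPerSide * anchorsPerCell;
    }

    public static void main(String[] args) {
        int inputSize = 608; // assumed network input size
        int anchors = 3;     // assumed anchors per grid cell
        System.out.println("yolo_139: " + rows(inputSize, 8, anchors));
        System.out.println("yolo_150: " + rows(inputSize, 16, anchors));
        System.out.println("yolo_161: " + rows(inputSize, 32, anchors));
    }
}
```

A smaller stride produces a denser grid, which is why the first layer has the most rows and is the one suited to small objects.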

Each row of the Mat represents one predicted bounding box, and its 85 columns hold the bounding box (4 values), the objectness score (1 value), and the class scores (80 values). The three output layers, yolo_139, yolo_150, and yolo_161, provide these predictions at three different resolutions.
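As a rough illustration of how one such row can be read, the sketch below assumes the usual Darknet layout in OpenCV DNN: normalized center x, center y, width, and height in columns 0 to 3, the objectness score in column 4, and the class scores from column 5 onward. The class name, the sample row, and the 640 × 480 frame size are hypothetical.

```java
// Illustrative helper (hypothetical name): decoding one 85-value YOLO output row.
final class YoloRowSketch {

    // Index of the highest class score, relative to the first class column (5).
    static int bestClass(float[] row) {
        int best = 5;
        for (int c = 6; c < row.length; c++) {
            if (row[c] > row[best]) {
                best = c;
            }
        }
        return best - 5;
    }

    // Converts the normalized center/size values to {left, top, width, height} in pixels.
    static int[] toBox(float[] row, int frameWidth, int frameHeight) {
        int centerX = (int) (row[0] * frameWidth);
        int centerY = (int) (row[1] * frameHeight);
        int width = (int) (row[2] * frameWidth);
        int height = (int) (row[3] * frameHeight);
        return new int[] { centerX - width / 2, centerY - height / 2, width, height };
    }

    public static void main(String[] args) {
        float[] row = new float[85];
        row[0] = 0.5f;  // center x
        row[1] = 0.5f;  // center y
        row[2] = 0.25f; // width
        row[3] = 0.5f;  // height
        row[4] = 0.9f;  // objectness
        row[5 + 16] = 0.8f; // score of class index 16

        System.out.println("class index: " + bestClass(row));
        System.out.println(java.util.Arrays.toString(toBox(row, 640, 480)));
    }
}
```

In a full pipeline, rows whose best class score falls below the threshold are skipped, and overlapping boxes are usually merged with non-maximum suppression before drawing.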

Now, we are ready to draw the detections on the original image.

11.3 Image Detection Method

If the user selects ‘Image’ from the combo box, the detectObjectOnImage method is activated. This method performs object detection on the image using the OpenCV DNN module.

The signature of the method:

```java
public void detectObjectOnImage(
        String winName, Net dnnNet, Map<String, Mat> outputLayers,
        String mediaFile, double threshold,
        boolean isSaveOuput, boolean isMaskShading);
```

The following describes the method parameters:

- winName: The title of the activated window.

- dnnNet: The CNN model returned from the `readDNNetByModelName` method.

- outputLayers: The output layers from the `getMaskRCnnOutputLayer` method.

- mediaFile: The file path of the image.

- isSaveOutput: A flag indicating whether to save the image.

- isMaskShading: Not available (Mask R-CNN only).

The full source code of the method: