Python BeautifulSoup Example Cheat Sheet

1. Introduction

Beautiful Soup is a popular Python library for web scraping, that is, extracting data from websites. It parses HTML and XML documents into a tree that is easy to navigate and search. Beautiful Soup was created by Leonard Richardson, who released the first version in 2004 and continues to maintain the project. This article is a cheat sheet of Beautiful Soup examples.

Key features of Beautiful Soup include:

- Parsing HTML and XML documents: Beautiful Soup can parse HTML and XML documents with ease, allowing users to navigate, search, and modify the parsed data.

- NavigableString and Tag objects: Beautiful Soup uses two primary objects, NavigableString and Tag, to represent elements within a document. NavigableString represents a piece of text within a Tag, while Tag represents an individual HTML or XML element.

- Parsing options: Beautiful Soup supports several parsers, such as lxml, html5lib, and Python's built-in html.parser. Choosing a parser lets you trade off speed, leniency with malformed markup, and external dependencies.

- Searching and filtering: Beautiful Soup provides several methods for searching and filtering elements within a document, such as find_all(), find(), and select(). These methods make locating specific elements and data within a parsed document easy.

- Modifying parsed data: Beautiful Soup allows users to modify the parsed data, including adding, removing, and changing elements, attributes, and text within a document.
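As a minimal sketch of the Tag and NavigableString objects described above, the snippet below parses a small hand-written HTML string (an assumption made for illustration, not taken from a real page) and inspects the types of a paragraph's children. It uses the built-in html.parser so that no extra parser needs to be installed.

```python
from bs4 import BeautifulSoup
from bs4.element import NavigableString, Tag

# Hypothetical sample markup, used only for illustration
html_doc = '<p class="intro">Hello, <b>world</b>!</p>'
soup = BeautifulSoup(html_doc, "html.parser")

paragraph = soup.find("p")           # a Tag object
children = list(paragraph.children)  # text nodes and nested tags, in order

# Record the class name of each child node
kinds = [type(child).__name__ for child in children]
bold_text = paragraph.b.string       # the NavigableString inside <b>

print(kinds)
print(paragraph["class"], bold_text)
```

Note that the class attribute comes back as a list, because an HTML element can carry several classes.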

There are several alternatives to Beautiful Soup for web scraping in Python, each with its strengths and use cases. Here are some popular ones:

- lxml

- Scrapy

- Selenium

- Requests-HTML

- PyQuery

Each of these tools has its own set of features and is suited to different types of web scraping tasks. For example, Scrapy is great for large projects, Selenium is essential for scraping dynamic content, and lxml is preferred for its speed in parsing.

The next section presents the Beautiful Soup cheat sheet.

2. BeautifulSoup Example Cheat Sheet

BeautifulSoup is a Python library used for web scraping to parse HTML and XML documents. Here is a cheat sheet to help you get started with BeautifulSoup:

2.1 Importing BeautifulSoup

```python
from bs4 import BeautifulSoup
```

2.2 Creating a Soup Object

```python
# Parse with lxml (requires the lxml package)
soup = BeautifulSoup(html_doc, 'lxml')

# Or parse with Python's built-in parser
soup = BeautifulSoup(html_doc, 'html.parser')
```

2.3 Modifying the Tree

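```python
# Find the first <p> element
element = soup.find('p')

# Replace its text and set its class attribute
element.string = "New text"
element['class'] = 'new-class'

# Remove the element from the tree entirely
element.decompose()
```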
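The modification calls above can be tried end to end on a small document. The following is a sketch under the assumption of a hand-written HTML string; it also shows `new_tag()` and `append()` for adding an element, which the snippet above does not cover.

```python
from bs4 import BeautifulSoup

# Hypothetical sample markup, used only for illustration
html_doc = '<div><p class="old">Old text</p><p id="ad">Remove me</p></div>'
soup = BeautifulSoup(html_doc, "html.parser")

# Change the text and the class of the first paragraph
first = soup.find("p")
first.string = "New text"
first["class"] = "new-class"

# Remove the second paragraph from the tree
soup.find(id="ad").decompose()

# Create a new <a> element and append it to the <div>
new_link = soup.new_tag("a", href="https://example.com")
new_link.string = "a link"
soup.div.append(new_link)

print(soup)
```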

2.4 Beautifulsoup Examples

```python
soup = BeautifulSoup(html_doc, 'lxml')

# Page title
title = soup.title.string

# All links on the page
links = soup.find_all('a')
for link in links:
    print(link.get('href'))

# A single element looked up by its id
link2 = soup.find(id='link2')
link2_href = link2['href']

# Text of every paragraph
paragraphs = soup.find_all('p')
for p in paragraphs:
    print(p.get_text())
```

2.5 Additional Beautifulsoup Tips

- Parsing Options: BeautifulSoup supports different parsers (html.parser, lxml, html5lib). Choose one based on your needs.

- Error Handling: BeautifulSoup is designed to handle imperfect HTML and will try to parse even malformed documents.

- Performance: For large documents, consider using lxml for faster parsing.
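The tips above can be combined in a small helper. The sketch below, with a hypothetical function name `make_soup`, tries the lxml parser first and falls back to the built-in html.parser when lxml is not installed, then parses a deliberately malformed fragment to show that unclosed tags are still handled.

```python
from bs4 import BeautifulSoup, FeatureNotFound


def make_soup(markup, preferred="lxml"):
    """Parse with the preferred parser, falling back to the built-in one."""
    try:
        return BeautifulSoup(markup, preferred)
    except FeatureNotFound:
        # Raised when the requested parser library is not installed
        return BeautifulSoup(markup, "html.parser")


# Malformed input: neither <p> nor <b> is closed
soup = make_soup("<p>Unclosed <b>bold")
paragraph_text = soup.p.get_text()

print(paragraph_text)
```

Different parsers may repair broken markup in different ways, so it is worth fixing one parser per project rather than relying on whichever happens to be installed.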

This cheat sheet provides a basic overview of BeautifulSoup’s capabilities.

2.6 Setting up BeautifulSoup

To use Beautiful Soup, first install the requests, beautifulsoup4, and lxml libraries with pip, ideally inside a virtual environment:

```shell
python3 -m venv beautiful_soup
source beautiful_soup/bin/activate
pip3 install -r requirements.txt
```

The dependencies are listed in the file requirements.txt:

```text
beautifulsoup4==4.12.3
certifi==2024.7.4
charset-normalizer==3.3.2
idna==3.7
lxml==5.2.2
requests==2.32.3
soupsieve==2.5
urllib3==2.2.2
```

2.7 Load and Parse a Webpage with the Beautiful Soup and Requests Modules

Here’s a simple example of how to use Beautiful Soup to parse an HTML document and extract information:

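```python
from bs4 import BeautifulSoup as bsoup
import requests as reqs

# URL to scrape (assumed here from the sample output below,
# which comes from Google's home page)
url = 'https://www.google.com'

# Fetch the page and stop early on HTTP errors
resp = reqs.get(url, timeout=10)
resp.raise_for_status()
html_doc = resp.content

# Parse the HTML with the lxml parser
beautsoup = bsoup(html_doc, 'lxml')

# Extract the page title and all anchor tags
content_headline = beautsoup.title.string
content_links = beautsoup.find_all('a')

print(content_headline)
for content_link in content_links:
    print(content_link.get('href'))
```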

You can use this command below to execute the code within the Python environment.

```shell
python3 BeautifulSoup.py
```

The output of the above-executed command will be:

```text
(beautiful_soup) bhagvanarch@Bhagvans-MacBook-Air beautiful_soup_14_jul % python3 BeautifulSoup.py
Google
https://www.google.com/imghp?hl=en&tab=wi
https://maps.google.co.in/maps?hl=en&tab=wl
https://play.google.com/?hl=en&tab=w8
https://www.youtube.com/?tab=w1
https://news.google.com/?tab=wn
https://mail.google.com/mail/?tab=wm
https://drive.google.com/?tab=wo
https://www.google.co.in/intl/en/about/products?tab=wh
http://www.google.co.in/history/optout?hl=en
/preferences?hl=en
https://accounts.google.com/ServiceLogin?hl=en&passive=true&continue=https://www.google.com/&ec=GAZAAQ
/advanced_search?hl=en-IN&authuser=0
https://www.google.com/setprefs?sig=0_F-6Lw92bnZbVY-WPzZbv3uT1P_k%3D&hl=hi&source=homepage&sa=X&ved=0ahUKEwiv98b8x6aHAxXCrZUCHQKNCNoQ2ZgBCAY
https://www.google.com/setprefs?sig=0_F-6Lw92bnZbVY-WPzZbv3uT1P_k%3D&hl=bn&source=homepage&sa=X&ved=0ahUKEwiv98b8x6aHAxXCrZUCHQKNCNoQ2ZgBCAc
https://www.google.com/setprefs?sig=0_F-6Lw92bnZbVY-WPzZbv3uT1P_k%3D&hl=te&source=homepage&sa=X&ved=0ahUKEwiv98b8x6aHAxXCrZUCHQKNCNoQ2ZgBCAg
https://www.google.com/setprefs?sig=0_F-6Lw92bnZbVY-WPzZbv3uT1P_k%3D&hl=mr&source=homepage&sa=X&ved=0ahUKEwiv98b8x6aHAxXCrZUCHQKNCNoQ2ZgBCAk
https://www.google.com/setprefs?sig=0_F-6Lw92bnZbVY-WPzZbv3uT1P_k%3D&hl=ta&source=homepage&sa=X&ved=0ahUKEwiv98b8x6aHAxXCrZUCHQKNCNoQ2ZgBCAo
https://www.google.com/setprefs?sig=0_F-6Lw92bnZbVY-WPzZbv3uT1P_k%3D&hl=gu&source=homepage&sa=X&ved=0ahUKEwiv98b8x6aHAxXCrZUCHQKNCNoQ2ZgBCAs
https://www.google.com/setprefs?sig=0_F-6Lw92bnZbVY-WPzZbv3uT1P_k%3D&hl=kn&source=homepage&sa=X&ved=0ahUKEwiv98b8x6aHAxXCrZUCHQKNCNoQ2ZgBCAw
https://www.google.com/setprefs?sig=0_F-6Lw92bnZbVY-WPzZbv3uT1P_k%3D&hl=ml&source=homepage&sa=X&ved=0ahUKEwiv98b8x6aHAxXCrZUCHQKNCNoQ2ZgBCA0
https://www.google.com/setprefs?sig=0_F-6Lw92bnZbVY-WPzZbv3uT1P_k%3D&hl=pa&source=homepage&sa=X&ved=0ahUKEwiv98b8x6aHAxXCrZUCHQKNCNoQ2ZgBCA4
/intl/en/ads/
http://www.google.co.in/services/
/intl/en/about.html
https://www.google.com/setprefdomain?prefdom=IN&prev=https://www.google.co.in/&sig=K_E0keZydLUosGfUDX3HMhNGX8MB8%3D
/intl/en/policies/privacy/
/intl/en/policies/terms/
```
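Several of the links in the output are relative (for example /preferences?hl=en), so they cannot be requested as they stand. A minimal sketch of resolving them against the page URL with `urljoin` follows; the helper name `absolute_links` and the sample HTML are assumptions for illustration, with the HTML string standing in for the downloaded page content.

```python
from urllib.parse import urljoin

from bs4 import BeautifulSoup


def absolute_links(html, base_url):
    """Return every href in the document as an absolute URL."""
    soup = BeautifulSoup(html, "html.parser")
    links = []
    # href=True skips <a> tags that have no href attribute at all
    for anchor in soup.find_all("a", href=True):
        links.append(urljoin(base_url, anchor["href"]))
    return links


# Hypothetical stand-in for the downloaded page content
sample_html = """
<a href="/preferences?hl=en">Settings</a>
<a href="https://news.google.com/?tab=wn">News</a>
<a name="top">no href here</a>
"""

resolved = absolute_links(sample_html, "https://www.google.com/")
for link in resolved:
    print(link)
```

Filtering with `href=True` also avoids printing None for anchors that carry no link.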

2.8 The Basics of Web Scraping with BeautifulSoup

BeautifulSoup lets you parse HTML and XML documents and extract data from them. This section is a step-by-step guide to getting started with web scraping using BeautifulSoup.

The preliminary steps are shown in the previous sections: install the beautifulsoup4 and requests libraries with pip, import them, and use requests to fetch the content of the webpage you want to scrape. For example, let’s scrape a simple webpage:

Step 1: Parse the HTML Content

Create a BeautifulSoup object to parse the HTML content. You can choose different parsers (html.parser, lxml, html5lib), but lxml is recommended for its speed and features:

```python
soup = BeautifulSoup(html_content, 'lxml')
```

Step 2: Navigate and Search the Parse Tree

BeautifulSoup provides several methods to navigate and search the parse tree. Here are some common tasks:

Find Elements by Tag Name

```python
# First matching element, or None if there is no match
first_h1 = soup.find('h1')

# List of all matching elements
all_p_tags = soup.find_all('p')
```

Find Elements by Class or ID

```python
element_by_id = soup.find(id='main')

# class_ has a trailing underscore because class is a Python keyword
elements_by_class = soup.find_all(class_='example-class')
```

Extract Text from Elements

```python
text = first_h1.text
```

Extract Attributes from Elements

```python
first_a_tag = soup.find('a')
href = first_a_tag['href']
```

Using CSS Selectors

```python
elements = soup.select('div.example-class > a')
```
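The search methods above can be exercised together on one small document. This is a sketch that assumes a hand-written HTML string (not a real page) and uses the built-in html.parser; it also shows that `find()` returns None when nothing matches, and how the `>` child combinator differs from a plain descendant selector.

```python
from bs4 import BeautifulSoup

# Hypothetical sample page, used only for illustration
html_content = """
<html><body>
  <h1>Site title</h1>
  <div id="main" class="example-class">
    <a href="/first">First</a>
    <p>Intro <a href="/nested">nested</a></p>
  </div>
  <p class="example-class">Footer</p>
</body></html>
"""
soup = BeautifulSoup(html_content, "html.parser")

heading = soup.find("h1").get_text(strip=True)        # text of the first <h1>
main_div = soup.find(id="main")                       # lookup by id
by_class = soup.find_all(class_="example-class")      # the <div> and the <p>
direct_links = soup.select("div.example-class > a")   # direct children only
all_links = soup.select("div.example-class a")        # any depth
missing = soup.find("table")                          # no match: None

print(heading, len(by_class), len(direct_links), len(all_links), missing)
```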

Step 3: Example: Scraping a List of Articles

Here’s a full example where we scrape a list of articles from a webpage, extracting the title and URL of each article.

```python
import requests
from bs4 import BeautifulSoup

# Placeholder URL: replace it with the page that lists the articles
url = 'https://example.com/articles'

response = requests.get(url)
soup = BeautifulSoup(response.content, 'lxml')

# Each article is assumed to live in a <div class="article">
articles = soup.find_all('div', class_='article')
for article in articles:
    title = article.find('h2').text
    article_url = article.find('a')['href']
    print(f'Title: {title}')
    print(f'URL: {article_url}')
```
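Real pages are rarely uniform, and `find()` returns None when an article has no heading or link, which would make the loop above fail. A more defensive variant could look like the sketch below; the function name `parse_articles` and the sample markup are assumptions for illustration, and the HTML is passed in as a string so the parsing logic can be tried without a network request.

```python
from bs4 import BeautifulSoup


def parse_articles(html):
    """Return a list of {'title', 'url'} dicts, skipping incomplete articles."""
    soup = BeautifulSoup(html, "html.parser")
    records = []
    for article in soup.find_all("div", class_="article"):
        heading = article.find("h2")
        link = article.find("a", href=True)
        if heading is None or link is None:
            continue  # skip articles that lack a title or a link
        records.append({
            "title": heading.get_text(strip=True),
            "url": link["href"],
        })
    return records


# Hypothetical sample markup; the second article has no link
sample = """
<div class="article"><h2>First post</h2><a href="/posts/1">Read</a></div>
<div class="article"><h2>No link here</h2></div>
<div class="article"><h2> Second post </h2><a href="/posts/2">Read</a></div>
"""

articles = parse_articles(sample)
for record in articles:
    print(record)
```

Returning a list of dictionaries, rather than printing inside the loop, makes the result easy to store or process later.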

Step 4: Handle Pagination (Optional)

If the website has multiple pages, you may need to handle pagination. This involves extracting the URL of the next page and repeating the scraping process.

```python
# Look for a "next page" link and, if present, fetch and parse that page
next_page = soup.find('a', class_='next')
if next_page:
    next_url = next_page['href']
    next_response = requests.get(next_url)
    next_soup = BeautifulSoup(next_response.content, 'lxml')
```
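To repeat this for every page, the lookup can be wrapped in a loop. The following is a sketch, not a definitive crawler: `crawl_pages` is a hypothetical helper, the "next" class name follows the snippet above, and the `fetch` argument is any function that returns the HTML for a URL. Here a dictionary of fake pages stands in for the network so the example runs offline.

```python
from urllib.parse import urljoin

from bs4 import BeautifulSoup


def crawl_pages(start_url, fetch, max_pages=10):
    """Follow 'next' links, yielding (url, soup) for each page visited."""
    url, seen = start_url, set()
    # Stop on a missing link, a repeated URL, or the page limit
    while url and url not in seen and len(seen) < max_pages:
        seen.add(url)
        soup = BeautifulSoup(fetch(url), "html.parser")
        yield url, soup
        next_link = soup.find("a", class_="next", href=True)
        # urljoin resolves relative hrefs against the current page URL
        url = urljoin(url, next_link["href"]) if next_link else None


# Hypothetical pages standing in for a real website
fake_site = {
    "https://example.com/page/1": '<h2>A</h2><a class="next" href="/page/2">Next</a>',
    "https://example.com/page/2": '<h2>B</h2><a class="next" href="3">Next</a>',
    "https://example.com/page/3": "<h2>C</h2>",
}

visited = [url for url, _ in crawl_pages("https://example.com/page/1", fake_site.__getitem__)]
print(visited)
```

With the requests library, `fetch` could be something like `lambda u: requests.get(u, timeout=10).text`. The `seen` set and `max_pages` limit guard against pagination loops.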

BeautifulSoup is a versatile tool for web scraping, enabling you to extract and manipulate data from web pages effortlessly. By sending requests, parsing HTML, and navigating the parse tree, you can gather information for various applications such as data analysis, research, and more. Always remember to respect the website’s terms of service and ethical considerations when scraping data.

3. Web Scraping in Action

Web scraping is the automated process of extracting data from websites. It involves using software to access the internet, download web pages, and parse the HTML to retrieve the required information. Web scraping can be used to gather large amounts of data quickly and efficiently, making it a valuable tool for various applications such as data analysis, market research, and competitive analysis.

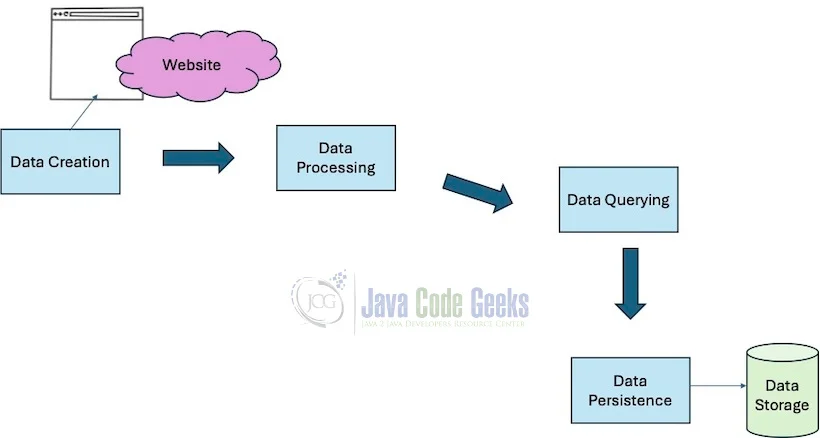

Figure 1: Web Scraping Flow Diagram

Web Scraping Components

- HTTP Requests:
  - To access web pages, web scrapers send HTTP requests to the target website’s server.
  - Common methods include GET (to retrieve data) and POST (to send data).

- HTML Parsing:
  - After fetching the web page, the content (usually in HTML format) is parsed to extract useful information.
  - Parsing involves analyzing the HTML structure and identifying the elements containing the desired data.

- Data Extraction:
  - Extracting specific data from the HTML content involves locating the relevant tags and attributes.
  - Techniques include using regular expressions, XPath, CSS selectors, and libraries like Beautiful Soup.

- Data Storage:
  - Once the data is extracted, it can be stored in various formats such as CSV, JSON, databases, or directly into data analysis tools.
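The data storage step can be sketched with the standard library alone. The example below assumes the extraction step has already produced a list of dictionaries (the records shown are made up for illustration) and serializes them as CSV and JSON text; in a real script the text would be written to files instead of printed.

```python
import csv
import io
import json

# Hypothetical records, as an extraction step might produce them
records = [
    {"title": "First post", "url": "https://example.com/posts/1"},
    {"title": "Second post", "url": "https://example.com/posts/2"},
]


def to_csv(rows, fieldnames):
    """Serialize a list of dicts to CSV text with a header row."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=fieldnames, lineterminator="\n")
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


csv_text = to_csv(records, ["title", "url"])
json_text = json.dumps(records, indent=2)

print(csv_text)
print(json_text)
```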

Tools and Libraries for Web Scraping

- Python Libraries:
  - Beautiful Soup: A library for parsing HTML and XML documents. It creates parse trees from page source code that can be used to extract data.
  - requests: A library for sending HTTP requests. It simplifies the process of sending requests and handling responses.
  - Selenium: A tool for automating web browsers. It’s useful for scraping dynamic content generated by JavaScript.
  - Scrapy: A powerful and flexible web scraping framework that allows you to build and run web spiders.

- JavaScript Libraries:
  - Cheerio: A library that provides a jQuery-like syntax for server-side HTML parsing.
  - Puppeteer: A Node library that provides a high-level API to control headless Chrome or Chromium browsers.

- Other Tools:
  - Octoparse: A visual web scraping tool that allows users to scrape data without coding.
  - ParseHub: A visual data extraction tool that can handle dynamic content.

Use Cases for Web Scraping

- Market Research:
  - Collecting data on products, prices, and reviews from e-commerce sites.

- Data Analysis:
  - Aggregating information from various sources for research and analysis.

- Competitive Analysis:
  - Monitoring competitors’ websites for changes in pricing, product offerings, and promotions.

- Content Aggregation:
  - Gathering articles, news, and updates from multiple websites for content curation.

- Lead Generation:
  - Extracting contact information from business directories or social media platforms.

Best Practices

- Respect Website’s Terms of Service:
  - Always review and comply with the terms of service and robots.txt file of the website you’re scraping.

- Avoid Overloading Servers:
  - Implement rate limiting and delays between requests to avoid overwhelming the server.

- Use Proxies and User Agents:
  - Rotate IP addresses and user-agent strings to mimic human browsing behaviour and avoid getting blocked.

- Legal Compliance:
  - Ensure that your scraping activities comply with data privacy laws and regulations.
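Two of these practices, honouring robots.txt and spacing out requests, can be sketched with the standard library. In the example below the robots.txt rules, the `example-bot` user agent, and the helper name `polite_urls` are all assumptions for illustration; the rules are parsed from an inline list so the sketch runs offline, and the sleep function is injectable so the pauses can be recorded instead of actually waited for.

```python
import time
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content; a real script would download it instead
robots_lines = [
    "User-agent: *",
    "Disallow: /private/",
    "Crawl-delay: 2",
]
parser = RobotFileParser()
parser.parse(robots_lines)


def polite_urls(urls, user_agent="example-bot", default_delay=1.0, sleep=time.sleep):
    """Yield only the URLs robots.txt allows, pausing between them."""
    delay = parser.crawl_delay(user_agent) or default_delay
    fetched_any = False
    for url in urls:
        if not parser.can_fetch(user_agent, url):
            continue  # disallowed by robots.txt
        if fetched_any:
            sleep(delay)  # wait before every request after the first
        fetched_any = True
        yield url


pauses = []  # records the requested delays instead of really sleeping
candidates = [
    "https://example.com/public",
    "https://example.com/private/report",
    "https://example.com/about",
]
allowed = list(polite_urls(candidates, sleep=pauses.append))
print(allowed, pauses)
```

For a live site, `parser.set_url("https://example.com/robots.txt")` followed by `parser.read()` would load the real rules in place of the inline list.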

3.1 Planning for Web Scraping

Planning for web scraping involves several steps to ensure you extract data efficiently, legally, and ethically. Here is a comprehensive guide to help you plan your web scraping project:

Define Your Objective

- Identify the Data: Determine the specific data you need. For example, product prices, reviews, news articles, or financial information.

- Purpose: Understand why you need this data. Are you conducting market research, building a data analysis project, or monitoring competitors?

Select Target Websites

- Relevance: Choose websites that contain the data relevant to your objective.

- Structure: Prefer websites with a consistent and well-structured HTML layout.

Review Legal and Ethical Considerations

- Terms of Service: Check the website’s terms of service to ensure web scraping is allowed.

- Robots.txt: Review the robots.txt file to see which parts of the website can be crawled and which are restricted.

- Data Privacy Laws: Ensure compliance with data privacy laws such as GDPR, CCPA, etc.

Plan the Technical Approach

- Choose Tools and Libraries:
  - Requests: For sending HTTP requests.
  - Beautiful Soup: For parsing HTML content.
  - Selenium: For handling dynamic content.
  - Scrapy: For large-scale scraping projects.

- Set Up Development Environment:
  - Install necessary libraries using pip.

Design the Scraper

- Identify HTML Elements: Use browser developer tools to inspect the HTML structure and identify the tags and classes containing the data.

- Handle Pagination: Plan how to navigate through multiple pages if the data spans several pages.

- Rate Limiting: Implement delays between requests to avoid overloading the server.

- Proxies and User Agents: Use proxies and rotate user-agent strings to avoid getting blocked.

Write and Test the Scraper

- Start with a Prototype: Write a basic script to scrape a small amount of data.

- Handle Exceptions: Add error handling for common issues like network errors, missing elements, or CAPTCHA challenges.

- Test the Script: Run the script and verify that it correctly extracts the data. Make adjustments as needed.

Data Storage and Processing

- Storage Formats: Decide how to store the data (CSV, JSON, databases).

- Data Cleaning: Plan for cleaning and preprocessing the data to remove duplicates, handle missing values, etc.

- Automation: If you need to run the scraper regularly, consider automating it using cron jobs or other scheduling tools.

Document Your Work

- Code Documentation: Comment your code and provide clear documentation for future reference or collaboration.

- Project Documentation: Write down the objective, scope, and methodology of the scraping project.

3.2 Example Project Plan

Objective: Scrape headlines from a news website for sentiment analysis.

Target Website: https://news.ycombinator.com/

Tools and Libraries:

- requests for HTTP requests.

- BeautifulSoup for HTML parsing.

- pandas for data storage and processing.

Steps (demonstrated in previous sections):

- Send a GET Request

- Parse HTML Content

- Extract and Store Data

- Handle Pagination (if applicable)
  - Determine how the website paginates content (e.g., URL patterns or “Next” buttons).
  - Implement logic to navigate through pages and repeat the extraction process.

By carefully planning your web scraping project, you can ensure that you collect the data you need efficiently and responsibly. This includes defining your objective, selecting target websites, complying with legal and ethical guidelines, choosing the right tools, designing and testing your scraper, handling data storage, and documenting your work. This structured approach will help you build robust and effective web scrapers.

4. Challenges in Data Extraction

Data extraction involves retrieving and processing data from various sources to make it useful for analysis and decision-making. Here are some common challenges faced in data extraction:

- Data Variety: Data can come in many formats, such as structured, semi-structured, and unstructured data, from different sources like databases, web pages, documents, and more. Handling this variety can be complex.

- Data Quality: Ensuring the accuracy, completeness, and consistency of the extracted data is crucial. Poor data quality can lead to incorrect analysis and decisions.

- Volume of Data: Dealing with large volumes of data can be challenging in terms of storage, processing power, and time required for extraction.

- Data Integration: Combining data from different sources into a coherent dataset can be difficult, especially when dealing with different data formats and structures.

- Real-time Extraction: Extracting data in real-time or near-real-time requires efficient processing and handling of streaming data.

- Access and Permissions: Gaining access to certain data sources can be restricted due to permissions, privacy, and security concerns.

- Data Transformation: Transforming the extracted data into a format suitable for analysis can be complex, involving tasks like cleaning, normalization, and aggregation.

- Automation: Automating the extraction process can be challenging, especially when dealing with dynamic or frequently changing data sources.

- Error Handling: Detecting and managing errors during the extraction process is critical to ensure data integrity.

- Scalability: Ensuring that the data extraction process can scale efficiently as the volume and variety of data grow.

- Compliance and Legal Issues: Ensuring that the data extraction process complies with legal and regulatory requirements, such as data privacy laws, can add another layer of complexity.

- Performance: Balancing the need for timely data extraction with the performance limitations of the systems involved.

- Technical Expertise: Extracting data, especially from complex or proprietary systems, often requires specialized technical skills and knowledge.

Addressing these challenges often involves using a combination of tools, technologies, and best practices tailored to the specific needs and constraints of the data extraction project.

When working with APIs, you often don’t need an HTML parser like Beautiful Soup. Instead, you can use libraries designed to handle JSON, XML, or other data formats that APIs typically return. Here are some alternatives for working with APIs in Python:

- For simple synchronous API requests: Use requests or httpx.

- For asynchronous requests: Use aiohttp or httpx (which supports both sync and async).

- For a built-in option: Use urllib.

- For cURL capabilities: Use pycurl.

- For async requests compatibility: Use requests-async.

Each library has its strengths, and your choice will depend on your specific needs, such as the need for asynchronous support or a preference for built-in libraries.
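To illustrate why no HTML parser is needed for API data, the sketch below decodes a JSON payload with the standard library. The response body and its field names are made up for illustration; in practice the string would be the body returned by one of the HTTP libraries listed above.

```python
import json

# Hypothetical API response body; the field names are assumptions
api_response_body = (
    '{"items": ['
    '{"title": "First post", "score": 42}, '
    '{"title": "Second post", "score": 7}'
    ']}'
)

# Decode the JSON text into Python dicts and lists
payload = json.loads(api_response_body)

titles = [item["title"] for item in payload["items"]]
top_item = max(payload["items"], key=lambda item: item["score"])

print(titles)
print(top_item["title"])
```

With the requests library, calling `response.json()` on a response performs the same decoding step.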

5. Ethical Considerations

Web scraping, the process of extracting data from websites, raises several ethical considerations that should be carefully addressed. Here are some key ethical concerns:

- Respecting Terms of Service: Many websites have terms of service that explicitly prohibit scraping. Ignoring these terms can lead to legal issues and is considered unethical.

- Data Privacy: Scraping personal data without consent can violate privacy rights. It’s important to avoid extracting sensitive information and ensure compliance with data protection laws like GDPR.

- Intellectual Property Rights: Content on websites is often protected by intellectual property laws. Unauthorized scraping can infringe on copyrights and other IP rights.

- Server Load and Performance: Aggressive scraping can place a significant load on a website’s server, potentially degrading performance for other users or even causing the site to crash. Ethical scraping involves being mindful of the impact on the website’s performance.

- Transparency and Disclosure: When using data obtained through scraping, it’s ethical to disclose the source of the data and ensure that it’s used transparently.

- Fair Use: Consider whether the data being scraped is intended for fair use. For instance, scraping for research or educational purposes may be more ethically justifiable than for commercial gain without permission.

- Content Misrepresentation: Ensure that the data is not taken out of context or misrepresented. This is especially important when scraping news or information that can influence public opinion.

- User Harm: Avoid scraping in ways that can cause harm to users, such as extracting and exposing sensitive or misleading information.

- Consent and Notification: Where possible, obtain consent from the website owner or notify them of your intentions to scrape their data. This can help mitigate ethical concerns and build trust.

- Data Accuracy and Integrity: Ensure that the data collected is accurate and maintained with integrity. Misleading or incorrect data can have harmful consequences.

- Beneficence: Consider the potential benefits versus the harm of scraping. Ethical scraping should aim to provide positive outcomes or public good.

- Responsibility and Accountability: Be prepared to take responsibility for the consequences of your scraping activities. This includes being accountable for any harm caused or legal issues that arise.

By addressing these ethical considerations, web scraping can be conducted in a manner that respects the rights and interests of all parties involved. Practising web scraping can be a great way to learn and improve your skills in extracting data from websites.

5.1 Tips for Practicing Web Scraping

- Start Simple: Begin with static websites that have simple HTML structures.

- Understand HTML: Learn basic HTML to understand the structure of the web pages you’re scraping.

- Handle Different Data Formats: Be prepared to handle different data formats like JSON and XML.

- Respect the Website’s Terms of Service: Always check the website’s terms of service and robots.txt file to ensure that you are allowed to scrape their data.

- Avoid Overloading Servers: Be considerate of the server load by adding delays between requests.

- Use Proxies and User Agents: To avoid getting blocked, you can use different user agents and proxies.

5.2 Practice Projects

- Weather Data: Scrape weather data from a weather forecasting site.

- E-commerce Prices: Scrape product prices from an e-commerce site.

- Movie Ratings: Scrape movie ratings and reviews from a movie database.

- Stock Prices: Scrape stock prices from a financial news site.

- Event Listings: Scrape event listings from an events site.

These exercises and tips should help you get started with web scraping and build your skills through practice. Happy scraping!

6. FAQs

Here are some frequently asked questions (FAQs) about BeautifulSoup in Python:

6.1 What is BeautifulSoup?

BeautifulSoup is a Python library used for parsing HTML and XML documents. It creates a parse tree for parsed pages that can be used to extract data from HTML, which is useful for web scraping.

6.2 How do I install BeautifulSoup?

You can install BeautifulSoup using pip:

```shell
pip3 install beautifulsoup4
pip3 install lxml
```

6.3 How do I parse an HTML document with BeautifulSoup?

First, import BeautifulSoup and then parse the HTML document:

```python
from bs4 import BeautifulSoup

html_doc = "<title>Title</title>"
soup = BeautifulSoup(html_doc, 'lxml')
```

6.4 What are the different parsers available for BeautifulSoup?

BeautifulSoup supports multiple parsers:

- html.parser: Python’s built-in HTML parser.

- lxml: A fast and feature-rich parser (requires installation of lxml).

- html5lib: A pure-Python library that parses HTML the way modern web browsers do (requires installation of html5lib).

6.5 How do I find an element by its tag name?

Use the find method:

```python
element = soup.find('tagname')
```

6.6 How do I find all elements with a specific tag name?

Use the find_all method:

```python
elements = soup.find_all('tagname')
```

6.7 How do I search for elements by class or ID?

Use the find or find_all method with the class_ or id keyword argument:

```python
element_by_class = soup.find('div', class_='classname')
elements_by_class = soup.find_all('div', class_='classname')
element_by_id = soup.find(id='elementid')
```

6.8 How do I extract the text from an element?

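Use the .text attribute (or the equivalent get_text() method). The .string attribute also works, but only when the element has a single child; otherwise it returns None:

```python
text = element.text
```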
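The difference between the text accessors is easiest to see on an element that mixes text with a nested tag. This is a minimal sketch on a hand-written HTML string, assumed here for illustration.

```python
from bs4 import BeautifulSoup

# Hypothetical sample markup, used only for illustration
soup = BeautifulSoup("<p>Hello, <b>world</b>!</p><h1> Title </h1>", "html.parser")
paragraph = soup.find("p")
heading = soup.find("h1")

paragraph_text = paragraph.get_text()          # all nested text, concatenated
paragraph_string = paragraph.string            # None: <p> has several children
heading_string = heading.string                # the single text child, untrimmed
heading_clean = heading.get_text(strip=True)   # surrounding whitespace removed

print(repr(paragraph_text), paragraph_string, repr(heading_string), repr(heading_clean))
```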

6.9 How do I get the value of an attribute of an element?

Access the attribute like a dictionary key:

```python
href = element['href']
```

6.10 How do I use CSS selectors with BeautifulSoup?

Use the select method:

```python
elements = soup.select('div.classname')
```

6.11 How do I modify the content of an element?

You can modify the .string attribute or use methods like append, insert, or clear:

```python
element.string = "New content"
```

6.12 How do I handle errors in BeautifulSoup?

BeautifulSoup is designed to handle imperfect HTML, so it can parse even poorly formatted documents. Note that find does not raise an exception when nothing matches; it returns None, so check the result before using it:

```python
element = soup.find('tagname')
if element is None:
    print("No matching element found")
else:
    print(element.get_text())
```

6.13 Is web scraping legal?

Web scraping legality depends on various factors including the website’s terms of service, the type of data being scraped, and local laws. Always check the website’s terms and legal requirements before scraping.

6.14 How do I speed up BeautifulSoup parsing?

Using the lxml parser instead of html.parser can significantly improve performance. Install lxml and use it as the parser:

```python
soup = BeautifulSoup(html_doc, 'lxml')
```

6.15 How do I extract all links from a webpage?

```python
links = soup.find_all('a')
for link in links:
    print(link.get('href'))
```

These FAQs cover common questions and provide a starting point for using BeautifulSoup effectively.

7. Conclusion

BeautifulSoup is a powerful and versatile Python library for parsing HTML and XML documents, making it an essential tool for web scraping. Its intuitive API allows developers to navigate, search, and modify the parse tree efficiently, handling even poorly structured documents with ease.

By leveraging BeautifulSoup, you can extract valuable data from websites for various purposes, such as data analysis, machine learning, and content aggregation. Its compatibility with multiple parsers like html.parser, lxml, and html5lib ensures that you can choose the best tool for your specific needs, balancing performance and compatibility.

However, while using BeautifulSoup, it’s important to consider the ethical and legal implications of web scraping. Always respect the terms of service of the websites you scrape, protect user privacy, and ensure compliance with relevant data protection laws.

In summary, BeautifulSoup empowers developers to transform raw HTML into structured data effortlessly, making it a cornerstone of web scraping projects. Whether you’re a beginner or an experienced developer, mastering BeautifulSoup will significantly enhance your ability to extract and manipulate web data effectively. For a deeper dive into its capabilities and best practices, the BeautifulSoup documentation is an excellent resource.